2016 Fall

Chris Chen

MAT594GL Autonomous Mobile Camera/Drone Interaction

Inspection | Sensor Array Designer

Project Overview

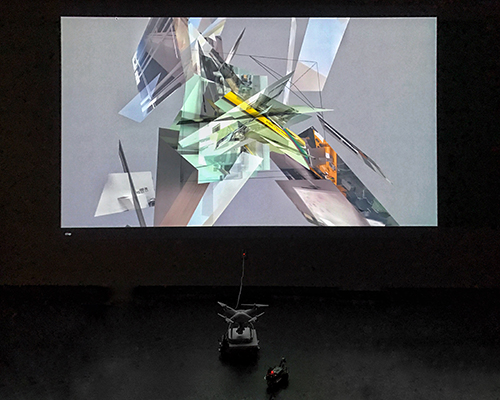

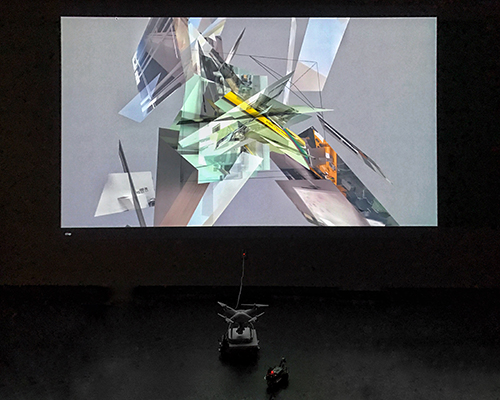

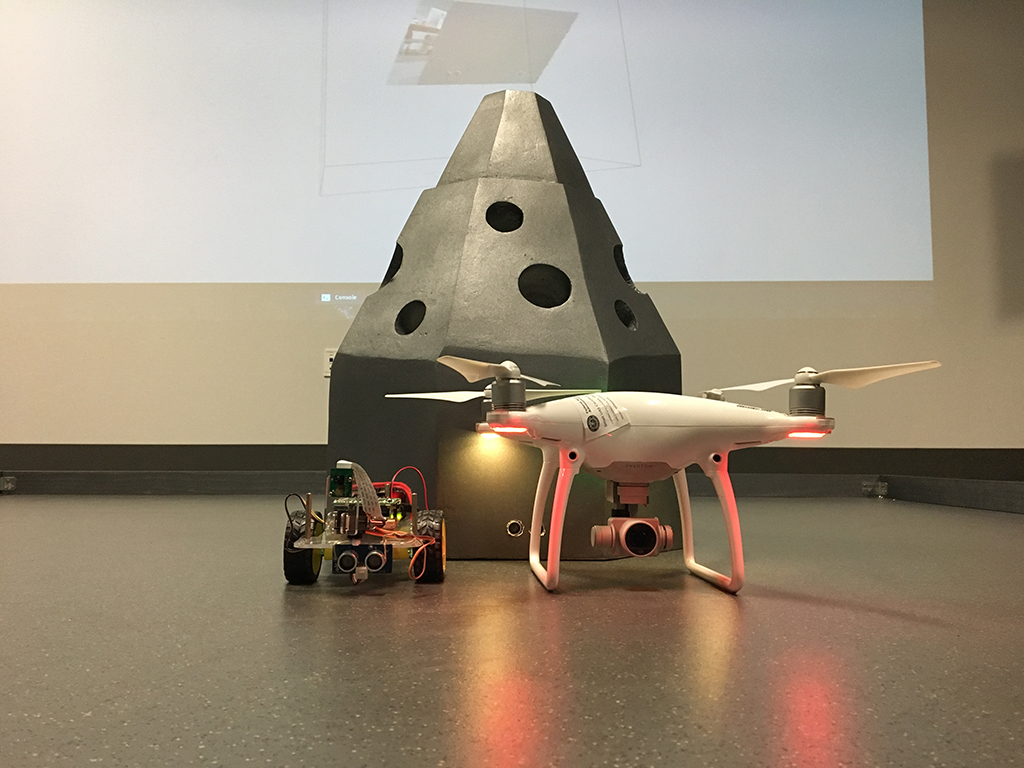

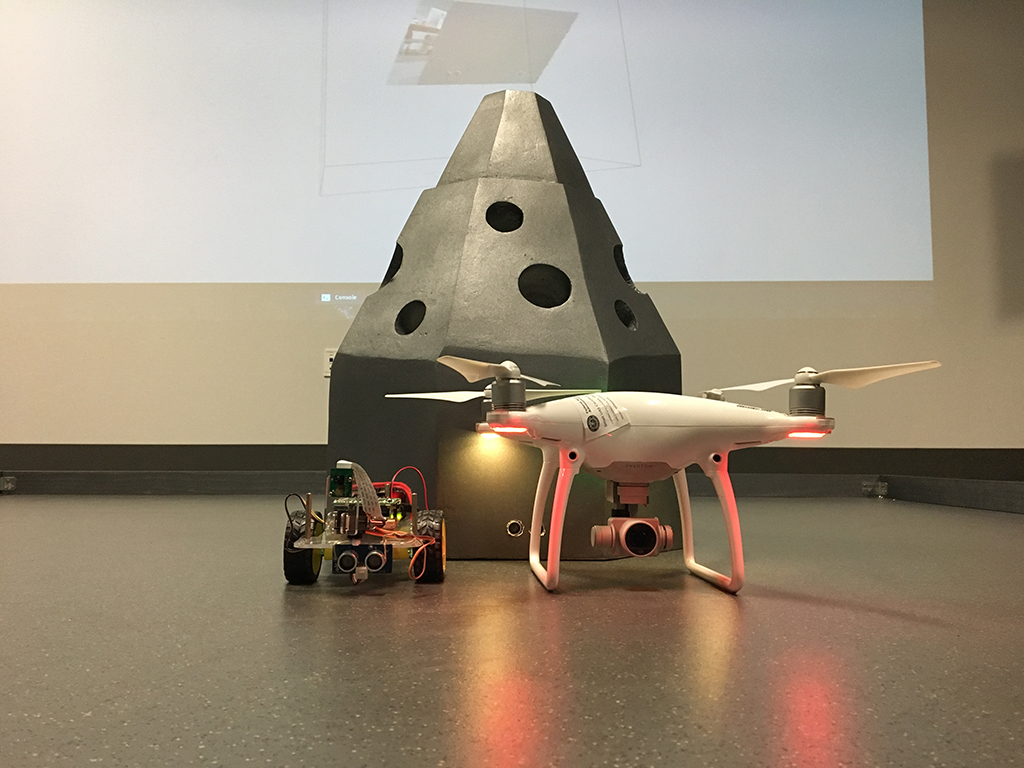

Inspection tries to blur the boundaries between the physical and virtual worlds by creating a continuously evolving virtual 3D photomontage in response to the physical world. A drone, a ground robot, and eight ultrasonic sensors form a reactive collective system that connects the two worlds. The dynamic virtual assemblage, constructed with a Voronoi algorithm, not only creates a novel aesthetic structure but also raises questions about the relationship between objects and space.

Within nine weeks, the project went from scratch, including assembling the robots and setting up private servers, to a public interactive performance. The team consisted of five people with mixed backgrounds ranging from art to computer science and from engineering to political science. The following text focuses on the work that I did in the project. Please check this page to see the other team members' contributions.

Photo taken by Weihao Qiu

Sensor Array

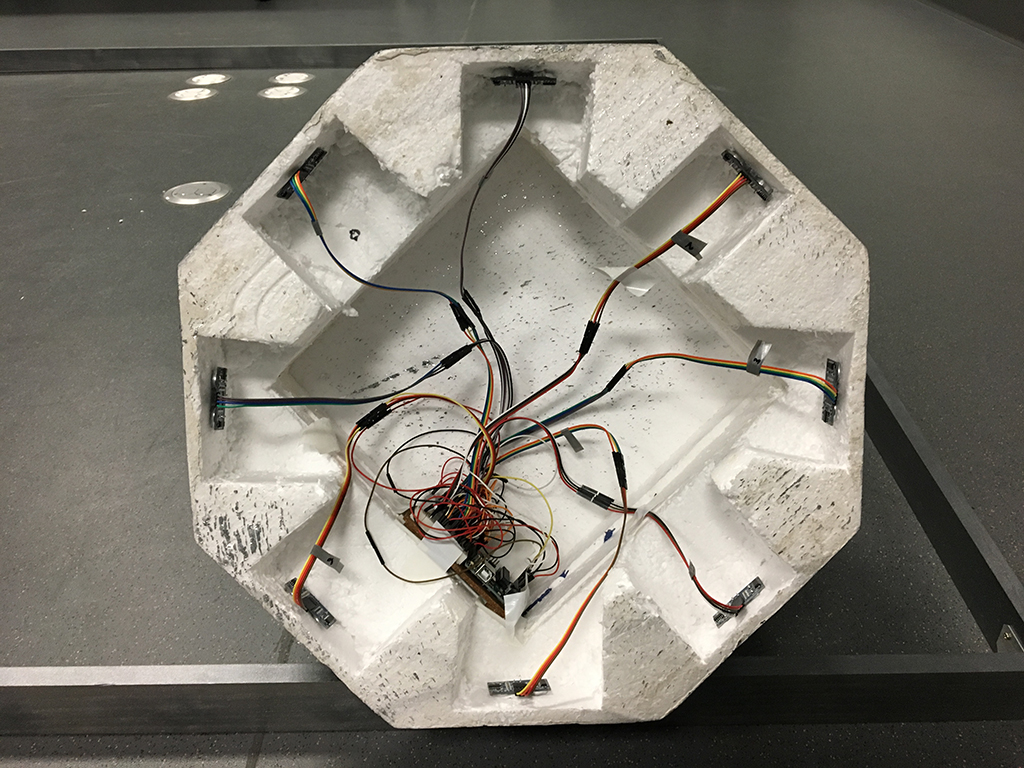

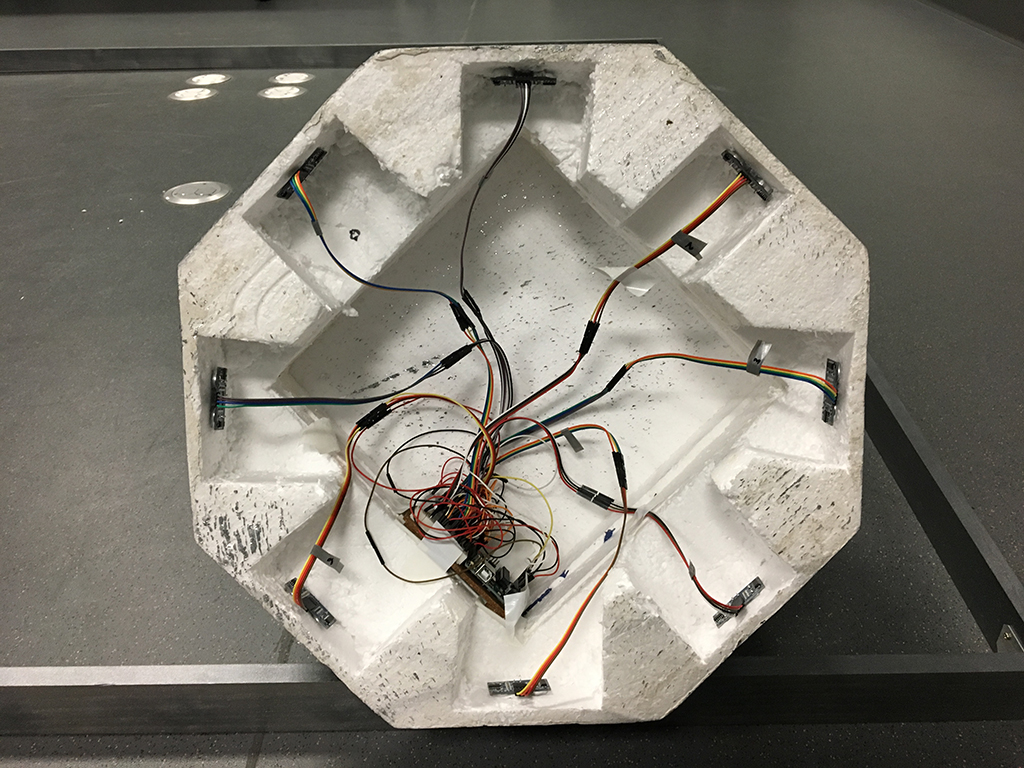

For the system to be interactive and dynamic, the robots' environment itself must connect the individual components of the larger project. The ultrasonic sensor array does this by acting as a localization system that detects how far the roving ground robot is from the focal point of the system. This data is then translated into a format that the drone can read and transmitted to the server created by Rodger, where it is cached and uploaded. From there, the drone, programmed by Weihao, adjusts its position and camera orientation in response to the constant stream of data. The enclosure and housing for the array was created by Mengyu and Dan, which allowed the entire system to be mounted inside it.

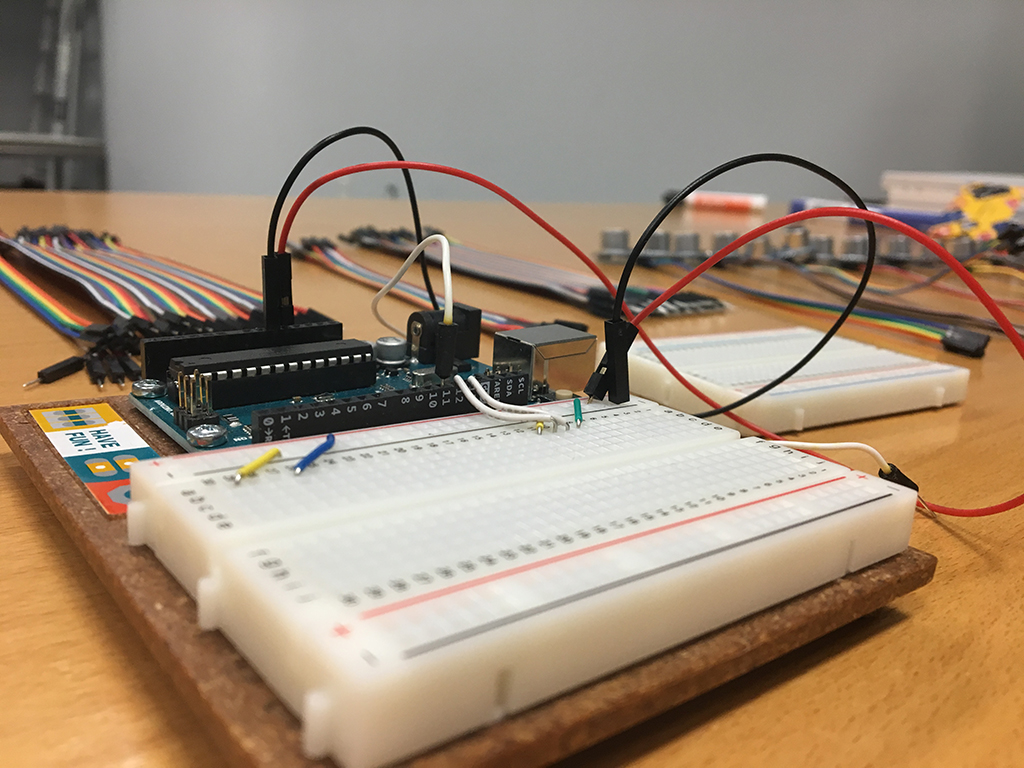

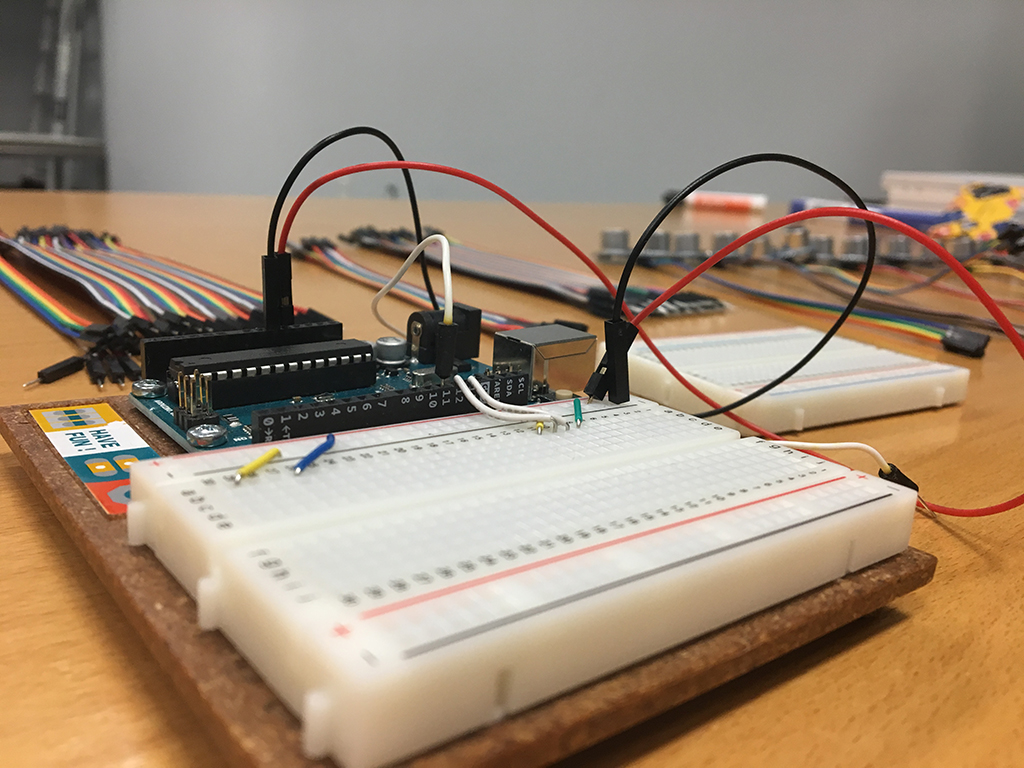

Creation and Process

I built the ultrasonic system on the Arduino platform, programming the microcontroller to control each of the eight sensors: each sensor first sends a signal, then receives the echo, and the measured delay is converted into a distance. This data, however, is not in a format the drone can read; for this, I used Processing to create streams of object-oriented JSON files that identify the individual sensors and their respective data.
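The delay-to-distance conversion and the JSON step can be sketched roughly as follows. This is a minimal illustration in Python rather than the Arduino and Processing code used in the project. The speed-of-sound constant, the field names `sensors`, `id`, and `distance_cm`, and the example delays are all assumptions made for the sketch, not the project's actual format.

```python
import json

# Assumed speed of sound: about 343 m/s at room temperature,
# expressed in centimetres per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_delay_to_cm(delay_us):
    """Convert a round-trip echo delay (microseconds) to a one-way distance (cm)."""
    # The pulse travels to the object and back, so halve the path length.
    return delay_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def build_payload(delays_us):
    """Build one JSON message listing every sensor and its distance.

    The key names here are hypothetical placeholders.
    """
    sensors = [
        {"id": index, "distance_cm": round(echo_delay_to_cm(delay), 1)}
        for index, delay in enumerate(delays_us)
    ]
    return json.dumps({"sensors": sensors})


if __name__ == "__main__":
    # Eight made-up echo delays, one per sensor.
    example_delays = [583, 1166, 2915, 5831, 8746, 11662, 14577, 17493]
    print(build_payload(example_delays))
```

Keeping one entry per sensor, each with its own identifier, means a consumer such as the drone controller can look up a single sensor without depending on the order of the readings.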

There were certain issues when approaching this project, including transmission, programming, and design. The programming and transmission issues were mainly resolved by tinkering in the Arduino IDE, although Processing and Python proved particularly useful as well. One particular challenge was that the sensors themselves were not as accurate as we had hoped, returning errors when objects were too far away; for this we had to rely on a timeout system for the extended bounceback along with a performance stage.
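One way such a timeout could work is sketched below, again in Python for illustration only. The 30,000-microsecond limit, the function name `clean_reading`, and the choice to fall back on the last valid distance are assumptions. On Arduino, `pulseIn` returns 0 when no echo arrives within its timeout, which is the case this sketch treats as "out of range".

```python
# Assumed timeout: 30,000 microseconds of round-trip time,
# which corresponds to roughly 5 m of one-way distance.
TIMEOUT_US = 30000

# Assumed speed of sound in centimetres per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def clean_reading(delay_us, last_valid_cm, timeout_us=TIMEOUT_US):
    """Return (distance_cm, is_fresh) for one sensor reading.

    A delay of 0 (no echo received) or a delay at or beyond the timeout
    is treated as out of range, and the last valid distance is reused.
    """
    if delay_us <= 0 or delay_us >= timeout_us:
        return last_valid_cm, False
    return delay_us * SPEED_OF_SOUND_CM_PER_US / 2.0, True


if __name__ == "__main__":
    last_valid = None
    # Made-up sequence: two good echoes, one missed echo, one late echo.
    for delay in [2000, 2500, 0, 45000]:
        last_valid, fresh = clean_reading(delay, last_valid)
        print(delay, last_valid, fresh)
```

Returning a freshness flag alongside the distance lets downstream code decide whether to act on a reused value or wait for the next valid echo.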

Moving Forward

This project in particular has much more room to grow - for me, this means pushing the capabilities of the ultrasonic sensors to their limit and finding ways to include the audience themselves within the system. In the future, I want to investigate different combinations of tools and processes to find better, less restricted solutions to some of the issues we faced. Luckily, this project afforded me many opportunities to explore Arduino and Processing - even if many of the experiments did not end up featuring in the project, they provided useful insights. At the same time, working within a team has inspired me to see other possibilities, encouraging me to work with some of the tools my teammates used and to produce other interactive systems.