2016 Fall

Rodger (Jieliang) Luo

MAT594GL Autonomous Mobile Camera/Drone Interaction

Inspection | Project Manager

Project Overview

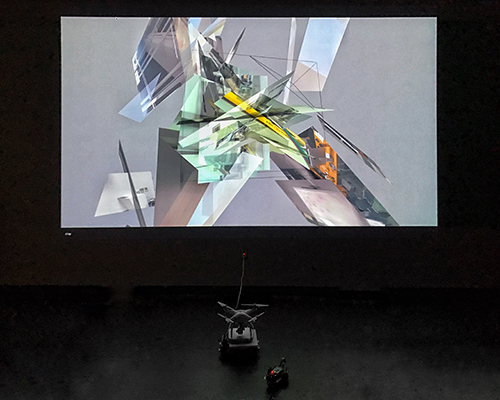

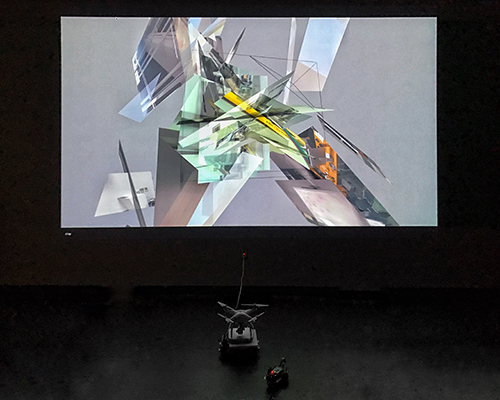

Inspection tries to blur the boundary between the physical and virtual worlds by creating a continuously evolving virtual 3D photomontage in response to the physical world, in which a drone, a ground robot, and eight ultrasonic sensors form a reactive collective system that connects the two worlds. The dynamic virtual assemblage, constructed with a Voronoi algorithm, not only creates a novel aesthetic structure but also raises questions about the relationship between objects and space.

Within nine weeks, the project went from scratch, including assembling the robots and setting up private servers, to a public interactive performance. The team consisted of five people with backgrounds ranging from art to computer science and from engineering to political science. The following sections describe the work I did on the project. Please check this page to see the other team members' contributions.

Photo taken by Weihao Qiu

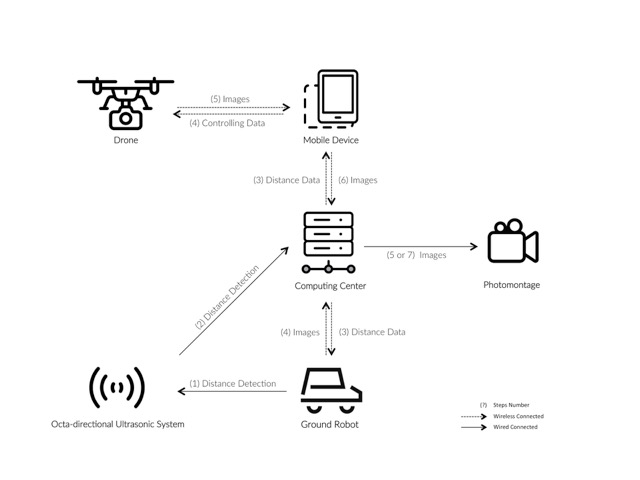

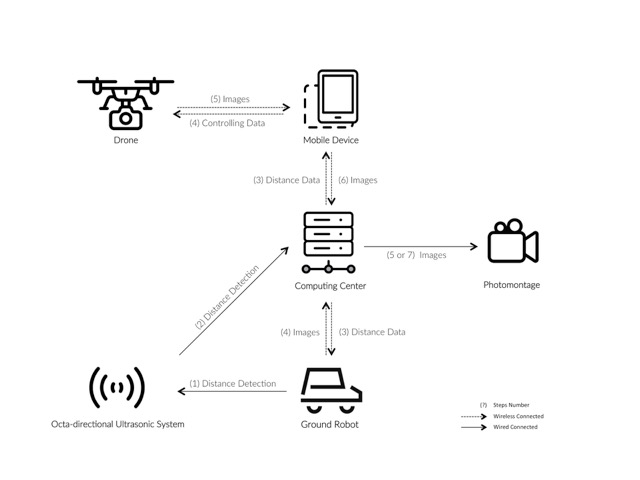

Project Architecture

The illustration below shows the architecture of the system.

Architecture of the System - Illustration made by Weihao Qiu

Ground Robot Research

In terms of hardware, the ideal ground robot for the project should consist of an omnidirectional base, a pan-and-tilt servo, an HD camera, and a WiFi module. Most importantly, the robot must be programmable. Given all these requirements, there are not many options available on the market.

The first robot I looked into is Riley, a commercial home-surveillance robot that can be controlled from a mobile device. It has all the features mentioned above and a decent appearance. However, the company does not provide any open APIs and has not replied to any of my monthly requests for API access.

Riley Robot

The second robot I investigated is the X80SV, a ready-to-use mobile robot platform designed for researchers developing advanced robot applications such as remote monitoring, telepresence, and autonomous navigation/patrol. Besides the features mentioned above, it also has a wide range of sensors for accurate collision detection. I did not choose it because its price, about $3,200, is too high for a proof-of-concept project, but it should definitely be considered if we obtain more funding in the future.

X80SV Robot

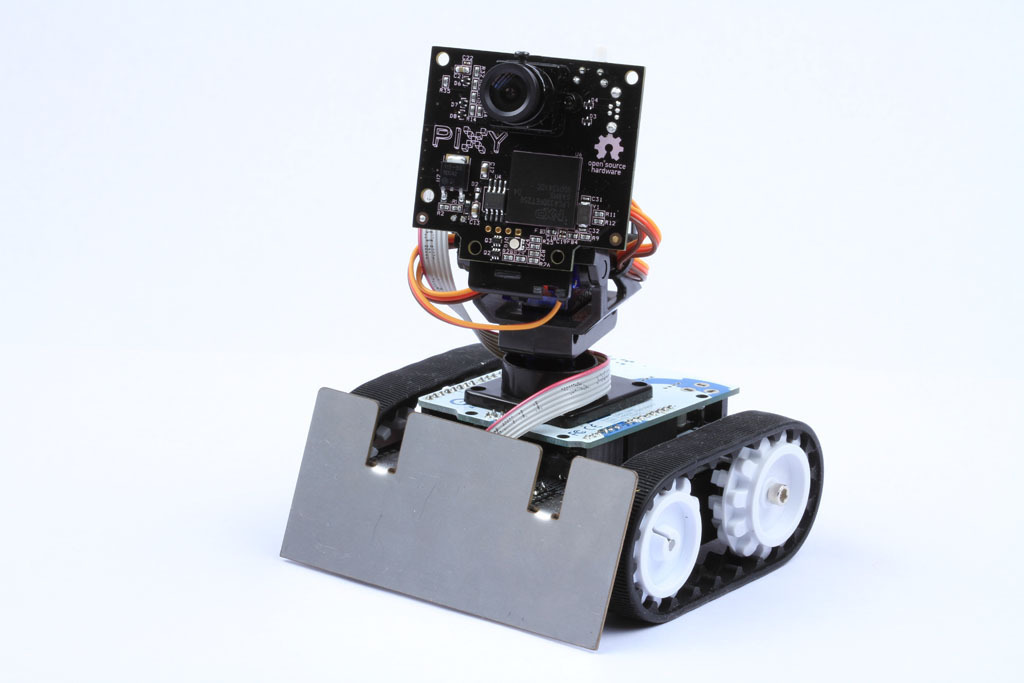

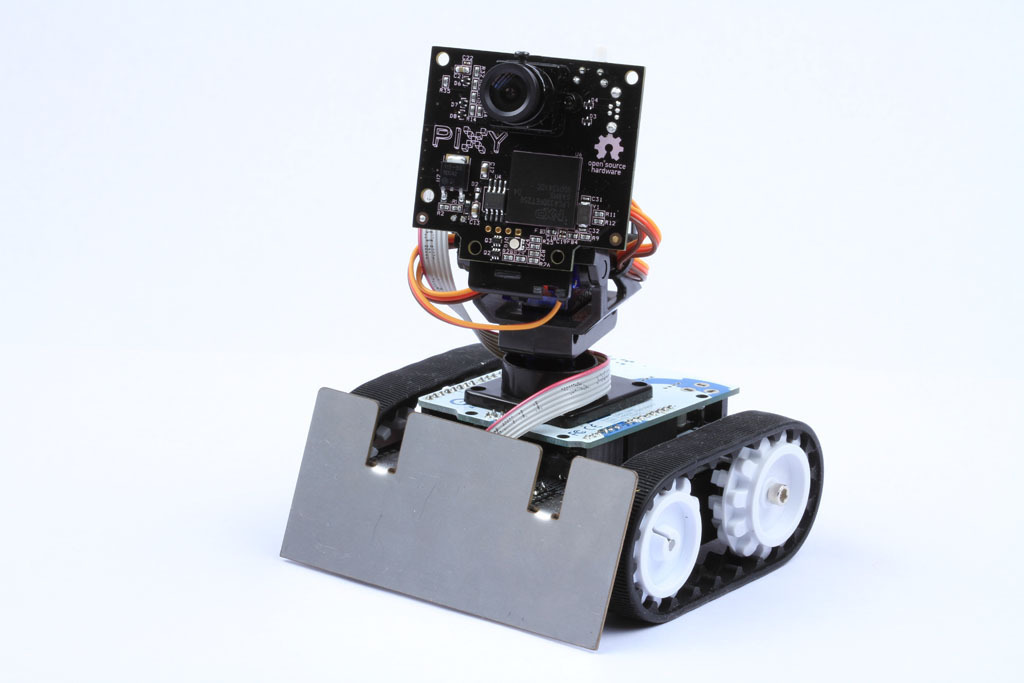

The two robots above are representative of the ready-to-use robots on the market that fit the project's requirements: they are either too expensive for the current stage of the project or do not provide any access to their systems. I therefore changed my strategy to semi-assembled robots. In the physical computing world, the two most popular electronics platforms are Arduino and Raspberry Pi. After several rounds of research, I found a solution that combines the Arduino-based Zumo robot with the Pixy camera, which was developed by Charmed Labs and Carnegie Mellon University. An advantage of the Pixy camera is that it embeds a processor to perform color-based object recognition. The obstacles that made me set this robot aside were 1) the camera's images are low-resolution, and 2) a pipeline for transferring image data from the Pixy to the Arduino has not really been established.

Arduino-powered Zumo robotic base with Pixy camera

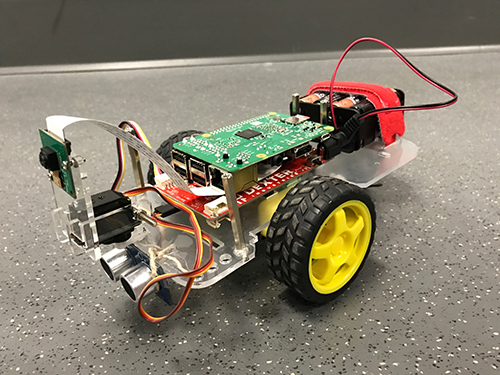

The robot I finally chose consists of a GoPiGo robotic shield, a Raspberry Pi, and an HD camera module. With this combination, we can program complex movements for the robot and run advanced machine-learning-based computer vision algorithms. The current downsides are that the robot has only a one-dimensional servo and the battery pack is somewhat primitive, but both issues are solvable.

Modified GoPiGo robot powered by Raspberry Pi

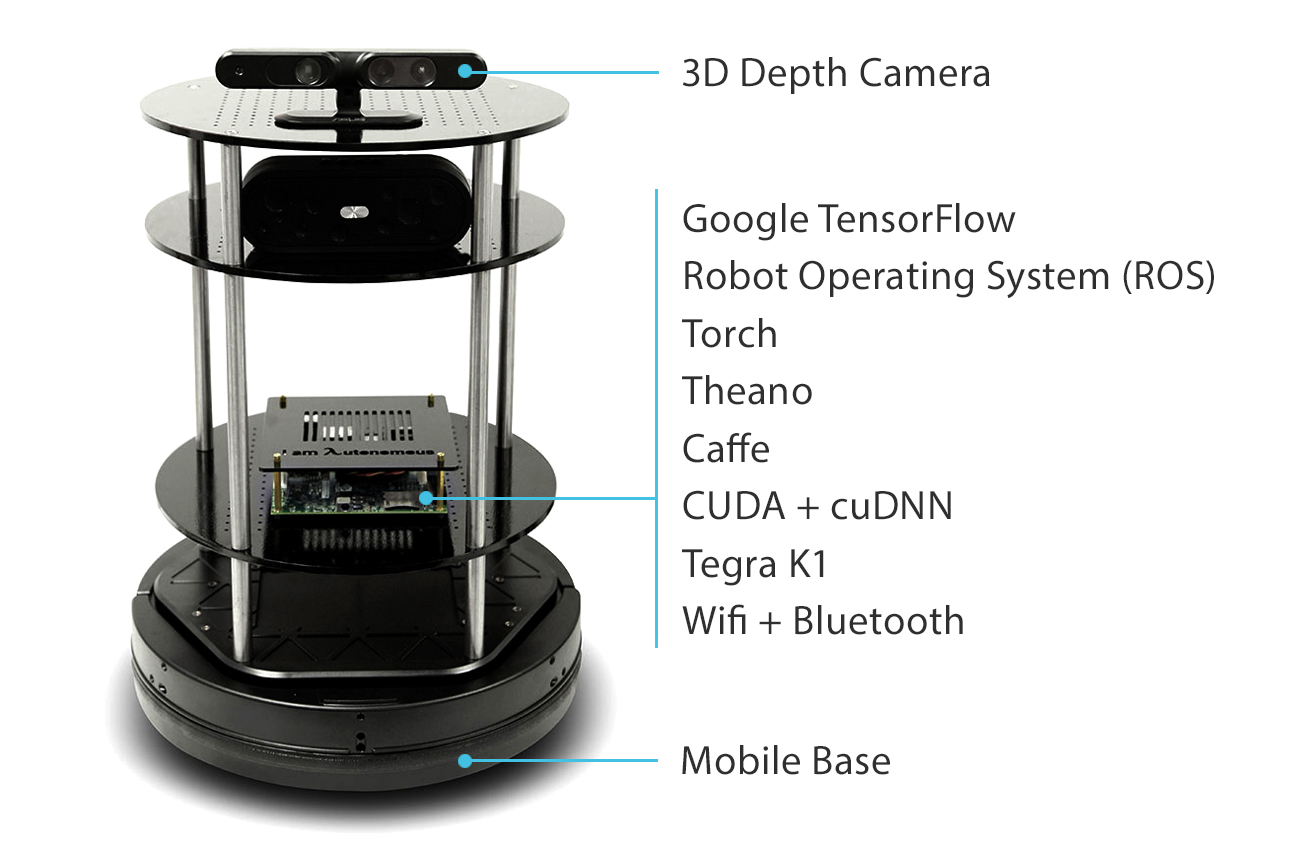

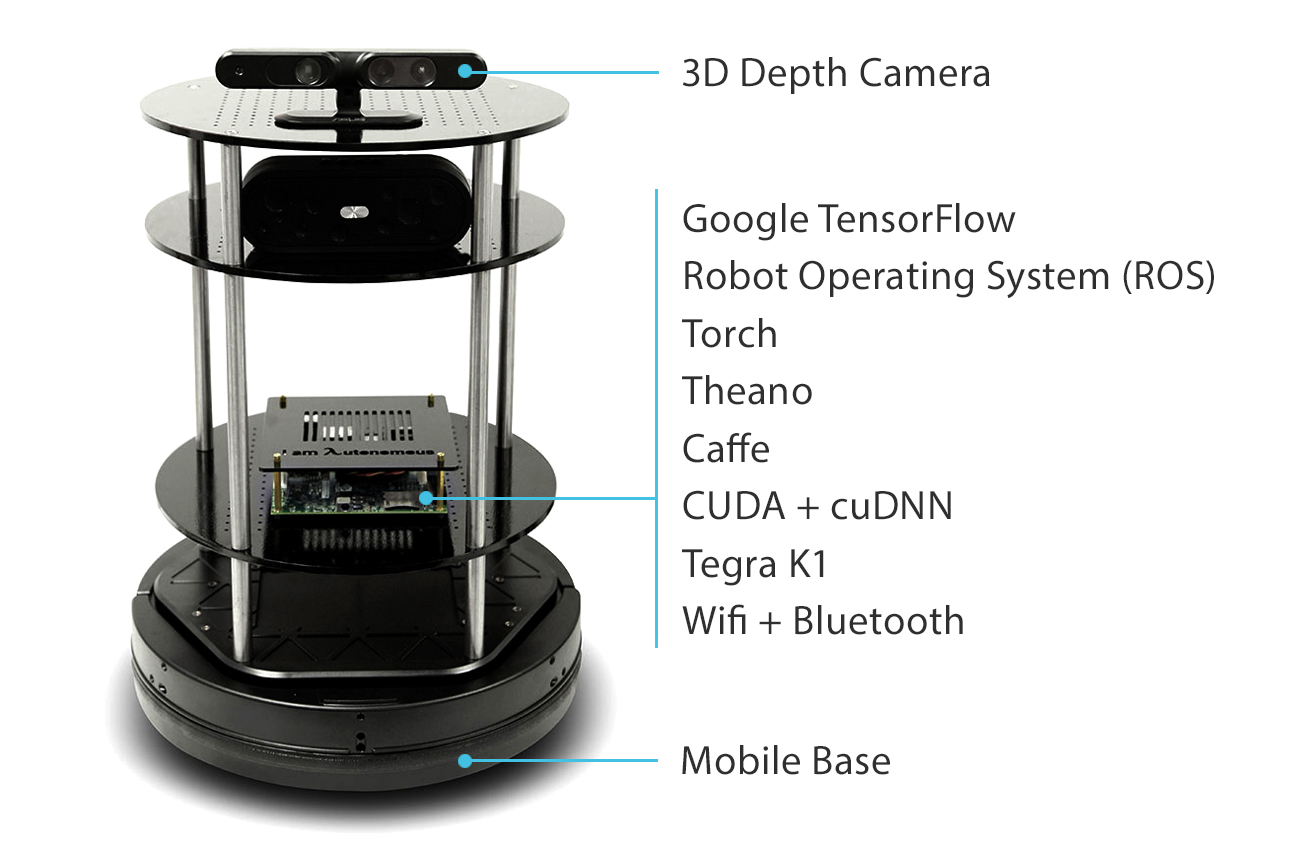

Another robot, which might be beyond the scope of the current project, is the Deep Learning Robot developed by Autonomous Inc. It is very powerful: it uses a 3D depth camera and comes with Google TensorFlow and CUDA installed for deep learning, and its price of $999 is reasonable for the features it offers. My concern is the robot's large body size, which might not fit the concept of insect-inspired swarm intelligence.

Deep Learning Robot

Autonomous Movements

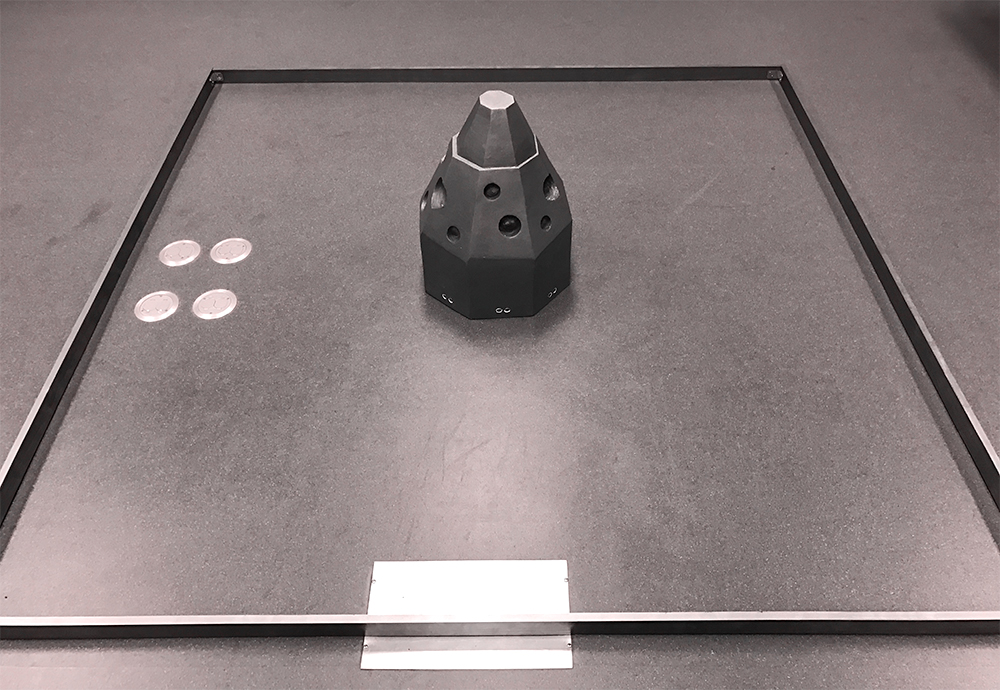

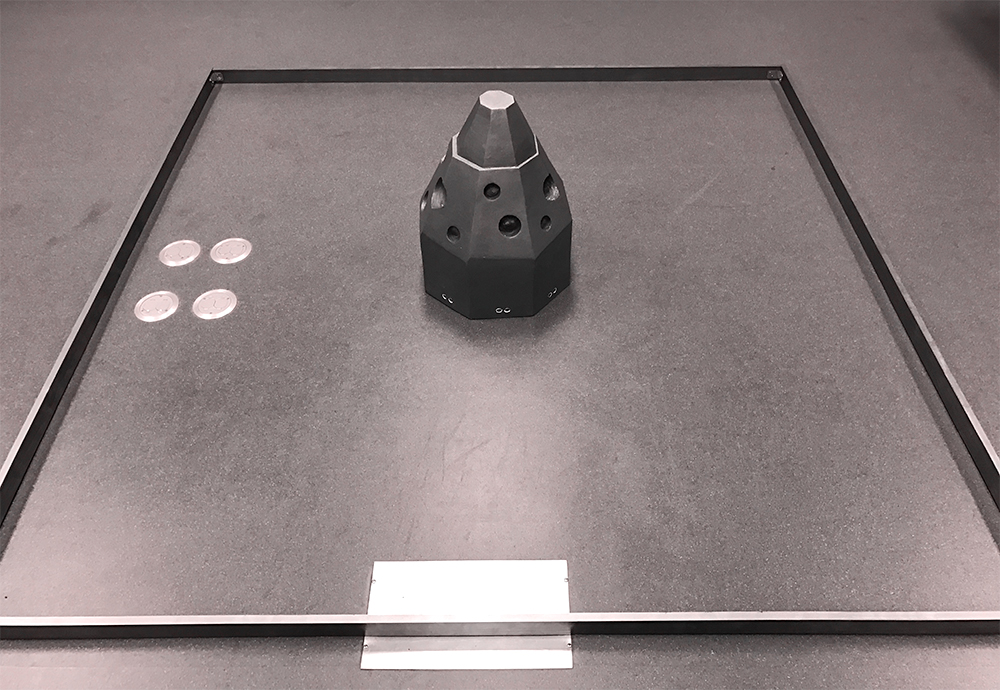

I designed a basic autonomous movement algorithm that uses an ultrasonic sensor to detect obstacles in the robot's path. The robot keeps moving forward while the path ahead is clear, and chooses another direction when it detects something in front. Ideally, the ground robot would move autonomously within a restricted area to explore the environment. However, due to the limited motor speed and the small number of sensors, the robot's movement is functional but not active enough to produce continuous exploring behavior in the area with zero collisions. To improve this, I could install two more ultrasonic sensors on the sides of the robot and switch to a more powerful motor.
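The move-forward/turn-away rule above can be sketched as a small control loop. This is a minimal illustration under stated assumptions, not the project's actual code: the 20 cm threshold, the random left/right choice, and the hooks `read_distance_cm`, `forward`, and `turn` are hypothetical stand-ins for the real ultrasonic sensor and motor calls on the robot, and `simulate` only replays fake readings so the logic can be checked without hardware.

```python
import random
from typing import Callable, List

# Assumed threshold (not from the original project): readings below this many
# centimetres are treated as an obstacle in front of the robot.
OBSTACLE_THRESHOLD_CM = 20.0


def step(read_distance_cm: Callable[[], float],
         forward: Callable[[], None],
         turn: Callable[[str], None],
         rng: random.Random) -> str:
    """Run one iteration of the move-forward / turn-away loop.

    read_distance_cm, forward and turn are hypothetical hooks that would wrap
    the real ultrasonic sensor and motor calls on the robot.
    Returns the action taken: "forward", "left" or "right".
    """
    if read_distance_cm() >= OBSTACLE_THRESHOLD_CM:
        forward()  # path ahead is clear: keep moving forward
        return "forward"
    direction = rng.choice(["left", "right"])  # obstacle: pick another heading
    turn(direction)
    return direction


def simulate(readings: List[float], seed: int = 0) -> List[str]:
    """Feed a list of fake sensor readings through step() and log the actions."""
    rng = random.Random(seed)
    it = iter(readings)
    log: List[str] = []
    for _ in readings:
        log.append(step(lambda: next(it), lambda: None, lambda d: None, rng))
    return log
```

On the real robot, `step` would be called repeatedly with the hooks wrapping the sensor and motor functions of whatever robot library is in use. With two extra side sensors, the turn direction could be chosen toward the clearer side instead of at random.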

Robot's Moving Area

Server Establishment

The server is crucial for the project because all the images taken by the robot and the drone, as well as the sensory data from the ultrasonic sensors, must be transferred quickly and reliably over WiFi. We implemented two Python-based servers that handle the drone and the ground robot separately to avoid conflicts between their image data. Both servers use HTTP, which means the data can be retrieved from URLs. To avoid any external interference, we also set up a private local network that connects only the drone, the ground robot, and the ultrasonic sensors.
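One way such a per-device HTTP server might look is sketched below, using only Python's standard `http.server` module. The endpoints `/image` (the device uploads a frame via POST) and `/image/latest` (the visualization fetches it via GET), the JPEG content type, the default loopback bind address, and the `LatestImageStore` class are illustrative assumptions; the original servers' routes and data formats are not described. Running one instance per device on separate ports mirrors the separation between the drone and the ground robot.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class LatestImageStore:
    """Thread-safe holder for the most recent image bytes from one device."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._data = b""

    def put(self, data: bytes) -> None:
        with self._lock:
            self._data = data

    def get(self) -> bytes:
        with self._lock:
            return self._data


def make_handler(store: LatestImageStore):
    """Build a request handler class bound to one device's image store."""

    class ImageHandler(BaseHTTPRequestHandler):
        def do_POST(self) -> None:
            # The device (drone or robot) uploads a new frame (hypothetical route).
            if self.path != "/image":
                self.send_error(404)
                return
            length = int(self.headers.get("Content-Length", 0))
            store.put(self.rfile.read(length))
            self.send_response(204)
            self.end_headers()

        def do_GET(self) -> None:
            # The visualization retrieves the latest frame by URL.
            if self.path != "/image/latest":
                self.send_error(404)
                return
            body = store.get()
            self.send_response(200)
            self.send_header("Content-Type", "image/jpeg")  # assumed format
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format: str, *args) -> None:
            pass  # silence per-request logging

    return ImageHandler


def start_server(port: int, store: LatestImageStore,
                 host: str = "127.0.0.1") -> ThreadingHTTPServer:
    """Serve one device's images in a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer((host, port), make_handler(store))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For example, `start_server(8001, LatestImageStore())` for the drone and `start_server(8002, LatestImageStore())` for the ground robot (hypothetical ports) would keep the two image streams apart. On the private network, `host` would be the server machine's LAN address rather than the loopback default.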

First iteration of the robot sending an image back to the server, displayed in a virtual space

Visual Representation

The basic idea of the visual representation is to dynamically and synchronously display the images taken by the drone and the robot in order to explore structure aesthetically in terms of space and time.

One screenshot of the final presentation

As shown above, all the images are placed asymmetrically in 3D space according to an adapted Voronoi diagram algorithm. To distinguish images taken by the drone from those taken by the robot, I color-coded drone images orange and ground robot images blue. Once an image is taken by either the ground robot or the drone, it is automatically uploaded to the virtual space, where it dynamically adds to an aesthetic structure that I call an evolving 3D photomontage. Please see the animation below for a better understanding of how the dynamic structure is constructed.

Animation of the process to construct the 3D photomontage
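The text does not detail how the Voronoi algorithm was adapted, so the following is only a hypothetical sketch of the underlying idea: each image is anchored at a 3D seed point, and any point in space belongs to the Voronoi cell of its nearest seed. The `PlacedImage` class, the seed positions, and the grid-sampling approximation of cell volume are assumptions for illustration; the orange/blue lookup follows the color coding described above.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]

# Color coding described in the text: drone images orange, ground robot images blue.
SOURCE_COLORS = {"drone": "orange", "robot": "blue"}


@dataclass
class PlacedImage:
    name: str
    source: str  # "drone" or "robot"
    seed: Point  # hypothetical 3D anchor position of the image

    @property
    def color(self) -> str:
        return SOURCE_COLORS[self.source]


def voronoi_owner(p: Point, images: List[PlacedImage]) -> PlacedImage:
    """Return the image whose Voronoi cell contains p (nearest seed wins)."""
    return min(images, key=lambda img: math.dist(p, img.seed))


def cell_sizes(images: List[PlacedImage], grid: int = 10,
               extent: float = 1.0) -> Dict[str, int]:
    """Approximate each cell's volume by counting grid-cell centres in [0, extent]^3."""
    counts = {img.name: 0 for img in images}
    step = extent / grid
    for i in range(grid):
        for j in range(grid):
            for k in range(grid):
                p = ((i + 0.5) * step, (j + 0.5) * step, (k + 0.5) * step)
                counts[voronoi_owner(p, images).name] += 1
    return counts
```

In this sketch, each newly uploaded image would add a seed that splits an existing cell, which is one simple way a photomontage could keep reshaping itself as images arrive.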

Future Works

- Set Up a Localization System

- Optimize the Ground Robot

- Implement Swarm Intelligence Algorithms with Multiple Robots