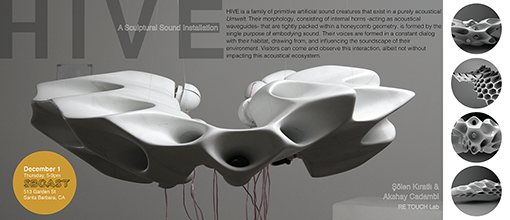

The exhibit is in collaboration with RE TOUCH LAB, and is part of the first Thursdays events at SBCAST.

SBCAST, 513 Garden Street, Santa Barbara.

Date: Thursday, December 8th

Time: 4pm

Location: Third floor conference room (3001), Elings Hall

Abstract:

In 2005, computer performance plateaued. Herb Sutter wrote: "...the performance lunch isn’t free any more. Sure, there will continue to be generally applicable performance gains that everyone can pick up, thanks mainly to cache size improvements. But if you want your application to benefit from the continued exponential throughput advances in new processors, it will need to be a well-written concurrent (usually multithreaded) application. And that’s easier said than done, because not all problems are inherently parallelizable and because concurrent programming is hard."

Today, computers are made faster by adding more processors (e.g., multi-core CPUs and specialty devices like GPUs and video encode/decode) and connecting them together in a network. While this strategy can make computers faster, it does not automatically boost performance like the increases in clock rates did for so many years. In general, adding N processors does not make your computer N times faster; processors do not add up that way.
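One standard way to quantify why N processors do not yield an N-fold speedup is Amdahl's law, which the abstract does not name; it is used here only as an illustration. The sketch below assumes a fixed-size workload in which a given fraction of the work parallelizes perfectly and the rest stays serial. The function name and the sample fractions are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Ideal speedup under Amdahl's law for a fixed-size workload.

    parallel_fraction: share of the run time that can be parallelized (0..1).
    processors: number of processors applied to the parallel part.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part is unaffected; only the parallel part is divided up.
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    # Illustrative assumption: 90% of the program parallelizes.
    for n in (1, 2, 4, 8, 16, 1024):
        print(f"{n:5d} processors -> speedup {amdahl_speedup(0.9, n):.2f}")
```

Under this model, a program that is 90% parallel can never run more than 10 times faster, no matter how many processors are added.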

In fact, many computers today resemble the distributed systems and computing clusters of the late 1990s, but on a miniature spatial scale. At the same time, full-sized distributed systems form the basis of the Internet, High-Performance Computing, Cloud computing, the Internet of Things, Digital Signage, Multiplayer Online Games, High-Frequency Trading, and many other important technologies. Increasingly, computer-based interactive audiovisual works such as Digital Musical Instruments, Ensemble Electronic Music performances, Multimedia Installations, and Augmented/Virtual Reality Environments are implemented as hardware/software systems which are distributed across several computing components connected by a network. Today, the business of distributing interactively generated light and sound necessarily requires writing code for a distributed system.

Yet, no Creative Coding framework or language addresses all the concerns of Interactive Distributed AudioVisual Systems (IDAVS). Instead, the Creative Coder invents an ad hoc messaging scheme to "make things talk." Too often these systems fail due to bugs, hardware failure, or unexpected user input at just the wrong moment. Because these systems are complex and rapidly developed, they are commonly brittle, meaning it may take a considerable amount of time and effort to recover from a failure.

To design a reliable, low-latency IDAVS, one must engage the system at many levels (i.e., Hardware, Operating System, Development Environment, Programming Language, Software Library, Framework, and Model), using a diverse set of tools and skills. Rigorous testing is required to achieve reliability and/or reduce recovery time after system failures, but that takes considerable time and effort. Is there some way to make it easier to make reliable IDAVS and to enable rapid Creative Coding for these systems? Yes. There are lots of ways.

This dissertation explores models, abstractions, pitfalls, and best practices that arise from the author's practice-based research and experience teaching the subject of Creative Coding for Interactive Distributed AudioVisual Systems. Through designing, constructing, and performing with IDAVS, we examine the ways in which the Creative Coder engages with these systems. Finally, we offer a design specification for Creative Coding frameworks that aims to specifically target IDAVS, incorporating these models, abstractions, and best practices.

Date: Friday, December 9th

Time: 4pm

Location: Experimental Visualization Lab, room 2611, Elings Hall

Abstract:

In the past decade, hardware modular synthesizers have seen a massive resurgence. Electronic musicians and composers are discovering the flexibility and hands-on nature of this creative equipment at an unprecedented rate. New Eurorack module designs are reaching this market on a weekly basis, and computer musicians have an expanding variety of software modular platforms to choose from.

As hardware modules take on more polymorphic design strategies, they are breaking further away from software modulars which usually rely on simpler, single-function designs. This difference in design perspectives has created a difficult situation for modular pedagogy and documentation. For a student interested in learning how a hardware modular works, a software modular may be an affordable alternative, but it doesn't quite capture the patching techniques, the physical immediacy, or the multi-level control strategies of the hardware environments. For students and educators who are able to afford a hardware modular, they may find themselves overwhelmed by poor documentation, a constant influx of new designs, and a lack of guidance when putting together a new system.

This dissertation aims to resolve a number of these issues. My primary contribution is a set of three taxonomies to analyze modular design, modular control strategies, and modular patching techniques. Each taxonomy is a step toward a more complete pedagogical framework for modular synthesis, along with a useful analysis as to exactly how software modulars differ from their hardware counterparts.

As a result of these taxonomies, I am also presenting two large software projects. The first is Unfiltered Audio, a plug-in company that I created in 2012 with two other MAT students. Our software designs are heavily influenced by hardware modular synthesizers. I will present some of our most notable designs, along with how they relate to the new taxonomies.

I will also present Euro Reakt, a collection of over 140 modules for the affordable Reaktor software modular environment. This collection is perhaps the closest a software modular system has come to hardware design, as it focuses on polymorphic module behavior along with intuitive control sets and layouts. Each module is thoroughly documented, and the Euro Reakt package comes with many pre-built demonstration systems. This makes it an ideal choice for students, educators, musicians, and composers.

Date: Wednesday, December 7th

Time: 3pm

Location: MAT Conference Room, Elings Hall, room 2003

Abstract:

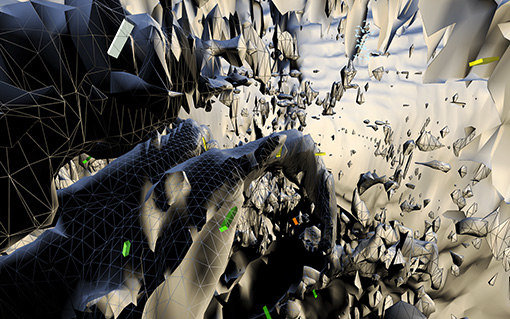

"WorldMediaViewer" is software for displaying multimedia collections as 3D virtual environments using metadata. Users can navigate scenes consisting of rectangular images, panoramas, videos, and sounds that appear over time in virtual space at the approximate locations they were captured in the real world.

A hybrid system linking data visualization, worldmaking, and interactive cinema, WorldMediaViewer brings browsing large multimedia collections closer to the experience of taking photos, capturing video, and recording sounds in the field. Its metadata-based spatial model provides a flexible, computationally inexpensive alternative to image-based geometric models. While producing less conventionally “realistic” results, this method benefits from the versatility of metadata and its use across media types. It allows, for instance, navigating environments of nearly any size or dimension, combining static images and time-based media, importing media collections with insufficient data for a geometric model, and reinterpreting “noisy” data, for instance, blurred subjects or shifting light conditions, as valid data with its own informational and aesthetic value.
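As a rough illustration of a metadata-based spatial model, the sketch below places a media item in a flat local coordinate system from its GPS metadata alone, using an equirectangular approximation. This is an assumption made for illustration, not a description of how WorldMediaViewer is implemented; the function name, the constant, and the sample coordinates are hypothetical.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in meters


def gps_to_local(lat_deg, lon_deg, origin_lat_deg, origin_lon_deg):
    """Return the (east, north) offset in meters of a point from an origin.

    Uses the equirectangular approximation, which is reasonable only for
    small areas and away from the poles.
    """
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(origin_lat_deg))
    north = EARTH_RADIUS_M * d_lat
    return east, north


if __name__ == "__main__":
    # Hypothetical capture location relative to a hypothetical scene origin.
    origin = (34.4140, -119.8489)
    photo = (34.4150, -119.8470)
    print(gps_to_local(photo[0], photo[1], origin[0], origin[1]))
```

A placement like this needs only the coordinates stored with each file, which is why it remains usable for collections that lack enough overlap for an image-based geometric reconstruction.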

By harnessing metadata to display media files in their spatial and temporal context, WorldMediaViewer opens a window onto the creative process, while offering a framework for composers and artists with little to no technical background and without costly equipment to create and collaborate on 3D virtual environments using small or large multimedia collections.

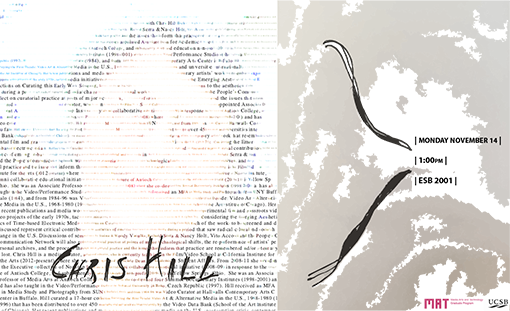

Speaker: Chris Hill

Time: Monday, November 14th, 1pm.

Location: Engineering Science Building, room 2001.

Each of the works to be screened and discussed represents a critical contribution to the aesthetics of emerging time-based electronic media during a period that saw radical cultural and social change in the U.S. Discussions of seminal work by Steina & Woody Vasulka, Richard Serra & Nancy Holt, Vito Acconci and the People’s Communication Network will also reflect on curatorial practice at points of major technological shifts, the re-performance of artists’ personal archives, and the process through which cultural practice and the issues that inform that practice are remembered and/or potentially lost.

Chris Hill is a media curator, artist and educator, who is currently teaching in the Film/Video School at California Institute of the Arts (2012-present) where she was recently appointed Associate Dean for Academic & Student Affairs. From 2008-11 she served on the Executive Collective of Nonstop Institute, a faculty/alumni collaborative educational initiative (2008-09) in response to the closure of Antioch College, and subsequently an arts and education non-profit (2009-11) in Yellow Springs, Ohio. She was an Associate Professor of Media Arts at Antioch College (1997-2008) where she co-directed four Summer Documentary Institutes (1998-2001) and has also taught in the Video/Performance Studio at the Technical University in Brno, Czech Republic (1997). Hill received an MFA in Media Study and Photography from SUNY Buffalo (1984), and from 1984-96 was Video Curator at Hallwalls Contemporary Arts Center in Buffalo. Hill curated a 17-hour collection Surveying the First Decade: Video Art & Alternative Media in the U.S., 1968-1980 (1996) that has been distributed to over 450 museums and universities internationally by the Video Data Bank (School of the Art Institute of Chicago). Her recent publications and media work have investigated documentary media on the U.S. incarceration crisis, contemporary artists’ work that re-embodies experimental film and grassroots video projects of the early 1970s, tactical media initiatives in response to a community emergency, and beekeeping.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

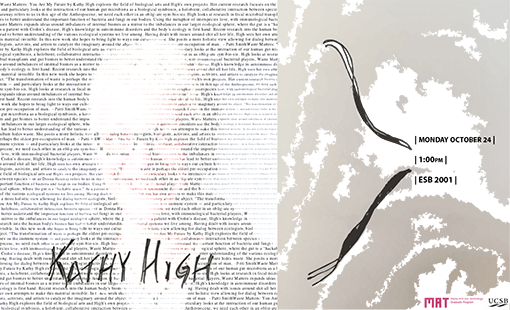

Speaker: Kathy High

Time: Monday, October 24th, 1pm.

Location: Engineering Science Building, room 2001.

"Waste Matters: You Are My Future" explores the field of biological arts and High's own projects. Her current research focuses on the immune system – and particularly looks at the interaction of our human gut microbiota as a biological symbiosis, a holobiont, collaborative interaction between species – or as Donna Haraway refers to us in this age of the Anthropocene, "we need each other in an obligate symbiosis". High looks at research in fecal microbial transplants and gut biomes to better understand the important function of bacteria and fungi in our bodies. Using the metaphor of interspecies love, with immunological bacterial players, Waste Matters expands ideas around imbalances of internal biomes as a mirror to the imbalances in our larger ecological sphere, where the gut is a "hackable space". As a patient with Crohn’s disease, High's knowledge in autoimmune disorders and the body’s ecology is first hand. Recent research into the human body's biomes has led to better understanding of the various ecological systems we live among. Having dealt with issues around shit all her life, High sees her own attempts to make this material invisible. In this new work she hopes to bring light to ways our culture hides waste. She posits a more holistic view allowing for dialog between ecologists, biologists, activists, and artists to catalyze the imaginary around the abject. "The transformation of waste is perhaps the oldest pre-occupation of man." - Patti Smith

Kathy High (USA) is an interdisciplinary artist and educator working in the areas of technology, science and art. She works with animals and living systems, and considers the social, political and ethical dilemmas surrounding areas of medicine/bio-science, biotechnology and interspecies collaborations. She has received awards from Guggenheim Memorial Foundation, Rockefeller Foundation, and National Endowment for the Arts, among others. Her art works have been shown at documenta 13 (Germany), Guggenheim Museum, Museum of Modern Art, Lincoln Center and Exit Art (NYC), UCLA (Los Angeles), Science Gallery (Dublin), NGBK (Berlin), Festival Transitio_MX (Mexico), MASS MoCA (North Adams), and Videotage Art Space (Hong Kong). High is Professor of Video and New Media at Rensselaer Polytechnic Institute in Troy, NY.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

When:

Monday October 17, 9:30am - 12:00pm and 5pm - 8pm

Tuesday October 18, 9:30am - 12:00pm and 6:30pm - 9pm

Where:

Media Arts and Technology Conference Room, 2nd Floor, Elings Hall

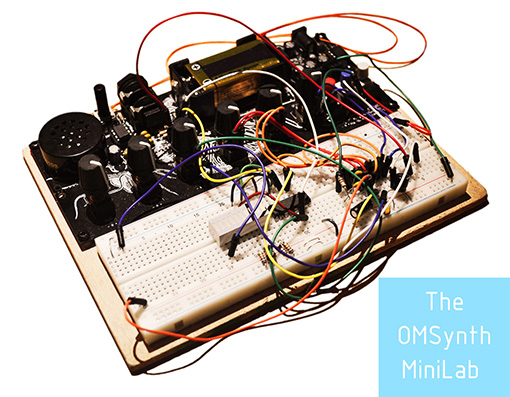

Over the course of 2 days, students will learn about the basics of electricity and "electrical thinking", while building a variety of interactive sound circuits. This workshop will utilize a circuit building tool designed by Edwards called the OMSynth MiniLab, which allows students to start tweaking and hacking functional circuits in minutes.

Workshop materials: $45, you take home your circuits.

Please confirm participation by sending an email to: peljhan (at) mat.ucsb.edu with the subject line CASPERELECTRONICS - WILL PARTICIPATE.

The number of participants is limited.

Speaker: Pete Edwards

Time: Monday, October 17th, 1pm.

Location: Engineering Science Building, room 2001.

From circuit bending to modular synthesis, artists and inventors around the world have strived to push beyond the pre-defined roles of modern electronics to find new and inspiring applications for the medium. Edwards will share his experience in the field of creative electronics as an artist and teacher for more than a decade to show how adventurous artists can not only harness the power of electricity but also gain inspiration from its organic behaviour.

Peter Edwards is an American artist, teacher and inventor working in the field of creative electronics. Over the past 15 years Edwards has worked closely with DIY electronics communities through his business casperelectronics and through outreach projects at universities and arts organisations around the world. He studied sculpture at the Rhode Island School of Design and developed the creative electronics department at Hampshire College. More recently, he studied electrical engineering and electroacoustics at the Royal Conservatory of The Hague while collaborating with musical electronics pioneers STEIM in Amsterdam. In 2016 Edwards moved to the Czech Republic to join forces with synthesizer producing arts collective Bastl Instruments. He now splits his time between Brno with Bastl Instruments and Brooklyn with arts collective The Silent Barn.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Thursday, October 6th, 5-10pm.

513 Garden Street, Santa Barbara

The installation has been extended through Saturday, October 22, 2016, and will be open to the public during the following dates and hours:

- Friday, Oct. 14, 5-7:30pm

- Saturday, Oct. 15, 3-5:30pm (followed by the DEEEP MODULAR event by David Muir Williamson at SBCAST, at 5:30pm: https://www.eventbrite.com/e/deeep-modular-tickets-26166984154)

- Sunday Oct. 16, 5-7:30pm

- Friday, Oct. 21, 5-7:30pm

- Saturday, Oct. 22, 5-7:30pm

ACCORDS No. 2

Accords is an installation-composition project exploring both 1. the cross-modal construct of flavor through the five traditional senses and 2. the idea of music as a modulated and structured temporal experience, not necessarily limited to sound.

This second piece in the series is a highly participatory, cross-sensory musical experience, which is comprised of several creative steps. First, visitors compose their own drinkable floral "chords," choosing from an array of floral waters and syrups to mix into a glass of sparkling mineral water. When the olfactive, gustative, and tactile qualities of their drinks are balanced to satisfaction, visitors can then take their glasses over to a digital composing station to tune sonic, resonant, and vibrating elements in the space either in harmony or in contrast with their drinks, thus creating musical multi- or cross-sensory polychords. While waiting their turns at the composing station, visitors can stand on vibrating platforms around the room to vicariously experience the mechanical wave profiles of other participants' drinks. To close, visitors clean their own glasses with a ceremonious libation of lavender soap.

In particular, this work hopes to shed light on a potential cognitive link between sound (with perhaps the other mechanical wave sense of vibratory touch) and the chemical senses (as suggested by several psychological studies). At the very least, it hopes to encourage some synaesthetic (th/dr/)inking!

With visual ink drop projections by Mark Hirsch.

About the artist

Crawshaw is a transdisciplinary composer, researcher, and educator. She is currently a PhD student both in the Media Arts and Technology (MAT) program at UC Santa Barbara, and in the Ecole Doctorale "Esthétique, Sciences et Technologies des Arts" (EDESTA) at the Université Paris 8 Vincennes-Saint-Denis. As an artist and researcher, she has worked on a variety of compositions, installations, and performative projects both in France and the United States. Crawshaw’s research interest is in exploring musical composition both 1) across the different sensory modalities and 2) as a means of structuring formative temporal experiences, particularly pedagogical ones. One main focus has been on composing computer music that engages the somatosensory system with very low bass frequencies and/or vibrotactility (vibrational touch). Her other work is concerned with the development of a transdisciplinary pedagogical model.

Friday, October 28th, 8pm.

Lotte Lehmann Concert Hall, Music Building

The Center for Research in Electronic Art Technology (CREATE), the Media Arts and Technology Program (MAT), and the Department of Music present Jean-Claude Eloy's "The Midnight of the Faith". The piece is written for electronic and concrete sounds projected octophonically around selected sentences by Edith Stein, recorded by German actress Gisela Claudius. The concert will be presented non-stop, with "Part I: Dawn" running for 70 minutes and 20 seconds (proposition, agitation, contemplation, illumination-jubilation-sublimation) and "Part II: Twilight" running for 50 minutes and 6 seconds (interrogation, tension, confrontation).

Admission is free and the event is open to the public.

Speaker: Øyvind Brandtsegg, Norwegian University of Science and Technology

Wednesday, November 9th, 6pm.

Studio Xenakis, room 2215 Music Building

The project explores cross-adaptive processing as a drastic intervention in the modes of communication between performing musicians. Digital audio analysis and processing techniques are used to enable features of one sound to inform the processing of another. This allows the actions of one performer to directly influence another performer’s sound, and to do so only by means of the acoustic signal produced by normal musical expression on the instrument. The project is run by the Norwegian University of Science and Technology, Music Technology, Trondheim. We collaborate with our partners at De Montfort University, Maynooth University, Queen Mary University of London, Norwegian Music Academy and University of California San Diego. The project is based in practical experimentation and for this we rely on collaboration with a range of performers. Project leader is Professor Øyvind Brandtsegg.
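To make the idea of one sound informing the processing of another concrete, here is a minimal sketch in which the loudness of one performer's signal controls the gain applied to another's. It is an assumed toy example, not the project's actual method; the function names, the choice of RMS as the feature, and the gain rule are all hypothetical.

```python
import math


def rms_envelope(signal, frame_size):
    """Return one RMS level per non-overlapping frame of the signal."""
    levels = []
    for start in range(0, len(signal) - frame_size + 1, frame_size):
        frame = signal[start:start + frame_size]
        levels.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return levels


def cross_adaptive_gain(source, target, frame_size=64, depth=1.0):
    """Attenuate `target` frame by frame wherever `source` is loud.

    The analyzed feature (RMS of `source`) drives a processing parameter
    (gain) applied to a different signal (`target`).
    """
    length = min(len(source), len(target))
    levels = rms_envelope(source[:length], frame_size)
    output = []
    for index, level in enumerate(levels):
        # Louder source -> lower gain on the target, clamped to [0, 1].
        gain = max(0.0, 1.0 - depth * min(level, 1.0))
        start = index * frame_size
        output.extend(x * gain for x in target[start:start + frame_size])
    return output


if __name__ == "__main__":
    performer_a = [1.0] * 64 + [0.0] * 64   # loud, then silent
    performer_b = [0.5] * 128               # steady tone stand-in
    processed = cross_adaptive_gain(performer_a, performer_b)
    print(processed[0], processed[-1])
```

Any other analyzed feature, such as pitch or spectral brightness, could in principle drive any other effect parameter in the same way.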

Øyvind Brandtsegg is a composer and performer working in the fields of algorithmic improvisation and sound installations. His main instruments as a musician are the Hadron Particle Synthesizer, ImproSculpt and Marimba Lumina. Hadron is a flexible real-time granular synthesizer, widely used within experimental sound design with over 200,000 downloads of the VST/AU version. Brandtsegg uses it for live processing of the acoustic sound from other musicians. As musician and composer he has collaborated with a number of artists, e.g. Oslo Sinfonietta, Motorpsycho, Kristin Asbjørnsen, Live Maria Roggen, Trondheim Jazz Orchestra, Trio Alpaca, Tre Små kinesere, Zeena Parkins, Maja Ratkje. In 2008, Brandtsegg finished his PhD equivalent artistic research project, focused on musical improvisation with computers. He has given lectures and workshops on these themes in USA, Germany, Ireland, and of course in Norway. Since 2010 he has been a professor of music technology at NTNU, Trondheim, Norway. Currently he is doing research into cross-adaptive processing for live performance, collaborating with an international team of researchers from the UK, USA, Holland and Norway.

CREATE: The Center for Research in Electronic Art Technology

Speaker: Patrick McCray

Time: Monday, September 26th, 1pm.

Location: Engineering Science Building, room 2001.

In the mid-1960s, an art and technology movement burst forth across the U.S. and Europe. It was catalyzed by corporate support, media exposure, a curious public, and – most of all – the enthusiastic participation of artists and engineers in formal and institutional collaborations. This talk explores this sudden blossoming of enthusiasm for art and technology and its subsequent and rather sudden retreat. While not ignoring the artists, I wish to restore the engineers and scientists to the foreground. I wish to recover the history of the engineers who contributed time, technical expertise, and aesthetic input to their artist colleagues. Following this thread through to the present day, I argue that today’s proliferation of academic and commercial art/design/technology/innovation centers is a legacy of a foundation set down by artists and engineers in the 1960s.

W. Patrick McCray is a professor in the Department of History at the University of California, Santa Barbara. Originally trained as a scientist, McCray’s most recent book (2013) is The Visioneers: How an Elite Group of Scientists Pursued Space Colonies, Nanotechnologies, and a Limitless Future. This won the Watson and Helen Miles Davis Prize from the History of Science Society as the "best book written for a general audience", as well as the Eugene M. Emme Award from the American Astronautical Society. Besides authoring three other books about the history of science and technology, he also recently co-edited a collection of essays called Groovy Science: Knowledge, Innovation, and the American Counterculture which the University of Chicago published in 2016.

In addition to grants from the National Science Foundation – including one to create a center at UCSB looking at the societal implications of new technologies – McCray has held research fellowships from the American Council of Learned Societies (2010), the California Institute of Technology (2012), and the Smithsonian (2015). He is a Fellow of the American Association for the Advancement of Science (elected 2011) and the American Physical Society (elected 2013). Finally, in 2016, McCray was an invited attendee at the World Economic Forum’s annual meeting in Davos, Switzerland.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Date: Thursday, September 8th

Time: 3pm

Location: Elings Hall, room 2003 (MAT Conference room).

Abstract:

The field of music information retrieval (MIR) has enabled research in "intelligent" audio processing. Emerging applications of MIR techniques in music production might provide mixing engineers, musicians and composers control over extant effects processing plugins based on the audio content of the music. In adaptive effects processing, mapping functions are used to modulate algorithm parameters via features that are extracted from the input audio signal or signals. We present an audio software plugin that is designed to facilitate feature-parameter mappings within digital audio workstations. We refer to it as the Adaptive Digital Effects Processing Tool (ADEPT).
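The feature-to-parameter mapping described above can be sketched as follows. This is a minimal illustration under stated assumptions and does not reflect ADEPT's actual design: the feature (per-frame peak amplitude), the linear mapping function, and the target parameter (a hypothetical filter cutoff in Hz) are all chosen for the example.

```python
def peak_feature(frame):
    """Extract a simple feature: the peak absolute amplitude of a frame."""
    return max(abs(x) for x in frame)


def linear_map(value, in_lo, in_hi, out_lo, out_hi):
    """Map a feature value linearly into a parameter range, with clamping."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))
    return out_lo + t * (out_hi - out_lo)


def parameter_track(signal, frame_size, mapping):
    """Return one parameter value per non-overlapping frame of the signal."""
    return [
        mapping(peak_feature(signal[start:start + frame_size]))
        for start in range(0, len(signal) - frame_size + 1, frame_size)
    ]


if __name__ == "__main__":
    # Hypothetical mapping: peak amplitude 0..1 -> cutoff 200..2000 Hz.
    def to_cutoff_hz(peak):
        return linear_map(peak, 0.0, 1.0, 200.0, 2000.0)

    audio = [0.0] * 4 + [0.5, -0.5, 0.25, 0.0] + [1.0] * 4
    print(parameter_track(audio, 4, to_cutoff_hz))
```

Swapping `linear_map` for an exponential or table-based curve changes how the effect responds without touching the feature extraction.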

Date: Monday, September 5th

Time: 3pm

Location: Elings Hall, room 2611 (Experimental Visualization Lab).

Abstract:

Biometric technology has brought enhancements to identification and access control. As more digital applications request people to input their biometric data as a more convenient and secure method of identification, the possibility of losing their personal data and identities may increase. The phenomenon of biometric data abuse causes one to question what their true identity may be and what methods can be used to define identity and hidden narratives. The questions of identification and the insecurity of biometric data have become my inspiration, providing artistic approaches to the manipulation of biometric data and having the potential to suggest new directions for solving the problems. To do so, in-depth investigation of the narratives beyond the visual features of the biometric data is necessary. This content can create a close link between an artwork and its audience by causing the latter to become deeply engaged with the artwork through their own stories.

This dissertation examines narratives and artistic explorations discovered from one form of biometric data, fingerprints, drawing on insights from various fields such as genetics, hand analysis, and biology. It also presents contributions on new ways of creating interactive media artworks using fingerprint data based on visual feature analysis of the data and multimodal interaction to explore their sonic signatures. Therefore, the artwork enriches interactive media art by incorporating personalization into the artistic experience, and creates a unique personalized experience for each audience member. This thesis documents developments and productions of a series of artworks, Digiti Sonus, by focusing on its conceptual approaches, design, techniques, challenges and future directions.

Date: Tuesday, August 30th

Time: 3pm

Location: Elings Hall, room 2611 (Experimental Visualization Lab).

Abstract:

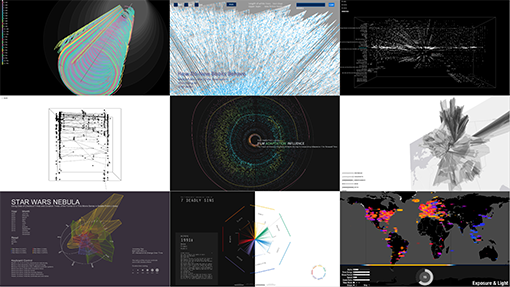

"Photo" is derived from the Greek word "phos" which means light. Photography is the art and science of capturing this light to create images using optical-mechanical and other light-sensing mechanisms. Modern digital cameras today embed image "metadata" in the photos, which is also referred to as EXIF data. In recent years, there has been significant interest in the scientific research community to analyze aesthetics using pixel-based image processing algorithms. However, there has been no scientific research in analyzing metadata to understand photographic aesthetics, especially from a photographer’s point of view. "Into the Technicalities of Photography" explores image aesthetics using this EXIF data. The approach analyzes four EXIF tags: Focal Length, Shutter Speed, Aperture and ISO. I propose to analyze this data to understand if sets of selected images when collectively examined reveal the photographer's aesthetic preferences. The research presented aims to visualize and organize images as actionable data for better understanding of light, exposure and perspective, which I believe is useful for all photographers.

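As a small illustration of what the four EXIF tags named above can reveal when examined collectively, the sketch below computes an ISO-100-referenced exposure value for each photo and averages it with focal length over a set. The exposure value formula is standard photographic arithmetic; the dictionary keys, the summary statistics, and the sample values are assumptions for this example and are not taken from the thesis.

```python
import math


def exposure_value_iso100(f_number, shutter_seconds, iso):
    """Exposure value normalized to ISO 100: log2(N^2 / t) - log2(ISO / 100)."""
    if f_number <= 0 or shutter_seconds <= 0 or iso <= 0:
        raise ValueError("aperture, shutter speed, and ISO must be positive")
    ev_at_iso = math.log2(f_number ** 2 / shutter_seconds)
    return ev_at_iso - math.log2(iso / 100.0)


def summarize(photos):
    """Average exposure value and focal length over a set of photos.

    Each photo is a dict with the hypothetical keys 'focal_length' (mm),
    'shutter_speed' (seconds), 'aperture' (f-number), and 'iso'.
    """
    evs = [
        exposure_value_iso100(p["aperture"], p["shutter_speed"], p["iso"])
        for p in photos
    ]
    focal_lengths = [p["focal_length"] for p in photos]
    return {
        "mean_ev100": sum(evs) / len(evs),
        "mean_focal_length_mm": sum(focal_lengths) / len(focal_lengths),
    }


if __name__ == "__main__":
    # Made-up sample values, for illustration only.
    selection = [
        {"focal_length": 35.0, "shutter_speed": 1 / 250, "aperture": 8.0, "iso": 100},
        {"focal_length": 85.0, "shutter_speed": 1 / 60, "aperture": 1.8, "iso": 800},
    ]
    print(summarize(selection))
```

A low mean exposure value across a selection would suggest a preference for dim scenes, while the mean focal length hints at a preferred perspective.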
Dates: Saturday, June 25, 12pm to Sunday, August 14, 5pm.

Location: UCSB Art, Design, and Architecture Museum

Opening Reception: Friday, June 24 from 5:30pm to 7:30pm

Image generated by the AlloSphere Research Group. Professor JoAnn Kuchera-Morin, director. Based on an fMRI scan of Professor Marcos Novak's brain.

Selected students' works are highlighted in this exhibition, exploring the limits of what is possible in technologically sophisticated art and media from both an artistic and engineering viewpoint. Featuring projects by Chang He, Mark Hirsch, Kurt Kaminski, Lu Liu, Weihao Qiu, F. Myles Sciotto, Ambika Yadav, Jing Yan, and Junxiang Yao. The exhibition is part of the Art, Design, and Architecture Museum's summer program "Sub-Rosa, Behind the Scenes".

Dates of Show: May 26, 27, 28 & June 2, 2016

Animation by Jing Yan

Please join us for our annual display of Master's and Doctoral student work in Media Arts and Technology. Come experience the Allosphere, interactive installations, live audio-visual performances and new media art.

White noise, a noise containing all frequencies in equal proportion, represents all possibilities in equal likelihood. As this year's theme, it represents the blank canvas; it's the marble awaiting the sculptor to bring these possibilities to life. At MAT, our research is like white noise in that it contains "signals" from every field. As technologists and artists, we weave through this diverse research in novel ways, creating new works that transcend the present way we view the world. Our show is the product of this process, and we invite all to join us in its celebration.

Postcard Front (PDF) | Postcard Back (PDF)

Speaker: Zhang Ga

Time: Friday, May 27th, 1pm.

Location: California NanoSystems Institute.

Zhang Ga is an internationally recognized media art curator and professor of Media Art at Tsinghua University and associate professor at the School of Art, Media and Technology at Parsons The New School for Design. He holds appointments as Consulting Curator of Media Art at the National Art Museum of China, Senior Researcher at the Media and Design Lab of EPFL | Federal Institute of Technology Lausanne in Switzerland, and Visiting Scientist at the MIT Media Lab in the US.

Ga studied art in China, at the Berlin Academy of Arts in Germany (UdK) with a DAAD fellowship and at the Parsons School of Design in the US.

Ga directed and curated Synthetic Times: International New Media Art Exhibition, a Beijing Olympics Cultural Project organized by the National Art Museum of China in 2008, eArts Beyond at Shanghai eArts Festival in 2009, and Timelapse at the National Art Museum of China and Biel Contemporary Art Museum in 2010. In addition to "Sense Exercise", his upcoming curatorial projects include TransLife for the National Art Museum of China, Beijing (2011). He speaks widely around the world on media art and culture, has organized conferences and digital salons, has written and lectured on new media art practice and criticism, and has served on juries for media art grants. Zhang Ga directs the Netart Initiative, a loosely knit, open source-based, hub-styled, forum-oriented, action-enabled consortium.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Date: Thursday, June 2nd

Time: 11am

Location: Elings Hall, room 2611 (Experimental Visualization Lab).

Abstract:

From visualizations to movies to video games, a camera is often an indispensable tool for structuring narratives given a set of input data. Today, an ever-increasing number of images are gathered by public, private, benevolent, and/or malicious agents, autonomous or controlled, and it is humans who must parse them in order to turn raw data into knowledge. However, the size of these datasets renders them intractable to understanding without a level of analysis and automation: either in what to capture, or in how to capture it, or in how to present/re-present it.

This dissertation examines the process of automation of real and virtual cameras, drawing on insights from artificial intelligence, robotics, narrative theory, and interactive systems design, and presents two contributions: an analysis of automation in camera systems, and a prototype software tool for virtual camera control. Informed by the content of Edward Branigan's book Projecting a Camera, the software provides a proof-of-concept for generating automated visual narratives through camera control in virtual simulations.

Date: Tuesday, May 24th, 3:30pm - 5:30pm.

Location: Old Little Theater.

The aim of this workshop is to combine contemporary improvisational performance with critical making through myth. Working with excerpts from Euripides’ famous tragedy The Bacchae, through improvisation, we will construct a combined musical- and movement-based language to explore and reinstate the often forgotten musical component of ancient Greek tragedy. Participants, acting simultaneously as musical composers and actors, will use a combination of voice, acoustic instruments, and provided gesture-controllable tools (hooked up to electroacoustic sounds) to compose and perform musical and gestural motifs. These motifs will explore the growing Dionysian influence over the text and characters animating the tragedy.

To support this exploration, we will look at procedural improvisational techniques and discuss examples of mythological creative works from both antiquity and the avant-garde. Specifically, we will examine works that embed thematic and philosophic reflection into their very compositional building blocks. In this workshop, the themes of identity and behavior will direct our compositional efforts. More generally, this workshop will explore the unification of music with movement, of dramatic writing with music composition, of the self with the other, and of myth with digital media tools.

The Bacchae examines the need for stepping outside oneself and serves as a cautionary tale against highly repressive behavior. In this play, Dionysus— god of theatre, wine, and madness— reigns supreme as the embodiment of the notion of “the other” (in relation to the self). In this workshop, participants will have the opportunity to embrace the Dionysus in us all.

Bring your instruments and be sure to read Euripides' Bacchae before attending!

Speaker: Andreas Schlegel

Time: Monday, May 23rd, 4pm.

Location: Engineering Science Building, room 2001.

In recent years computational and screen-based works have flourished within media arts and have since had significant influence across multiple disciplines. With the shift in computing platforms towards mobile, embedded systems, micro-sized devices and the advent of bio-electrical systems, the potential for new tools and artistic expressions, transdisciplinary investigations and interdisciplinary collaborations is imminent. Technology and networks have become a ubiquitous part of our everyday lives. With easy access to hardware and software, the availability of technology for daily activities has now become the norm and defines the conditions in which we live, work, learn, and interact. With these shifts in the relationship we have with technology, I would like to question how the conditions and systems we operate in inform and shape future art practices and interdisciplinary projects. During my talk I will present a series of works concerned with exploring such systems in my artistic practice, education, performance, and urban space.

Andreas Schlegel works across disciplines and creates artifacts, tools and interfaces where technology meets everyday life situations in a curious way. Many of his works are collaborative and concerned with emerging and open source technologies where audio, visual and physical output is created by humans and machines through interaction, computation and process.

Currently Andreas lives and works in Singapore and leads the Media Lab at Lasalle College of the Arts where he also lectures across faculties. His work at the lab is practice-based, collaborative and interdisciplinary in nature and aims to blur the boundaries between art and technology. In 2007 he co-founded Syntfarm, an art collective interested in the intersection of art, nature and technology, where many of his collaborative projects are based. He stays active within the international media arts environment and has been contributing to the open source project Processing.org since 2004. In his current research he aims to find artistic expressions for real-time data that resides within generative and networked systems.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Eric Lyon

Time: Monday, May 16th, 4pm.

Location: Engineering Science Building, room 2001.

Composing computer music for large numbers of speakers is a daunting process, but it is becoming increasingly practicable. This paper argues for increased attention to the possibilities for this mode of computer music on the part of both creative artists and institutions that support advanced aesthetic research. We first consider the large role that timbre composition has played in computer music, and posit that this research direction may be showing signs of diminishing returns. We next propose spatial computer music for large numbers of speakers as a relatively unexplored area with significant potential, considering reasons for the relative preponderance of timbre composition over spatial composition. We present a case study of a computer music composition that focuses on the orchestration of spatial effects. Finally we propose some steps to be taken in order to promote exploration of the full potential of spatial computer music.

Eric Lyon is a composer and computer music researcher whose work focuses on articulated noise, spatial orchestration and computer chamber music. His software includes FFTease and LyonPotpourri. He is the author of "Designing Audio Objects for Max/MSP and Pd", which explicates the process of designing and implementing audio DSP externals. His music has been selected for the Giga-Hertz prize, MUSLAB, and the ISCM World Music Days. Lyon has taught computer music at Keio University, IAMAS, Dartmouth, Manchester University, and Queen’s University Belfast. Currently, he teaches at Virginia Tech, and is a faculty fellow at the Institute for Creativity, Arts, and Technology.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Luis Blackaller

Time: Monday, May 9th, 4pm.

Location: Engineering Science Building, room 2001.

Luis Blackaller is a writer, director, designer and media artist from Mexico City. He has a multidisciplinary background that covers aspects of entertainment, science, design, art and visual storytelling. He earned a BS with honors in mathematics at UNAM, and graduated as a character animator from the Vancouver Film School. With more than twelve years of experience in the Mexican film and television industry, Luis has worked as a designer, art director, motion graphics artist and storyboard artist among Academy Award winners in films like Amores Perros, 21 Grams and Babel. In 2008, Luis earned an MS from the MIT Media Lab under the mentorship of John Maeda, where he designed and developed interactive software systems and tools to study the spread of user-generated content online, alternate revenue models, and online creative social systems and their relationship with artistic expression and communication. As an MIT student, he attended writing workshops with Junot Diaz and Joe Haldeman, received character design and world building training from Frank Espinosa, and learned transmedia methods with Henry Jenkins.

After graduating from MIT, Luis worked as producer and creative director for several research initiatives at MIT, where he designed and supervised the execution of alternative strategies of communication and promotion of research with the help of documentary videographers and web developers. More recently, Luis can be found in Venice, California, where he holds the position of Creative Director at WEVR. During his tenure at WEVR, he has developed, shot and post-produced more cinematic virtual reality than almost anyone in the world, helping to define the new sensibilities, visual language and production techniques that are pushing forward the emerging medium of VR.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Date: Thursday, May 5th

Time: 5-10pm

Location: SBCAST, Unit E

513 Garden St

Santa Barbara

As part of MAT/SBCAST First Thursdays, David Gordon will present FLUID MOTION, a transdisciplinary performance combining live painting, video, and sound. Gordon’s painting process harnesses fluid dynamics to create complex marbling patterns, similar to Japanese suminagashi ink painting. By placing high flow acrylic paint on a wet canvas flat against the ground, then slightly tilting it back and forth, complex patterns emerge, often evoking aerial landscapes, astronomical photos, or microscopic processes. Custom sonification software will generate electronic sound based on color, shape, and motion of the wet paint surface.

Date: Friday, May 13th

Time: 1pm

Location: Elings Hall, room 2615 (the transLAB).

Abstract:

In this thesis, I explore the possibility of empowering the moving body through immersive technological environments that simulate aspects of nature. I explore this through a series of dance performances and virtual worlds called Kodama. Kodama was the content grounding the development of a proof-of-concept programming platform called Seer, designed for building body-driven interactive audiovisual worlds.

Speaker: Masashi Nakatani

Time: Friday, May 6th, 4pm.

Location: Engineering Science Building, room 2003.

Abstract:

Our research group has found that light touch is mediated and amplified by Merkel cells, skin mechanoreceptors that transduce mechanical stimuli into electrical signals that activate somatosensory neurons. This function is achieved by the force-gated ion channel called Piezo2, and a recent study shows that this channel also plays an important role in proprioception. This talk will give an overview of the latest research topics in haptic neuroscience and introduce a possible bridge between sensory biology and behavioral science. The speaker will also offer several ideas for how behavioral neuroscience experiments can be implemented with haptic engineering, based on the haptic toolkits we have developed.

Bio:

Research Institute for Electronic Science, Hokkaido University - After receiving a Ph.D. in engineering, Masashi Nakatani has been investigating how the sense of touch can serve our emotional reality. He is the founder of TECHTILE, a TECHnology based tacTILE design. Currently he is working on the basic study of touch neuroscience, and on spreading haptic content in education for understanding the human ability to realize the world.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Jay LeBoeuf

Time: Monday, May 2nd, 4pm.

Location: Engineering Science Building, room 2001.

Abstract:

How do leading audio, music, and video tech companies bring products from ideation through commercialization? Join us as we go behind the scenes at leading companies and explore key roles in the media tech industry including marketing, product management, software/hardware development, user experience, R&D, and more. We'll explore case studies of your favorite products.

Bio:

Jay LeBoeuf is the Executive Director of Real Industry, an education nonprofit that prepares its students to enter the technology industry by exposing them to leading tech companies’ practices. He is a lecturer in music technology and music business at Stanford University's Center for Computer Research in Music and Acoustics (CCRMA), and he advises digital media startups such as Chromatik, LANDR, and Kadenze. As an entrepreneur, Jay founded Imagine Research, an intelligent audio technology startup that built a search engine for sound. In 2012, iZotope acquired Imagine Research and Jay joined the iZotope executive team leading research & development, technology strategy, and intellectual property.

Prior to creating Imagine Research, LeBoeuf was an engineer and researcher in the Advanced Technology Group at Avid Technology. There he led research that expanded the power of Pro Tools, the industry standard for digital audio workstations. He has been recognized as a Bloomberg Businessweek Innovator, awarded $1.1M in Small Business Innovation Research grants by the U.S. National Science Foundation, and interviewed on BBC World.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Curtis Roads

Time: Monday, April 25th, 6pm.

Location: Engineering Science Building, room 2001.

Abstract:

Electronic technology has liberated musical time and changed musical aesthetics. In the past, musical time was considered as a linear medium that was subdivided according to ratios and intervals of a more-or-less steady meter. However, the possibilities of envelope control and the creation of liquid or cloud-like sound morphologies suggest a view of rhythm not as a fixed set of intervals on a time grid, but rather as a continuously flowing, undulating, and malleable temporal substrate upon which events can be scattered, sprinkled, sprayed, scrambled, or stirred (scrubbed) at will. In this view, composition is not a matter of filling or dividing time, but rather of generating time. The core of this paper introduces aspects of rhythmic discourse that appear in my electronic music. These include: the design of phrases and figures, exploring a particle-based rhythmic discourse, deploying polyrhythmic processes, the shaping of streams and clouds, using fields of attraction and repulsion, creating pulsation and pitched tones by particle replication, using reverberant space as a cadence, contrasting ostinato and intermittency, using echoes as rhythmic elements, and composing with tape echo feedback. The lecture is accompanied by sound examples.

Bio:

Curtis Roads creates, teaches, and pursues research in the interdisciplinary territory spanning music and sound technology. His specialties include electronic music composition, microsound synthesis, graphical synthesis, sound analysis and transformation, sound spatialization and the history of electronic music. He is keenly interested in the integration of electronic music with visual and spatial media, as well as the visualization and sonification of data. Certain of his compositions feature granular and pulsar synthesis, methods he developed for generating sound from acoustical particles. These are featured in his CD+DVD set POINT LINE CLOUD (Asphodel, 2005). His writings include over a hundred monographs, research articles, reports, and reviews.

Books include the textbook "The Computer Music Tutorial" (1996, The MIT Press), "Musical Signal Processing" (co-editor, 1997, Routledge, London), "L'audionumerique" (2007, Dunod, Paris), "The Computer Music Tutorial" - Japanese edition (January 2001, Denki Daigaku Shuppan, Tokyo), and "Microsound" (2001, The MIT Press), which explores the aesthetics and techniques of composition with sound particles. The Chinese edition of "The Computer Music Tutorial" was published in 2011 (People's Music Publishing, Beijing). His new book is "Composing Electronic Music: A New Aesthetic" (2015, Oxford). A revised edition of "The Computer Music Tutorial" is in the works.

His new set of music is "Flicker Tone Pulse".

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Wednesday, April 20th, 7:30pm.

Lotte Lehmann Concert Hall, Music Building

Works by Warren Burt, Clarence Barlow, Curtis Roads, Harald Bode, Yon Visell, and Scott Perry.

Admission is Free.

Speaker: Ingo Günther

Time: Monday, April 18th, 4pm.

Location: Engineering Science Building, room 2001.

World Processor x Geo-Cosmos, 2011.

Abstract:

Ingo will share his experience as advisor to the New York Hall of Science, and the Museum of Emerging Sciences and Innovation in Tokyo, Japan. He will also discuss the creative process behind notable artworks such as "World Processor x Geo-Cosmos", "Exosphere / Globefield", "Ceterum Censeo: Video ex Nihilo", and others.

Bio:

Ingo Günther grew up in Germany, and traveled to Northern Africa, North and Central America and Asia before studying Ethnology and Cultural Anthropology at Frankfurt University (1977), and sculpture and media at Kunstakademie Düsseldorf (Schwegler, Uecker, Paik, MA 1982, postgrad year 1983). He was awarded the German National Academic Foundation Scholarship, a P.S.1 residency, New York, a DAAD scholarship, and a Kunstfonds grant.

Early sculptural media works and journalistic projects were pursued in TV, print, and the art field. Played a pioneering and crucial role in the evaluation and interpretation of satellite data for international print media and TV news. 1987 documenta 8 installation K4 (C3I) (Command Control Communication and Intelligence). Worked as artist, correspondent and author for German and Japanese news media. 1989 began Worldprocessor project and founded the first independent TV station in Eastern Europe (Channel X, Leipzig). Research in Cambodian, Burmese and Laotian refugee camps inspired the Refugee Republic project (since 1990).

Works shown, among other public venues, at: Nationalgalerie Berlin, 1983 and 1985; Venice Biennale, 1984; documenta, Kassel, 1987; P3 Art and Environment, Tokyo, 1990, 1992, 1996, and 1997; Ars Electronica, Linz, 1991; Centro Cultural de Belem, Lisbon, 1995; Hiroshima City Museum of Contemporary Art, 1995; Guggenheim Museum, New York, 1996; Neues Museum Weserburg Bremen, 1999; Stroom, The Hague, 1999; V2 Rotterdam, 2003; Yokohama Triennale, Japan; Kunstverein Ruhr, Essen, 2005; San Jose Museum of Art, San Jose, CA, 2006; Espacio Fundacion Telefonica, Buenos Aires, Argentina, 2007; Iwaki City Museum, Japan, 2009; Kumu Kunstimuuseum, Tallinn, Estonia, 2011; Museum of Emerging Sciences and Innovation, Tokyo, Japan, 2012-14; CCCA, Barcelona, 2014. Early retrospective at Kunsthalle Düsseldorf, 1998; Bremen, Germany and Stroom, The Hague, NL in 1999.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Ali Momeni

Time: Monday, March 7th, 4pm.

Location: California NanoSystems Institute, Elings Hall, room 1601.

Abstract:

Ali Momeni will discuss several current projects at the intersection of performance art, design and engineering. These projects include a human-robot collaborative theatrical performance based on Ferdowsi's epic poem Shahnameh, a participatory public projection work about disease and healthcare, an investigation of the sounds of the gut as indicators for activities in the mind, and a mobile augmented reality application for creative engagement with works of art in museums and galleries.

Bio:

Momeni was born in Isfahan, Iran and emigrated to the United States at the age of twelve. He studied physics and music at Swarthmore College and completed his doctoral degree in music composition, improvisation and performance with computers at the Center for New Music and Audio Technologies at UC Berkeley. He spent three years in Paris where he collaborated with performers and researchers from La Kitchen, IRCAM, Sony CSL and CIRM. Between 2007 and 2011, Momeni was an assistant professor in the Department of Art at the University of Minnesota in Minneapolis, where he directed the Spark Festival of Electronic Music and Art, and founded the urban projection collective called the MAW. Momeni currently teaches in the School of Art at Carnegie Mellon University, directs CMU ArtFab, co-directs CodeLab, and teaches in CMU’s IDEATE and Emerging Media Programs.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Date: Monday, March 14th

Time: 1pm

Location: Elings Hall, room 2615 (the transLAB).

Abstract:

To make the transition from industry to society, robots need to be able to interact with novice users. While natural conversations and emotions are difficult to express through robots, their expression makes robots more relatable and predictable. I believe that it is important to find a universal language so that robots can communicate to be better able to navigate uncontrolled environments with untrained users who may or may not even speak the same language. Looking at non-linguistic communication as a universal language, I am interested in what emotive responses are caused by non-linguistic sound.

ROVER, the Reactive Observant Vacuous Emotive Robot, is an art installation that explores mobile embodied interaction and can also be used to collect data from users to learn about human and robot interaction, particularly emotive response to sound. The work was shown as a series of art installations/performances and a pilot study was done. A system was defined for creating emotive sound based on research in music and linguistics, manipulating aspects of fundamental frequency, amplitude, articulation/timbre, and motive. The pilot study investigated this, focusing on the effect of mobile embodied interaction on emotive expressive responses to algorithmically generated non-linguistic utterances. It was found that happy sounds increased self-reported valence and sad sounds decreased it. Also, it was found that singing computers are comparatively more emotionally arousing than singing robots. This finding could be due to a variety of factors including volume, distance, input method or position. Whether the robot moved did not affect arousal. These are promising results and give us a precedent to continue with a full study focusing on analyzing the video collected by ROVER.

Speaker: David Rokeby

Time: Wednesday, March 2nd, noon.

Location: Engineering Science Building, room 1001.

Abstract:

David Rokeby will explore the idea that the computer can function as a sort of prosthetic organ of philosophy. Computers can serve as a tool with which to reinvigorate well-worn but unresolved philosophical questions in tangible and visceral ways. Through his computer-based art installations, Rokeby has probed questions of embodiment, consciousness, perception, language, and time as well as more political questions such as surveillance. He will illustrate these interests and explorations with examples from his 35 years of arts practice.

Bio:

David Rokeby is a Toronto-based artist who works with a variety of digital media to critically explore the impacts these media are having on contemporary human lives. David Rokeby's early work Very Nervous System (1982-1991) was a pioneering work of interactive art, translating physical gestures into real-time interactive sound environments. He has exhibited and lectured extensively internationally and has received numerous international awards including a Governor General’s Award in Visual and Media Arts (2002), a Prix Ars Electronica Golden Nica for Interactive Art (2002), and a British Academy of Film and Television Arts "BAFTA" award in Interactive art (2000).

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Alessandro Febretti

Time: Monday, February 29th, 4pm.

Location: Engineering Science Building, room 2001.

Abstract:

In the domain of large-scale visualization instruments, Hybrid Reality Environments (HREs) are a recent innovation that combines the best-in-class capabilities of immersive environments with the best-in-class capabilities of ultra-high-resolution display walls. Co-located research groups in HREs tend to work on a variety of tasks during a research session (sometimes in parallel), and these tasks require 2D data views, 3D views, linking between them and the ability to bring in (or hide) data quickly as needed. Addressing these needs requires a matching software infrastructure that fully leverages the technological affordances offered by these new instruments. I detail the requirements of such infrastructure and outline the model of an operating system for Hybrid Reality Environments. I present an implementation of core components of this model, called Omegalib. Omegalib is designed to support dynamic reconfigurability of the display environment: areas of the display can be interactively allocated to 2D or 3D workspaces as needed.

Bio:

Alessandro Febretti is a Senior Interactive Visualization Specialist at Northwestern University and a PhD Candidate at the Electronic Visualization Lab, University of Illinois at Chicago. His research is at the intersection of immersive environments, scientific visualization and collaborative work. While at EVL he concentrated on Visualization Instrument research (hardware and software) and on human-computer interaction in single and multiuser scenarios. Alessandro contributed to the creation of a small scale hybrid workspace (the OmegaDesk) and a large scale Hybrid Reality Environment (the CAVE2). In addition to his work on hybrid systems, he designed and implemented desktop and web based visualization tools and user interfaces in a variety of research domains, from Astrophysics to Art History. Prior to joining EVL, Alessandro worked at Milestone Games and taught Videogame Design at the Polytechnic University of Milan, Italy.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Dates: February 18, 19 and 20.

Location: Systemics Lab, 3rd floor Elings Hall (California Nanosystems Institute).

The Systemics Lab and Hackteria are inviting you to join a 3 day collaborative exploration workshop on DIY tools for sonification of biomolecular and nanochemical processes. Our current guest, Marc Dusseiller aka dusjagr, will guide you through various possibilities to access the world of the smallest at the converging interphase of life and technology on the nanoscale. How can we see or hear phenomena happening on a few nanometers? Or can we even smell them?

We will look at resistive sensors, DIY microscopes, self-made spectrometers and simple photometers, as well as more elaborate tools such as the quartz crystal microbalance (yes, it's a piezo!). To sense, measure, program and make music, we will use the Teensy 3.2 ARM M4 microcontroller, fully compatible with the Arduino environment. All these tools can be used in chemical- and bioanalytics as well as environmental monitoring. We will explore and discuss the role of sonification and extended perception for such sensing technologies.

Thursday 3 - 7pm

Friday 10.30am - 5pm

Saturday 10.30am - 5pm

Facilitator

Dr. Marc R. Dusseiller is a transdisciplinary scholar, lecturer for micro- and nanotechnology, cultural facilitator and artist. He performs DIY (do-it-yourself) workshops in lo-fi electronics and synths, hardware hacking for citizen science and DIY microscopy. More info here: seminar.mat.ucsb.edu/#marc.

With special guest Leslie Garcia, www.interspecifics.cc.

Speakers: Dmitry Gelfand and Evelina Domnitch

Time: Monday, February 22nd, 5pm.

Location: California NanoSystems Institute, Elings Hall, room 1605.

Abstract:

Despite the overwhelming, century-long success of quantum physics, it is still unknown where, or even whether, there lies a horizon separating our reality from the quantum world of light upon which it is based. It is this slippery frontier that Domnitch and Gelfand explore through installations and performances. Formerly considered impossible to imagine, let alone perceive, a variety of quantum phenomena have been detected on macroscopic scales, from colloidal liquids and biological systems to astrophysical phenomena. These thresholds can be experienced through meticulously orchestrated conditions coupled with an active attunement of the senses.

Bios:

Dmitry Gelfand (b.1974, St. Petersburg, Russia) and Evelina Domnitch (b. 1972, Minsk, Belarus) create sensory immersion environments that merge physics, chemistry and computer science with uncanny philosophical practices. Current findings, particularly in the domain of mesoscopics, are employed by the artists to investigate questions of perception and perpetuity. Such investigations are salient because the scientific picture of the world, which serves as the basis for contemporary thought, still cannot encompass the unrecordable workings of consciousness.

Having dismissed the use of recording and fixative media, Domnitch and Gelfand’s installations exist as ever-transforming phenomena offered for observation. Because these rarely seen phenomena take place directly in front of the observer without being intermediated, they often serve to vastly extend one’s sensory threshold. The immediacy of this experience allows the observer to transcend the illusory distinction between scientific discovery and perceptual expansion.

In order to engage such ephemeral processes, the duo has collaborated with numerous scientific research facilities, including the Drittes Physikalisches Institut (Goettingen University, Germany), the Institute of Advanced Sciences and Technologies (Nagoya), Ecole Polytechnique (Paris) and the European Space Agency. They are recipients of the Japan Media Arts Excellence Prize (2007), and four Ars Electronica Honorary Mentions (2013, 2011, 2009 and 2007).

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Time: Thursday, February 18th

Four shows at: 6pm, 7pm, 8pm and 9pm

Location: The AlloSphere, 2nd floor Elings Hall.

Featuring a multichannel remix of John Chowning's "Turenas", and works by the UCSB CREATE Ensemble, Akshay Cadambi, David Gordon, Federico Llach and Fernando Rincon Estrada.

Time: Monday, February 8th, 4pm.

Location: Engineering Science Building, room 2001.

Bio:

Dr. Marc R. Dusseiller is a transdisciplinary scholar, lecturer for micro- and nanotechnology, cultural facilitator and artist. He performs DIY (do-it-yourself) workshops in lo-fi electronics and synths, hardware hacking for citizen science and DIY microscopy. He has co-organized Dock18, Room for Mediacultures, the diy* festival (Zürich, Switzerland), KIBLIX 2011 (Maribor, Slovenia), and workshops for artists, schools and children as the former president (2008-12) of the Swiss Mechatronic Art Society, SGMK. In collaboration with Kapelica Gallery, he started the BioTehna Lab in Ljubljana (2012 - 2013), an open platform for interdisciplinary and artistic research on life sciences. Currently, he is developing means to perform bio- and nanotechnology research and dissemination, Hackteria | Open Source Biological Art, in a DIY / DIWO fashion in kitchens, ateliers and in developing countries. He was the co-organizer of the various editions of HackteriaLab (2010 - 2014) in Zürich, Romainmotier, Bangalore and Yogyakarta.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Pat Scandalis

Time: Monday, January 25th, 4pm.

Location: California NanoSystems Institute, Elings Hall, room 1601.

Abstract:

The pervasiveness of handheld mobile computing devices has created an opportunity to realize ubiquitous virtual musical instruments. These devices are powerful, connected and equipped with a variety of sensors allowing parametrically controlled, physically modeled instruments that previously required an 8-DSP farm with minimal UI.

We will provide a brief history of physically modeled musical instruments and the platforms that these models have been run on. We will also give an overview of what is currently possible on handheld mobile devices, including modern DSP strategies using a high level expression language that can be re-targeted across multiple platforms. moForte Guitar and GeoShred will be used as real world examples.

Bio:

Pat Scandalis, CTO and acting CEO, has worked for a number of Silicon Valley High Tech Companies. He has held lead engineering positions at National Semiconductor, Teradyne, Apple and Sun. He has spent the past 18 years working in Digital Media. He was an Audio DSP researcher at Stanford University’s Center for Computer Research in Music and Acoustics (CCRMA). He co-founded and was the VP of engineering for Staccato Systems, a successful spinout of Stanford/CCRMA that was sold to Analog Devices in 2001. He has held VP positions at TuneTo.com, Jarrah Systems and Liquid Digital Media (formerly Liquid Audio). He most recently ran Liquid Digital Media, which developed and operated all online digital music e-commerce properties for Walmart. He holds a BSc in Physics from Cal Poly San Luis Obispo and is currently a visiting scholar at CCRMA, Stanford University.

Our primary speaker will be joined by:

Nicholas J. Porcaro, Chief Scientist, is a software developer proficient in many languages including Objective-C, C++, PHP, JavaScript, Python and Perl. He holds a B.S. in electrical engineering from Texas A&M, and was a visiting scholar at the Center for Computer Research in Music and Acoustics (CCRMA), Stanford University. He was a founder of Staccato Systems, and worked for several other startup companies in a variety of fields – electronic design automation, geophysics, e-commerce, and digital audio. He has also done independent artistic work with 3D graphics with sound. In late 2011 he released an iPhone app called UndAground – New York, a cultural guide to New York City. He is also a jazz pianist.

Dr. Julius O. Smith III, Founding Consultant, teaches a music signal-processing course sequence and supervises related research at the Center for Computer Research in Music and Acoustics (CCRMA). He is formally a professor of music and associate professor (by courtesy) of electrical engineering at Stanford University. In 1975, he received his BS/EE degree from Rice University, where he got a solid grounding in the field of digital signal processing and modeling for control. In 1983, he received the PhD/EE degree from Stanford University, specializing in techniques for digital filter design and system identification, with application to violin modeling. His work history includes the Signal Processing Department at Electromagnetic Systems Laboratories, Inc., working on systems for digital communications, the Adaptive Systems Department at Systems Control Technology, Inc., working on research problems in adaptive filtering and spectral estimation, and NeXT Computer, Inc., where he was responsible for sound, music, and signal processing software for the NeXT computer workstation. In addition, he was a founding consultant for Staccato Systems, Inc., and Shazam Entertainment Ltd., where he co-developed the core technology. Prof. Smith is a Fellow of the Audio Engineering Society and the Acoustical Society of America. He is the author of four online books and numerous research publications in his field.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Dennis Del Favero

Time: Wednesday, January 13th, 12pm.

Location: California NanoSystems Institute, Elings Hall, room 1605.

Abstract:

This seminar investigates a range of Australian Research Council funded projects recently exhibited at venues such as the Sydney Film Festival, ISEA and Chronus Shanghai. Realized through interdisciplinary collaborative research, involving the domains of media aesthetics, interactive narrative and machine learning, these projects create integrated 360-degree 3D immersive environments in which the user interacts with an intelligent digital world. Through its discussion of the aesthetics and AI architecture underpinning these environments, the paper enters into an explanation of what is termed ‘parallel’ aesthetics, a function of the mutually interdependent relationship between human users and autonomous machine images and characters. Understanding interaction as a parallel aesthetic or co-evolutionary narrative, the paper explores parallel aesthetics and co-evolutionary narrative as a dynamic two-way process between human and machine intelligence.

Bio:

Dennis Del Favero is a research artist, Director of the UNSW iCinema Centre, Sydney and Executive Director of the Australian Research Council (Humanities and Creative Arts). His work has been widely exhibited in solo exhibitions in leading museums and galleries such as Sprengel Museum Hannover, Neue Galerie Graz and ZKM Media Museum Karlsruhe and in major group exhibitions including the Sydney Film Festival, Biennale of Architecture Rotterdam, Biennial of Seville and Battle of the Nations War Memorial, Leipzig (joint project with Jenny Holzer). His work is represented by Scharmann & Laskowski Cologne and William Wright Artists Projects Sydney. He is a Visiting Professorial Fellow at ZKM, Germany, Visiting Professorial Fellow at the Academy of Fine Arts, Vienna, Visiting Professor at City University of Hong Kong and Visiting Professor at IUAV University of Venice.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Charlie Roberts

Time: Monday, January 11th, 5pm.

Location: Engineering Science Building, room 2001.

Abstract:

In most canonical live coding performances, programmers code music and/or art while projecting source code, as it is written, for audience consumption. Although the live coding community actively debates both the meaning and necessity of this projection, I propose that visual annotations to source code can playfully help communicate algorithmic development to both performers and audiences. In this talk I will briefly outline the history of live coding, describe prior work in the live coding community using visual annotations to illuminate source code, and show my work with the live coding environment Gibber to make source code (and maybe even audiences) dance. I will conclude with a short performance demonstrating these ideas.

Bio:

Charlie Roberts is an Assistant Professor in the School of Interactive Games and Media at the Rochester Institute of Technology, where his research explores human-centered computing in creative coding environments. He is the primary author of Gibber, a live-coding environment for the browser, and he has given live-coding performances across the US, Europe, and Asia. His recent scholarly work on live coding includes articles in the Computer Music Journal; the Journal of Music, Technology and Education; and an invited chapter in the Oxford Handbook of Algorithmic Music. Charlie earned his PhD from the Media Arts and Technology Program at UC Santa Barbara while working as a member of the AlloSphere Research Group.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.