Trans Ether Active Memory System (TEAMS): MNEMONIC

MNEMONIC is an interactive computer system for the encoding and retrieval of transactive memory elements involving human emotional gesture and musical performance.

MNEMONIC is a two-stage technical/artistic system utilizing the concepts of transactive memory. Technically, it is a device for the archiving and retrieval of human emotional gestures. Artistically, it is a psychologically involved device for creating interactive musical theatre performances.

The first stage of MNEMONIC is the encoding stage. A listener is asked to concentrate on musical phrases. The listener's emotional responses are transcribed by means of a brain wave scanner and facial motion sensors. These responses are recorded in a computer database indexed by the corresponding musical phrase; the listener may also ascribe optional descriptive keywords to the phrase. The combined responses of a large sample of listeners give each musical phrase a general emotional gesture profile.
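The encoding stage can be sketched as a store of listener responses indexed by phrase, from which a per-phrase profile is derived. This is a minimal illustration that assumes each brain wave scan and each facial capture has already been reduced to a fixed-length numeric feature vector (the document does not specify a data format). `EncodedResponse`, `EncodingStore`, and the element-wise averaging used as the "general profile" are hypothetical choices, not MNEMONIC's documented method.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List, Set


@dataclass
class EncodedResponse:
    """One listener's response to one phrase (hypothetical record layout)."""
    listener_id: str
    brainwave: List[float]  # assumed fixed-length feature vector from the scanner
    facial: List[float]     # assumed fixed-length vector from the facial sensors
    keywords: Set[str] = field(default_factory=set)  # optional descriptive keywords


class EncodingStore:
    """Listener responses indexed by musical phrase ID."""

    def __init__(self) -> None:
        self._by_phrase: Dict[str, List[EncodedResponse]] = defaultdict(list)

    def record(self, phrase_id: str, response: EncodedResponse) -> None:
        self._by_phrase[phrase_id].append(response)

    def profile(self, phrase_id: str) -> dict:
        """Element-wise mean over all listeners: one possible 'general profile'."""
        responses = self._by_phrase.get(phrase_id, [])
        if not responses:
            raise KeyError(f"no responses recorded for {phrase_id!r}")
        brain = [mean(column) for column in zip(*(r.brainwave for r in responses))]
        face = [mean(column) for column in zip(*(r.facial for r in responses))]
        keyword_counts: Dict[str, int] = defaultdict(int)
        for r in responses:
            for keyword in r.keywords:
                keyword_counts[keyword] += 1
        return {
            "brainwave": brain,
            "facial": face,
            "keywords": dict(keyword_counts),
            "listeners": len(responses),
        }
```

Averaging is the simplest aggregate; a real system might instead keep per-listener variance so that the retrieval stage can weight reliable features more heavily.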

MNEMONIC's second stage is retrieval. A live performer plays musical phrases that she/he views on a computer screen. Brain wave scanners pick up a listener’s responses, as in the encoding stage but without facial sensing; this input data is sent to MNEMONIC, which searches its database for the closest match. It then reproduces the corresponding facial gestures on a third performer, using electrodes connected directly to the facial muscles. The order of musical phrases is determined either by a preset “storyline” or by real-time updates from any of the human performers. An additional feedback loop is possible if the listener can view the responses of the facial performer or of the audience.
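The phrase ordering described above (a preset storyline that human performers can update in real time) could be handled by a small sequencer. In this sketch, `PhraseSequencer` and its priority rule (a live request is played next, ahead of the storyline) are assumptions made for illustration; the document does not say how live updates and the storyline interact.

```python
from collections import deque
from typing import Deque, Iterable, Optional


class PhraseSequencer:
    """Hands out phrase IDs for the performer's screen.

    Phrases come from a preset storyline, but any phrase requested live by a
    performer is played next (hypothetical priority rule).
    """

    def __init__(self, storyline: Iterable[str]) -> None:
        self._storyline: Deque[str] = deque(storyline)
        self._requests: Deque[str] = deque()

    def request(self, phrase_id: str) -> None:
        """Queue a real-time request from any of the human performers."""
        self._requests.append(phrase_id)

    def next_phrase(self) -> Optional[str]:
        """Live requests first, in arrival order; then the storyline; None when both are empty."""
        if self._requests:
            return self._requests.popleft()
        if self._storyline:
            return self._storyline.popleft()
        return None
```

Inserting requests rather than replacing the storyline keeps the preset dramatic arc intact; a variant could instead discard storyline entries when a performer takes over.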

SYSTEM COMPONENTS

Input:

Facial motion sensors: receive input from the listener during the encoding process

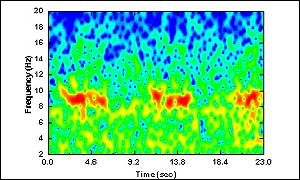

Brain wave scanner: receives input from the listener during both the encoding and retrieval processes

Processing:

Musical Phrase Database: contains MIDI data for a large number of musical phrases, which the computer can output as sound (via synthesis) and/or score display (for human performer playback)
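Outputting a stored phrase as sound requires turning MIDI note numbers into frequencies. Under standard twelve-tone equal temperament with A4 (MIDI note 69) tuned to 440 Hz, a note number n has frequency f = 440 · 2^((n − 69)/12). The sketch below applies that formula and renders a bare sine tone as a stand-in for whatever synthesizer MNEMONIC would actually use; `midi_to_hz` and `sine_tone` are illustrative names, and the 440 Hz reference is an assumption.

```python
import math

A4_MIDI_NOTE = 69   # MIDI note number of concert A
A4_HZ = 440.0       # assumed tuning reference


def midi_to_hz(note: int, a4_hz: float = A4_HZ) -> float:
    """Equal-tempered frequency of a MIDI note number (0 to 127)."""
    if not 0 <= note <= 127:
        raise ValueError("MIDI note numbers run from 0 to 127")
    # Each semitone multiplies frequency by 2**(1/12); 12 semitones double it.
    return a4_hz * 2 ** ((note - A4_MIDI_NOTE) / 12)


def sine_tone(note: int, duration_s: float, sample_rate: int = 44100) -> list:
    """Render one note as raw sine-wave samples in [-1, 1] (stand-in for a synthesizer)."""
    freq = midi_to_hz(note)
    n_samples = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq * i / sample_rate) for i in range(n_samples)]
```

For example, `midi_to_hz(81)` gives 880.0, one octave above A4.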

Emotional Gesture Database: contains a directory of brain wave scans and facial gestures associated with each emotion. Input from the brain wave scanner is compared to the directory entries, and the closest match within a set error threshold is selected.
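The "closest match within an error threshold" lookup can be sketched as a nearest-neighbor search. This assumes scans are fixed-length feature vectors and uses Euclidean distance as the error measure; both the metric and the `closest_emotion` function are hypothetical, since the document names neither.

```python
import math
from typing import Mapping, Optional, Sequence


def closest_emotion(
    scan: Sequence[float],
    directory: Mapping[str, Sequence[float]],
    threshold: float,
) -> Optional[str]:
    """Return the emotion whose stored scan is nearest to `scan`, or None if
    even the nearest one is farther than `threshold` (Euclidean distance)."""
    best_label, best_dist = None, math.inf
    for label, reference in directory.items():
        if len(reference) != len(scan):
            raise ValueError(f"feature length mismatch for {label!r}")
        dist = math.dist(scan, reference)
        if dist < best_dist:
            best_label, best_dist = label, dist
    # Reject the best candidate if it is still outside the error threshold.
    return best_label if best_dist <= threshold else None
```

Returning `None` gives the retrieval stage an explicit "no confident match" case, in which it could, for instance, leave the facial performer's expression unchanged.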

Facial Response Mapper: converts facial motion data from the encoding stage into corresponding electrical data for gesture replication.

“Story” Generator: contains a sequence of desired emotions (indexed by keyword), which a timing system steps through. The musical phrases corresponding to each emotion are sent to the display for performer playback.
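A minimal sketch of the Story Generator, assuming the story is a list of (emotion keyword, duration in seconds) pairs and that each keyword maps to a single phrase, for example the phrase listeners most often tagged with that keyword during encoding. `Cue`, `build_cues`, and `cue_at` are hypothetical names; the timing system is modeled as a lookup of the cue active at a given time.

```python
import bisect
from dataclasses import dataclass
from typing import List, Mapping, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Cue:
    start_s: float   # seconds from the start of the performance
    emotion: str     # desired emotion keyword from the story
    phrase_id: str   # musical phrase to send to the score display


def build_cues(
    story: Sequence[Tuple[str, float]],
    phrase_for_keyword: Mapping[str, str],
) -> List[Cue]:
    """Turn (emotion keyword, duration in seconds) pairs into timed cues."""
    cues, t = [], 0.0
    for emotion, duration in story:
        if emotion not in phrase_for_keyword:
            raise KeyError(f"no phrase indexed under {emotion!r}")
        cues.append(Cue(t, emotion, phrase_for_keyword[emotion]))
        t += duration
    return cues


def cue_at(cues: Sequence[Cue], t: float) -> Optional[Cue]:
    """The cue the timing system should be on at time t (None before the first).
    After the last cue's start time, the last cue stays active."""
    starts = [c.start_s for c in cues]
    i = bisect.bisect_right(starts, t) - 1
    return cues[i] if i >= 0 else None
```

Precomputing start times keeps each timing lookup to a binary search, which matters little for short stories but makes the cue list easy to display or edit ahead of a performance.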

Output:

Muscle Control: a series of finely tuned, low-power electrical impulse generators that trigger facial muscles to replicate the encoded gestures for each emotional response.
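The path from encoded facial motion to muscle stimulation could be sketched as a per-muscle mapping with a hard cap on every channel. Everything here is an assumption for illustration: the linear mapping, the normalized 0 to 1 displacement scale, the per-muscle calibration gains, and the unitless output level. The muscle names in the example are only sample keys.

```python
from typing import Dict, Mapping


def gesture_to_levels(
    displacement: Mapping[str, float],
    gain: Mapping[str, float],
    max_level: float,
) -> Dict[str, float]:
    """Convert per-muscle facial displacement (from encoding) to stimulus levels.

    displacement: muscle name -> normalized displacement, 0.0 (rest) to 1.0
    gain: muscle name -> hypothetical per-muscle calibration factor
    max_level: hard upper bound applied to every output channel
    """
    levels = {}
    for muscle, d in displacement.items():
        d = min(max(d, 0.0), 1.0)                          # clamp to the normalized range
        level = d * gain.get(muscle, 0.0)                  # uncalibrated muscles stay at zero
        levels[muscle] = min(max(level, 0.0), max_level)   # never exceed the hard cap
    return levels
```

Clamping both the input and the output means a sensor glitch or a miscalibrated gain can never drive a channel past `max_level`.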

Musical Score Display: shows MIDI data in standard Western musical notation for performer playback.
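Displaying MIDI data as notation starts with two conversions: note numbers to pitch names (middle C, note 60, is C4 in scientific pitch notation) and durations in ticks to note values. This sketch covers only those steps; it spells every black key as a sharp and handles only undotted values, whereas a real score display would choose spellings from the key signature. `pitch_name` and `note_value` are hypothetical helpers.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Length in quarter-note beats -> note value name (undotted values only).
NOTE_VALUES = {4.0: "whole", 2.0: "half", 1.0: "quarter", 0.5: "eighth", 0.25: "sixteenth"}


def pitch_name(note: int) -> str:
    """Scientific pitch name for a MIDI note number; 60 -> 'C4'."""
    if not 0 <= note <= 127:
        raise ValueError("MIDI note numbers run from 0 to 127")
    octave = note // 12 - 1  # MIDI note 0 is C-1
    return f"{NOTE_NAMES[note % 12]}{octave}"


def note_value(ticks: int, ticks_per_quarter: int) -> str:
    """Name the note value for a duration given in MIDI ticks."""
    beats = ticks / ticks_per_quarter
    if beats not in NOTE_VALUES:
        raise ValueError(f"{ticks} ticks is not a plain note value")
    return NOTE_VALUES[beats]
```

With a common resolution of 480 ticks per quarter note, `note_value(240, 480)` names an eighth note.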

EXTENSIONS/FUTURE WORK:

Musical analysis could extend the capabilities of future versions of MNEMONIC. Pitch tracking and/or score following would allow the system to be used in less controlled situations, e.g. improvised music, or concerts using scores that do not contain exact combinations of the phrases in MNEMONIC's database. In such situations, musical analysis would determine the closest match between the encoded phrases and the music being played, and would interpret the listener’s responses accordingly.
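One way to find the closest encoded phrase to music that is not in the database is approximate sequence matching. The sketch assumes pitch tracking already yields MIDI note numbers, compares pitch intervals rather than absolute pitches (so a transposed phrase still matches), and scores similarity with Levenshtein edit distance. The technique and the `intervals`, `edit_distance`, and `closest_phrase` names are illustrative choices, not part of the original design.

```python
from typing import List, Mapping, Sequence, Tuple


def intervals(notes: Sequence[int]) -> List[int]:
    """Successive pitch differences, so a transposed phrase still matches."""
    return [b - a for a, b in zip(notes, notes[1:])]


def edit_distance(a: Sequence[int], b: Sequence[int]) -> int:
    """Levenshtein distance: insertions, deletions, substitutions each cost 1."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        cur = [i]
        for j, y in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,               # delete x
                           cur[j - 1] + 1,            # insert y
                           prev[j - 1] + (x != y)))   # substitute (free if equal)
        prev = cur
    return prev[-1]


def closest_phrase(
    played: Sequence[int], phrases: Mapping[str, Sequence[int]]
) -> Tuple[str, int]:
    """(phrase ID, distance) of the stored phrase nearest the played notes.
    Raises ValueError if `phrases` is empty."""
    target = intervals(played)
    scored = ((pid, edit_distance(target, intervals(notes))) for pid, notes in phrases.items())
    return min(scored, key=lambda item: item[1])
```

A phrase played a fifth higher than its stored version still scores a distance of 0, which suits performers who transpose or improvise around the encoded material.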

In a research context, MNEMONIC could be used in a variety of applications: testing changes in a subject's emotional responses over time; mapping changes in responses under certain circumstances (stress, drugs, etc.); comparing the brain wave responses of first-generation (listener) and second-generation (viewer of the system) interpreters, etc.