2006W

MAT 256 Course

3.23.2006

Terrence Handscomb (terrence at umail dot ucsb dot edu)

Joriz de Guzman (lawmajor1 at aol dot com)

Dance Dance Revolution and Markov Probability

Popular Culture Representations From an Interdisciplinary Perspective.

Table of Contents

The following links provide quick access to the various sections on this site:

- Project Background

- Project Description

- Project Methods

- Screenshots and Sample Output

- Links

- Team Participants

Project Background

The goal of this project was to develop a media-based interactive installation, with data input and analysis procedures involving a user interface. Our work process involved identifying and resolving some of the dominant issues of interdisciplinary work, in our case a collaboration between an arts major and a science major. We initially based our collaboration model loosely on the working relationship between our two instructors, a signal processing engineer and a media artist, but we later abandoned this model.

We began by identifying our strengths, weaknesses and differences. We presumed these would determine the type of project most likely to result in an effective collaboration. Strengths and differences, we decided, would be major factors contributing to a strong result.

These were:

1. The compatibility of our respective disciplines: Terrence is an MFA student; Joriz is a Computer Science PhD student.

2. Age compatibility: Terrence is a 50-year-old established artist with a successful career. Joriz is fifteen years old, with a full fellowship and the cultural outlook and visual vocabulary of an optimistic teenage child prodigy. Terrence, on the other hand, has a cynical outlook on life and a critical propensity to vocalise his point of view. Age and cultural differences were a major factor in our collaboration.

Joriz likes to dance, and Terrence enjoys satirical representations of popular culture in the arts, so a DDR (Dance Dance Revolution) interface metaphor seemed both exciting and pertinent. The inclusion of popular culture representations in elite arts discourse is often contentious.

Video example of a popular DDR event

Project Description

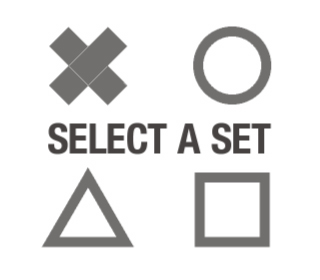

In our application, we incorporated several concepts from information theory. We presented them through an interrelated visualization of the probabilities and symbol distributions of our state machine. We chose the four basic “buttons” of a DDR pad as the states of this machine. Each button, when activated, is identified as a state, and the user's presses drive the visualization model. The model draws on the notions of Markov chains, memory, information encoding/decoding, noise, entropy and machine learning.
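
The following minimal Java sketch makes the state-machine idea concrete. It treats each of the four pad buttons as a state and keeps one step of memory, the previous state, which a second-order model needs. It is an illustration under assumptions, not the project's Processing code. The class and method names (`PadStateMachine`, `press`) and the order of the buttons are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: the four DDR pad buttons as the states of a simple state machine.
class PadStateMachine {
    // One state per pad button (the order is an assumption).
    enum Pad { LEFT, DOWN, UP, RIGHT }

    private Pad current = null;   // state entered by the most recent press (null before any input)
    private Pad previous = null;  // the state before that: one step of memory
    private final List<Pad> history = new ArrayList<>();  // every press, in order

    // A button event moves the machine into that button's state.
    void press(Pad p) {
        previous = current;
        current = p;
        history.add(p);
    }

    Pad current() { return current; }
    Pad previous() { return previous; }
    List<Pad> history() { return history; }

    public static void main(String[] args) {
        PadStateMachine m = new PadStateMachine();
        m.press(Pad.UP);
        m.press(Pad.LEFT);
        System.out.println(m.previous() + " -> " + m.current() + ", presses: " + m.history().size());
    }
}
```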

The audio-visual interaction model immerses the user in an environment where his/her actions stand for the theoretical components of the visualization model. At each button event, the symbol distribution is updated and the corresponding data is recalculated; this serves as the underlying data set. The audio layer adds a noise component, which the user must work to reduce or eliminate. To do this, the user experiments with different combinations of the four symbols. When the user generates a “correct” sequence, the corresponding audio track toggles: it turns off if it is playing and on if it is silent. This toggling can be read as a means of decoding “cryptic” information, and the process of finding the patterns that trigger it is closely related to machine learning.
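
The sequence-matching interaction could be sketched as follows. This sketch makes three assumptions: there is a single audio track, its play state is represented by a boolean, and the “correct” sequence is a hypothetical target. The installation itself loaded MP3 tracks with the Ess library, which this sketch does not model. A sliding window holds only the most recent presses, so a match is detected whenever the last few symbols equal the target.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;

// Hypothetical sketch: toggle an audio track's play state when recent presses match a target sequence.
class SequenceToggle {
    enum Pad { LEFT, DOWN, UP, RIGHT }

    private final Pad[] target;                            // the "correct" sequence (hypothetical)
    private final Deque<Pad> recent = new ArrayDeque<>();  // sliding window of the last target.length presses
    private boolean trackOn;                               // stands in for the real track's play state

    SequenceToggle(Pad[] target, boolean initiallyOn) {
        this.target = target.clone();
        this.trackOn = initiallyOn;
    }

    // Records a press; returns true if this press completed the target sequence.
    boolean press(Pad p) {
        recent.addLast(p);
        if (recent.size() > target.length) recent.removeFirst();
        if (recent.size() == target.length && Arrays.equals(recent.toArray(new Pad[0]), target)) {
            trackOn = !trackOn;  // off if it was playing, on if it was silent
            recent.clear();      // require a fresh sequence before the next toggle
            return true;
        }
        return false;
    }

    boolean isTrackOn() { return trackOn; }

    public static void main(String[] args) {
        SequenceToggle t = new SequenceToggle(new Pad[] {Pad.UP, Pad.UP, Pad.DOWN}, true);
        for (Pad p : new Pad[] {Pad.LEFT, Pad.UP, Pad.UP, Pad.DOWN}) t.press(p);
        System.out.println("track on: " + t.isTrackOn());
    }
}
```

Clearing the window after a match is a design choice. It stops overlapping input from toggling the track twice in quick succession.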

When the user activates the system, his/her motions are logged into a symbol buffer. At this stage in the project's development, the result is shown on the screen as a sequence of binary digits. If the user wishes, sequences of bits can be inverted pairwise.
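
The binary display can be sketched in Java under one possible reading of it. Each of the four symbols is given a two-bit code, and “inverting a pair” is taken to mean complementing both bits of one symbol's code. The bit mapping (LEFT = 00, DOWN = 01, UP = 10, RIGHT = 11) is an assumption, and so is this interpretation of pairwise inversion; neither is taken from the project.

```java
// Hypothetical sketch: render the symbol buffer as bits (2 bits per symbol) and invert one 2-bit pair.
// The mapping LEFT=00, DOWN=01, UP=10, RIGHT=11 is an assumption.
class SymbolBits {
    enum Pad { LEFT, DOWN, UP, RIGHT }  // ordinal() gives 0..3

    // Encodes each symbol as two binary digits, most significant bit first.
    static String encode(Pad[] buffer) {
        StringBuilder sb = new StringBuilder();
        for (Pad p : buffer) {
            int v = p.ordinal();
            sb.append((v >> 1) & 1).append(v & 1);
        }
        return sb.toString();
    }

    // Complements both bits of the pair at pairIndex (0-based), i.e. of one symbol's code.
    static String invertPair(String bits, int pairIndex) {
        if (pairIndex < 0 || 2 * pairIndex + 1 >= bits.length()) {
            throw new IllegalArgumentException("no such pair: " + pairIndex);
        }
        char[] c = bits.toCharArray();
        for (int i = 2 * pairIndex; i <= 2 * pairIndex + 1; i++) {
            c[i] = (c[i] == '0') ? '1' : '0';
        }
        return new String(c);
    }

    public static void main(String[] args) {
        Pad[] buffer = {Pad.UP, Pad.LEFT, Pad.RIGHT};
        String bits = encode(buffer);
        System.out.println(bits + " -> " + invertPair(bits, 0));
    }
}
```

Under this mapping, inverting a pair swaps opposite arrows: LEFT with RIGHT and DOWN with UP.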

Markov chains can be used for prediction: given the current state, they give the probability of moving to each possible next state. A first-order chain conditions on the current symbol alone, while a second-order chain conditions on the last two symbols. In the application, first- and second-order Markov chains are built from the symbol distribution. Each side of the viewing window is associated with a particular state/symbol. Inter-state lines connect these sides, and the curvature of each line is based on the probability that the system will move to the state at the line's other end.
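
As a minimal Java sketch, first- and second-order transition probabilities can be estimated by counting transitions in the logged sequence. The sketch assumes symbols are stored as integers 0 to 3 and uses plain maximum-likelihood counts with no smoothing. The project's own way of computing these probabilities, and of mapping them to line curvature, is not specified here.

```java
// Sketch: estimate first- and second-order Markov transition probabilities by counting.
// Assumes symbols are integers 0..3, one per pad button; no smoothing is applied.
class MarkovEstimate {
    static final int N = 4;  // number of pad symbols

    // p[i][j] = P(next = j | current = i) = count(i -> j) / count(i -> anything)
    static double[][] firstOrder(int[] seq) {
        double[][] p = new double[N][N];
        for (int t = 0; t + 1 < seq.length; t++) p[seq[t]][seq[t + 1]]++;
        for (double[] row : p) normalize(row);
        return p;
    }

    // p[i][j][k] = P(next = k | previous = i, current = j)
    static double[][][] secondOrder(int[] seq) {
        double[][][] p = new double[N][N][N];
        for (int t = 0; t + 2 < seq.length; t++) p[seq[t]][seq[t + 1]][seq[t + 2]]++;
        for (double[][] plane : p) for (double[] row : plane) normalize(row);
        return p;
    }

    // Converts a row of counts to probabilities in place; rows with no observations stay all zero.
    private static void normalize(double[] row) {
        double total = 0;
        for (double c : row) total += c;
        if (total == 0) return;
        for (int k = 0; k < row.length; k++) row[k] /= total;
    }

    public static void main(String[] args) {
        int[] seq = {0, 1, 0, 1, 0, 2};  // hypothetical logged presses
        double[][] p1 = firstOrder(seq);
        double[][][] p2 = secondOrder(seq);
        System.out.println("P(1 | 0) = " + p1[0][1] + ", P(2 | 1, 0) = " + p2[1][0][2]);
    }
}
```

Rows for states that have not yet been followed by any press stay at zero. A display could instead fall back to a uniform 0.25 in that case.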

Entropy measures the average information, or uncertainty, per symbol in a system. With our four symbols, entropy reaches its maximum of log2 4 = 2 bits when all four states are equally probable. We take this uniform distribution as the expected baseline: a state of stasis/stability that reveals nothing about the user's behaviour beyond what was already assumed. When the distribution of symbols departs strongly from this expectation, entropy falls below 2 bits. The size of that drop indicates how much structure, and therefore how much information about the user's pattern of play, the sequence contains.
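
The entropy described above is Shannon entropy, H = −Σ p_i log2 p_i, computed over the four symbol frequencies. The Java sketch below returns H in bits, together with its shortfall from the 2-bit maximum, log2 4 − H. That shortfall equals the Kullback-Leibler divergence of the observed distribution from the uniform one. The class and method names are hypothetical.

```java
// Sketch: Shannon entropy (bits) of the pad-symbol counts and its shortfall from the uniform maximum.
class SymbolEntropy {
    private static double log2(double x) { return Math.log(x) / Math.log(2); }

    // H = -sum p_i log2 p_i over symbols with nonzero counts (0 log 0 is taken as 0).
    // With no presses at all, H is reported as 0.
    static double entropyBits(int[] counts) {
        int total = 0;
        for (int c : counts) total += c;
        if (total == 0) return 0.0;
        double h = 0.0;
        for (int c : counts) {
            if (c == 0) continue;
            double p = (double) c / total;
            h -= p * log2(p);
        }
        return h;
    }

    // log2(number of symbols) - H: zero for a uniform distribution, larger for more structured play.
    static double shortfallBits(int[] counts) {
        return log2(counts.length) - entropyBits(counts);
    }

    public static void main(String[] args) {
        int[] uniform = {5, 5, 5, 5};
        int[] skewed = {17, 1, 1, 1};
        System.out.printf("uniform: H = %.3f bits, shortfall = %.3f%n", entropyBits(uniform), shortfallBits(uniform));
        System.out.printf("skewed:  H = %.3f bits, shortfall = %.3f%n", entropyBits(skewed), shortfallBits(skewed));
    }
}
```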

Project Methods

This project was developed in Processing 0103 (beta), using OpenGL graphics acceleration and Krister Olsson's Ess library for MP3 loading. Links to related sites are presented below:

- The PROCESSING (beta) homepage

- DDR Game - Where exercise gets fun!

- Ess sound library site (AIFF, WAVE, AU, MP3)

Screenshots and Sample Output

Some representative screen captures are shown below, followed by a video depicting a sample run of the application:

Team Participants

Terrence Handscomb

I am an MFA student specialising in critical theory and video. Over the last twelve years I have worked predominantly with media-based art, and I have exhibited extensively in Europe, Australia and New Zealand.

Joriz De Guzman

I am a graduate student in the Department of Computer Science at the University of California, Santa Barbara. My research interests lie in computer vision, with bioinformatics/bio-image informatics as a secondary interest.