Self Organizing Images

Zach Davis

MAT 259, Winter 2006

This project attempts to use the SOM-PAK self organizing map software package to draw out some of the correlations between digital images and the exif data captured along with those images. Exif data is automatically embedded into images by most digital cameras, and includes such parameters as timestamp, camera model, f-stop, and shutter speed. For this project I chose to focus on five exif parameters which I felt would be most directly linked to image content. These five are: aperture, f-stop, flash, focal length, and shutter speed. The Kohonen self organizing map (SOM) was applied to a database of images on each of the five parameters individually, as well as on all five together as a group. An animation was then created from the various steps in the SOM process to show the images actually being sorted. The final product is a C++ program.

The images used and their corresponding metadata were obtained from another project I am working on, a web application that allows users to upload images and annotate them (fotofiti.com). When photos are uploaded to the site, the exif data is automatically parsed and stored in a database along with the corresponding image and any user-provided metadata. At the start of this project, roughly four hundred images were in the fotofiti database. However, for the purposes of this project, any images which were not in JPEG format were excluded, along with any images which lacked information on all five of the exif parameters in question. Images were not selected in any way based on visual content. The final database of images used for this project included 239 images, all of which had information for at least one of the pertinent exif parameters. Each image is stored in several sizes in the database, but for the purposes of this project only the 100 by 100 pixel version of each image was used. The exif data was obtained along with the images, but required some normalization before being input to the SOM-PAK software.

The fotofiti home page

In The Beginning

Early online visualization attempt

Concept Reworked

I would put the motivation for my reworked concept like this: In the past, traditional (analog) photography relied heavily on the photographer's in-depth knowledge of how settings such as f-stop, ISO speed, and shutter speed ultimately affect what is captured on film. In today's digitally dominated world of photography, many cameras don't even allow the user access to these settings, and even on those that do, many people simply use the automatic setting anyway. Thus this project aims to see if, in the digital world, there is still a visually recognizable correlation between camera settings and image content. However, as I began to familiarize myself with the exif data and particularly with how SOM-PAK worked, I got the feeling that the actual process of organizing the images was more informative than the static output of that process on its own. This led to the final concept, which was similar in motivation to the one discussed at the beginning of this section, but replaced the static image output with an animation of the SOM process on each exif parameter.

As previously mentioned, the final form of this project was written in C++, using the OpenGL and GLUT libraries for windowing and graphical output. In addition, Matthew Kennedy's OpenGL Image Loader library was used for decoding the JPEG images and loading them into OpenGL as textures. The project can be broken down into four main tasks: loading the images into OpenGL; running the SOM-PAK software on a given dataset; parsing the .map file created by SOM-PAK; and creating a visual output based on the content of the .map file.

The first section, loading the images into OpenGL, was by far the most computationally expensive portion of the program. Each image took between one and two seconds to load into OpenGL, and as previously mentioned 239 images were being used in the dataset, resulting in roughly a five minute run-time just to load all of the images. All of the images were loaded at the start of the program so that once they were loaded, the animation could run smoothly without the need to dynamically load new images.

For the second task, running the SOM-PAK software, I wanted to use the SOM-PAK code, written in C, as a static library (rather than having to run the executables from within my code), but after spending some futile time attempting to make this option work, I fell back on executing command lines from within my program using the WinExec() function. To do this, I had to create a string which contained the name of the executable I wanted to run along with any parameters I wanted to pass to it. For example, "randinit -xdim 15 -ydim 15 -din aperture.dat -cout aperture.map -topol rect -neigh bubble -rand 984" initializes a map from the dataset contained in aperture.dat, with a rectangular topology, a bubble neighbor-function, and a random seed of 984, and outputs the results to aperture.map. One of these strings had to be created for each SOM-PAK executable, then run with the WinExec() function. In order to be sure that the program did not move on before the output file was created, I used a for loop which tries to open the output file at regular intervals. The program does not move on until the file is successfully opened. Unfortunately, this means that a new file must be created each time a SOM-PAK executable needs to be called.

Each time a new dataset is loaded, the map must be initialized with randinit. Each successive step of the SOM can then be run with vsom. For my animation, I was running 10 step increments of the SOM, meaning that each time I ran vsom, it did 10 iterations of the SOM. I had two vsom modes: a "rough" organizer which had a large radius (12), a higher learning rate (0.05), and would run up to 1,000 iterations; and a "fine" organizer, which had a smaller radius (2), a lower learning rate (0.02), and would run up to 10,000 iterations. In addition, in order to add labels to the map to determine where to place the images, vcal had to be run once each time a new map was to be displayed.
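As a rough illustration of the two steps described above (building the command string and then waiting for the output file), here is a minimal sketch. It is not the project's actual code: the helper names buildRandinitCommand and waitForFile are hypothetical, the flags are copied from the randinit example quoted above, and the string would still have to be handed to WinExec() or a similar call. Unlike the loop described above, this version gives up after a fixed number of attempts so that a failed run cannot hang the program.

```cpp
#include <cassert>
#include <chrono>
#include <fstream>
#include <sstream>
#include <string>
#include <thread>

// Hypothetical helper: assembles the randinit command line quoted in the text
// for one exif parameter (e.g. "aperture" -> aperture.dat / aperture.map).
std::string buildRandinitCommand(const std::string& param,
                                 int xdim, int ydim, int seed) {
    assert(xdim > 0 && ydim > 0);
    std::ostringstream cmd;
    cmd << "randinit -xdim " << xdim << " -ydim " << ydim
        << " -din " << param << ".dat"
        << " -cout " << param << ".map"
        << " -topol rect -neigh bubble -rand " << seed;
    return cmd.str();
}

// Hypothetical helper: tries to open `path` at regular intervals.
// Returns true as soon as the file can be opened, false after maxAttempts.
bool waitForFile(const std::string& path, int maxAttempts,
                 std::chrono::milliseconds interval) {
    for (int attempt = 0; attempt < maxAttempts; ++attempt) {
        std::ifstream probe(path);
        if (probe.is_open()) {
            return true;
        }
        std::this_thread::sleep_for(interval);
    }
    return false;
}
```

A caller would build the string, launch it, and then call something like waitForFile("aperture.map", 100, std::chrono::milliseconds(50)) before parsing. Note that being able to open a file only shows that it exists, not that the writer has finished, which is one reason a fresh output file name per call is the safer choice.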

The third task, parsing the map, essentially just involved opening the .map file to be parsed, reading it line by line, and creating a data structure based on the information read. The first line of each map file contains information about the map, including the map dimensions, topology, and neighbor-function. Each successive line contains the weight values for one node in the map and the label for the image with the closest (in Euclidean distance) weight vector, if there is one. The weight vectors and image associations are recorded for each node, and stored for use in creating the visual output.
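The parsing step might look roughly like the sketch below. The struct and function names (MapNode, SomMap, parseMap) are hypothetical, and the header field order (vector dimension, topology, x size, y size, neighbor-function) is my assumption about the .map layout rather than something stated above, so it should be checked against a real file. The parser reads from any std::istream, which makes it easy to try on a small string before pointing it at a file.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// One map node: its weight vector and the label of the closest image, if any.
struct MapNode {
    std::vector<double> weights;
    std::string label;  // empty when no image is associated with the node
};

struct SomMap {
    int dim = 0, xdim = 0, ydim = 0;
    std::string topology, neighborhood;
    std::vector<MapNode> nodes;  // in file order
};

// Assumed header layout: "<dim> <topology> <xdim> <ydim> <neighborhood>".
// Returns false if the header is malformed or the node count is wrong.
bool parseMap(std::istream& in, SomMap& out) {
    std::string line;
    if (!std::getline(in, line)) return false;
    std::istringstream header(line);
    if (!(header >> out.dim >> out.topology >> out.xdim >> out.ydim
                 >> out.neighborhood)) return false;
    if (out.dim <= 0 || out.xdim <= 0 || out.ydim <= 0) return false;

    out.nodes.clear();
    while (std::getline(in, line)) {
        // Skip blank lines and lines starting with '#'.
        std::size_t first = line.find_first_not_of(" \t\r");
        if (first == std::string::npos || line[first] == '#') continue;

        std::istringstream row(line);
        MapNode node;
        double w = 0.0;
        for (int i = 0; i < out.dim && (row >> w); ++i) {
            node.weights.push_back(w);
        }
        if (node.weights.size() != static_cast<std::size_t>(out.dim)) return false;
        row >> node.label;  // leaves label empty if the line has no label
        out.nodes.push_back(node);
    }
    return out.nodes.size() ==
           static_cast<std::size_t>(out.xdim) * static_cast<std::size_t>(out.ydim);
}
```

Checking the node count against xdim times ydim catches a map file that was opened before SOM-PAK finished writing it.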

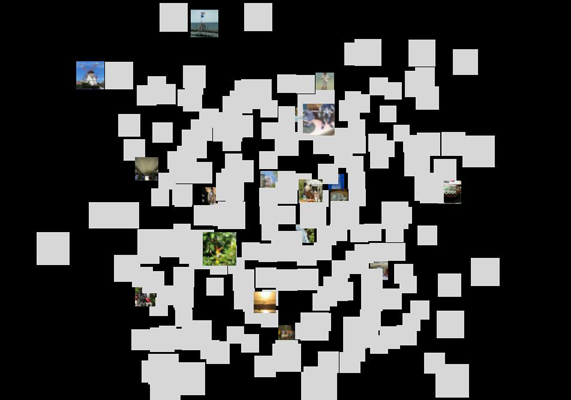

The final task was to actually display the information generated from the first three tasks. The output is a 15 by 15 grid of squares representing the 225 nodes of the map. If a node had an image associated with it when the map was parsed, this image is applied as a texture to the square. If no image is associated with a given node, that square is left "blank", a solid light gray color (the background is black). The height of each square is calculated based on the Euclidean distance between the weight vectors of neighboring nodes. The greater the difference between a node and its surrounding neighbors, the greater the "height" of the square off of the xy-plane (out of the screen for the default viewing angle). This creates a landscape of staggered squares, where images which are grouped together and on roughly the same plane have been found to be similar, and differences in height show the differences found between neighboring nodes.
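One way to compute such a height is sketched below. The text above only says that the height is based on the Euclidean distance between neighboring weight vectors; averaging over the up-to-four direct neighbors on the rectangular grid is my assumption, as are the names euclideanDistance and nodeHeight and the row-major storage of the grid.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Weights = std::vector<double>;

// Euclidean distance between two weight vectors of equal length.
double euclideanDistance(const Weights& a, const Weights& b) {
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// Height of node (x, y): the mean distance to its direct neighbors
// (left, right, up, down). The grid is stored row-major, so node (x, y)
// lives at index y * xdim + x. Averaging is an assumed choice.
double nodeHeight(const std::vector<Weights>& grid,
                  int xdim, int ydim, int x, int y) {
    assert(grid.size() == static_cast<std::size_t>(xdim) * ydim);
    assert(x >= 0 && x < xdim && y >= 0 && y < ydim);
    static const int dx[] = {1, -1, 0, 0};
    static const int dy[] = {0, 0, 1, -1};

    double total = 0.0;
    int count = 0;
    for (int k = 0; k < 4; ++k) {
        const int nx = x + dx[k];
        const int ny = y + dy[k];
        if (nx < 0 || nx >= xdim || ny < 0 || ny >= ydim) continue;
        total += euclideanDistance(grid[y * xdim + x], grid[ny * xdim + nx]);
        ++count;
    }
    return count > 0 ? total / count : 0.0;
}
```

With this definition a region of similar nodes sits near height zero, so a map that flattens to a single plane indicates that neighboring weight vectors have become nearly identical.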

The first task, loading the images, is done only once. But the next three tasks are done repeatedly to create snapshots of the SOM at various points in the process. These snapshots are displayed in a continuous fashion, thus creating an animation which shows both how the images are being moved and grouped in the map and how the weight vectors are changing.

After studying the results of the project, both the static images and the animations, it was clear that, for the methods and dataset used, no patterns or correlations were emerging from running the SOM on the five chosen exif parameters. The static results showed most of the images to be on the same plane, indicating that there was widespread similarity throughout the images and no distinct grouping was found. This finding was backed by the animations. In the animations for all of the parameters, during the "rough" organization (where the radius was 10 nodes), the maps did not seem to reach any sort of stable point, with images freely moving from one side of the map to the other. The "landscape" of the maps was also very telling, as the images quickly converged from a variety of different heights to a single plane, again indicating widespread homogeneity within the data.

I would like to continue with this work, as I strongly feel that there is much to be learned from the metadata of images in relation to how we as humans perceive those images visually. It is also clear that how the SOM is initialized, as well as how the data itself is formatted, has a significant effect on the outcome of the map, and thus more experimentation is warranted in an attempt to extract more interesting and meaningful results from this process. Most exciting to me personally is the possibility of training the SOM with images which have been manually labeled as indoor/outdoor, landscape/architecture, night/day, and so forth, and then running the SOM on fresh data to see how those images are then organized. While my results thus far show little more than homogeneity (which is not to be discounted; I feel that it points out an important fact about how automatic settings on digital cameras adversely affect the uniqueness of digital photographs), I am confident that more exciting (or at least more concrete) results can be garnered with further work in this context.

Focal length final map

259.exe - For Windows XP and 2000

Source Code - C++ and Visual Studio .NET project files