MAT 594 - 2008S

JAVIER VILLEGAS - Slices: Segmenting Luminance Images to Change the Elements of a Scene

This project explores monocular cues for depth perception, together with computer vision for tracking and interaction, through a double relation between the observer and a 3D scene: the observer looks at the objects, and the observer's position determines the vantage point.

The geometrical distortion caused by the relative positions of an observer and an object has been studied for centuries. Projecting 3D objects onto planes, and being able to generate these projections artificially, opens different avenues of exploration: changing the shape of an object changes its perceived position in space.
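A minimal sketch of the geometry behind this, assuming a pinhole model in which the eye sits in front of a screen plane at z = 0 and scene points lie on or behind it: each point is drawn where the ray from the eye to the point crosses the screen. The function names and the coordinate convention are illustrative assumptions, not taken from the original implementation. The usage lines show two consequences: an object twice as large and twice as far from the eye produces the same image (so shape and depth can trade off), and when the eye moves sideways, deeper points shift more on the screen (motion parallax).

```python
from typing import Tuple

Point3 = Tuple[float, float, float]


def project(point: Point3, eye: Point3) -> Tuple[float, float]:
    """Project `point` onto the screen plane z = 0 as seen from `eye`.

    Assumed convention (illustrative only): the eye has z > 0 and scene
    points have z <= 0, i.e. they sit on or behind the screen.
    """
    px, py, pz = point
    ex, ey, ez = eye
    t = ez / (ez - pz)  # the ray E + t(P - E) reaches z = 0 at this t
    return (ex + t * (px - ex), ey + t * (py - ey))


def scale_from_eye(point: Point3, eye: Point3, k: float) -> Point3:
    """Move `point` k times as far from the eye along the same ray."""
    px, py, pz = point
    ex, ey, ez = eye
    return (ex + k * (px - ex), ey + k * (py - ey), ez + k * (pz - ez))


if __name__ == "__main__":
    eye = (0.0, 0.0, 10.0)
    p = (1.0, 0.0, -10.0)
    # Same image for the original point and its "twice as far" copy.
    print(project(p, eye), project(scale_from_eye(p, eye, 2.0), eye))

    # Move the head 2 units to the right: the far point follows the head
    # more than the near point does.
    moved = (2.0, 0.0, 10.0)
    near, far = (0.0, 0.0, -1.0), (0.0, 0.0, -10.0)
    print(project(near, moved)[0] - project(near, eye)[0],
          project(far, moved)[0] - project(far, eye)[0])
```

A point lying on the screen plane itself does not move at all as the head moves, which is why the layers behind it appear to slide relative to one another.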

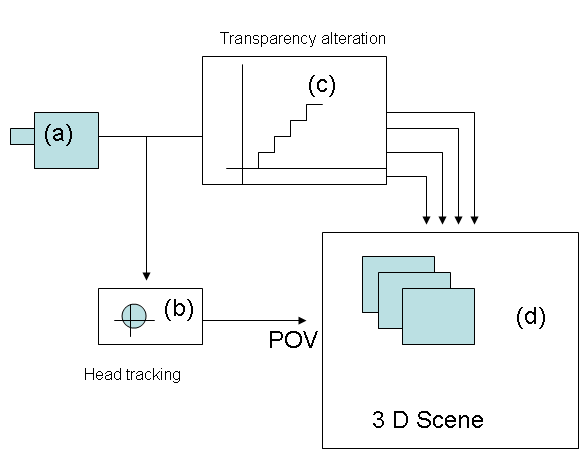

Fig 1 shows the general scheme of the system:

In (a), the image is captured from a webcam using cvcam, the camera-capture library of OpenCV.

The CamShift algorithm [1] is trained on the skin color of the observer and is then used to track the position of his or her head (b).
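Step (b) can be sketched in plain Python under a few assumptions: the frame has already been converted to a grid of hue values in the range 0 to 179 (the OpenCV 8-bit convention), a rectangle on the observer's face is available for training, and 16 histogram bins are used. The sketch builds a hue histogram from the training patch, back-projects it into a per-pixel skin probability, and runs mean shift, moving a search window to the centroid of the probability inside it until it stops moving. CamShift as described in [1] additionally resizes the window from the zeroth moment after each convergence; that step is left out here. All function names are hypothetical.

```python
from typing import List, Tuple

HueImage = List[List[int]]          # hue per pixel, assumed in 0..179
ProbImage = List[List[float]]       # skin probability per pixel, 0.0..1.0
Window = Tuple[int, int, int, int]  # x, y, width, height


def hue_histogram(img: HueImage, roi: Window, bins: int = 16) -> List[float]:
    """Train a skin-color model: hue histogram of the ROI, peak scaled to 1."""
    x0, y0, w, h = roi
    hist = [0.0] * bins
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            hist[img[y][x] * bins // 180] += 1
    peak = max(hist)
    return [v / peak for v in hist] if peak > 0 else hist


def back_project(img: HueImage, hist: List[float]) -> ProbImage:
    """Replace each pixel's hue with the histogram value of its bin."""
    bins = len(hist)
    return [[hist[v * bins // 180] for v in row] for row in img]


def mean_shift(prob: ProbImage, window: Window, max_iter: int = 20) -> Window:
    """Move the window to the probability centroid until it stops moving."""
    x, y, w, h = window
    rows, cols = len(prob), len(prob[0])
    for _ in range(max_iter):
        m00 = m10 = m01 = 0.0
        for yy in range(max(0, y), min(rows, y + h)):
            for xx in range(max(0, x), min(cols, x + w)):
                p = prob[yy][xx]
                m00 += p
                m10 += p * xx
                m01 += p * yy
        if m00 == 0.0:
            break  # nothing to track inside the window
        cx, cy = m10 / m00, m01 / m00
        # Re-centre: a window starting at x covers x..x+w-1, centre x+(w-1)/2.
        nx, ny = round(cx - (w - 1) / 2), round(cy - (h - 1) / 2)
        if (nx, ny) == (x, y):
            break  # converged
        x, y = nx, ny
    return (x, y, w, h)


if __name__ == "__main__":
    # Synthetic 20x20 frame: background hue 120, a 4x4 "skin" blob of hue 10.
    frame = [[10 if 12 <= x <= 15 and 12 <= y <= 15 else 120 for x in range(20)]
             for y in range(20)]
    model = hue_histogram(frame, roi=(12, 12, 4, 4))
    print(mean_shift(back_project(frame, model), window=(8, 8, 6, 6)))
```

Starting from a window that only partly overlaps the blob, the iterations pull the window until its centre sits on the blob's centre, which is the behaviour the head tracker relies on from frame to frame.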

In (c), one color plane of the image (luminance or any of the RGB components) is used to define the different segmentation regions.
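One way to implement step (c), sketched below, is to quantize the chosen plane into equal-width bands, one band per region. The equal-width thresholds, the choice of six regions (to match the six planes used in the next step) and the ITU-R BT.601 weights for luminance are assumptions; the text does not say how the region boundaries were chosen.

```python
from typing import Callable, Dict, List, Tuple

RGB = Tuple[int, int, int]  # 8-bit components, 0..255


def luminance(p: RGB) -> float:
    """Luma using the ITU-R BT.601 weights."""
    r, g, b = p
    return 0.299 * r + 0.587 * g + 0.114 * b


# Selectable color planes: luminance or one of the RGB components.
PLANES: Dict[str, Callable[[RGB], float]] = {
    "luma": luminance,
    "r": lambda p: p[0],
    "g": lambda p: p[1],
    "b": lambda p: p[2],
}


def segment(img: List[List[RGB]], plane: str = "luma",
            n_regions: int = 6) -> List[List[int]]:
    """Label each pixel with the index (0 = darkest) of its equal-width band."""
    value = PLANES[plane]
    return [[min(n_regions - 1, int(value(p) * n_regions / 256)) for p in row]
            for row in img]


if __name__ == "__main__":
    row = [(0, 0, 0), (200, 0, 0), (255, 255, 255)]
    print(segment([row]), segment([row], plane="r"))
```

The same saturated red pixel lands in a dark band when luminance is used and in a bright band when the red component is used, so switching the plane changes which parts of the scene end up grouped together.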

Finally, in (d), transparent masks of the segmented regions are blended into an array of 6 parallel planes.
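A minimal sketch of step (d), assuming the region labels from step (c) and a hypothetical assignment in which region k is drawn on plane k, with the planes evenly spaced in depth. Each mask keeps the original color where the pixel belongs to the region and is fully transparent elsewhere. The `composite` function applies the standard back-to-front "over" blend (the same operation OpenGL performs with `glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)`); because every pixel belongs to exactly one region, viewing the stack head-on reproduces the original frame, and only an off-axis viewpoint separates the layers. Names, depth range and plane order are illustrative assumptions.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]
Layer = List[List[RGBA]]


def region_masks(labels: List[List[int]], img: List[List[RGB]],
                 n_planes: int = 6) -> List[Layer]:
    """One RGBA layer per region: opaque where label == k, transparent elsewhere."""
    return [
        [[(r, g, b, 255) if lab == k else (0, 0, 0, 0)
          for lab, (r, g, b) in zip(lrow, irow)]
         for lrow, irow in zip(labels, img)]
        for k in range(n_planes)
    ]


def plane_depths(n_planes: int = 6, near: float = 0.0, far: float = 5.0) -> List[float]:
    """Evenly spaced depths; plane k (hypothetically region k) sits at depths[k]."""
    step = (far - near) / (n_planes - 1)
    return [near + k * step for k in range(n_planes)]


def composite(layers: List[Layer]) -> List[List[RGB]]:
    """Blend layers back to front (last layer = farthest) with the 'over' operator."""
    h, w = len(layers[0]), len(layers[0][0])
    out = [[(0.0, 0.0, 0.0) for _ in range(w)] for _ in range(h)]
    for layer in reversed(layers):  # farthest plane first
        for y in range(h):
            for x in range(w):
                r, g, b, a = layer[y][x]
                alpha = a / 255
                dr, dg, db = out[y][x]
                out[y][x] = (r * alpha + dr * (1 - alpha),
                             g * alpha + dg * (1 - alpha),
                             b * alpha + db * (1 - alpha))
    return [[tuple(round(c) for c in px) for px in row] for row in out]


if __name__ == "__main__":
    img = [[(10, 20, 30), (100, 110, 120), (250, 240, 230)]]
    labels = [[0, 2, 5]]
    layers = region_masks(labels, img)
    print(plane_depths(), composite(layers) == img)
```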

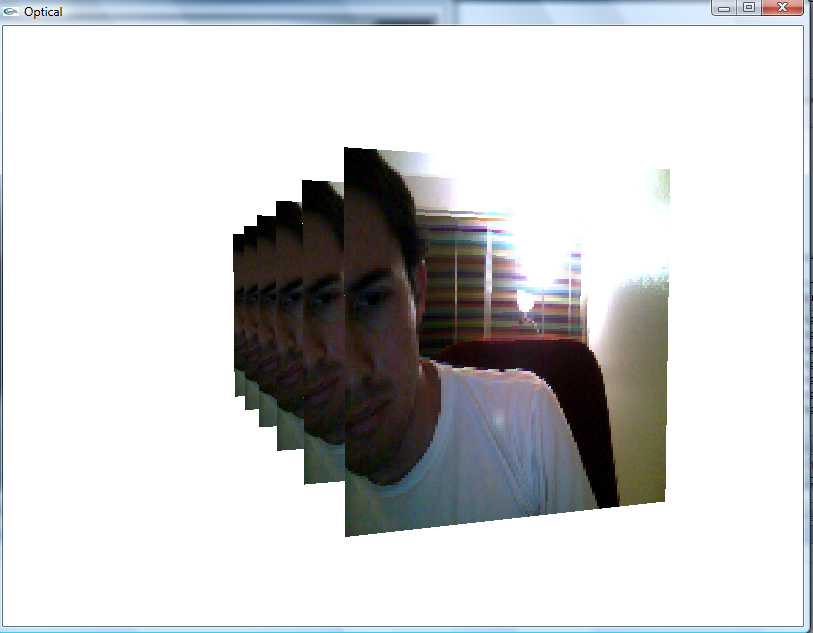

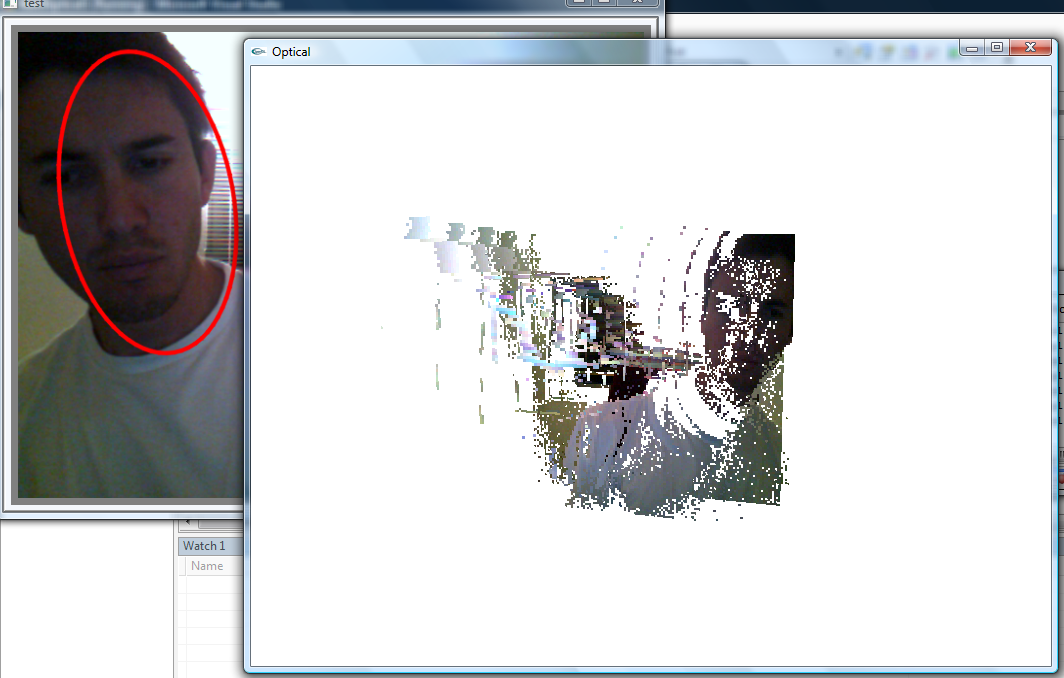

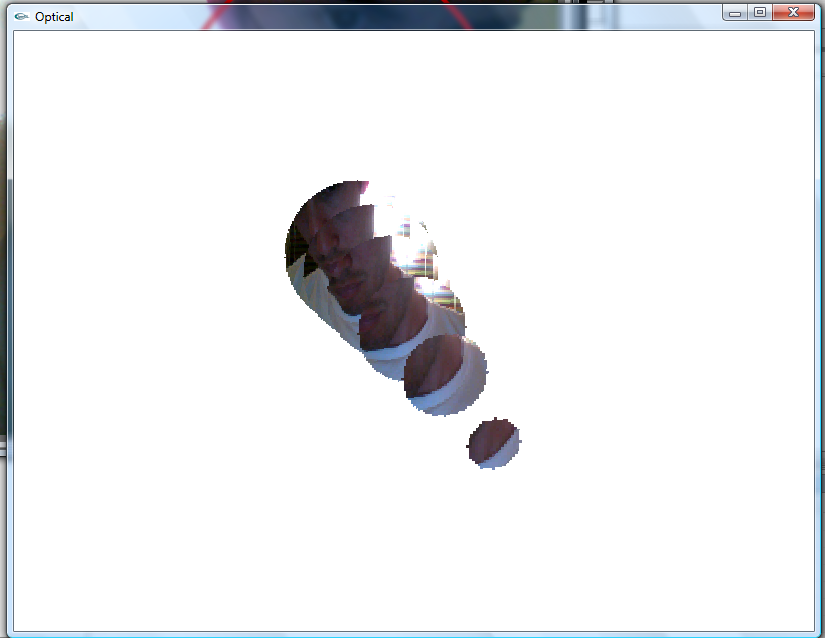

Fig 2 to Fig 4 show different output modes of the system:

Fig 2

Fig 3

Fig 4

The system was implemented to run in real time, using OpenCV to capture and process the image data and OpenGL to model the 3D scene. The implementation shows the potential of combining OpenGL and OpenCV in real-time applications. Possible future explorations include the use of more complex 3D geometries and the inclusion of previous frames of the video data.

[1] Bradski. Computer Vision Face Tracking For Use in a Perceptual User Interface. Intel Technology Journal, 1998. Microcomputer Research Lab, Santa Clara, CA, Intel Corporation.

[2] OpenCV beta 4 API. http://www.cs.indiana.edu/cgi-pub/oleykin/website/OpenCVHelp

[3] Kelley, Stephen. Computer Graphics Using OpenGL. Pearson Prentice Hall, 2007.