2006W

MAT 256 Course

3.23.2006

Bo Bell (bobell at mat dot ucsb dot edu)

John Roberts (roberts dot john at gmail dot com)

Social Sonification

A low attention aural user interface for communicating events in the environment.

Project Description

The goal of this project was to combine our research interests - sonification and human-computer interaction - to create a pleasing, low-attention aural user interface that could notify an audience of specific events. Our idea was to create an interface that could monitor a video stream, captured in a high-traffic social setting, for low-probability events - events that were "out of the ordinary" for the setting under surveillance. The interface that we have created consists of two related parts: the aural interface and the graphical interface.

We designed the application to rely on the aural interface for notification of important changes in a video scene (how the user specifies where an "important change" can occur is described below, in the discussion of the graphical side of this project's interface). Our goal was to create a pleasing aural interface that responded to events in the video stream. The aural interface we created uses properties of the video stream: sonification is based on the color values present in key frames that are registered as the video plays. At the beginning of the video stream, the sound produced is harmonious and at a low volume. This sound represents the background of the scene, when no disturbances are occurring. As disturbances are detected, the sound rises in volume to notify the user, and becomes more dissonant to aurally reflect the disturbance. The events that can cause such disturbances are specified with the graphical portion of the interface, described below.
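The behaviour described above (quiet and harmonious at rest, louder and more dissonant under disturbance) can be sketched in a few lines. This is only an illustration under stated assumptions, not the Max/MSP patch itself: the function name `alert_voicing`, the 0-to-1 `disturbance` scale, the gain range, and the choice of a perfect fifth sliding toward a minor second are all hypothetical.

```python
def alert_voicing(disturbance, base_hz=220.0):
    """Map a disturbance level in [0, 1] to a gain and a two-tone voicing.

    Assumption: consonance is modelled as a perfect fifth (3:2) and
    dissonance as a minor second (16:15); the source does not say which
    intervals or gain range the original patch uses.
    """
    d = min(max(disturbance, 0.0), 1.0)      # clamp to [0, 1]
    consonant = 3.0 / 2.0                    # perfect fifth above the base
    dissonant = 16.0 / 15.0                  # minor second above the base
    ratio = consonant + d * (dissonant - consonant)
    gain = 0.2 + 0.8 * d                     # quiet at rest, full when disturbed
    return {"gain": gain, "partials_hz": (base_hz, base_hz * ratio)}


if __name__ == "__main__":
    for level in (0.0, 0.5, 1.0):
        print(level, alert_voicing(level))
```

With no disturbance the two tones sit a fifth apart at low gain; as the level approaches 1 the upper tone slides toward a minor second above the base and the gain rises.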

The graphical interface provides the user with the ability to select regions of interest in the video stream - specifically, regions where motion is not expected to occur. Selection of such areas creates a division in the video scene into "hotspots", what we call the areas where the user has specified that motion will not typically occur, and background areas, which are simply all areas in the scene that are not hotspots. This user driven segmentation of the scene allows us to provide, on the graphical interface side, visual cues when hotspot areas are impinged upon. During periods of time where there is no motion in the hotspots, the hotspot region is blurred and the background region is rendered as normal. When motion is detected in the hotspot region, the blurring effect is inverted, causing the hotspot region to come into focus against the now-blurred background region. This creates the effect of centering user attention on an area of interest, and removing the background as a visual distraction when motion has been detected in a hotspot.
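The hotspot logic described above can be sketched as follows. The source does not say how motion is detected, so this example assumes simple frame differencing over a rectangular hotspot; the function names, the `box` format, and both thresholds are hypothetical, and frames are plain nested lists of grayscale values rather than Jitter matrices.

```python
def hotspot_motion(prev, curr, box, pixel_delta=25, min_fraction=0.05):
    """Return True if enough pixels inside the hotspot changed between frames.

    prev, curr: grayscale frames as lists of rows (values 0..255).
    box: (x0, y0, x1, y1), with x1 and y1 exclusive (assumed format).
    """
    x0, y0, x1, y1 = box
    total = (x1 - x0) * (y1 - y0)
    if total <= 0:
        return False
    changed = 0
    for y in range(y0, y1):
        for x in range(x0, x1):
            if abs(curr[y][x] - prev[y][x]) > pixel_delta:
                changed += 1
    return changed / total >= min_fraction


def region_to_blur(motion_in_hotspot):
    """Blur the hotspot while it is quiet; blur the background on motion."""
    return "background" if motion_in_hotspot else "hotspot"


if __name__ == "__main__":
    previous = [[0] * 4 for _ in range(4)]
    current = [row[:] for row in previous]
    current[1][1] = 255  # something moved inside the top-left hotspot
    moving = hotspot_motion(previous, current, (0, 0, 2, 2))
    print(moving, region_to_blur(moving))
```

Requiring a minimum fraction of changed pixels, rather than any single changed pixel, is one way to keep sensor noise from flipping the blur back and forth.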

Project Motivation

Surveillance: This project takes the idea of "user surveillance" and warps it into a space in which the areas being surveyed are shifted in focus. One typically thinks of "hotspots" in a surveillance context as a means to focus on certain areas of a video stream. We are more interested in creating a sonification of those areas, where a user doesn't need to visually focus on them; their attention will be drawn to the areas when relevant activity occurs therein.

Frequency: The audio component of the project is based on a continually updated transformation of frequency. The larger area of the scene is summarized as a mean color; the light frequency of this color is converted into audio frequency at a low frame rate. Whenever activity occurs in the "hotspots," the light energy of that spot is used to drive the audio feedback that alerts the user to the activity. This audio mapping converts the color space of the input video from RGB (raw color) to HSL (hue, saturation, luminance). The hue is mapped linearly to sound frequency, while the saturation and luminance values correspond to the diffusion and amplitude of the audio stream.
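As a rough illustration of this mapping, the sketch below averages a set of RGB pixels, converts the mean to HSL with Python's standard `colorsys` module, and maps hue linearly onto a frequency range. The function names and the 220-880 Hz range are assumptions for the example; the source does not state the range used in the patch, nor how "diffusion" is realized in the audio.

```python
import colorsys


def mean_rgb(pixels):
    """Average a non-empty list of (r, g, b) tuples with components 0..255."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def color_to_audio(rgb, f_low=220.0, f_high=880.0):
    """Map an RGB color to audio parameters via HSL.

    Hue (0..1) is mapped linearly onto [f_low, f_high]; saturation stands
    in for diffusion and luminance for amplitude, both left in 0..1.
    The frequency range is an assumed placeholder.
    """
    r, g, b = (c / 255.0 for c in rgb)
    # colorsys returns the components in H, L, S order.
    hue, luminance, saturation = colorsys.rgb_to_hls(r, g, b)
    return {
        "frequency_hz": f_low + hue * (f_high - f_low),
        "diffusion": saturation,
        "amplitude": luminance,
    }


if __name__ == "__main__":
    region = [(255, 0, 0), (0, 255, 0), (0, 0, 255)]
    print(color_to_audio(mean_rgb(region)))
```

Note that a perfectly gray mean has zero saturation and an undefined hue, which `colorsys` reports as 0, so such a region lands at the bottom of the frequency range.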

Noise: We incorporate noise (in the engineering sense of the term, as opposed to its sense in sonification) as a means of further directing focus to areas of interest. Though noise typically produces unwanted artifacts in images and video, we introduce noise as an artistic component in both the aural and graphical interfaces to communicate changes in the scene. Aurally, this noise is sonified as dissonance when motion is detected in the hotspots; graphically, noise is incorporated as a blurring effect that focuses user attention on areas of interest as events occur within the video stream.

Project Methods

This project was developed in Max/MSP, using additional libraries. Links to related sites are presented below:

- The Max/MSP product homepage

- Jitter, an extension to Max/MSP

- cv.jit, computer vision extension for Jitter

Screenshots

Some representative screen captures are provided below:

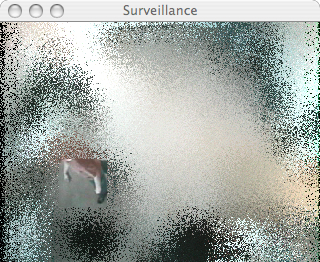

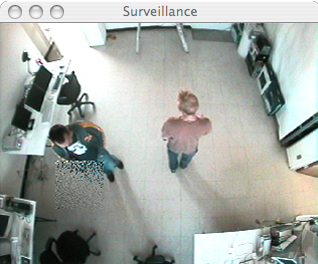

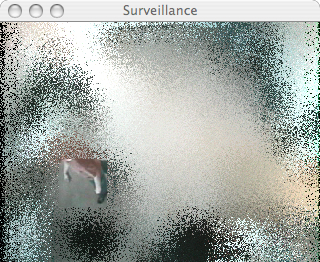

A screenshot of the application showing no activity in the "hotspot".

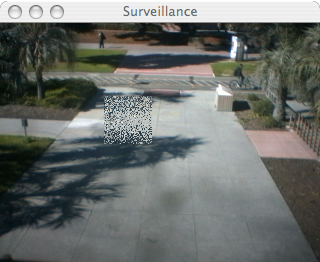

A screenshot of the application showing activity in the "hotspot". Note the inversion of the blurring effect.

Project Source

The Max/MSP patch file developed for this project can be downloaded from here.

References

For ease of reference, and for those who just need to know more, we have included a list of related links, articles, publications, and projects that help to explain some of the background knowledge we acquired for this project, or that served as sources of inspiration for our brilliance in design:

- Color Spaces

- RGB Color Space

- HSV Color Space

- HSL Color Space

- Sonification

- Intro to Sonification (PDF)

- Artistic Representations of Social Data

- Information Aesthetics

- Mapping Social Behavior ... to Auditory Display (PDF)

- Wireless Gesture Controllers to Affect Information Sonification (PDF)

- Related Digital Artwork

- Julie Freeman's Lake Project

- Sonification of Network Traffic

- Information MuSIC Site

- Stetho System

Team Participants

Bo Bell

Bo Bell is a media artist and composer working in electronic music and multimedia theater production. He has presented sound and stage projects in multiple venues (Theatres, Schools, Clubs, House Parties, etc.) in New York, California, Europe, and Japan. He is a Ph.D. student in either the Music or Media Arts and Technology departments at UCSB, depending on who you ask.

John Roberts

John Roberts is a grad student in the Computer Science department at UCSB. John was the other half of the design team, responsible for artistic input into the realization of the project presented here. John also developed this site.