Media Art & Technology

Ciao: A Multi-User Audiovisual Interactive Installation using Mobile Phones

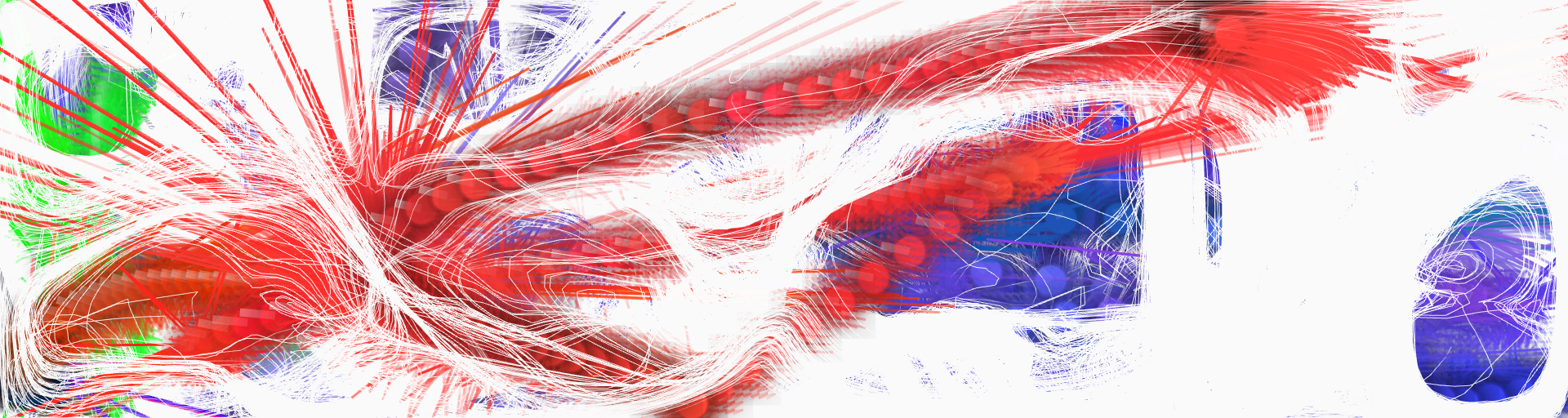

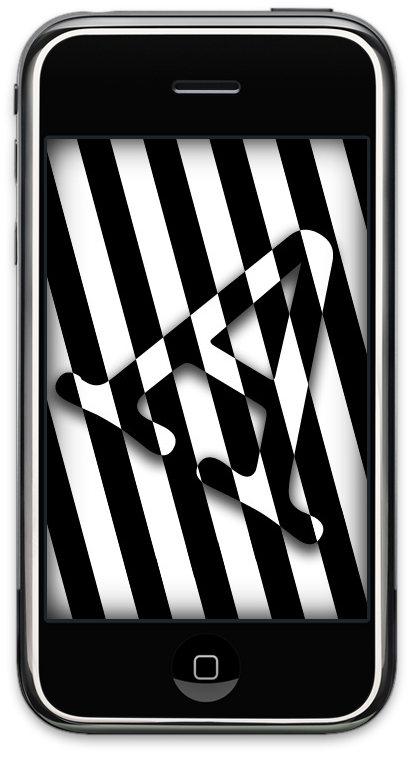

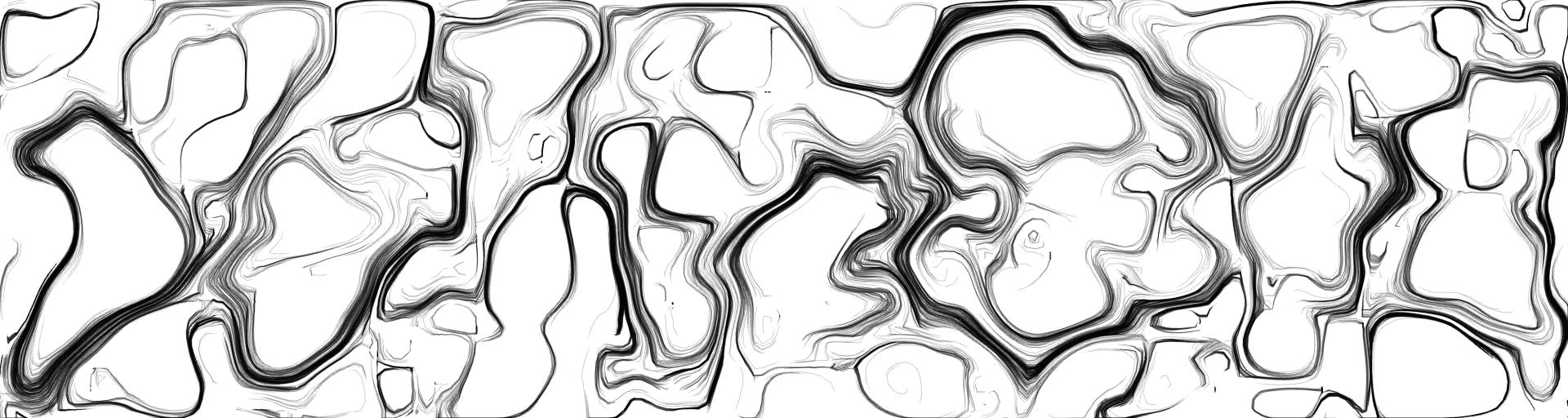

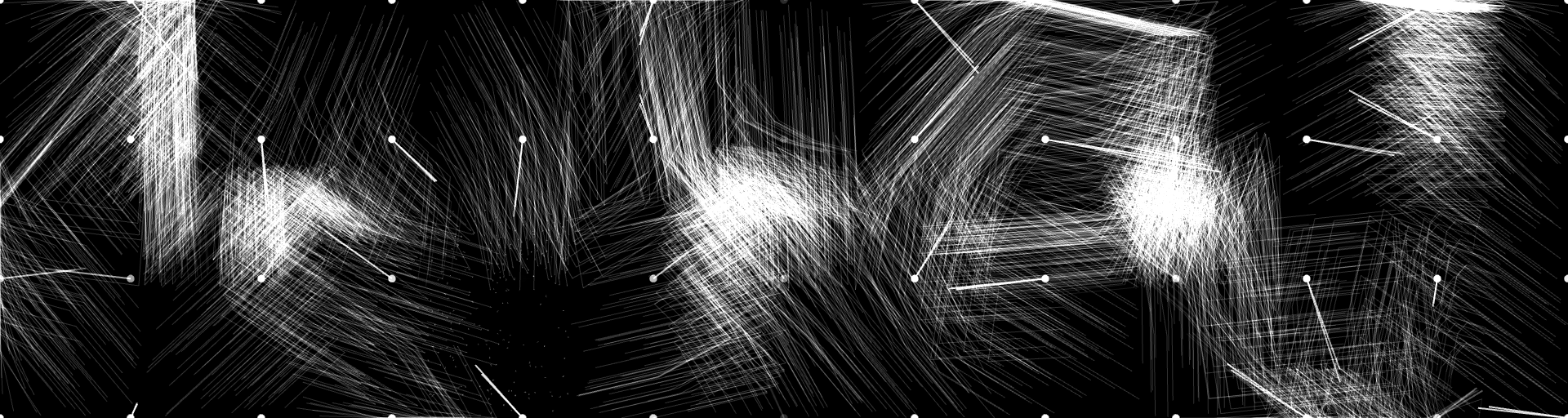

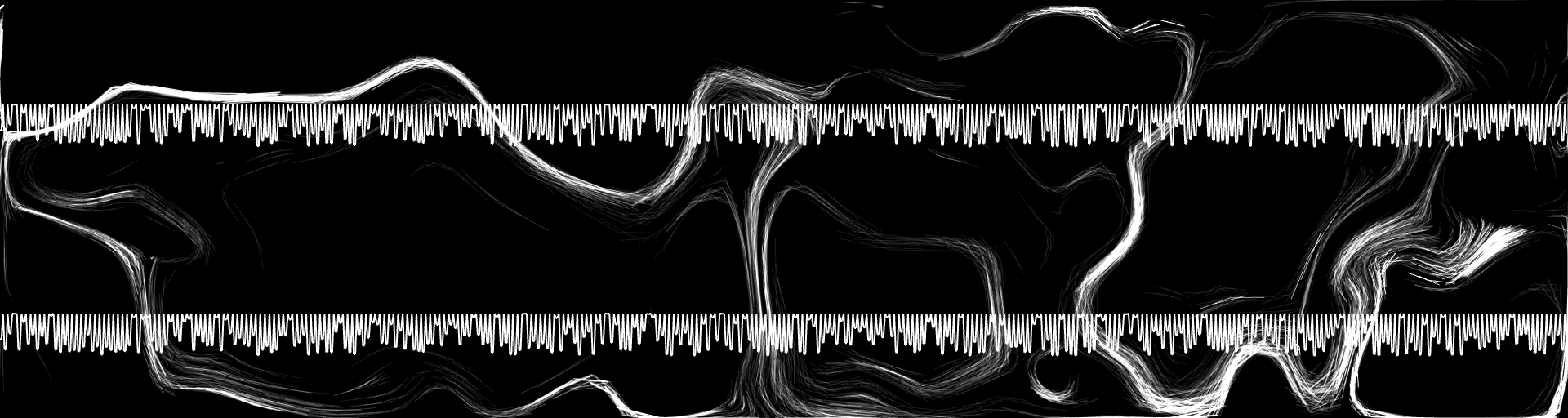

Ciao is a real-time interactive audiovisual installation in which audience members engage with projected visuals and sound through their mobile phones (iPhone or iPod Touch). The images above show actions in the virtual space produced by participants interacting with Ciao through their phones. An iPhone application was designed to enable this type of interaction, and a Processing/Java application was built to handle and orchestrate multiple users engaging with the installation. The installation consists of an empty room with a large wall onto which three synchronized projectors project a single wide image. The space also contains a stereo sound setup to allow basic spatialization of sound.

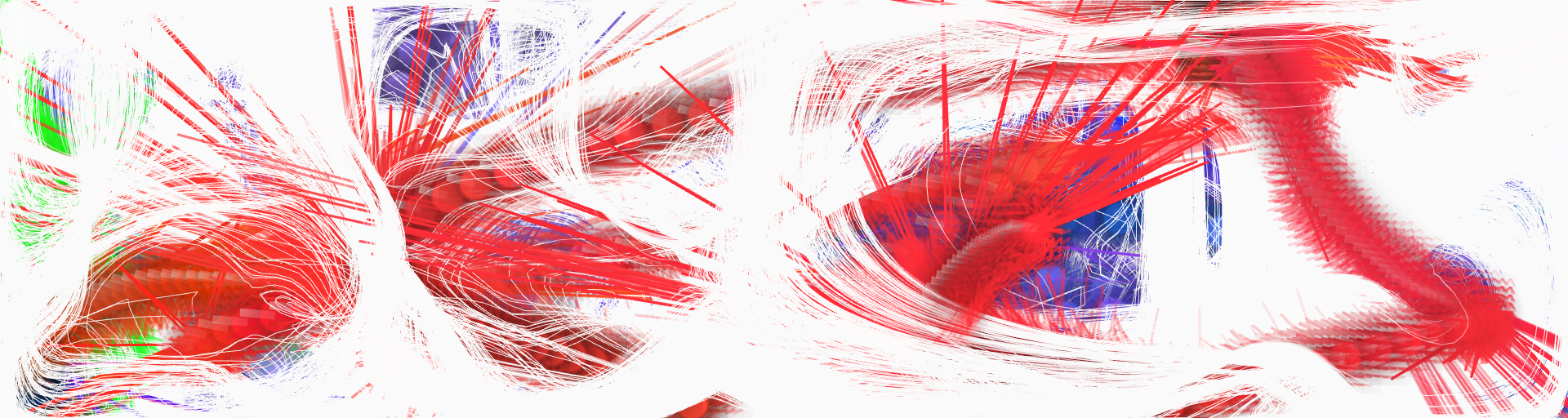

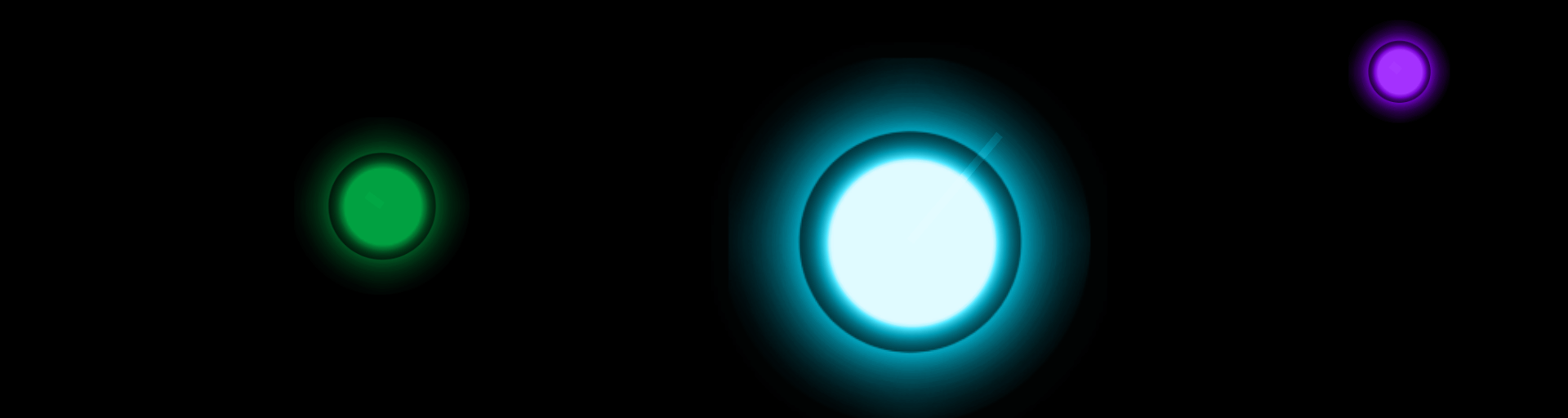

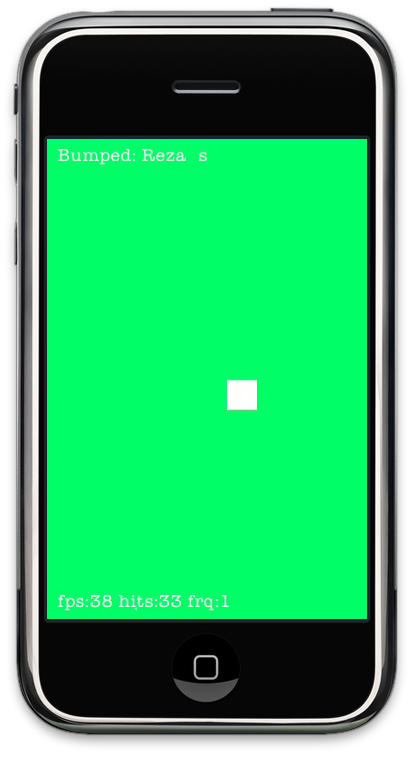

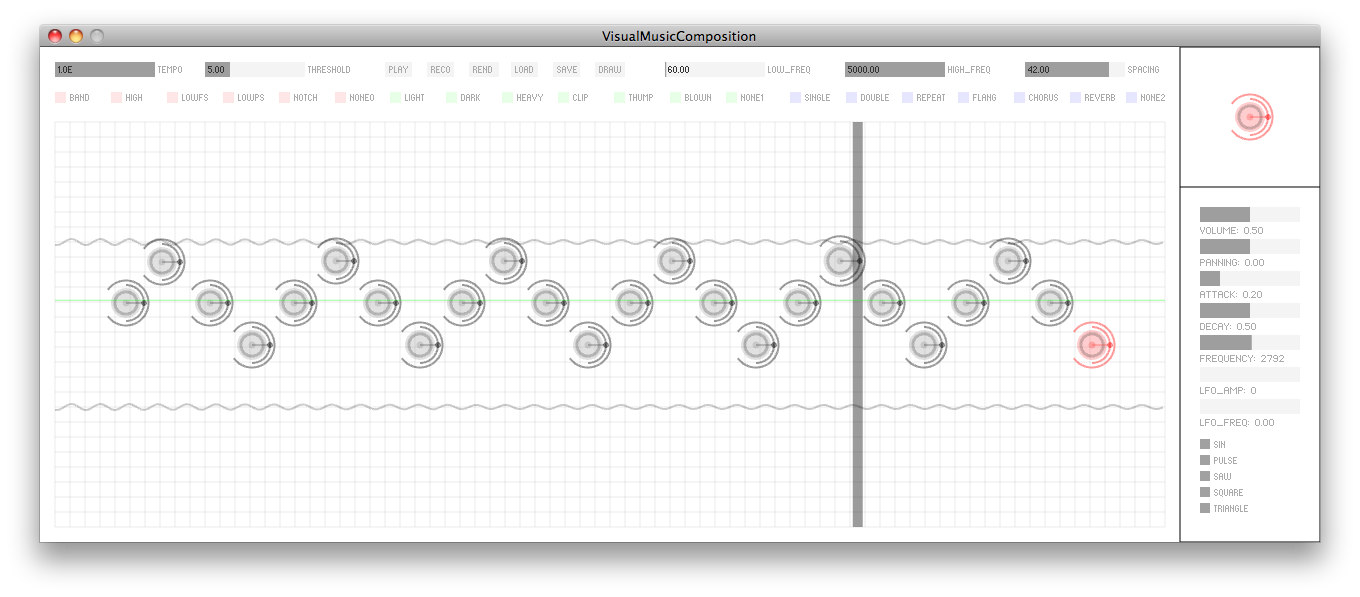

The iPhone application provides a minimal yet natural interface for interacting with the installation. It tracks finger positions on screen (shown by the white square in the images above), taps, proximity, device orientation, and audio input from the microphone to provide multimodal input to the installation. It provides multimodal feedback through the phone's display, speaker, and vibration. These input and output mechanisms are critical in allowing an audience member to engage with and feel part of the installation. The Processing application handles how each user is displayed and sonified in the installation. It also differentiates users from one another by representing every participant with their own avatar on screen. When a user launches the iPhone application, it connects to the Processing application, where orbs represent the individual participants. In the first image above there are three participants in the installation.

Users can use their phone screen as a trackpad to move their avatar in the virtual space. The iPhone's orientation determines the color of the phone screen, which matches the color of the user's orb in the virtual space. If a user covers the proximity sensor on their phone, their avatar is hidden in the installation space (their orb disappears). While hiding, a participant cannot do anything else in the virtual space until they come out of hiding. Tapping the screen with two fingers triggers a sound in the installation; moving around in the installation also triggers the user's sound. Tapping the screen with three fingers releases a missile into the virtual space, which can hit other participants.
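The gesture rules above can be summarized as a small decision function. The following is only an illustrative sketch: the class, enum, and parameter names are hypothetical and are not taken from the Ciao source, and it assumes that the Processing side receives a finger count, a tap/drag flag, and the proximity state for each event.

```java
// Hypothetical sketch: names are illustrative, not from the Ciao source.
final class GestureMapper {
    enum Action { NONE, MOVE_AVATAR, TRIGGER_SOUND, FIRE_MISSILE }

    /**
     * @param proximityCovered true while the proximity sensor is covered (avatar hidden)
     * @param isTap            true for a tap, false for a drag
     * @param fingers          number of fingers involved in the gesture
     */
    static Action map(boolean proximityCovered, boolean isTap, int fingers) {
        if (proximityCovered) {
            return Action.NONE;                 // a hidden avatar cannot act
        }
        if (isTap) {
            if (fingers == 2) return Action.TRIGGER_SOUND;
            if (fingers == 3) return Action.FIRE_MISSILE;
            return Action.NONE;
        }
        // A one-finger drag uses the screen as a trackpad.
        return fingers == 1 ? Action.MOVE_AVATAR : Action.NONE;
    }
}
```

Checking the proximity state first keeps the "hidden participants cannot act" rule in one place instead of repeating it for every gesture.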

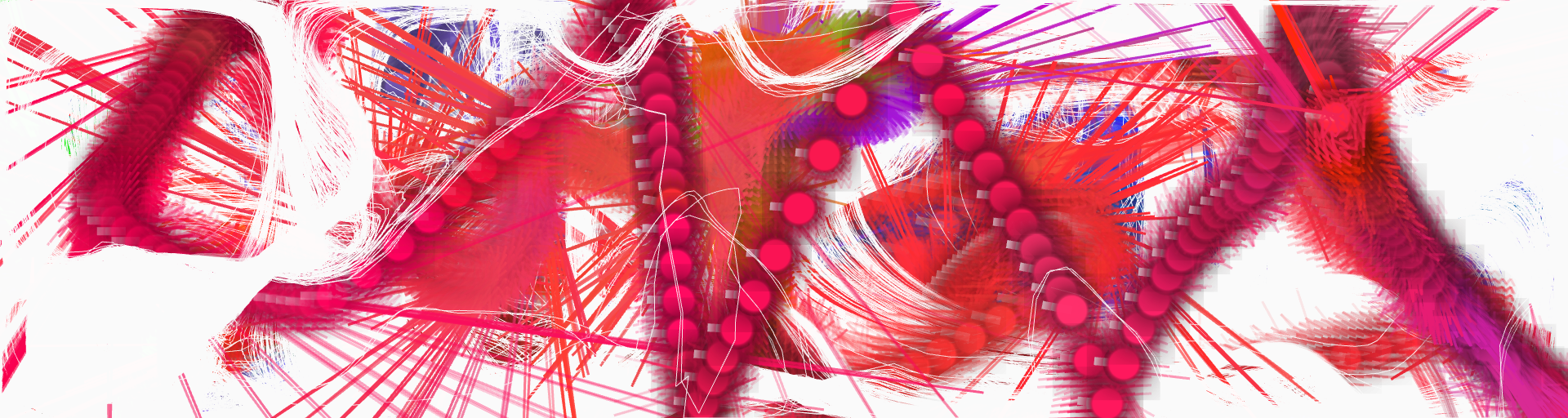

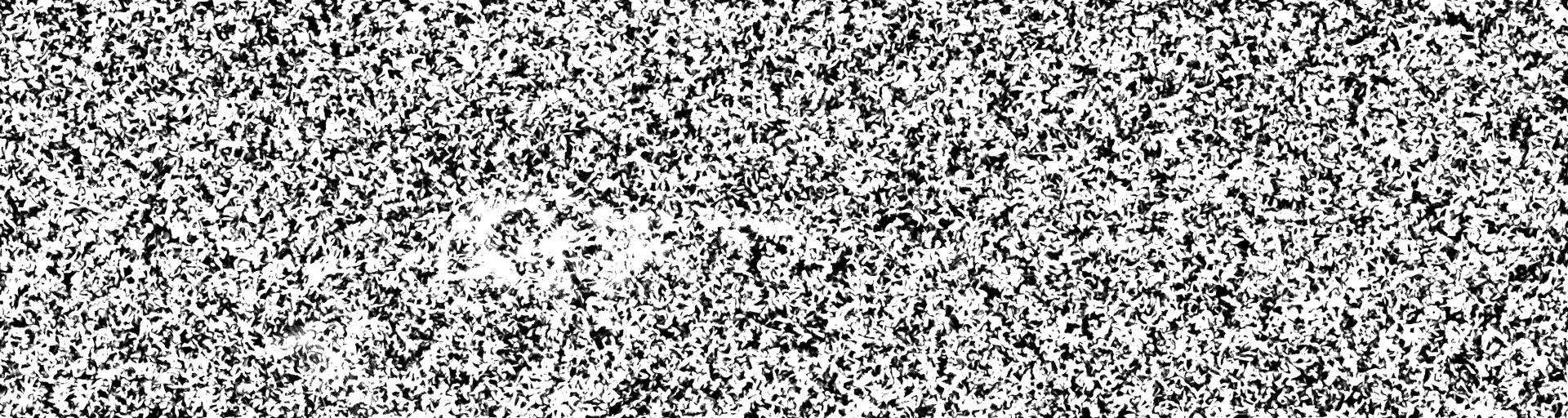

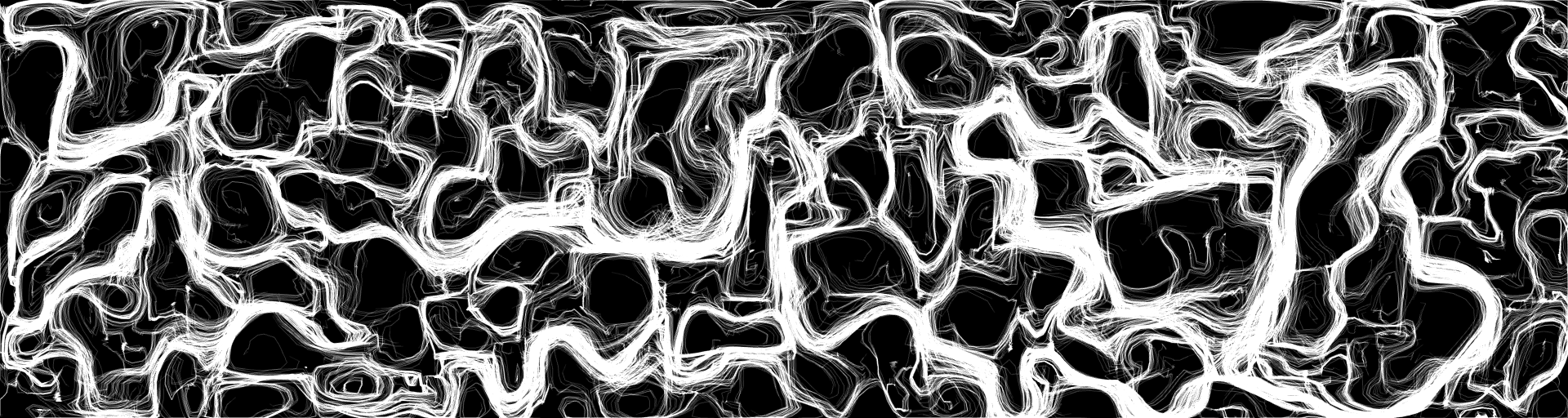

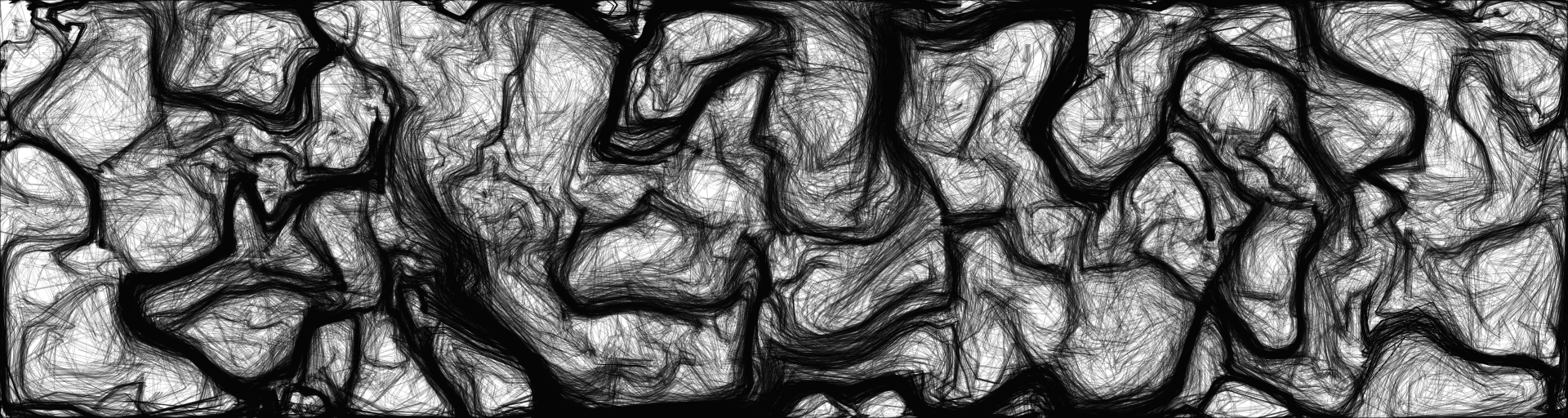

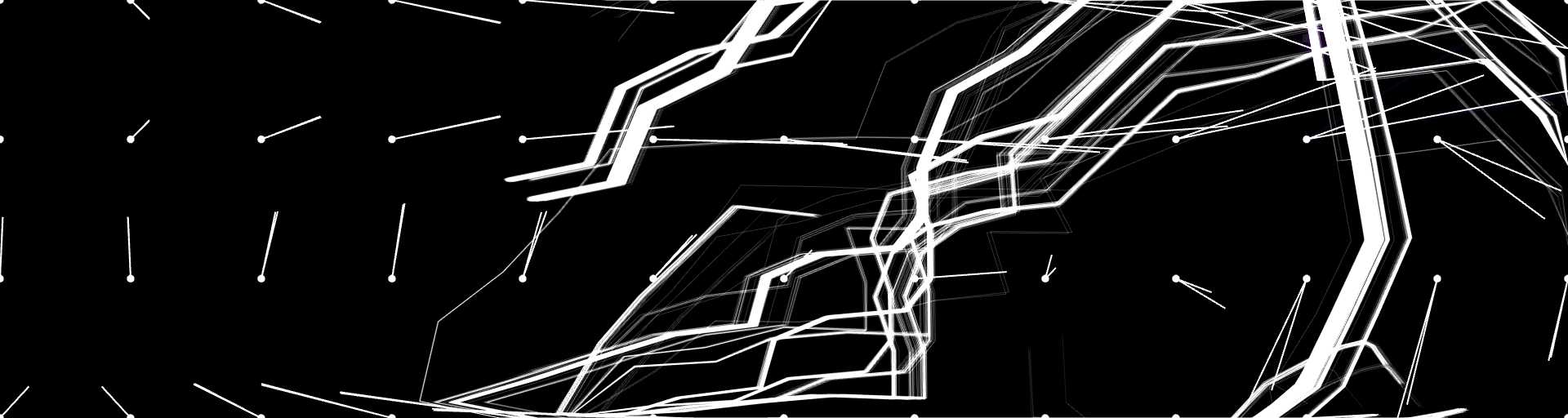

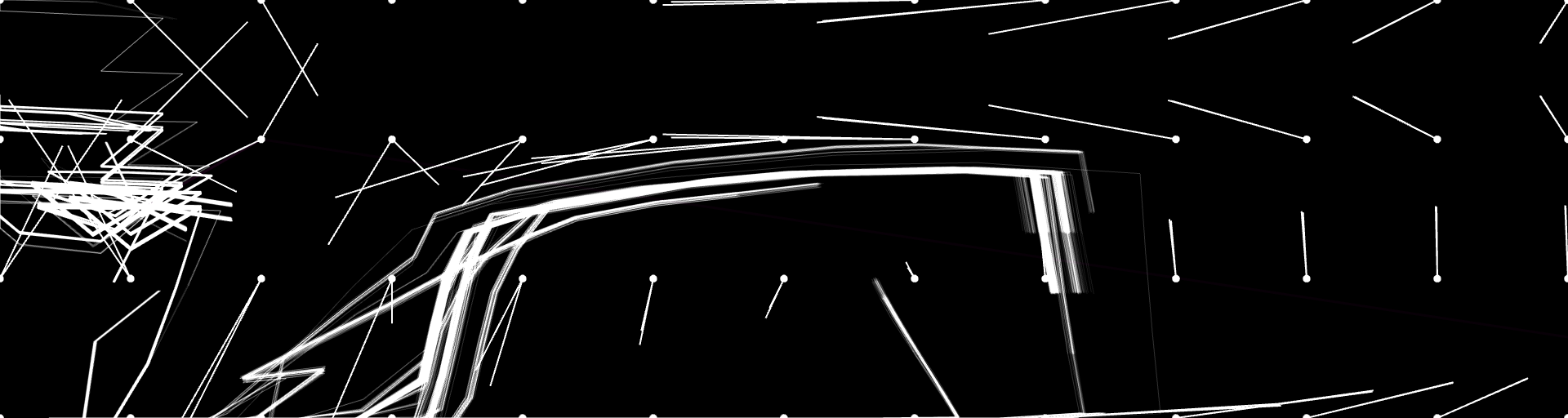

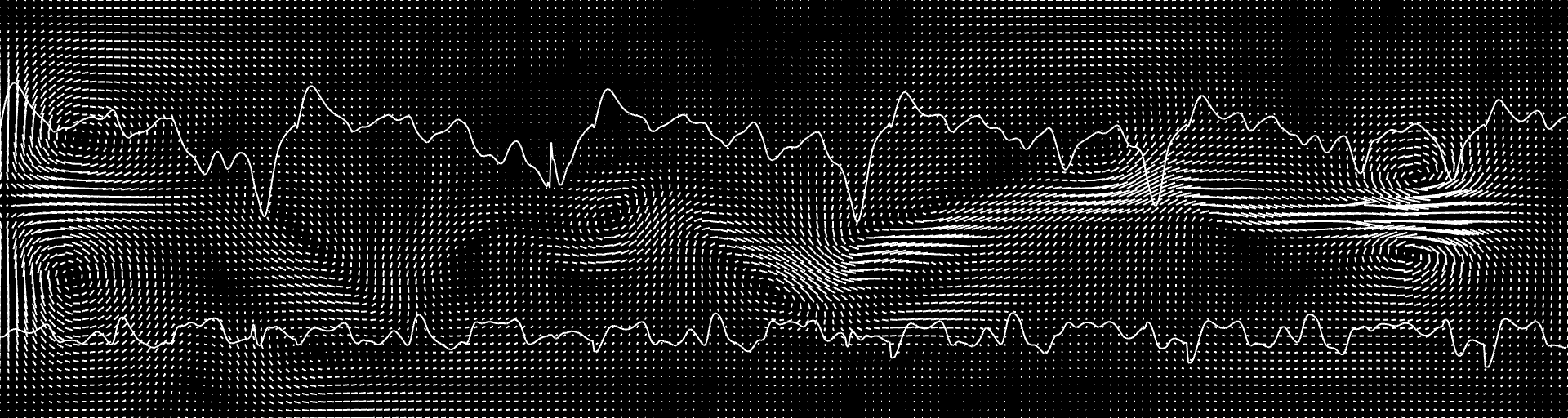

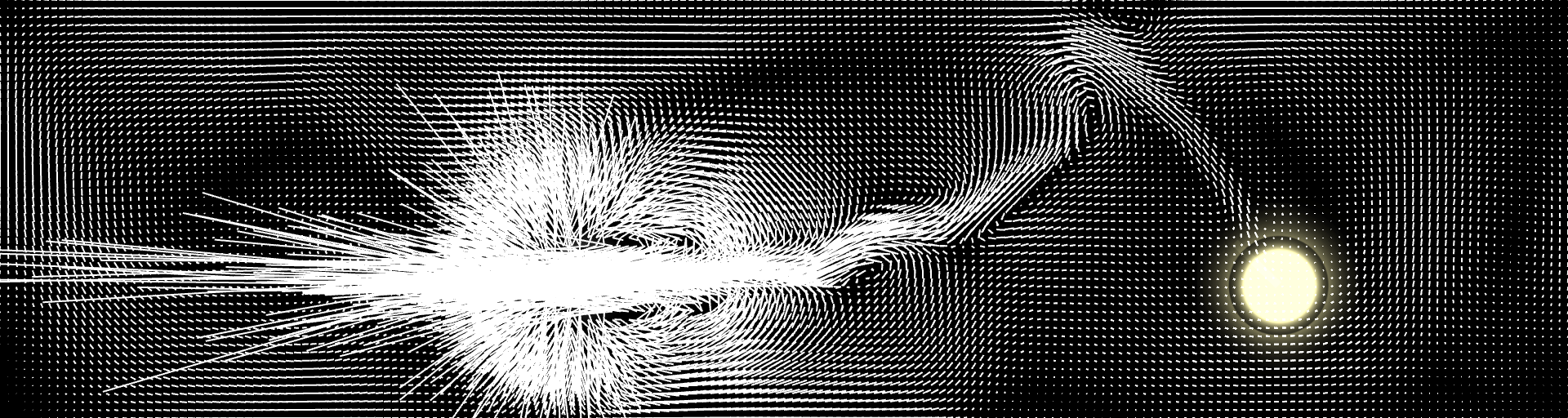

All visual elements in the virtual space are affected by the field or can affect the field. For example, a participant can move across the field, plowing it and creating waves that can move other participants in the virtual space. If a participant is not touching their phone's touch screen, their movement in the virtual space is defined by the fluid field. Additionally, by moving through the field a participant increases the density where they have traveled, leaving a trail of high density, as shown in the image above. If a participant releases a missile, the missile will plow the field, as seen in the second-to-last image in the series above. Lastly, the field is rendered so that its density, direction, and magnitude can be visualized, as seen in the fourth and fifth images in the series above. Black represents areas of low density, white represents areas of high density, and the waves in the rendered pseudo-surface (or lines) represent the directions and magnitudes of the field cells.

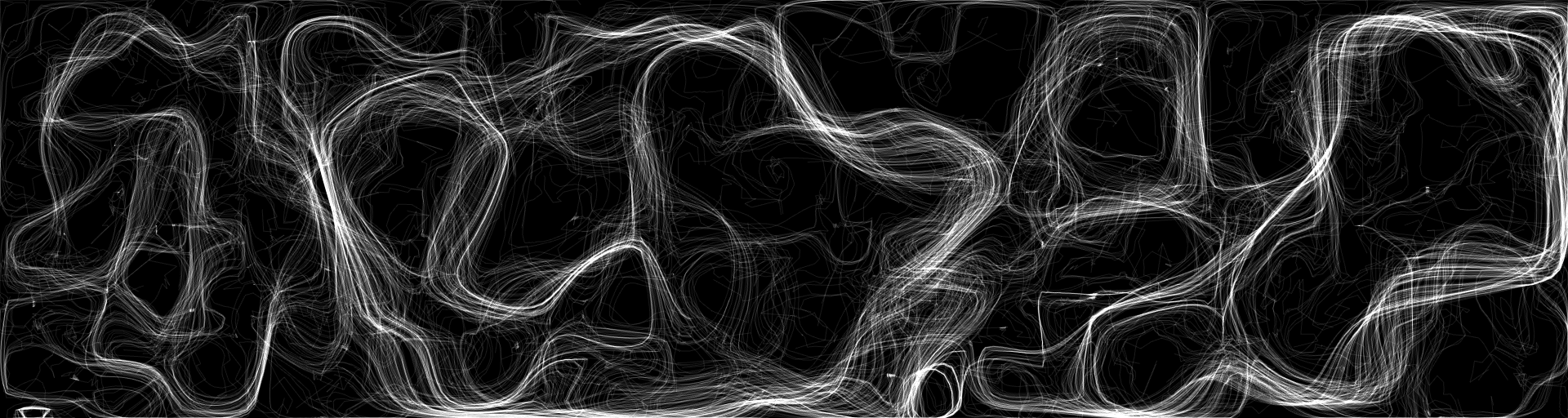

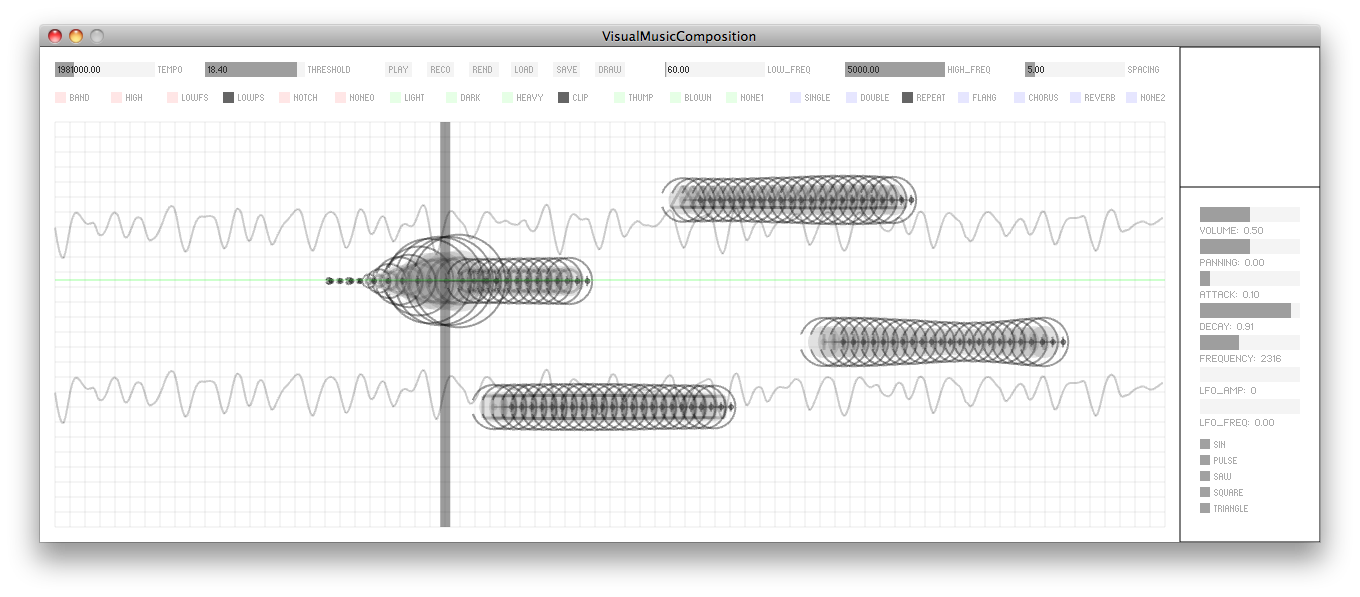

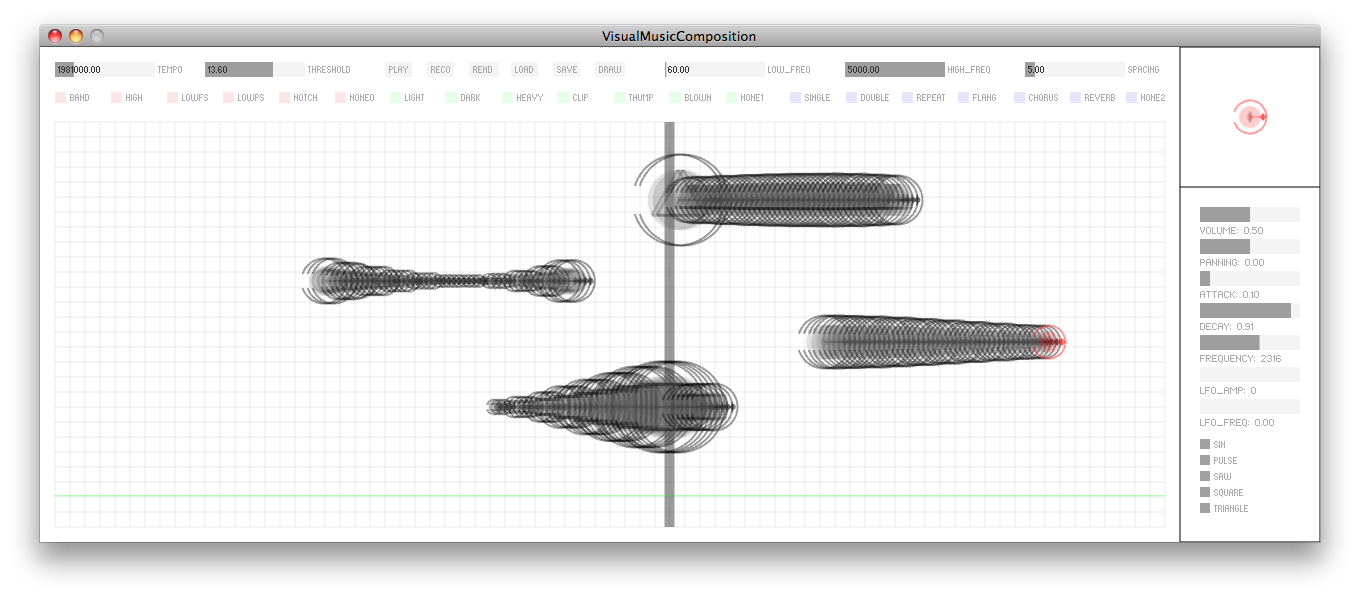

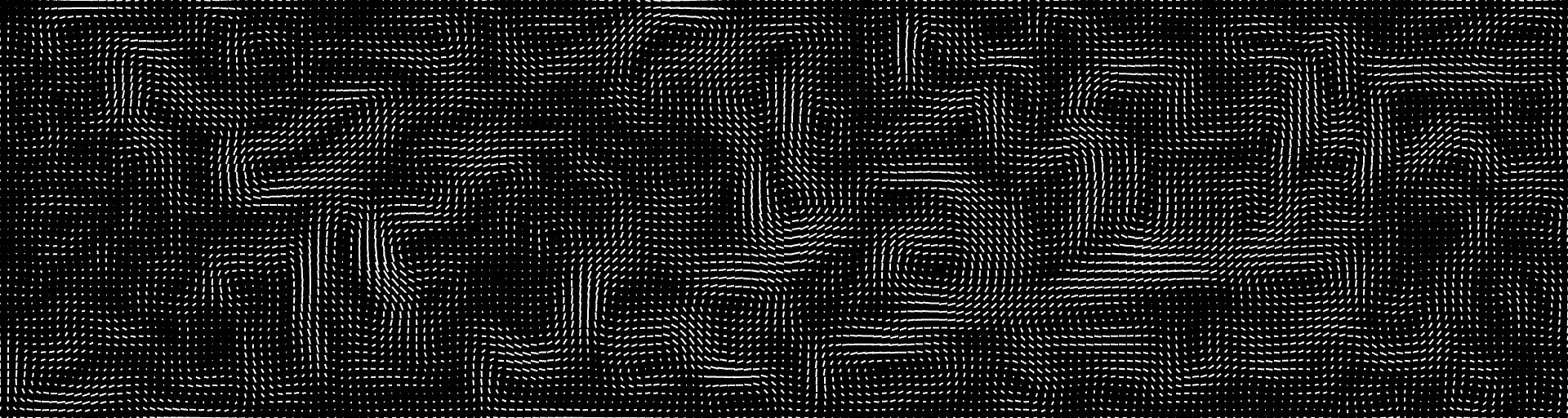

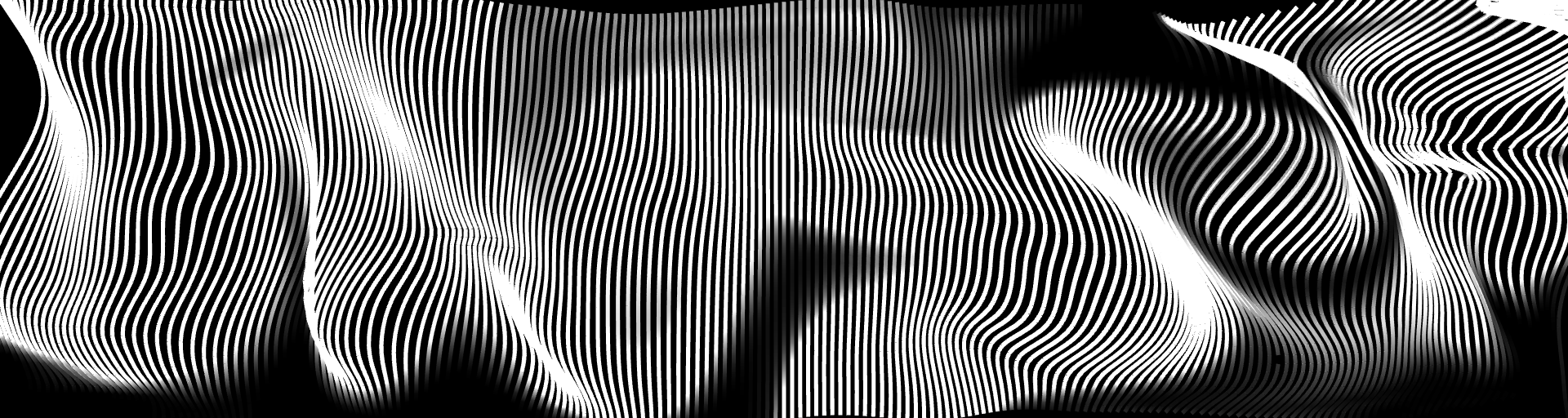

Visual Design: A fluid field was implemented in the installation to provide smooth motion for particles and orbs in the virtual space. The first image in the series above shows the field's discrete cells, visualized by lines that indicate the field's direction and magnitude. The field uses Jos Stam's Real-Time Fluid Dynamics for Games to provide a visually accurate, real-time fluid field. The images above show the fluid field in various representations and at various levels of coarseness; the second image, for example, shows a much finer field than the first. The fluid also has a density, represented in the images above by gradients of white (the higher the density, the lighter the area). In the installation, participants can affect the field by moving through it or by blowing into their phone's microphone, effectively blowing into the virtual fluid field.

All visual elements in the virtual space are influenced by the field or can affect the field. For example, a participant can move across the field and create waves, which can move other participants in the virtual space. If a participant is not touching their phone's screen, the fluid field influences their movement in the virtual space. A participant can increase the density of the field simply by moving through it. The image above shows trails of high density, caused by user movement, that have diffused into the space over time. If a participant releases a missile, the missile will affect the field, as seen in the second-to-last image in the series above. Lastly, the field is visualized by rendering its density, direction, and magnitude, as seen in the fourth and fifth images in the series above. Black represents areas of low density, white represents areas of high density, and the waves in the rendered pseudo-surface (or lines) represent the directions and magnitudes of the field's cells.
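To illustrate how density trails can spread into the space over time, here is a minimal sketch of a density diffusion step in the style of the solver described in Stam's paper (Gauss-Seidel relaxation on a grid with a one-cell border). It is not the installation's code: the class name, the parameter names, and the simplified boundary handling (the border is simply left at zero) are assumptions made for this example.

```java
// Illustrative sketch of a Stam-style diffusion step; not the installation's code.
final class FluidDiffusion {
    /** Index into a flattened (n+2) x (n+2) grid; the outer ring is a border layer. */
    static int ix(int n, int i, int j) {
        return i + (n + 2) * j;
    }

    /**
     * Diffuses the density field x0 and returns the result in a new array.
     * Uses Gauss-Seidel relaxation; border cells stay at zero in this simplified version.
     */
    static double[] diffuse(int n, double[] x0, double diff, double dt, int iterations) {
        double a = dt * diff * n * n;           // diffusion strength per step
        double[] x = new double[x0.length];
        for (int k = 0; k < iterations; k++) {
            for (int j = 1; j <= n; j++) {
                for (int i = 1; i <= n; i++) {
                    double neighbours = x[ix(n, i - 1, j)] + x[ix(n, i + 1, j)]
                                      + x[ix(n, i, j - 1)] + x[ix(n, i, j + 1)];
                    x[ix(n, i, j)] = (x0[ix(n, i, j)] + a * neighbours) / (1 + 4 * a);
                }
            }
        }
        return x;
    }
}
```

Starting from a single bright cell, each call lowers the peak and raises its neighbours, which is the behaviour that turns a sharp trail into a soft gradient.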

When two participants bump into each other in the virtual space, particles are inserted into the fluid field, and both participants receive a message on their phones telling them whom they bumped into. When a participant is hit in the virtual space, they receive a visual indication, on their phone and in the virtual space, of whether they have lost or gained power in the installation (represented by a hit count). Field particles sample the field's direction and magnitude at their locations and move in the direction read from the field. The field is continually diffusing over time; this provides smooth motion to the particles as they move through the field, similar to how Perlin noise would move particles in space. A particle is visualized by a path, which represents its trail or history as it flows through the field. When participants interact with the installation they directly manipulate the field, thereby indirectly manipulating the particles in that field.
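The particle behaviour described above (read the field at the particle's location, move in that direction, keep a trail) can be sketched as follows. This is a hedged illustration, not the installation's implementation: the class name, the unit-square coordinate system, the nearest-cell lookup, and the fixed trail length are all assumptions.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Illustrative sketch; names and coordinate conventions are assumptions.
final class FieldParticle {
    double x, y;                                        // position in the unit square
    final Deque<double[]> trail = new ArrayDeque<>();   // oldest position first
    final int maxTrail;

    FieldParticle(double x, double y, int maxTrail) {
        this.x = x;
        this.y = y;
        this.maxTrail = maxTrail;
    }

    /** Moves the particle along the velocity (u, v) of the cell it currently occupies. */
    void step(double[] u, double[] v, int cols, int rows, double dt) {
        int ci = Math.min(cols - 1, (int) (x * cols));
        int cj = Math.min(rows - 1, (int) (y * rows));
        int cell = ci + cols * cj;

        trail.addLast(new double[] { x, y });           // record history for drawing
        if (trail.size() > maxTrail) {
            trail.removeFirst();
        }

        x = clamp01(x + u[cell] * dt);
        y = clamp01(y + v[cell] * dt);
    }

    private static double clamp01(double value) {
        return Math.max(0.0, Math.min(1.0, value));
    }
}
```

Because the particle only reads the field, anything that changes the field (a participant, a missile, a breath into the microphone) changes the particle's path without the particle needing to know about it.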

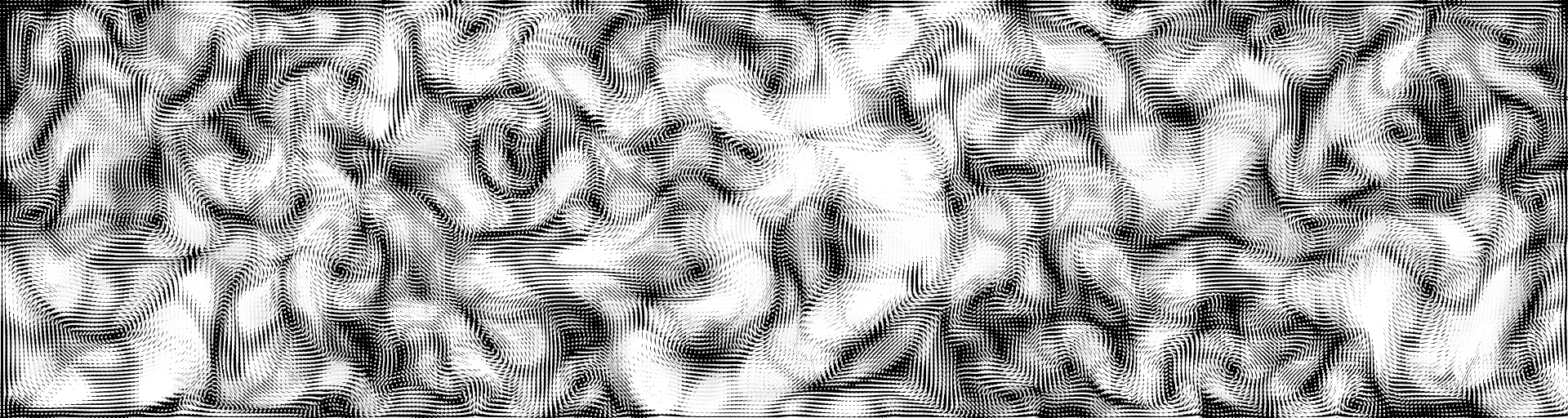

The first image in the series above shows a virtual space that is heavily populated with white particles, creating a fine stucco texture. The second, third, and fourth images show the transient state of the particles right after they are placed in the fluid field. Initially the particles are evenly distributed; over time they trace out and visualize vortices in the fluid field. Eventually the particle paths converge and smooth flow is observed, as seen in the fifth image from the top of this series. If a coarse fluid field is used, blocky particle flow is observed. This creates a different feel and aesthetic in the installation, one that is more dramatic and erratic. Dynamically changing the coarseness of the fluid field is problematic for real-time fluid dynamics, so the bottom three images, which were experiments with a coarse fluid field, were not used in the installation.

Sound Design: The installation went through several iterations, each approaching the sound design with a different methodology. The first attempt to integrate sound into the installation used interactions among the participants in the virtual space to trigger sound samples. Interactions included hitting one of the virtual space's boundaries/walls (left, right, top, bottom), releasing a missile into the space, hitting another participant, shake gestures, and blowing into the microphone.

This approach yielded pleasant sound effects, but over time they became repetitive and boring. Relying solely on sampled sounds also restricted the available range and variety of sounds, and the application could not precisely control the sound.

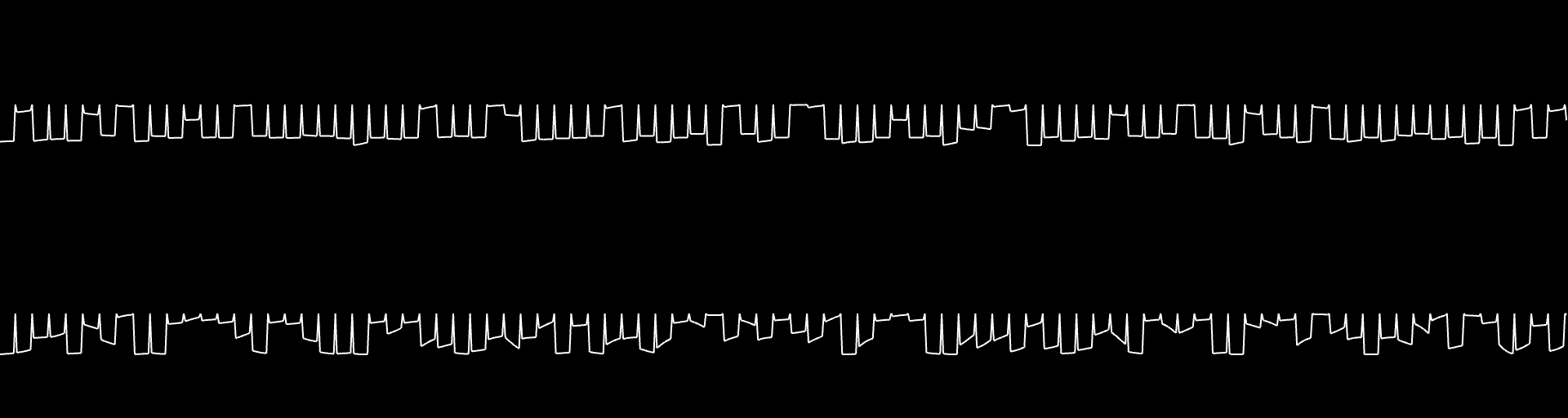

The next method took a low-level approach, simply filling the audio buffer with samples derived from the installation, specifically from individual fluid cells and their velocity magnitudes. Samples in the audio buffer were linked to the cells of the fluid field to create sound, effectively sonifying the fluid field. Participants' interactions in the virtual space changed the field and consequently changed the sound produced by the field. This approach produced mountain-like waveforms, as seen in the fifth image from the top in the series above. However, the sound had a harsh quality and did not diminish over time as audience participation decreased.
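One possible reading of this field sonification is sketched below: each audio sample looks up a fluid cell and takes that cell's velocity magnitude as its amplitude. The exact mapping used in the installation is not documented here, so the class name, the nearest-cell lookup, and the `fullScale` normalization parameter are assumptions made for illustration.

```java
// Illustrative sketch of field sonification; the mapping is an assumption.
final class FieldSonifier {
    /**
     * Fills an audio buffer from per-cell velocity components u and v.
     * Each sample is the velocity magnitude of one cell, scaled by fullScale
     * and clamped to [0, 1], which yields unipolar, hill-shaped waveforms.
     */
    static float[] fillBuffer(double[] u, double[] v, int bufferSize, double fullScale) {
        int cells = u.length;
        float[] buffer = new float[bufferSize];
        for (int s = 0; s < bufferSize; s++) {
            // Spread the cells evenly across the buffer (nearest-cell lookup).
            int cell = (int) ((long) s * cells / bufferSize);
            double magnitude = Math.hypot(u[cell], v[cell]);
            buffer[s] = (float) Math.min(1.0, magnitude / fullScale);
        }
        return buffer;
    }
}
```

With this kind of mapping the waveform is a direct picture of the field, so sharp differences between neighbouring cells show up as abrupt jumps in the signal.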

The next method used the particles in the scene to create the sounds in the installation. This was done by mapping their trail positions in space to samples in the audio buffer, as illustrated in the last two images. The first of the last two images shows a sound waveform composed of many particles with short trails; the second uses fewer particles with longer trails. This approach created a metallic sound. Neither of these two approaches reflected users' interactions to a degree that gave users a sense of control over the sounds, so they were not used in the installation. However, these two methods led to the final sound design solution, which used oscillators as instruments in the installation and used properties of the avatars to control the sound properties.

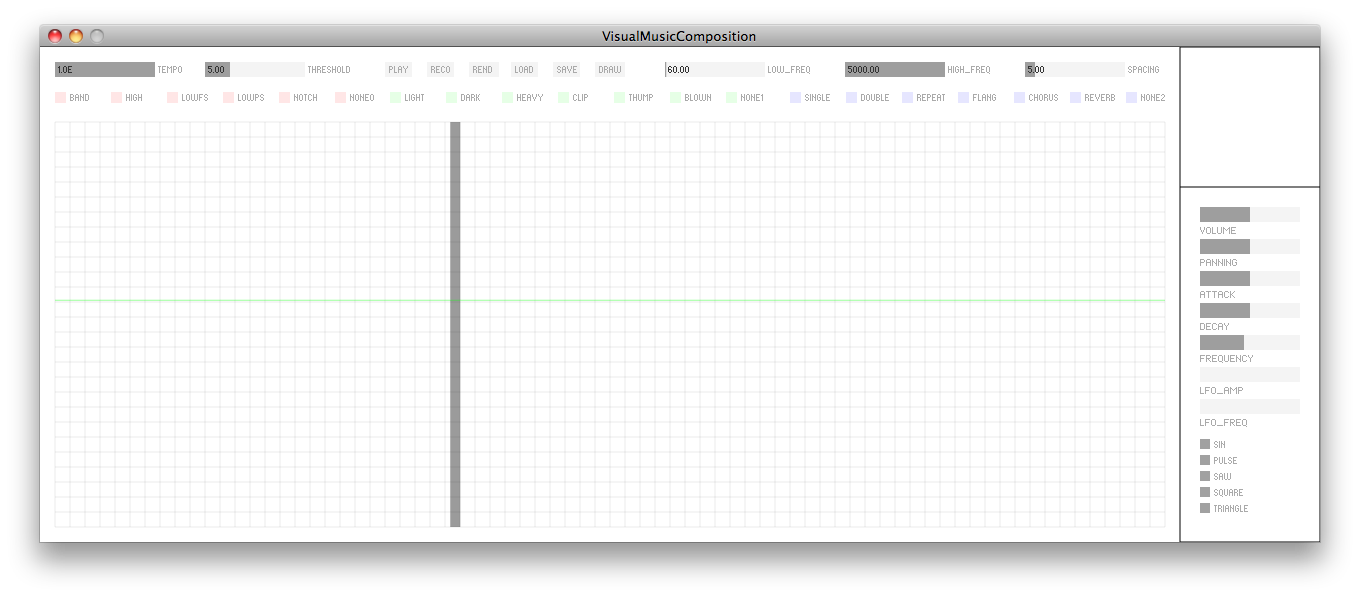

The first four images above illustrate a tool that was used to prototype the real-time sound synthesis instruments in the installation. The tool allowed for the creation of sound oscillators (sine, pulse, square, saw, and triangle waves) and the tweaking of their properties, such as frequency, volume, panning, LFO (low-frequency oscillator) frequency, and LFO amplitude, in real time in a minimal yet flexible prototyping environment. Further, each oscillator was embedded in a "note." Each note has a dynamic envelope, so the envelope's attack and decay can be modified in real time. This tool allowed for the visual prototyping of the instruments that were used in the installation. The prototyping software also allowed for sound filtering (band-pass, low-pass, high-pass, and notch filtering), time effects (single echo, double echo, repeating echo, flanging, chorusing, and simulated reverb), and distortion effects. Below is a video illustrating some of the tool's capabilities and how each note could be modified in the installation.
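As a rough illustration of a "note" that wraps an oscillator in an attack/decay envelope, consider the sketch below. It is not the prototyping tool's code: the class and method names are hypothetical, the envelope is assumed to be linear, and the pulse wave is assumed to have a 25% duty cycle.

```java
// Illustrative sketch of an oscillator inside an enveloped note; not the tool's code.
final class SynthNote {
    enum Wave { SINE, PULSE, SQUARE, SAW, TRIANGLE }

    /** One cycle of the waveform; phase is in [0, 1) and the result is in [-1, 1]. */
    static double wave(Wave type, double phase) {
        switch (type) {
            case SINE:     return Math.sin(2.0 * Math.PI * phase);
            case PULSE:    return phase < 0.25 ? 1.0 : -1.0;   // 25% duty cycle (assumed)
            case SQUARE:   return phase < 0.5 ? 1.0 : -1.0;
            case SAW:      return 2.0 * phase - 1.0;
            case TRIANGLE: return 1.0 - 4.0 * Math.abs(phase - 0.5);
            default:       throw new IllegalArgumentException("unknown wave");
        }
    }

    /** Linear envelope: rises 0 to 1 over attack seconds, then falls 1 to 0 over decay seconds. */
    static double envelope(double t, double attack, double decay) {
        if (t < 0.0) return 0.0;
        if (t < attack) return t / attack;
        if (t < attack + decay) return 1.0 - (t - attack) / decay;
        return 0.0;
    }

    /** Renders one note into a mono buffer of samples in [-1, 1]. */
    static float[] render(Wave type, double freq, double attack, double decay, int sampleRate) {
        int n = (int) Math.ceil((attack + decay) * sampleRate);
        float[] out = new float[n];
        for (int i = 0; i < n; i++) {
            double t = (double) i / sampleRate;
            double phase = (t * freq) % 1.0;
            out[i] = (float) (wave(type, phase) * envelope(t, attack, decay));
        }
        return out;
    }
}
```

Because attack and decay are plain arguments evaluated per sample, they can be changed between notes, which matches the idea of an envelope that is modified while the piece is running.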

The final sound design embedded instruments into each avatar. Participants were able to trigger their instruments by double tapping on their screens or by blowing into their phone's microphone. Participants were able to change their oscillator type by hitting the top boundary. The top boundary was subdivided into five sections, each representing a different oscillator that the participant could "pick up" by hitting the area of the boundary designated for that oscillator type. Moreover, because the sounds were created in real time using sound synthesis, users were able to modify their sounds' attack and decay by hitting the right and left boundaries, respectively. The higher the hit on the right wall, the faster the attack. Similarly, the higher the hit on the left wall, the longer the decay time, producing a longer, stretched-out sound. The bottom boundary allowed users to change the frequency of their oscillator, similar to hitting keys on a keyboard.
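The boundary-to-parameter mapping described above could look roughly like the following sketch. It is hypothetical: positions are assumed to be normalized to [0, 1] (with height 1 at the top of a wall), the oscillator order is borrowed from the list given for the prototyping tool, and the bottom boundary is assumed to be divided into equal-tempered semitone keys.

```java
// Illustrative sketch of mapping wall hits to sound parameters; all ranges are assumptions.
final class BoundaryControls {
    // Order follows the oscillator list in the text; the on-screen order is an assumption.
    static final String[] OSCILLATORS = { "sine", "pulse", "square", "saw", "triangle" };

    private static double clamp01(double value) {
        return Math.max(0.0, Math.min(1.0, value));
    }

    /** Top wall: x in [0, 1] selects one of five equal sections. */
    static int oscillatorIndex(double x) {
        return Math.min(OSCILLATORS.length - 1, (int) (clamp01(x) * OSCILLATORS.length));
    }

    /** Right wall: a higher hit (height near 1) gives a shorter attack time. */
    static double attackSeconds(double height, double slowest, double fastest) {
        return slowest + (fastest - slowest) * clamp01(height);
    }

    /** Left wall: a higher hit gives a longer decay time. */
    static double decaySeconds(double height, double shortest, double longest) {
        return shortest + (longest - shortest) * clamp01(height);
    }

    /** Bottom wall: x picks a key; each key is one semitone above the previous one. */
    static double keyFrequency(double x, int keys, double baseHz) {
        int key = Math.min(keys - 1, (int) (clamp01(x) * keys));
        return baseHz * Math.pow(2.0, key / 12.0);
    }
}
```

For example, `BoundaryControls.oscillatorIndex(0.5)` falls in the middle section, and `BoundaryControls.keyFrequency(1.0, 13, 220.0)` lands on the thirteenth key, one octave above the base frequency.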

Each user's sound triggers when they collide with a boundary, so users receive immediate feedback when they modify their sound properties and can design their own sound in the installation. Missiles have sounds as well; the envelope of a missile's sound is controlled by its lifetime and velocity. A missile emitted by a user inherits the user's sound frequency, and upon creation the missile's frequency is shifted up a fifth in pitch to create a consonant sound. All missile sounds decay and are eventually removed from the sound space. Users are also able to interact with each other and exchange frequencies by colliding with each other. Finally, the fluid field was used to filter the sounds coming out of the installation. This was implemented by using the sum of the field's density to control the cutoff frequency of a low-pass filter.
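The two numeric relationships in this paragraph, the shift up a fifth and the density-controlled low-pass filter, can be sketched as follows. This is an assumption-laden illustration rather than the installation's code: the fifth is taken as the just ratio 3:2 (an equal-tempered fifth would use a factor of 2^(7/12) instead), a denser field is assumed to raise the cutoff, and a simple one-pole filter stands in for whatever low-pass filter was actually used.

```java
// Illustrative sketch; the interval tuning, mapping direction, and filter type are assumptions.
final class FieldFilter {
    /** A perfect fifth above the given frequency, using the just ratio 3:2. */
    static double fifthAbove(double hz) {
        return hz * 1.5;
    }

    /** Maps the summed field density to a cutoff between minHz and maxHz. */
    static double cutoffHz(double[] density, double maxTotal, double minHz, double maxHz) {
        double total = 0.0;
        for (double d : density) {
            total += d;
        }
        double t = Math.max(0.0, Math.min(1.0, total / maxTotal));
        return minHz + (maxHz - minHz) * t;
    }

    /** One-pole low-pass filter applied in place; returns the same array for convenience. */
    static float[] lowPass(float[] samples, double cutoffHz, double sampleRate) {
        double alpha = 1.0 - Math.exp(-2.0 * Math.PI * cutoffHz / sampleRate);
        double y = 0.0;
        for (int i = 0; i < samples.length; i++) {
            y += alpha * (samples[i] - y);      // move a fraction of the way toward the input
            samples[i] = (float) y;
        }
        return samples;
    }
}
```

Since the cutoff depends on the sum over the whole field, every participant's movement nudges the same filter, which is one way a shared field can tie all the individual sounds together.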

Future work would involve using the fluid field's properties to drive filters and effects on the resulting sounds in the installation, thus creating a link between all users, the installation, and the interactions in the installation.