2016 Fall

Weihao Qiu

MAT594GL Autonomous Mobile Camera/Drone Interaction

Inspection | System Design / Drone Software and Web Server Development

Project Overview

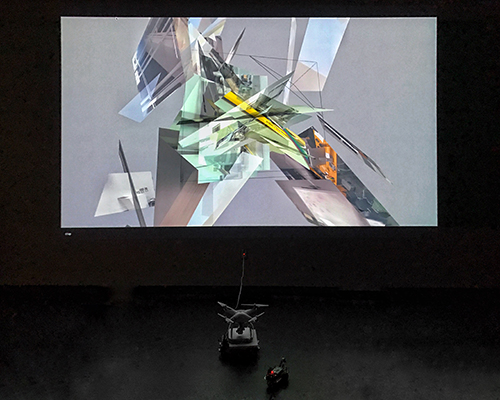

Inspection tries to blur the boundaries between the physical and virtual worlds by creating a continuously evolving virtual 3D photomontage in response to the physical world. A drone, a ground robot, and eight ultrasonic sensors form a reactive collective system that connects the two worlds. The dynamic virtual assemblage, constructed with a Voronoi algorithm, not only creates a novel aesthetic structure but also raises questions about the relationship between objects and space.

Within nine weeks, the project went from scratch, including assembling the robots and setting up private servers, to a public interactive performance. The team consists of five people with mixed backgrounds ranging from art to computer science and from engineering to political science. The following text describes the work I did on the project. Please check this page to see the other team members' contributions.

Photo taken by Weihao Qiu

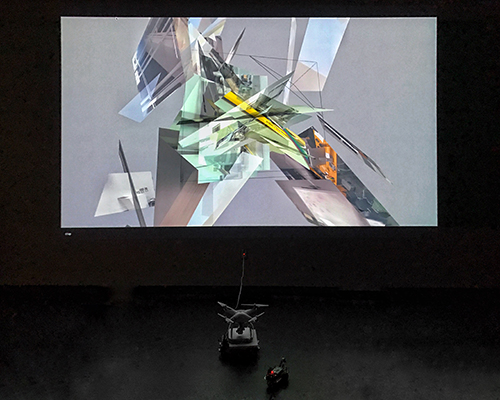

System Blueprint

By Weihao Qiu

The system connects the drone controller (an iOS mobile device), the robot, and the detection system with the central computer. Through a set of unified APIs for uploading data to and downloading data from a local server, every component can communicate indirectly with the others.

In the main pipeline, the computing center guides the drone and the robot around the space based on distance data from the detection system and has them take photos. It then generates a photomontage from all the collected photos in real time.

Description of components:

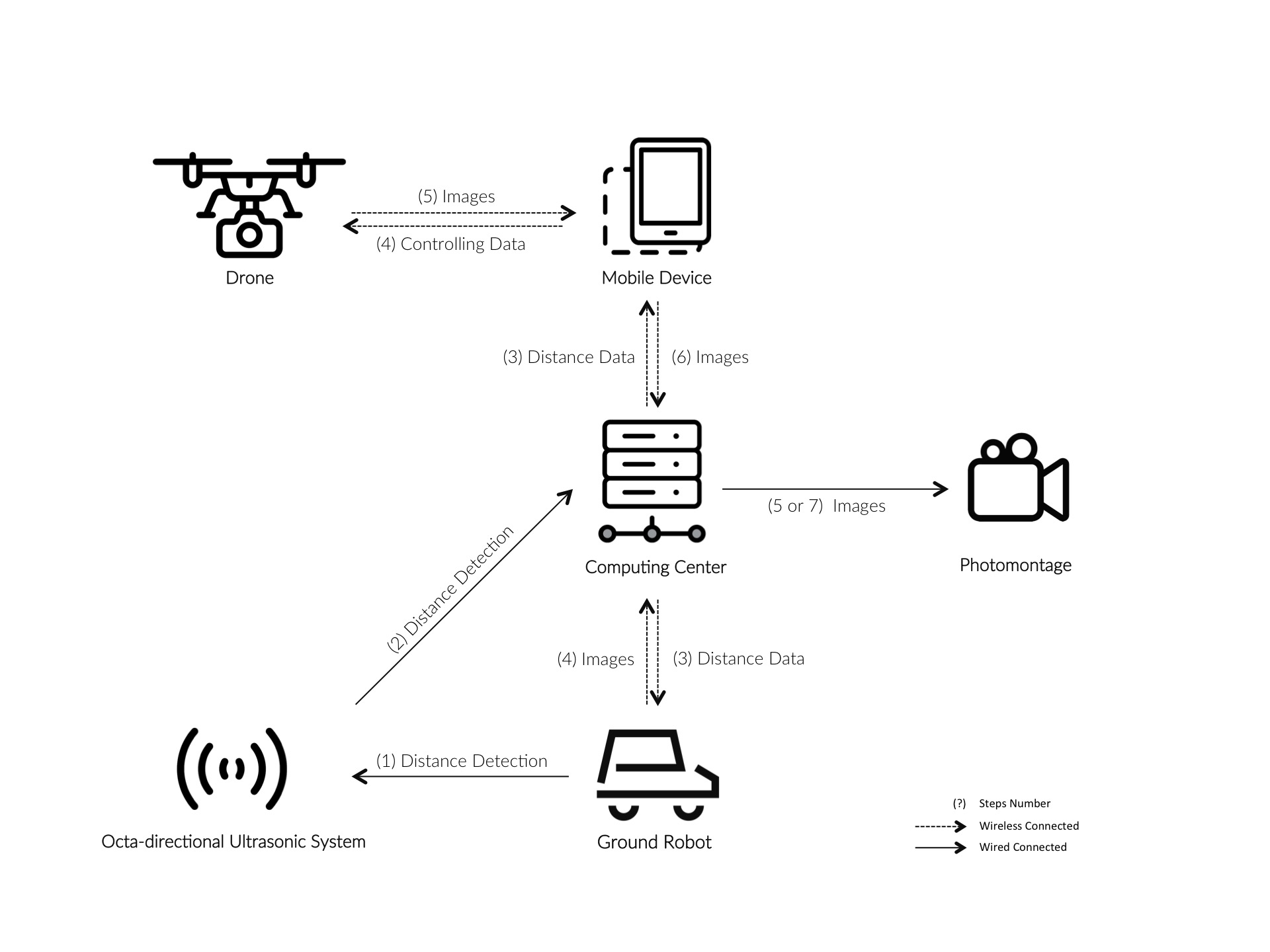

1. Drone: A DJI Phantom 4. It uses an ultrasonic sensor and an optical-flow camera for indoor localization without GPS.

2. Mobile Device: An iPad mini 2. It runs the application that controls the movement of the drone. It also fetches the photos taken by the drone and sends them to the web server.

3. Computing Center: Two Mac minis. One collects data from the detection system and transfers it to the web server. The other acts as the web server, which responds to requests from the mobile device and the robot.

4. Octa-Directional Ultrasonic System: Eight ultrasonic sensors facing eight directions (45 degrees apart), connected to one Arduino board. The detection system is placed in the center of the playground. Since each ultrasonic sensor measures the distance to the nearest obstacle in one direction, the system yields distance data in all eight directions. As the robot moves around the system, it detects the direction in which the robot is located and sends the data to the computing center through a web API.

5. Ground Robot: A Raspberry Pi-based robot with an ultrasonic sensor and a camera for obstacle avoidance and photography. Its movement is guided by the data it downloads from the web server, and its photos are sent to the web server.

DJI Phantom 4 drone, controller, and mobile device (iPad mini).

DJI Phantom 4 with the ground robot.

Drone Autonomous Navigation Algorithm

This algorithm makes the drone look toward the robot's location. The robot blocks the ultrasonic sensor that faces it, so the distance value from that sensor is smaller than the others. Therefore, by finding which sensor yields the smallest distance, the drone knows which direction to face.

Because the drone relies on its compass for direction, it can only measure its angle relative to north. The octa-directional ultrasonic system should therefore be calibrated with a compass when it is installed.
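The selection step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code that ran on the iPad: the function names, the convention that sensor 0 points along `north_offset_deg` with indices increasing clockwise, and the idea that the drone sits close enough to the sensor array to share its bearings are all hypothetical.

```python
from typing import Sequence

SENSOR_COUNT = 8
SECTOR_DEGREES = 360 / SENSOR_COUNT  # 45 degrees between neighboring sensors


def target_heading(distances: Sequence[float], north_offset_deg: float = 0.0) -> float:
    """Compass heading (degrees clockwise from north) of the blocked sensor.

    Assumption: sensor 0 points at `north_offset_deg` (the value found when
    calibrating the array with a compass) and indices increase clockwise.
    """
    if len(distances) != SENSOR_COUNT:
        raise ValueError("expected one distance per sensor")
    # The robot blocks the sensor facing it, so that reading is the smallest.
    nearest = min(range(SENSOR_COUNT), key=lambda i: distances[i])
    return (north_offset_deg + nearest * SECTOR_DEGREES) % 360


def yaw_error(current_deg: float, target_deg: float) -> float:
    """Signed shortest rotation from current to target, in [-180, 180)."""
    return (target_deg - current_deg + 180) % 360 - 180
```

For example, with readings of 200 on every sensor except a 50 on sensor 2, `target_heading` returns 90.0 when the array is aligned with north, and `yaw_error(350, 10)` returns 20, meaning a 20-degree clockwise turn.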

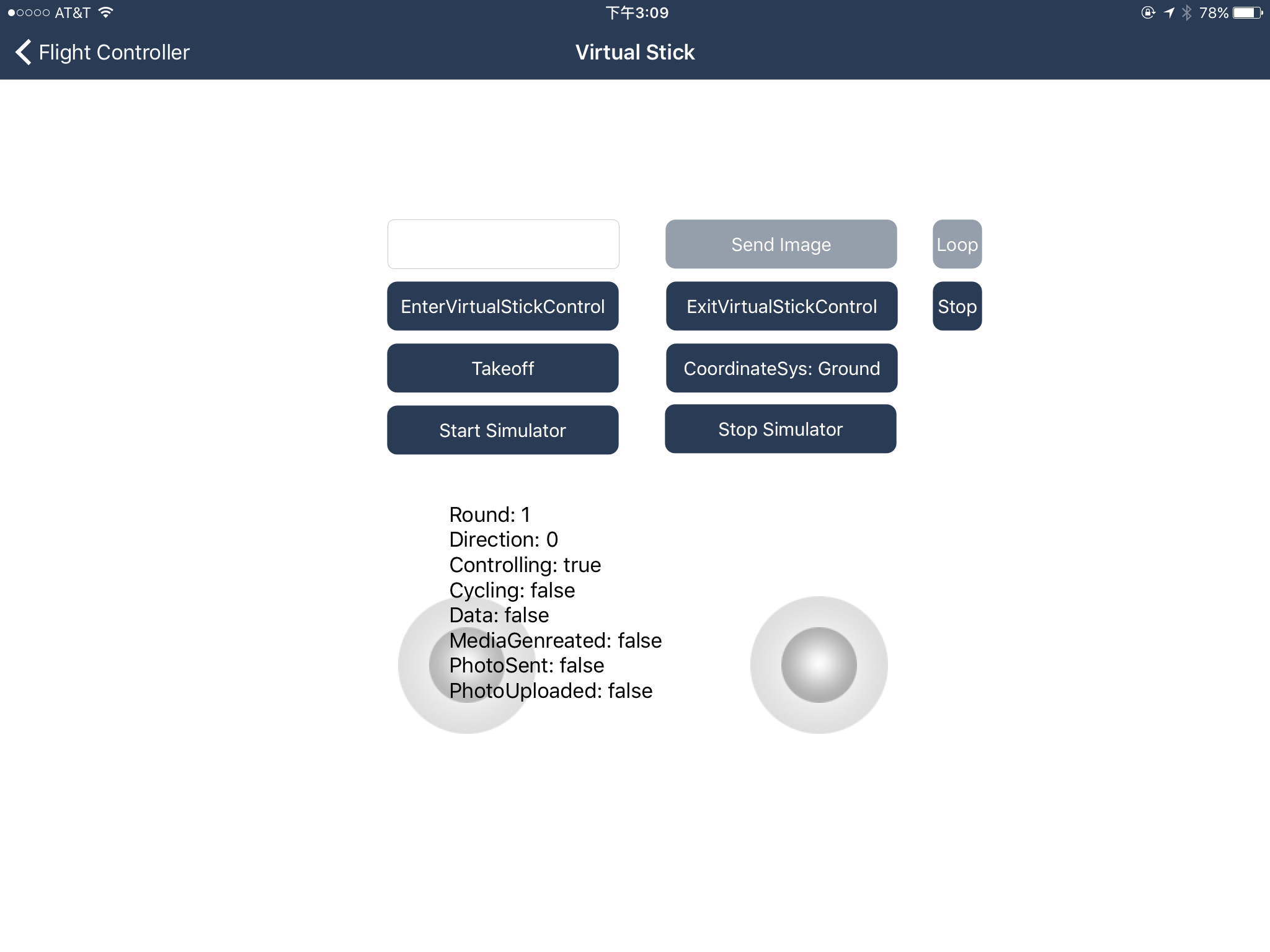

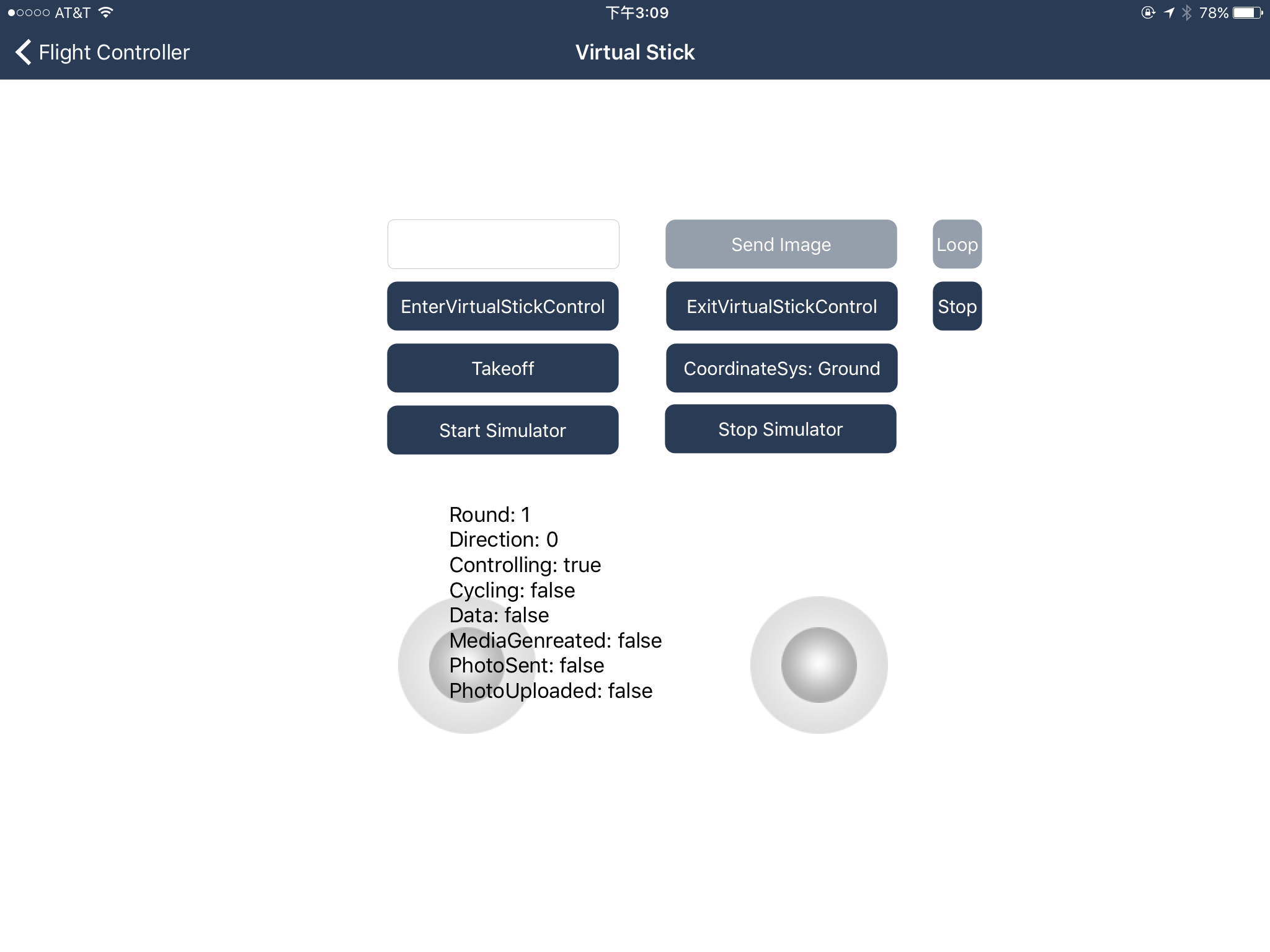

The interface of the mobile application that controls the drone.

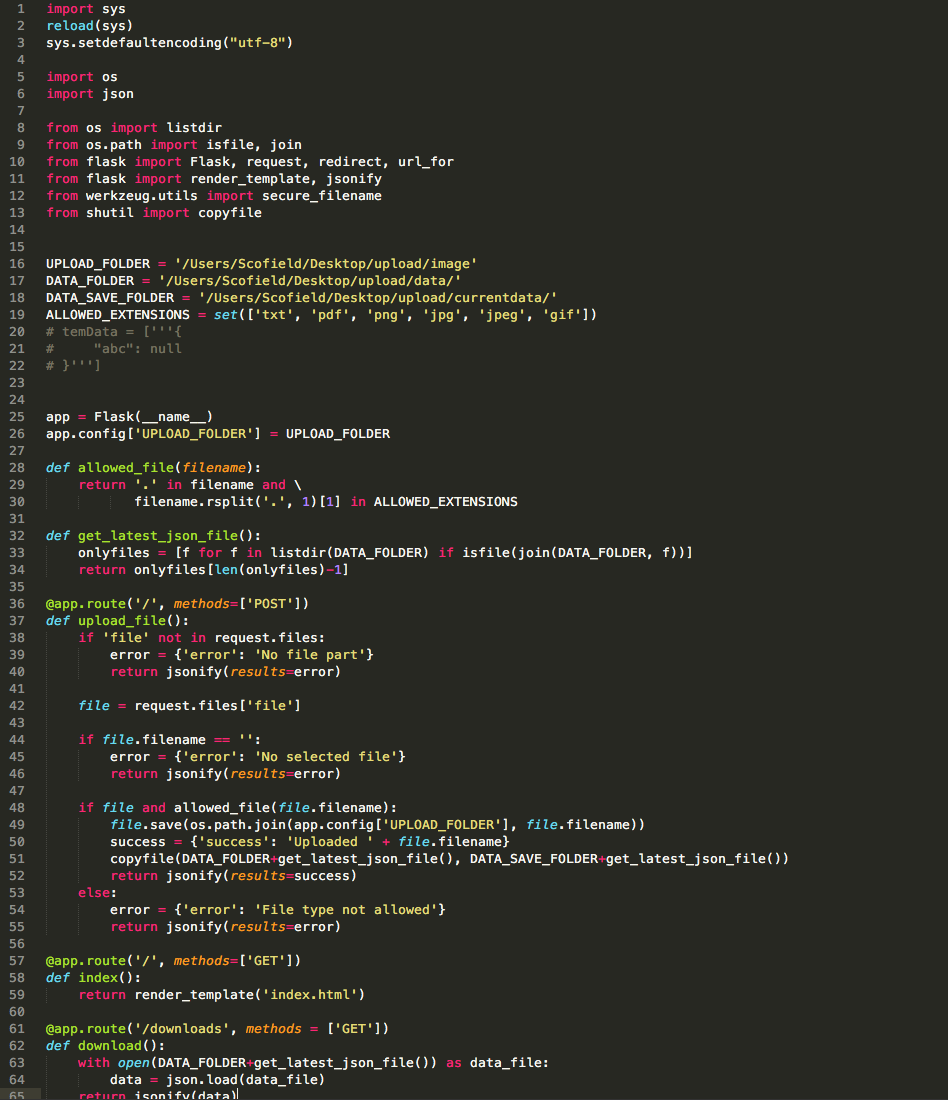

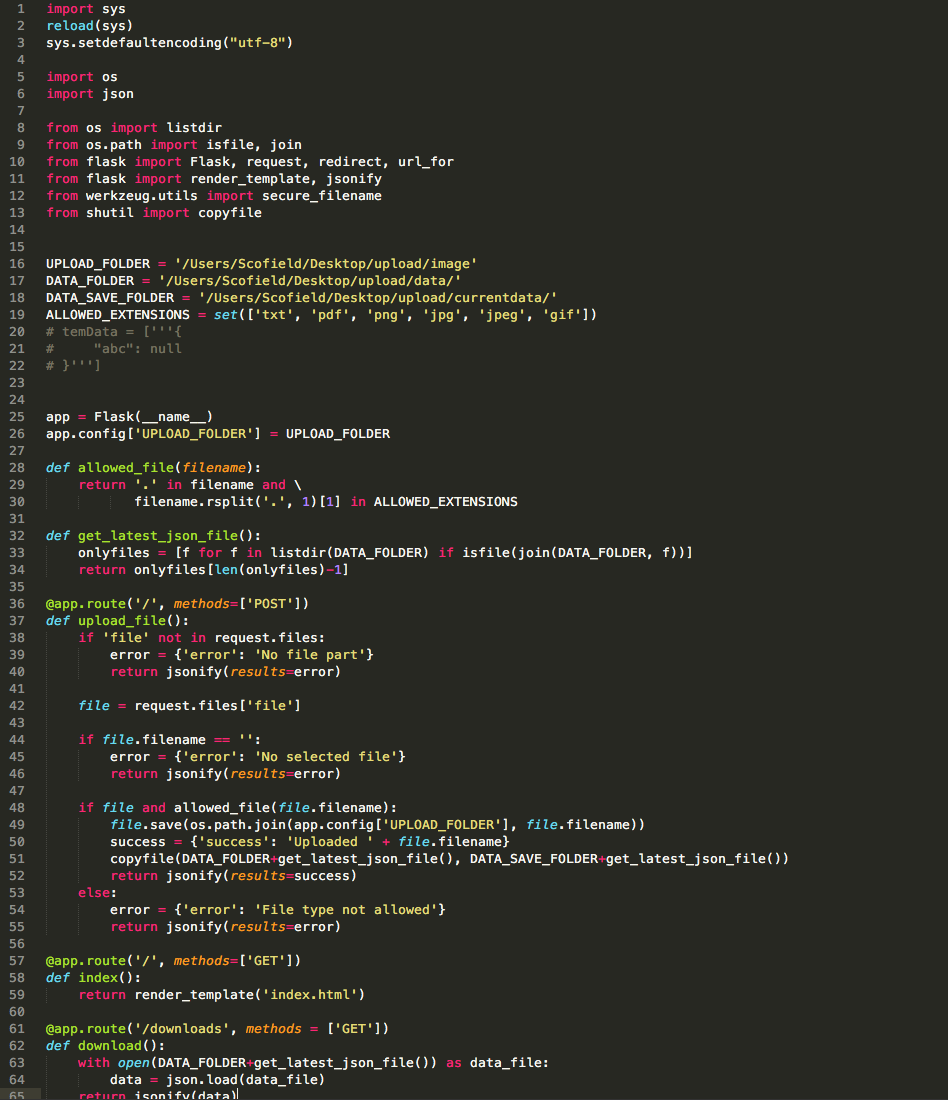

Server Script

The web server is designed as a convenient, unified interface through which every system component can upload and download data wirelessly. For this project, the server script is written in Python, chosen for its compactness and its compatibility with the Raspberry Pi robot. The script provides three main APIs:

1. "URL/" with POST request: to upload images

2. "URL/downloads" with GET request: to get the latest distance values uploaded by the eight ultrasonic sensors

3. "URL/uploads" with GET request: to browse the uploaded files that have been stored.

All data is transferred in JSON format.

Screenshot of the server Python script.
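To make the three routes concrete, here is a minimal sketch using only the Python standard library. It is not the project's actual script. The `Store` class, the `"distances"` and `"files"` JSON keys, the `X-Filename` header, and the raw (non-multipart) image body are all assumptions made for illustration, and the source does not describe how the detection system uploads its readings, so that part is left as a plain attribute.

```python
import json
import os
import tempfile
from http.server import BaseHTTPRequestHandler, HTTPServer


class Store:
    """Latest sensor distances plus a folder of uploaded images (hypothetical)."""

    def __init__(self, upload_dir):
        self.upload_dir = upload_dir
        self.distances = [0] * 8  # one reading per ultrasonic sensor

    def route(self, method, path, body=b"", filename="image.jpg"):
        """Return (status, payload) for one request; payload is JSON-serializable."""
        if method == "POST" and path == "/":
            # Keep only the file name so uploads cannot escape the folder.
            name = os.path.basename(filename) or "image.jpg"
            with open(os.path.join(self.upload_dir, name), "wb") as f:
                f.write(body)
            return 201, {"stored": name}
        if method == "GET" and path == "/downloads":
            return 200, {"distances": self.distances}
        if method == "GET" and path == "/uploads":
            return 200, {"files": sorted(os.listdir(self.upload_dir))}
        return 404, {"error": "not found"}


def make_handler(store):
    """Build a request handler class bound to one Store instance."""

    class Handler(BaseHTTPRequestHandler):
        def _reply(self, status, payload):
            data = json.dumps(payload).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(data)))
            self.end_headers()
            self.wfile.write(data)

        def do_GET(self):
            self._reply(*store.route("GET", self.path))

        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            body = self.rfile.read(length)
            name = self.headers.get("X-Filename", "image.jpg")
            self._reply(*store.route("POST", self.path, body, name))

    return Handler


def serve(port=8000):
    """Run the sketch server until interrupted (port is an arbitrary choice)."""
    store = Store(tempfile.mkdtemp(prefix="uploads_"))
    HTTPServer(("0.0.0.0", port), make_handler(store)).serve_forever()
```

Keeping the routing in `Store.route` separate from the HTTP handler means the three APIs can be exercised without opening a socket; calling `serve()` starts the actual server.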

Messaging Between The Drone and Server

Communication between the drone and the computing center goes through the mobile device and the web server. The messaging consists of two parts: image uploading and data downloading.

Uploading Images:

Every time the drone takes a photo, the mobile device fetches the image from the drone wirelessly. It then sends the image to the web server through the upload API. The uploaded images are stored together for the photomontage program to load and render.

Downloading Data:

By repeatedly calling the second API from the previous section, the mobile device receives the JSON response from the web server, parses it, and calculates the drone's target orientation with the navigation algorithm.
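The download half of this exchange might look roughly like the following. The real client is an iOS application, so this Python version only illustrates the flow. The `"distances"` key, the function names, and the `base_url` parameter are hypothetical, since the source does not show the exact response layout.

```python
import json
import urllib.request


def parse_distances(raw):
    """Decode a JSON response body into a list of eight distances.

    Assumption: the server replies with {"distances": [d0, ..., d7]}.
    """
    payload = json.loads(raw)
    distances = [float(d) for d in payload["distances"]]
    if len(distances) != 8:
        raise ValueError("expected eight sensor readings")
    return distances


def nearest_sensor(distances):
    """Index of the sensor with the smallest reading (the one the robot blocks)."""
    return min(range(len(distances)), key=lambda i: distances[i])


def poll_once(base_url, timeout=2.0):
    """Fetch the latest readings from the hypothetical URL/downloads route."""
    with urllib.request.urlopen(base_url + "/downloads", timeout=timeout) as resp:
        return parse_distances(resp.read())
```

A client would call `poll_once` in a loop with a short delay and pass the result of `nearest_sensor` to the navigation step.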

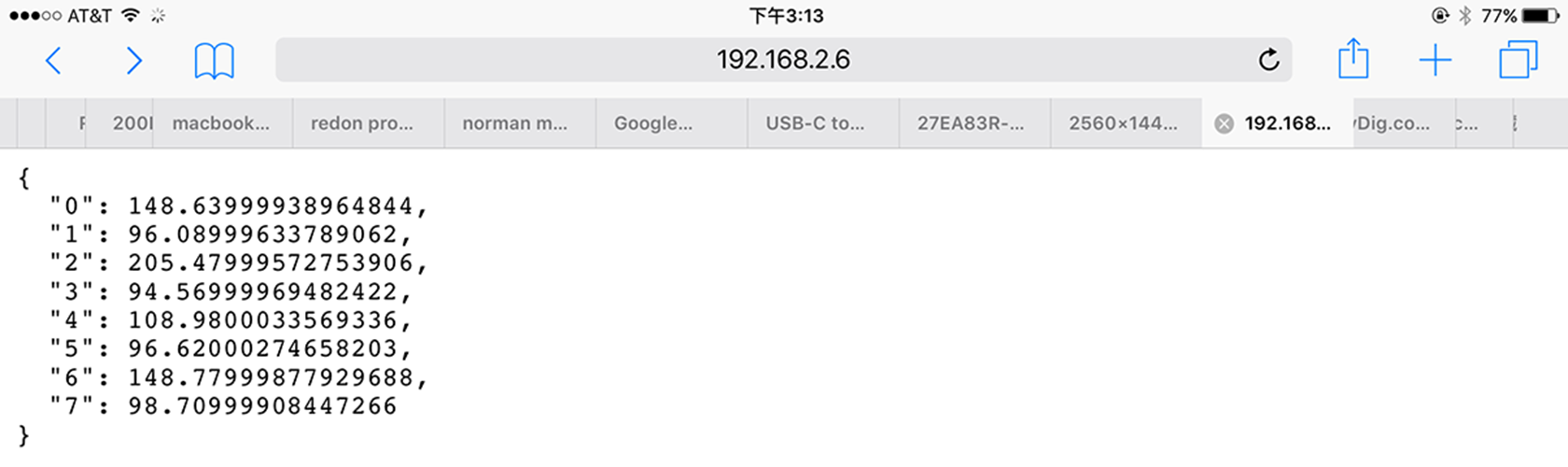

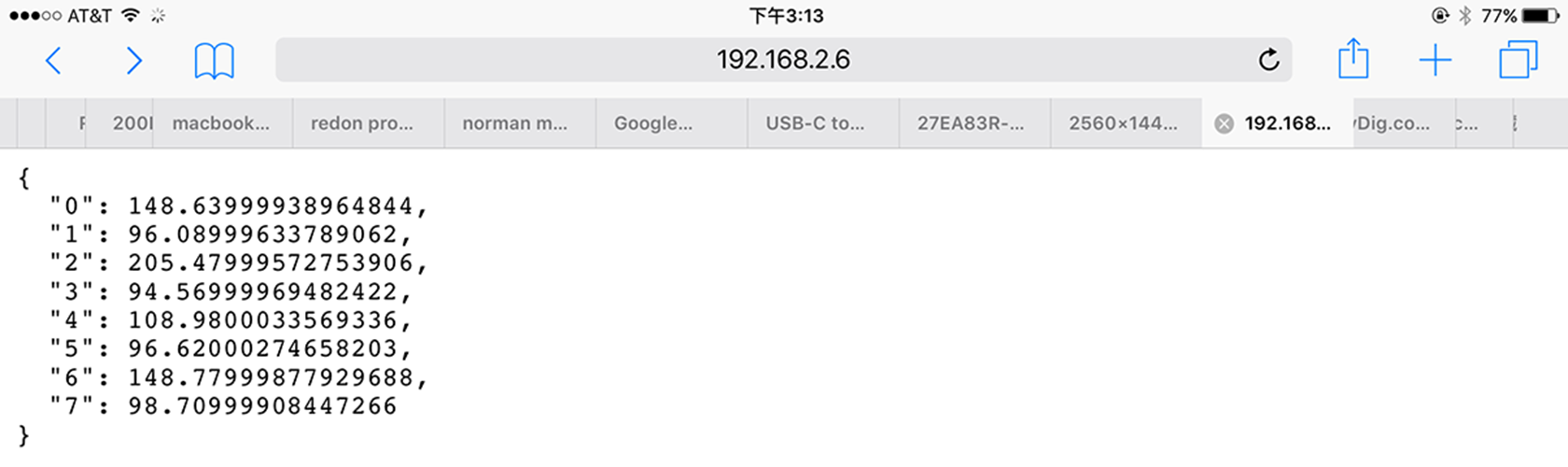

The "URL/downloads" API for downloading distance data from the sensors, in JSON format.

Future Work

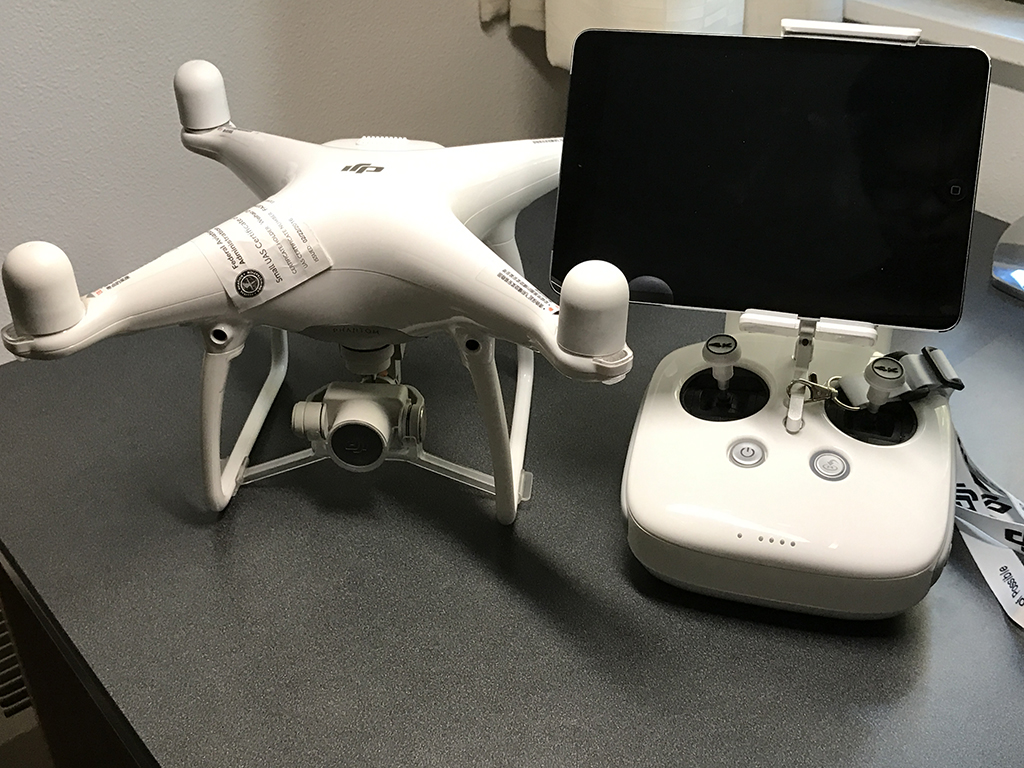

1. Migrate to a smaller drone

The DJI Phantom 4 is suited to safe indoor flying because of its obstacle avoidance system based on computer vision and its localization system based on an ultrasonic sensor and an optical-flow camera. However, it is noisy and rather large for indoor use. The newly released DJI Mavic Pro offers the same features as the Phantom 4 but is much smaller and quieter.

A smaller drone would also encourage the audience to interact with it. For now, because of its noise and size, the Phantom 4 seems too frightening for the audience to approach. The Mavic Pro, however, is more appealing to people. The possibility of interaction between people and machines could bring this project to the next level.

DJI Mavic Pro drone and controller.

2. Full-room localization system

The ability to localize itself is key for a drone to fly safely and to follow orders from the controller. Outdoors, GPS can easily help the drone keep track of its position. Indoors, however, the lack of a GPS signal makes it difficult to fly safely.

In this project, the drone uses a downward-facing ultrasonic sensor to hold its height and the optical-flow camera to keep it from drifting. This mechanism keeps the drone at the same position vertically and horizontally when no order is given, rather than wandering around the room. However, unlike with GPS, the drone is unable to determine its position relative to the room. Therefore, the drone has no way to know whether it is too close to the walls or to objects in the room.

In contrast to that local localization mechanism, full-room localization systems, which are generally used for motion capture in animated films or virtual reality, should be introduced. With such a system, a virtual space containing the location of each object can be reconstructed, so that the drone can avoid other objects, or a group of drones can fly together without colliding. The most complete solution on the market is the OptiTrack system, while many other options could be used depending on the trade-off among robustness, precision, and coverage.

Some projects with OptiTrack:

MagicLab “24 Drone Flight” with Daito Manabe

OptiTrack Drones Demo
