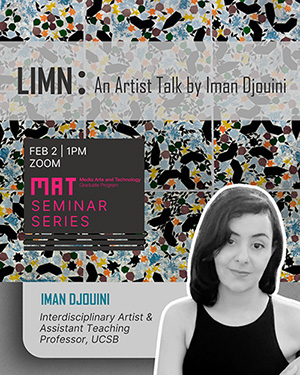

Speaker: Iman Djouini, UCSB College of Creative Studies.

Monday, February 2nd, 2026 at 1pm via Zoom.

Abstract

This talk will present a selection of artworks produced over the past six years. Djouini will discuss the research and processes informing her practice, with particular attention to her current and ongoing series Rendre, which will be shown in an upcoming solo presentation at the Venice Art Biennale as part of the Personal Structures exhibition.

Bio

Djouini is an interdisciplinary artist. Her primary mediums include print media, book arts, typography, placemaking, and hybrid forms (including print + digital / emerging technologies).

Current research draws from ancient North African folktales, recounted over generations through oral traditions by matriarchs, using printed typographic compositions and sound to explore the evolution of language across the region and its diaspora. Djouini explores ancient women’s scripts, blending computational linguistics, art, and women’s perspectives. The works examine how linguistic characters such as sinographs travel, transform, and mutate across multiple languages, times, and cultures. Typography becomes both a visual and conceptual tool for investigating how typographic characters form spatial relationships and how digital manipulation generates layered meanings.

Thematic concerns: the structural and conceptual connection between pattern and linguistics; language as a system of pattern; visual patterns in written languages; patterns in computational linguistics; pattern in oral traditions and folklore.

Work fits into genres: New Media, Contemporary Art & Linguistics, Global Issues.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

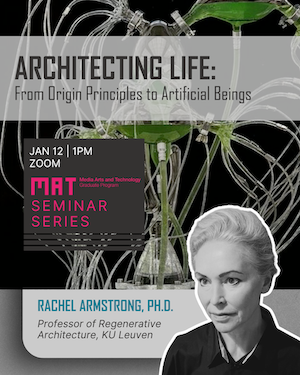

Speaker: Rachel Armstrong PhD, practitioner, theorist, Professor of Regenerative Architecture at KU Leuven

Monday, January 12th, 2026 at 1pm via Zoom

Abstract

My practice is driven by the ambition to design life—a synthetic, bottom-up pursuit of liveliness itself. This talk traces a research trajectory that builds on origin-of-life sciences, orchestrating three core agencies to prefigure a future artificial life form: AI ontologies (‘soft’ ALife) to model intelligence; electroactive microbes (‘wet’ ALife) to provide a metabolic, communicative ‘flesh’; and dynamic mineral substrates to form resilient, structural ‘bones.’ Such a pursuit demands a foundational ethic: to take full responsibility for the designed entities. Following Latour, this means learning to love our monsters. The ambition is not spectacle, but to forge mutualistic relationships with constructed life—relationships as demanding and creative as those with nature. The result is architectures that counter an extractive industrial logic by contributing through their day-to-day existence to the overall liveliness of the world. Expanding the potential of life is an Earth imperative. While autonomous artificial life remains the horizon, the urgent work is to build its ethical, material, and intellectual prerequisites. I will present this work, arguing for a future where our built environment is not just sustainable, but constitutively alive.

For further reading, you can download a PDF of her book "Liquid Life: On Non-Linear Materiality".

Bio

Professor Rachel Armstrong, PhD, is a practitioner and theorist whose work establishes a new trajectory for architecture: the design of artificial life. With a First Class Honours in medical science (University of Cambridge) and a PhD in Architecture (UCL), her practice is a synthetic pursuit of liveliness grounded in origin-of-life sciences. Her designs and prototypes are vibrant, metabolic systems that host, support, and propagate life, transforming architecture's role from creating static spaces to cultivating dynamic, living ecosystems.

Professor Rachel Armstrong's current roles also include:

Project Coordinator: Microbial Hydroponics (Mi-Hy)

Microbial WiFi Project

Exhibitor: Spika

EIC Ambassador

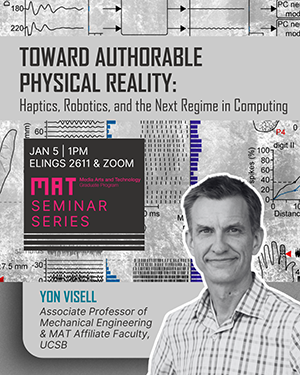

Speaker: Professor Yon Visell, Associate Professor of Mechanical Engineering and MAT affiliate faculty

Monday, January 5th, 2026 at 1pm. Elings Hall room 2611 and via Zoom

Abstract

I will present recent and ongoing work in the RE Touch Lab on emerging haptic and robotic technologies. Modern computing and AI operate at extraordinary speed over vast, high-dimensional data spaces, but their agency in the physical world is sharply constrained. Haptic and robotic systems provide this agency, but with constrained physical output bandwidth: they provide few degrees of freedom, and operate at far slower speeds than is feasible in computational domains. I will describe ongoing research in my group that is directed at alleviating such bottlenecks and at enabling computational systems with greater physical agency. A logical extension of these ideas: computationally authorable physical reality, an unmapped possibility whose territory and implications are compelling, if unclear.

Bio

Visell is Associate Professor of Mechanical Engineering and MAT Affiliate Faculty at the University of California, Santa Barbara. Visell directs the RE Touch Lab, where they pursue fundamental and applied research on the future of interactive technologies, with emphasis on haptics, robotics, and electronics, including emerging opportunities in human-computer interaction, sensorimotor augmentation, soft robotics, and interaction in virtual reality.

Dr. Visell’s research has been generously supported through multiple awards from the National Science Foundation and other government agencies, tech industry companies, and philanthropic foundations. He has published more than 75 scientific works, and served as editor and author of two books on VR, including "Human Walking in Virtual Environments" (Springer Verlag, 2013). His work has received four awards and more than a dozen award nominations at prominent academic conferences. Dr. Visell is a recipient of the National Science Foundation CAREER Award (2018), a Hellman Family Foundation Faculty Fellowship (2017), and a Google Faculty Research Award (2016).

Dr. Visell spent more than five years in industry working at technology companies. He was the digital signal processing developer at Ableton from 2001 to 2003, where he wrote algorithms that have shaped music produced by artists ranging from The Roots to Deadmau5. Previously, he was a research scientist working on speech recognition at Vocal Point (now part of Nuance, makers of the Siri voice assistant). Prior to that, he designed auditory displays for underwater sonar at ARL, Austin, Texas. Dr. Visell later worked in interactive art, design, and robotics research at the University of the Arts Zurich, at FoAM, Belgium, at the Interaction Design Institute Ivrea, and at the art-architecture-technology group Zero-Th, which he co-founded in 2004. His creative works and activities have been presented at cultural venues including Ircam / Centre Pompidou (Paris, France), SIGGRAPH, Phaeno Science Center (Wolfsburg, Germany), La Gaité Lyrique (Paris), the Oboro Center (Montreal), and the Biennale of Design St. Etienne (France).

Friday, December 19th, 2025

12pm PST

Zoom only

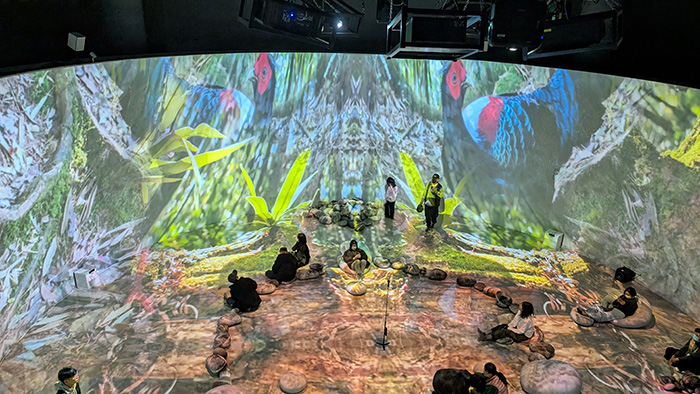

Abstract

This thesis investigates how musical compositional thinking can be translated into immersive audiovisual environments, focusing on cross-platform strategies applicable to both VR and the AlloSphere. Using The Golden Boy as a large-scale case study, the research examines how techniques such as theme-and-variation, gestural development, timbral transformation, and spatial counterpoint can function as the structural foundations of immersive visual music. The project further tests whether the GRAIN and SCOPE diagrams, originally developed for two-dimensional audiovisual analysis, can operate as practical compositional and analytical tools in multidimensional spatial media.

The work was implemented and evaluated across two contrasting platforms: the Apple Vision Pro (VR) and the three-story AlloSphere, whose 26-projector, 60-speaker infrastructure introduces unique constraints of scale, stereoscopy, and spatial audio. Technical and perceptual testing revealed how architectural form, viewing position, stereoscopic thresholds, and multi-channel spatialization significantly reshape the behavior of abstract audiovisual material. These findings suggest that musical ideas such as fragmentation, temporal acceleration and deceleration (accelerando/ritardando), gestural articulation, and textural development require re-interpretation when translated into immersive space, where depth, motion, and scale function as primary formal parameters.

The thesis proposes a composition-based, cross-platform framework for immersive visual music that integrates musical structure with spatialized image–sound relationships. The outcomes contribute to immersive media, visual music, and transdisciplinary composition by offering a musically informed methodology capable of maintaining coherence across heterogeneous immersive environments.

Tuesday, December 2nd, 2025

1pm PST

Elings Hall room 1605 and via Zoom

Textile craftsmanship is a complex and powerful form of making that, for centuries, has developed and refined methods for manipulating fibers, producing textiles with distinct structures, functions, and cultural significance. Digital fabrication tools have long been intertwined with textile production, particularly in crafts well-suited for automation. In this dissertation, I adopt a craft-centered perspective to explore how digital fabrication can expand the expressive potential of handmade textile practices that remain too complex for machines to mimic. I present a series of works that investigate how computational tools and manual methods can operate together to (1) produce new forms of handmade textile crafts, (2) inform new computational design tools for textile crafts, and (3) create more accessible and personally meaningful entry points into computational making for crafters, youth, and creative learners. My work is evaluated with craft enthusiasts, crochet practitioners, and high school students through a series of workshops and the design and production of physical textile artifacts. The findings suggest that it is possible to integrate computational tools in a way that respects, follows, and extends traditional techniques while enabling innovative artifacts, characteristics, and functionality.

Speaker: Joel Jaffe, MAT MSc student

Monday, November 24th, 2025 at 1pm PST. Elings Hall room 2611 and via Zoom

Abstract

Digital audio programming is both niche and ubiquitous. It spans technology that brings the entire history of recorded music to your fingertips (Spotify), enables personal wireless transmission with sleek hardware (Apple AirPods), and even enshrines the sounds of analog (Neural Amp Modeler). It is an art and science practiced by relatively few, yet touches the daily lives of us all. Mirroring the nature of analog audio processors, modularity and composability are hallmarks of the field. Usage of popular Digital Audio Workstations (DAWs) is synonymous with the use of plugin software extending their capabilities, and options for these “processors” are as numerous as mobile apps, powered by the accessibility of their development. In contrast, development of audio software for embedded systems retains a much higher barrier to entry, despite the recent convergence of desktop and embedded architectures and major overlap of design principles for audio software on both platforms. Additionally, audio programming for the web remains a frontier as the Web Audio API has only recently solidified. By identifying the common subset of functionality and rules required for an encapsulated audio processor to run in desktop, embedded, and web environments, a plugin standard can be created that enables accessible development and deployment to all of these targets from a unified interface, enabling an ocean of developer creativity to be unleashed for all to hear.

Bio

Joel A. Jaffe is an MSc student with emphases in digital audio, electric instruments, and embedded systems. His off-campus pursuits revolve around rock music performance and production, as well as the maintenance, repair, and modification of instruments. His research investigates practical digital tools for electric instrumentalists and their development as meaningful interaction between music academia and practicing musicians.

Speaker: Tomás Henriques

Monday, November 17th, 2025 at 1pm PST in room 2611 Elings Hall and via Zoom

Abstract

This talk will delve into creative ideas to make new interfaces for the growing field of human-computer interaction.

The SPR1NG controller will be showcased using games specifically developed for it. Issues such as interface ergonomics, player interactivity, and user experience will be explored. Efforts, challenges, and lessons learned in trying to bring a new technology into a commercial product will be discussed.

Bio

Tomás Henriques is a composer, researcher, and full professor at SUNY Buffalo State University. His research focuses on new interfaces for musical expression, spatial audio design, human-computer interaction, and computer-aided vision.

Dr. Henriques has garnered international recognition, highlighted by a First-Place award at the 2010 Guthman Musical Instrument Competition for the invention of the “Double Slide Controller.” His innovations also include the multi-patented “Sonik Spring” technology, the “See-Through-Sound” initiative—an international project leveraging computer-aided vision to assist the visually impaired—and his collaboration with Yamaha engineers to develop a 52.1 surround mixing system installed in Ciminelli Hall at Buffalo State.

Dr. Henriques is the Founder of CoreHaptics, a startup technology company specializing in mechatronic controllers.

Friday, September 5th, 2025

9am PDT

Elings Hall room 2611 and via Zoom

Since the 1950s, computer artists have used generative systems to explore creative agency in collaboration with autonomous processes that exhibit emergent behavior and self-organization. In the 1990s, artistic and scientific investigations into life-like computation introduced aesthetic frameworks inspired by biological growth and digital morphogenesis. More recently, advancements in materials and fabrication technologies have made translating generative processes into the physical domain increasingly feasible.

This dissertation presents findings from my artistic practice, conceptualized as emergent expression, in the context of distributed creative agencies involving computational and material processes. These processes can display novel behaviors that influence the form in unexpected ways. The research encompasses both virtual and physical domains, investigating how generative systems can be effectively integrated into digital fabrication and craft workflows. Through practice-led research and collaborative projects, I develop computational complex systems informed by artificial life aesthetics, as well as new fabrication methodologies tailored for craft applications. These systems result in hybrid prototypes that demonstrate how generative form, material behavior, and human intervention can be combined into cohesive artworks.

This investigation centers emergence as a creative construct, builds on my art practice-based research, and leads to the development of novel computational tools, techniques, and workflows that negotiate human creative agency.

Wednesday, September 10th, 2025

1pm PDT

Elings Hall room 2003 and via Zoom

Abstract

The concept of "flow" describes the state and the experience of total absorption that fuels creativity, deep concentration, and optimal performance. Music is one of its most powerful triggers. While flow in solo performance has been widely studied, the elusive dynamics of flow in group performance remain underexplored.

My project, Flow Sphere, aims to bridge this gap by combining neuroscience and media art. Using EEG and ECG signals captured with a MUSE 2 headband, the system visualizes brain and heart activity in immersive 3D forms inside MAT’s AlloSphere. EEG signals unfold as spiraling "flow streams" like silky ribbons, while ECG pulses shimmer as vibrating stars. These signals are also sonified, creating a multi-sensory biofeedback environment where performers can perceive their own mental states.

A user study compares solo and group performance sessions, integrating physiological signals with self-report flow questionnaires to assess the performers' experience. Preliminary results suggest that successful ensemble music performance enhances the intensity of individual flow. Beyond offering an immersive experience, this project demonstrates how real-time biofeedback can link subjective reports and objective neural data. Future iterations will expand to real-time sound synthesis, multi-headset synchronization, and adaptive machine learning to refine the analysis of musical flow.

Friday, June 13th, 2025

2pm PDT

Elings Hall room 2003 and via Zoom

Abstract

Most of the world’s megacities are located in the Low Elevation Coastal Zone (LECZ), which covers 2% of the world’s total land area and holds 11% of the global population. The number of people living in the LECZ increased by 200 million from 1990 to 2015 and is projected to reach 1 billion by 2050. These areas are especially vulnerable to the effects of coastal processes such as sea level rise, coastal erosion, and flooding, exacerbated by warming global climates. The goal of coastal sciences is to characterize these processes to inform coastal management projects and other applied use cases. Coastal environments are exceptionally dynamic: several marine and land processes occur on a range of spatio-temporal scales and influence the hydrological and morphological profile to varying extents across discrete coastal sections. The spatio-temporal sampling requirements for characterizing coastal processes, coupled with the volatile nature of the area, make in-situ sampling difficult and traditional remote sensing techniques ineffective. Therefore, bespoke remote sensing solutions are required.

Our goal is to aggregate knowledge and review modern practices that pertain to remote sensing of coastal environments for oceanographic, morphological, and ecological field research in order to provide a framework for developing low-cost integrated remote sensing systems for coastal survey and monitoring.

A multidisciplinary approach that incorporates biological, physical, and chemical data gathered through a combination of remote sensing, ground truth observations, and numerical models subject to data assimilation techniques is currently the optimal method for characterizing coastal phenomena. Data fusion algorithms that integrate data from disparate sensor types deployed in conjunction are used to produce more accurate and detailed information. There is an international network of remote sensing systems that deploy a variety of sensors (e.g., radar, sonar, and multispectral image sensors) from a range of platforms (e.g., spaceborne, airborne, shipborne, and land-based) to compile robust open-source datasets that facilitate coastal research. However, models are currently limited by a lack of data pertaining to particular environmental parameters, and the extent and regularity of high-resolution data collection projects. The scientific coastal monitoring and survey network must be expanded to address this need. Recent technological advancements—including heightened accuracy and decreased footprint of sensors and microprocessors, increased coverage of GNSS and internet services, and implementation of machine learning techniques for data processing—have enabled the development of scalable remote sensing solutions that may be used to expand the global network of environmental survey and monitoring systems.

Monday, June 9, 2025

10am PDT

Elings Hall room 2611 and via Zoom

Abstract

The integration of Artificial Intelligence (AI) into mass cultural production has brought its underlying limitations (stereotypical representations, distorted perspectives, and misinformation) into the spotlight. Many of the risks of AI disproportionately impact minority cultural groups, including Black people. Now that AI is embedded in institutions like education, journalism, and museums, it is transforming how we access and reproduce sociocultural knowledge, while continuing to exclude or misrepresent many aspects of Black culture, if they are included at all. To reimagine AI systems as tools for meaningful and inclusive creative production, we must look beyond conventional technical disciplines and embrace a radical, decolonized imagination. I aim to use Afrofuturism, a methodological framework rooted in Afrodiasporic visions of the future and grounded in critical engagement with race, class, and power, as a rich foundation for creating more equitable and imaginative technological innovations.

As a starting point, I build on existing calls to develop technology that does more than mitigate harm—a space I define as liberatory technology. Within this space, I identify liberatory collections—community-led repositories that amplify Black voices—as powerful models of data curation that empower communities historically marginalized by traditional AI and archival systems. My survey of fourteen such collections reveals innovative, culturally rooted approaches to preserving and sharing knowledge. I use these findings to argue for consent-driven training models, sustained funding for community-based initiatives, and the meaningful integration of Black histories and cultures into AI systems.

Expanding on this foundation, I have conducted preliminary interviews with Afrofuturist data stewards—Black technologists who carefully collect, curate, store, and use data related to Black speculative projects. My early analysis shows that these creators approach AI with a strong sense of cultural responsibility—not only to critique it, but to retool it in service of Black life. They navigate this historically fraught technological space by reclaiming tools once used for harm and repurposing them toward Black joy, rest, and healing. Through Afrofuturist perspectives, they reimagine historical data and artifacts, envisioning futures grounded in possibility rather than oppression.

UCSB | Tuesday, June 3 | 5-8PM

California NanoSystems Institute, Elings Hall (2nd Floor)

Research, exhibitions, demos.

Explore the latest work of MAT’s research labs in their full splendor and experience the unique and justly famous AlloSphere.

Paid parking is available in lot 10 (adjacent to Elings Hall)

SBCAST | Thursday, June 5 | 6-10PM (Live performances begin 8PM)

Santa Barbara Center for Art, Science and Technology (531 Garden Street)

Installations, performances, urbanXR

Enjoy installations, performances, and urbanXR in a unique and festive setting at Santa Barbara’s extraordinary SBCAST compound.

This second event takes place as part of Santa Barbara’s “First Thursday,” highlighting the collaboration of the MAT Alloplex Studio/Lab@SBCAST with the SBCAST and the Santa Barbara creative community. Live music performances (from 8-10 p.m.) will feature the MAT Create Ensemble. Large-scale projection-mapping will be presented after dark.

Paid parking is available in city lots 10 and 11.

Exhibition Video

A list of the exhibits can be found here: show.mat.ucsb.edu.

Deep Cuts are heard in boardrooms and whispered through backdoor channels. They echo in creative, thought-provoking work that’s been both cherished and cast aside. They’re felt by those with eyes on the margins and hands in the process—those shaping what’s next from the edge.

Existing between abstraction and lived experience, Deep Cuts is a warning, a wound, a hidden gem. It’s a call to attention.

MAT is a deep cut.

Media Arts and Technology is a graduate program at UC Santa Barbara that bridges the humanities and sciences. We design and deploy cutting-edge tools to create work that lives in the gradients between and beyond disciplines. Our practices span fabrication, musical composition and performance, computer graphics, immersive installation, and more. We harness the power of digital technology to produce bold, experimental, and defiantly original works that cut deep in every sense of the word. Creative and critical, from the edge of campus and the cutting edge of ideas, our fresh light illuminates the deep cuts of our complex world.

This exhibition is our reveal. It surfaces what’s often unseen: the quietly radical, the structurally complex, the playfully subversive. Here, algorithms dance, light speaks, and code performs. In our hands, technology is not just a tool; it’s a creative co-conspirator shining light into the unknown.

Deep Cuts is both a reckoning and a celebration. It honors what has been lost or overlooked, refuses to be silenced, and boldly carves bright new pathways through the unknown.

"There is a crack, a crack, in everything. That’s how the light gets in."

- Leonard Cohen

Both End of Year Show (EoYS) events will showcase our students' cutting-edge research and new media artworks, as well as work from our MAT courses.

Sabina Hyoju Ahn

Alejandro Aponte

Sam Bourgault

Emma Brown

J.D. Brynn

Deniz Caglarcan

Pingkang Chen

Payton Croskey

Ana Cárdenas

Colin Dunne

Diarmid Flatley

Devon Frost

Yuehao Gao

Amanda Gregory

Joel A. Jaffe

Nefeli Manoudaki

Ryan Millett

Erik Mondrian

Megumi Ondo

Lucian Parisi

Iason Paterakis

Weihao Qiu

Marcel Rodriguez-Riccelli

Jazer Sibley-Schwartz

Mert Toka

Ashley Del Valle

Shaw Yiran Xiao

Karl Yerkes

Emilie Yu

Anna Borou Yu

Yifeng Yvonne Yuan

See the article in UCSB's news magazine "The Current" → MAT end of year show fuses art and engineering.

Monday, June 2nd, 2025

9:30am PDT

Elings Hall room 2611 and via Zoom

Abstract

Visual artificial intelligence systems increasingly shape how images are perceived, interpreted, and created, prompting new questions about the design, transparency, and experiential nature of machine vision. This thesis investigates experiential AI, an approach grounded in perceptual experience, interpretability, and interaction. Drawing from machine learning, human-computer interaction, and computational media arts, it explores how visual AI can be made not only technically capable but also perceptually meaningful and socially intelligible.

At the core of this inquiry is the development of design strategies that reveal and structure the internal processes of image analysis and synthesis. These strategies treat visual computation as a site of creative and conceptual engagement, emphasizing interpretability through multimodal and interactive systems.

Through two case studies, the thesis demonstrates how users can engage directly with the perceptual mechanisms of AI. The first focuses on visualizing the representational structures within neural networks as spatial environments, facilitating intuitive understanding of how visual features evolve. The second introduces gaze-informed conditioning techniques for generative systems, using perceptual signals to guide and evaluate image synthesis.

Together, these investigations propose a broader research direction for interactive visual computation, one that foregrounds transparency, user interpretation, and perceptual alignment. This work contributes to the growing field of explainable and human-centered AI by demonstrating that visual models can be both expressive and interpretable. It proposes new possibilities for designing visual AI systems that are not only high-performing, but also capable of reasoning about their outputs in ways that support creativity, insight, and trust.

Speaker: George Legrady

Monday, May 19th at 1pm PDT via Zoom

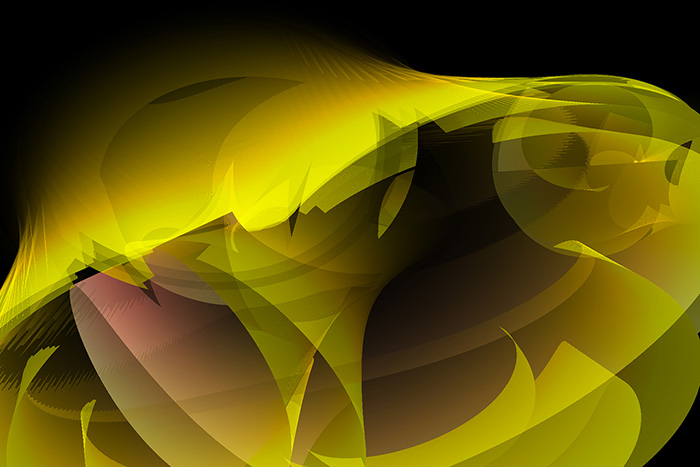

George Legrady is an interdisciplinary digital media artist, author, researcher and Distinguished Professor in the Media Arts & Technology Graduate program at the University of California, Santa Barbara where he directs the Experimental Visualization Lab. The overall focus of his research and practice is based on the study of how image-generating technologies (camera, computer imaging systems, software) inadvertently redefine the data they process, and how this affects the content and meaning of the images, objects, and time-based media that these image-generating machines produce.

Legrady belongs to the first generation of photographic-based artists to integrate computational processes in the mid-1980s for creating “Born-Digital” visualizations. His current artistic and research projects explore algorithmic processes for photographic imaging and data visualization through semantic categorization and self-organizing systems, interactive computational-based art installations, and mixed-realities narrative development. A key focus is the creative potential of such technologies for aesthetic coherence and expression.

Legrady has held previous academic appointments at University of Western Ontario (Photography, 1977-1981), University of Southern California (Photography and computer art, 1984-1988), San Francisco State University (Conceptual Design / Information Arts, 1989-1997), Merz University of Visual Communication, Stuttgart (Digital media, 1995-2000) and visiting faculty positions at North Texas State University, Denton, Texas (Photography, 1976), Nova Scotia College of Art & Design, Halifax, (Photography, 1979), California Institute of the Arts (Photography, 1982-1984), UCLA (Photography, 1983; Digital media, 1998), Hungarian Academy of Fine Arts, Budapest (Intermedia, 1994), Fellow at the Institut des Mines, ParisTech (Diaspora Lab, 2017, 2018), and University of New South Wales, Sydney (iCinema Center for Interactive Research lab, 2018).

His artistic practice and research have been funded by a John Simon Guggenheim Fellowship (2016), a Graham Foundation Advanced Institute for the Fine Arts grant (2019), the Robert W. Deutsch Foundation (2011-2014), the National Science Foundation (IIS, 2011, ASC 2012), Creative-Capital Foundation Emerging Trends (2003), the National Endowment for the Arts (1996), the Daniel Langlois Foundation for the Arts, Science and Technology (2000), the Canada Council for the Arts (multiple), and the Ontario Arts Council (multiple). He has also been awarded university research grants at the University of Southern California, San Francisco State University, and UC Santa Barbara.

Legrady has exhibited his works at major museums internationally with over 60 solo exhibitions, and they are in permanent collections at the Centre Pompidou, Paris (2024); Art Gallery of Ontario, Toronto (2021); Los Angeles County Museum of Art (2009, 2019); Santa Barbara Museum of Art (2019); ZKM, Centre for Art & Technology (1996, 2016); 21c Museum & Hotel, Cincinnati (2013); San Francisco Museum of Art (2012); D.E.Shaw & Co Consulting, NYC (2008); Corporate Executive Board, Arlington (2008); Pro Ahlers Art Foundation, Hanover, Germany (2008); Whitney Museum of American Art, NYC (2005); McIntosh Gallery, Western University, London (2020); Centre Gantner, Belfort, FR (2005); American Museum of Art at the Smithsonian, Washington (1987); Canada Council Art Bank (1982, 1990); National Galleries of Canada, Ottawa (1986); Musée d’art contemporain, Montreal (1984); Museum London, Canada (1980).

He has completed three data-based commissions: “Making Visible the Invisible”, Seattle Public Library (2005-present), “Kinetic Flow”, Santa Monica / Vermont Station, Hollywood Red line, Los Angeles Metro Rail (2006), and “Data Flow”, Corporate Executive Board, Arlington VA (2007-2008).

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Thursday, May 15th, 2025

9:30am

Elings Hall room 2611 and via Zoom

Abstract

The advent of generative AI has introduced unprecedented creative capacities in image-making, accompanied by a parallel expansion in automation. Yet, because these systems are trained on vast datasets calibrated for statistical normativity, they risk engendering a homogenization of visual culture—flattening aesthetic diversity, marginalizing visual histories, and diminishing creative complexity. Furthermore, the considerable computational resources and specialized technical competencies required for meaningful engagement with these models pose significant barriers to access and customization, further constraining their creative potential.

This dissertation explores how computational creatives can address these challenges by reclaiming agency within generative AI systems through artistic and technical interventions that involve repurposing and combining different models and designing novel computational systems. It proposes that creative authorship resides not only in the final outputs but also in the systems configured to produce them. Rather than treating generative AI as a fixed tool, this research approaches AI models as elements within flexible systems, comprising modular components, datasets, and workflows, that can be reconfigured based on aesthetic objectives, artistic intentions, and cultural contexts.

Grounded in a practice-led systems-oriented methodology, the research investigates three main aspects: (1) applying AI models in art projects to meet creative computational needs; (2) extending the creative affordances of AI models by designing hybrid code–AI workflows and real-time interactive experiences; and (3) mitigating the visual culture gap between AI-generated imagery and advanced photographic aesthetics through systematic model fine-tuning, feature engineering, dataset curation, and the composition of cross-model AI systems.

Through these practices, the research demonstrates how computational artists can act as orchestrators of generative AI systems—building custom datasets with AI models, combining multiple models to solve complex tasks, and designing novel interfaces to reshape the user experience—thereby shaping and diversifying aesthetic outcomes beyond the constraints of current AI image systems.

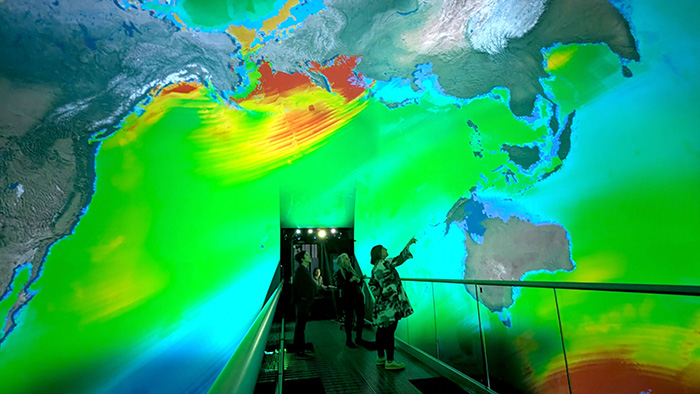

The event will take place on May 10, 2025 at 10am.

Professor Kuchera-Morin and fellow presenter Frederick Janka, Executive Director of the Carolyn Glasoe Bailey Foundation, will discuss a selection of artworks and the connections between them and current and upcoming artworks and projects at the AlloSphere and the Museum of Contemporary Art Santa Barbara.

Sketches for Sensorium showcases core elements of the late environmental artist Newton Harrison’s (1932 - 2022) long-term project, Sensorium for the World Ocean. It will premiere at the AlloSphere as a satellite to the UC Irvine Beall Center for Art and Technology’s forthcoming exhibition, Future Tense: Art, Complexity, and Uncertainty, produced in partnership with the 2024 Getty PST Art: Art and Science Collide initiative. The installation will incorporate immersive audio and visual scientific climate and ocean health data provided by the Ocean Health Index of the Halpern Lab at the Bren School of Environmental Science & Management.

Sketches for Sensorium is a project of the Center for the Study of the Force Majeure in collaboration with Virtual Planet Technologies, Almost Human Media, and the AlloSphere Research Group. It will premiere with an original spatialized composition and an interactive data world, following Newton’s wish to impart a sense of hope to audiences.

www.independent.com/2024/09/11/sketches-of-sensorium-part-of-getty-pst-art-at-uc-santa-barbara

allosphere.ucsb.edu/research/sketches_of_sensorium/2024.html

Speaker: Tobias Höllerer

Monday, May 12th, 2025. Elings Hall room 1601 and via Zoom

Abstract

In this talk, I will discuss the trajectory of technology adoption over the past 30 years, as seen through the lens of milestones in my field, Human-Computer Interaction. We have witnessed amazing technological advancement, especially over the past few years. At the same time, I think it is fair to say that humans are in danger of diminishing themselves through their ever-increasing reliance on automation. I will draw on research from students in the Four Eyes Lab (for research in Imaging, Interaction, and Innovative Interfaces) and report from the overall research landscape in the field of Human-Computer Interaction, to outline a few things the current generation of the technologically privileged and future gatekeepers of technology (i.e. you) could do to keep human creators and practitioners in charge and on a self-improving pathway.

Bio

Tobias Höllerer is Professor of Computer Science and affiliated faculty in Media Arts and Technology at UCSB. He directs the “Four Eyes” Laboratory, conducting research in the four I's of Imaging, Interaction, and Innovative Interfaces. His research spans several areas of HCI, real-time computer vision, computer graphics, social and semantic computing, and visualization. He obtained his PhD in computer science from Columbia University in 2004. In 2008, he received the US National Science Foundation’s CAREER award for his work on “Anywhere Augmentation”. This work enabled seamless mobile augmented reality and demonstrated that even passive use of AR can improve the experience for subsequent users. He served as a principal investigator on the UCSB AlloSphere project, designing and utilizing display and interaction technologies for a three-story surround-view immersive situation room. He co-authored a textbook on Augmented Reality and has (co-)authored over 300 peer-reviewed journal and conference publications in areas such as augmented and virtual reality, computer vision and machine learning, intelligent user interfaces, information visualization, 3D displays, mobile and wearable computing, and social and user-centered computing. Several of these publications received Best Paper or Honorable Mention awards at esteemed venues including IEEE ISMAR, IEEE VR, ACM VRST, ACM UIST, ACM MobileHCI, IEEE SocialCom, and IEEE CogSIMA. He is a senior member of the IEEE and IEEE Computer Society and member of the IEEE VGTC Virtual Reality Academy. He was named an ACM Distinguished Scientist in 2013.
He served as an associate editor for the journal IEEE Transactions on Visualization and Computer Graphics and has taken on numerous organizational roles for scientific conferences, such as program chair for ACM VRST 2016, IEEE VR 2015 and 2016, ICAT 2013, IEEE ISMAR 2010 and 2009, general chair of IEEE ISMAR 2006, and as a past member and current vice chair of the steering committee of IEEE ISMAR.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speaker: Nicholas Dogris, Ph.D., BCN, QEEG-D

Monday, May 5th, at 1pm PDT via Zoom

Abstract

This talk will cover the clinical use and creative implications of seven forms of neurostimulation, including Pulsed Electromagnetic Field stimulation (pEMF), Transcranial Alternating Current stimulation (tACS), Transcranial Direct Current stimulation (tDCS), Transcranial Random Noise stimulation (tRNS), Transcranial Advanced Pink & Brown Noise stimulation (tAPNS/tABNS), Transcranial Photobiomodulation (tPBM) and Transcranial Vagus Nerve stimulation (tVNS).

Bio

Nicholas J. Dogris, Ph.D., BCN, QEEG-D, is a highly accomplished psychologist, neuroscientist, and neurotechnology innovator with over 25 years of experience in the field of EEG-based neurotherapy, having developed the first synchronized QEEG (quantitative electroencephalography) system. He is the CEO and co-founder of NeuroField, Inc., a pioneering company specializing in advanced neurostimulation and neuromodulation technologies. In addition to his work in research and development, Dr. Dogris is a licensed psychologist who operates Neurofield Neurotherapy, Inc. in Santa Barbara, California, alongside his wife, where he integrates cutting-edge neurotherapy techniques into clinical practice. His joint venture with Dr. Thompson, The School of Neurotherapy, aims to bring high-quality, comprehensive training to the field of Neurotherapy through the three pillars of Education, Technology, and Application.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

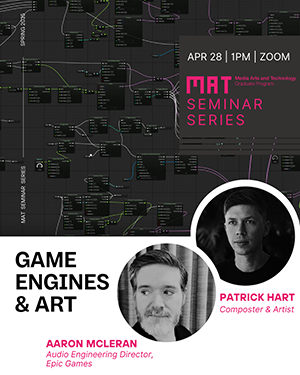

Speakers: Aaron McLeran and Patrick Hart

Monday, April 28, at 1pm PDT via Zoom

Abstract

This colloquium will present a live performance, featuring acoustic instruments driving real-time procedural systems powered by Unreal Engine's MetaSounds, Niagara, Materials, and MetaHuman technologies.

Following the short performance, we will open a broader discussion on the creative potential of game technology in contemporary digital media. The discussion will explore how features originally designed for games can be reimagined to produce expressive instruments, rich interactivity, and dynamic real-time visuals. As game engines become both more comprehensive and more accessible, they offer artists new opportunities for distribution, audience exchange, and interdisciplinary exploration.

This talk invites artists, engineers, and researchers to consider the creative implications of working with game engines beyond their original domain.

Bios

Aaron McLeran is the audio engineering director at Epic Games, working with a team developing all the audio tech in Unreal Engine. His team supports the games Epic makes as well as games made by Unreal Engine licensees and the community. His primary goal at Epic is to develop powerful interactive and procedural audio and music creation tools in a mainstream game engine that work both at the scale of independent artists and small teams as well as AAA, large-scale game development. He has a background in sound design, composition, music performance, and physics. He has worked on a variety of games at different game companies, plays jazz trumpet, and used to be a high school teacher. He graduated from MAT in 2009.

Patrick Hart is a musician in Los Angeles who explores the intersection of procedural music, interactive art, and games. His music has received press in The Guardian, Billboard, and The Sunday Times. Patrick is currently working on a new music creation game at Aria Labs. In 2024 he received an Epic MegaGrant to build Unreal audio tools, and spoke about procedural music at Unreal Fest and GameSoundCon. Patrick's film, TV, and commercial credits include ESPN, HBO, Microsoft, Nike, and others.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Tuesday, April 29th, 2025

3pm PDT

Elings Hall room 1601 and via Zoom

Abstract

Digital fabrication technologies, such as 3D printers and computer numerical control (CNC) milling machines, have the potential to support manual craft production because they are designed for low-volume manufacturing. However, off-the-shelf digital fabrication workflows primarily focus on automation, repeatability, and simulation. These approaches often result in fully automated processes designed for highly processed materials and material-agnostic machine toolpath generation. They also leave little room for manual intervention and tend to conceal material and machine knowledge within computational abstractions. I propose to reframe interactions with digital fabrication machines to be more like handmade crafts. This perspective is important for the future of digital fabrication because working at the level of material and machine behaviors is necessary for the viability of digitally fabricated products. It can also broaden the expressive possibilities of digital fabrication practices and can support the development of unique, meaningful, and long-lasting products by promoting values of customization inherent to craft. This approach motivates three research questions: (1) How can fabrication workflows integrate manual and computational processes? (2) How can direct control of digital fabrication machine properties support new design spaces? (3) How can a digital fabrication system make material properties discoverable rather than hidden in computational abstractions? In this dissertation, I investigate these questions through the development of three digital fabrication systems. CoilCAM enables craftspeople to define the machine toolpath of a clay 3D printer mathematically. Millipath supports the creation of carved textures with a CNC-milling machine through the design and parametrization of machine movements. WORM is an embodied system that supports human collaboration with a robot arm through manual craft actions. 
I conclude by discussing the implications of an action-oriented framework for digital fabrication and offer guidelines for system development that address software structure, design capabilities, human engagement with machines and materials, and forms of interdisciplinary approaches for development and evaluation.

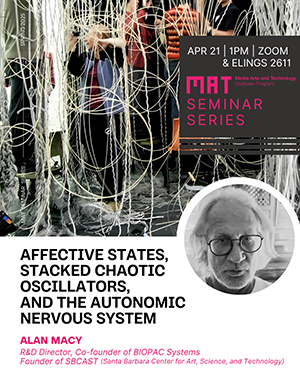

Speaker: Alan Macy

Monday, April 21, at 1pm PDT in Elings Hall room 2611 and via Zoom

Abstract

The presentation focuses on the nature of affect and its relationship to emotion and sensed physical phenomena occurring in the body. Also considered is the relationship between organ systems in the context of autonomic nervous system behavior. Aspects of chronobiology are introduced. Conjecture regarding measures of systemic wellness and resilience will be ventured. If circumstances allow, there will be a demonstration of what happens when one eats a slice of lemon.

Bio

Alan Macy is the R&D Director and co-founder of BIOPAC Systems, and an applied science artist. His arts/research efforts focus on sensory processing, physiological expressions and translations.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speaker: Jonathan Schooler

Monday, April 14, 2025. 1pm PDT, Elings Hall room 2611 and via Zoom

Abstract

Professor Schooler is the UCSB Distinguished Professor of Psychological and Brain Sciences and director of the META Lab and Center for Mindfulness and Human Potential. This talk will provide a whirlwind overview of assorted projects and theories that I have been working on that encourage openness. The first portion will consider two interventions we have been investigating for fostering openness at the state and trait levels. First, a project demonstrating the impact of critically acclaimed short animated film clips on states of curiosity, creativity, intellectual humility and conceptual expansiveness; and second, a mindful curiosity app that we have been developing to foster changes in trait curiosity. The other half of the talk will explore several theories of the mind that encourage opening our perspective to the nature of consciousness and its relationship to the physical world. This includes a neurocognitively grounded theory of consciousness that proposes that the mind can be conceived of as entailing a hierarchically organized set of nested observer windows (NOWs) with each potentially entailing its own stream of consciousness, and a philosophically grounded theory of time, which proposes that our current account of time may better accommodate conscious experience, if it is expanded to include three dimensions: objective time, subjective time and alternative time. Potential ways of visually depicting these theories will also be considered.

Bio

Jonathan Schooler, PhD, is a Distinguished Professor of Psychological and Brain Sciences at the University of California Santa Barbara, Director of UCSB’s Center for Mindfulness and Human Potential, and Acting Director of the Sage Center for the Study of the Mind. He received his Ph.D. from the University of Washington in 1987 and then joined the psychology faculty of the University of Pittsburgh. He moved to the University of British Columbia in 2004 as a Tier 1 Canada Research Chair in Social Cognitive Science and joined the faculty at UCSB in 2007. His research intersects philosophy and psychology, including the relationship between mindfulness and mind-wandering, theories of consciousness, the nature of creativity, and the impact of art on the mind. Jonathan is a fellow of several psychology societies and the recipient of numerous grants from the US and Canadian governments and private foundations. His research has been featured on television shows including BBC Horizon and Through the Wormhole with Morgan Freeman, as well as in print media including the New York Times, the New Yorker, and Nature Magazine. With over 270 publications and more than 45,000 citations, he is a five-time recipient of the Clarivate Analytics Web of Science™ Highly Cited Researcher Award and is ranked by Academicinfluence.com among the 100 most influential cognitive psychologists.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

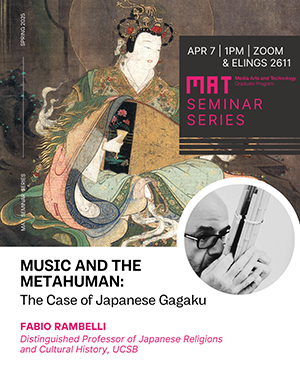

Speaker: Fabio Rambelli

Monday, April 7th, 2025. 1pm PDT, Elings Hall room 2611 and via Zoom

Abstract

Professor Rambelli is the Distinguished Professor of Japanese Religions and Cultural History and International Shinto Foundation Chair in Shinto Studies at UCSB. You'll hear about various instances of the metahuman aspects of Gagaku music and Bugaku dances, as well as how this metahuman function impacted the understanding and use of musical instruments and their agency.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speaker: MAT Professor JoAnn Kuchera-Morin

Monday, March 31st, 2025. 1pm Elings Hall 1605 and via Zoom

Abstract

What would it be like if we could generate, control and transform complex information like quantum mechanics or molecular dynamics the way that a composer or artist creates and transforms a work of art? What if we could use our senses to perceive very complex n-dimensional information intuitively and as second nature?

I would like to discuss composing and performing complex systems like quantum mechanics by using the model of music composition and performance. A complex system is an arrangement of a great number of various elements with intricate relationships and interconnections. Such systems are difficult to model and predict. Components of a complex system may appear to act spontaneously, such that predicting the outcome of the complete system at any given point in time may be difficult if not impossible. In this respect, composing music is analogous to building a complex system. The system changes and unfolds over time at many different levels of temporal and spatial dimensions, and the outcome of the total system may not be predictable at various stages of composing the work. For a composer creating these complex systems, the creative process involves what I describe as non-linear leaps of intuition. Could these leaps be quantum phase transitions? Let’s explore the possibilities.

Bio

Composer JoAnn Kuchera-Morin is Director and Chief Scientist of the AlloSphere Research Facility and Professor of Media Arts and Technology and Music at the University of California, Santa Barbara. Her research focuses on creative computational systems, multi-modal media systems content and facilities design. Her years of experience in digital media research led to the creation of a multi-million dollar sponsored research program for the University of California: the Digital Media Innovation Program.

She was Chief Scientist of the Program from 1998 to 2003. The culmination of Professor Kuchera-Morin’s creativity and research is the AlloSphere, a 30-foot diameter, 3-story high metal sphere inside an echo-free cube, designed for immersive, interactive scientific and artistic investigation of multi-dimensional data sets. Scientifically, the AlloSphere is an instrument for gaining insight and developing bodily intuition about environments into which the body cannot venture—abstract higher dimensional information spaces, the worlds of the very small or very large, and the realms of the very fast or very slow. Artistically, it is an instrument for the creation and performance of avant-garde new works and the development of new modes and genres of expression and forms of immersion-based entertainment. JoAnn Kuchera-Morin earned a Ph.D. in composition from the Eastman School of Music, University of Rochester in 1984.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speakers: Michael Fox and Brad Bell

Monday, March 10th, 2025 at 1pm PDT, room 1605 Elings Hall and via Zoom.

Abstract

Only recently has computation fostered profound new ways of designing, fabricating, constructing, and thinking about architecture. While the profession sits at the end of the beginning of this historically transformative shift, it is now possible to look back upon the rapidly maturing landscape of projects, influencers, and tools that have finally begun to catch up with the visionary thinking of the past. A newly-released book, The Evolution of Computation in Architecture, is the first comprehensive overview of the pioneering works, events, and people that contributed to the paradigm shift defined by computation in architecture. Join authors Brad Bell and Michael Fox as they discuss their book – this conversation is sure to inspire students of computation in architecture, as well as researchers and practicing architects to think about how the tools we use and the ways we design our buildings and environments with them can truly impact our lives.

Michael Fox received his Master of Science in Architecture degree with honors from MIT and his undergraduate professional degree in Architecture from the University of Oregon. He has been elected twice as the President of ACADIA (Association for Computer Aided Design in Architecture). Fox founded a research group at MIT to investigate interactive and behavioral architecture, which he directed for 3 years. He has taught at Art Center College of Design, USC, MIT, HKPU, and SCIARC and is a Full Professor at Cal Poly Pomona. Fox’s work has been featured in numerous international periodicals and books and has been exhibited worldwide. He is the author of two previous books on architectural computation. He is a practicing registered architect and directs the office of FoxLin Architects. foxlin.com.

Brad Bell received his Master of Science in Architecture degree from Columbia University Graduate School of Architecture Planning & Preservation and a Bachelor of Environmental Design from Texas A&M. Brad is the former Director of the School of Architecture (2016-2023) and an Associate Professor at The University of Texas at Arlington. He currently directs the Digital Architecture Research Consortium (DARC) at UT Arlington and was a founding member of TEX-FAB (2008-2017). In 2020 Brad was honored by the Texas Society of Architects with the Award for Outstanding Educational Contribution in Honor of Edward J. Romieniec FAIA. Brad is a member of the Board of Directors of The Dallas Architecture Forum and Chairs The Forum’s Lecture Programming Committee. He has previously taught at Tulane University and the University of Colorado. His research focuses on innovative material applications and computational fabrication within the architectural design process. darc.uta.edu.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speaker: Sterling Crispin

Monday, March 3rd, 2025 at 1pm PST via Zoom.

Abstract

The emergence of blockchains as an artistic medium has provided new possibilities for artists working with code. Ethereum and other smart contract blockchains allow artists to publish Turing-complete, self-executing code that permanently stores and processes data on the network. This represents the "World Computer" thesis of crypto—an open, decentralized computational layer—contrasted with the speculative "Casino" that has dominated headlines.

Beyond its reputation as a marketplace driven by hype and speculation, blockchain technology enables autonomous, trustless, and censorship-resistant software, creating a fertile ground for generative and code-based art to thrive onchain. These unique properties offer artists both new creative opportunities and an alternative to traditional gatekeepers, allowing for permanent, self-sustaining artworks that exist independently of any centralized authority.

This talk will explore blockchain as both an economic instrument and a creative medium. We’ll discuss how artists can engage with crypto meaningfully beyond speculation and imagine what the future of onchain generative art might look like as the world accelerates.

Bio

Sterling Crispin (born 1985) is a conceptual artist and software engineer who creates smart contracts, generative art, machine intelligence, and techno-sculpture. Crispin’s artwork oscillates between the computational beauty of nature, and our conflicting cultural narratives about the apocalypse.

Crispin’s artwork has been exhibited in museums and galleries worldwide, including ZKM Karlsruhe, The Mexican National Center for the Arts, The Seoul Museum of Art, Museum of Contemporary Art Denver, The Deutsches Hygiene Museum Dresden, and the Venice Biennale. It has also been published in The New York Times, Frieze, Wired, MIT Press, BOMB, Rhizome, ARTNews and Art in America.

His corporate career as a software engineer and designer has been focused on the AR and VR industry since 2014, at companies like Apple and Snap as well as several startups.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speakers: Olifa Ching-Ying Hsieh, Timothy Wood and Weihao Qiu

Monday, February 24th, 2025 at 1pm PST in Elings Hall room 1605 and via Zoom.

Abstract

The subconscious is where your intrinsic qualities thrive; where seeds of inspiration reside; and where many impulses, emotions, and thoughts are hidden and never expressed. Sometimes they only appear in dreams.

α-Forest is a participatory immersive theater with healing qualities, created by Olifa Ching-Ying Hsieh, Timothy Wood, and Weihao Qiu. The work integrates electronic sound, interactive design, AI imaging technology, Electroencephalography (EEG), and motion tracking to create an immersive meditative journey through the mountain forests of Taiwan and the subconscious mind resulting in interactive real-time co-created content.

At a residency base offered by the Experimental Forest of National Taiwan University, the artists collected unique forest sounds from a mountainous area in central Taiwan, Nantou. They also visited the region’s indigenous tribe and learned about their culture. During a residency in a mountainous region in Xinyi Township of Nantou County, Taiwan, the team members were immersed in the natural energy emanating from Taiwan's mountains and trees, and were also deeply inspired by the indigenous Bunun culture. The Bunun people believe in living in harmony with nature; their everyday life and farming activities are conducted according to the lunar cycle. Through “dream reading” (Taisah in the Bunun language), they explore various signs that connect their dreams to reality. To them, “dreams” are like a bridge that connects one’s inner consciousness to the outside world. The Bunun’s unique “eight-part harmony” is performed by several men huddling in a circle, singing deep sounds that slowly build up, creating a unique sound field with rich vibrations and resonance. This group chanting is a meditative prayer ritual that uses vocal sounds to send spiritual thoughts to the heavens.

This project’s concept and healing experiential design are inspired by this unique cultural experience. Using the immersive technology of the National Taiwan Museum of Fine Arts U-108 SPACE, it constructs a multi-sensory healing experience that resonates in sync with audio-optical frequencies, modulating one’s mind and body with natural energy and embarking on a journey of self-exploration into inner consciousness.

Olifa Ching-Ying Hsieh

Program Coordinator / Interactive Electroacoustic Composer

Timothy Wood

Visual Director / Audiovisual and Embodied Interaction Design

Weihao Qiu

Technical Director / Interactive AI Visual Design

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

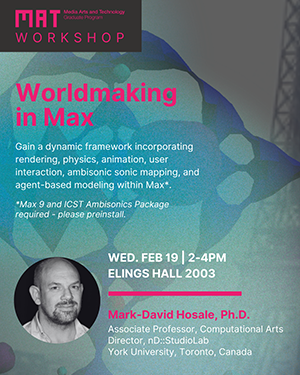

The goal of this workshop is to provide participants with a toolset whereby they can design a world and populate it with transmodal agents with the potential of modelling complex systems.

Dr. Hosale is an Associate Professor in Computational Arts, and the Director of nD::StudioLab at York University, Toronto, Canada.

Speaker: Xavier Amatriain

Monday, February 10th, 2025 at 1pm PST via Zoom

Abstract

Generative AI is rapidly transforming how we create, from generating photorealistic images and composing music to fundamentally altering the product development lifecycle. But as AI takes on more creative tasks, a critical question emerges: Are we witnessing the dawn of a new era of human-AI collaboration, or are we ceding too much control to the machines? This presentation explores the evolving landscape of product thinking in the age of GenAI, drawing lessons from the complex history of AI's interaction with creative fields. We'll delve into recent breakthroughs in generative models, examine their impact on both artistic practices and product development, and discuss how we can design AI-powered tools that augment, rather than diminish, human creativity. Join me as we navigate the GenAI frontier, grapple with the ethical and artistic implications of this powerful technology, and consider the crucial role of human ingenuity in shaping the future of creation.

Bio

Xavier (Xavi) Amatriain returns to UCSB, where he previously taught in the Media Arts and Technology department and led research and development for the Allosphere. His time at UCSB, following his PhD, sparked a deep interest in the potential of technology to empower creative expression. This foundational experience, combined with his research background in AI, has shaped his career trajectory, leading him to explore the broader implications of artificial intelligence across diverse fields. While his early work explored the intersection of AI and media creation, his focus has evolved to encompass the wider landscape of AI and its impact on product development and user experience. His career has included pivotal roles at Netflix, where he helped develop the recommendation algorithms, and LinkedIn, where he led product innovation. Currently, as VP of Product for AI and Compute Enablement (ACE) at Google, he leads teams pushing the boundaries of generative AI, exploring its potential to transform how users interact with technology and how products are conceived and built. He's particularly interested in the evolving relationship between humans and AI, a topic he'll be exploring in today's talk, focusing on the challenges and opportunities presented by generative AI in the context of product development and the future of user interaction.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

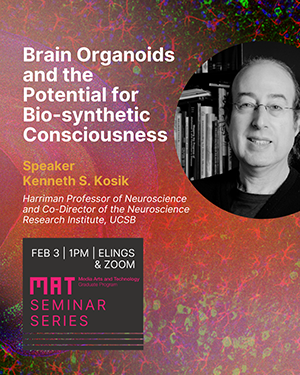

Speaker: Kenneth S. Kosik, M.A. M.D.

Monday, February 3rd, 2025 at 1pm PST

Abstract

Brain organoids are not miniaturized brains. They are prepared by taking skin or blood cells from a person, converting them to stem cells, and differentiating those stem cells into neurons by encasing them in a gel-like material that induces growth in three dimensions. Their size is limited to only a few millimeters because they lack a vascular system to provide nutrients. They make a remarkable but ultimately incomplete diversity of cell types that bear some resemblance to the developmental anatomy of the brain. Most intriguingly, their electrical signals have a high degree of temporal structure at multiple time scales. We have thought about these observations as an intrinsic framework for encoding information in a setting devoid of conventional information in the form of sensory inputs.

Inevitably, the question of brain organoid consciousness has arisen. Given current organoid technology, it does not appear that organoids fit any of the operational definitions of consciousness. Advances in the field will introduce internal processing systems into organoids through statistical learning, closed-loop algorithms, and engram formation, as well as interactions with the external world, and even embodiment through fusion with other organ systems. At that point we will be faced with questions of biosynthetic consciousness, and establishing some well-conceived opinions in advance of that day is a useful exercise.

Bio

Kenneth S. Kosik, M.A. M.D. served as a professor at Harvard Medical School from 1996 to 2004, when he became the Harriman Professor of Neuroscience and Co-Director of the Neuroscience Research Institute at the University of California Santa Barbara. He has conducted seminal research in Alzheimer’s disease genetics and cell biology. He co-authored Outsmarting Alzheimer’s Disease. His work in Colombia on familial Alzheimer’s disease has appeared in the New York Times, BBC, CNN, PBS and CBS 60 Minutes.

Dr. Kenneth Kosik UCSB Commencement Speech 2016

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

The Glass Box Gallery is open Monday - Friday from 9am to 5pm.

Join us at our opening reception on Thursday, January 16th from 5:30pm to 8:30pm, featuring live performances by our own CREATE Ensemble, Yifeng Yvonne Yuan, and Lucian Parisi.