Tuesday, June 11th, 2024

10 - 11:30am PDT

Experimental Visualization Lab (room 2611 Elings Hall)

Abstract

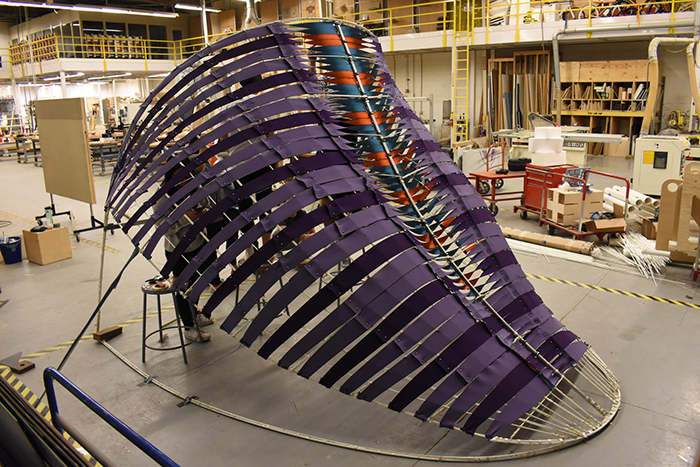

The complexity of meshing physical design requirements with data-driven logic has long been the focus and vexation of artists and engineers wanting to build kinetic sculptural and data display artifacts. Traditional shape displays are highly complex, as previous models require a one-to-one ratio between the number of actuators and the data resolution. We present SILICONE DREAMS, an open-source system for building soft shape display devices which can convey trends about continuous data as well as function in artistic installations. SILICONE DREAMS utilizes a silicone top layer over a grid of actuator-controlled shafts in order to interpolate between data values without added design complexity. We have added programmable LED lighting underneath the silicone layer to extend the possibilities of data representation. Soft Shape Display also functions as a kinetic sculptural object which can be positioned either horizontally or vertically and has been designed for live collaboration with performing artists and as a self-contained system for generating changing 3D shapes. Because the SILICONE DREAMS system is modular, it can easily expand to incorporate other forms of media such as screen-based visuals and generative audio. Soft Shape Display is controlled by an embedded system with custom software, and contributes a generalizable system for future shape display design for both artistic and scientific use cases.

Speakers: Tina Dolinšek and Uroš Veber

Monday, June 3rd, 2024 at 1pm PDT via Zoom.

Abstract

PIFcamp is a week-long international summer art, technology and hacking camp, located in the Trenta valley in the Slovenian Alps. At PIFcamp, art, technology, and knowledge converge in a maelstrom of intense activity. Camp participants take the lead in conducting workshops, practical field trips, lectures, fieldwork and on-site briefings. They actively participate in the development of various DIY/DITO/DIWO projects while collaborating in a creative working environment.

This summer, the 10th edition of the camp will take place in the picturesque Triglav National Park. In the presentation, Tina and Uroš from the Projekt Atol team, key organisers of the camp, will share its history, core principles, art and technology collaborations, community initiatives, and related networks.

Bio

Tina Dolinšek is a Ljubljana-based new-media art producer, education curator, and cultural facilitator who has been working at Projekt Atol Institute since 2019 and collaborating with it since 2015. She has collaborated with numerous Slovenian cultural organisations, including LJUDMILA, Ljubljana Digital Media Lab, and has contributed articles to various Slovenian media. Since 2015 she has been the main engine behind PIFcamp and its success.

Uroš Veber is a cultural organizer, producer, and editor who develops and leads projects and programmes that transcend the boundaries between art, technology and science. Most of his work involves international productions in contemporary art, international networking and informal education. In his role as the main producer at the non-profit Projekt Atol Institute, he has been involved in numerous productions of a large number of young Slovenia-based media and conceptual artists. He is an active advocate for NGOs and the self-employed in the cultural sector and also co-leads two small music labels, rx:tx and kafana.

Projekt Atol Institute is a non-profit cultural and research organization, founded in 1992 by Marko Peljhan and formally established in 1994 in Ljubljana, Slovenia, as only the second cultural NGO in the country. Atol’s activities range from art and research art production, music events and exhibitions to technological development. Atol also started its music label rx:tx in 2002 and established a strong international network that enables its organization of transnational events, such as Changing Weathers, Resilients, Feral labs and Re-wilding culture. Over the past 10 years, Projekt Atol has primarily focused on developing new artworks and education setups with both local and international guest artists and developing a strong ecosystem of local creative communities. Together with the Delak Institute and Ljudmila, ATOL runs an artist-led venue with studios, development laboratories, and workshops called osmo/za, located in Ljubljana.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Thursday, May 30th, 2024

1 - 2:30pm PDT

Experimental Visualization Lab (room 2611 Elings Hall)

Abstract

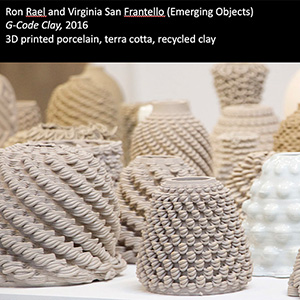

This thesis presents the design and outcomes of SketchPath, a system that uses hand-drawn toolpaths to design for clay 3D printing. Drawing, as a direct manipulation technique, allows artists to design with the expressiveness of CAM-based tools without needing to work with a numerical or constrained system. SketchPath works to provide artists with direct control over the outcomes of their form by not abstracting away machine operations or constraining the kinds of artifacts that can be produced. Artifacts produced with SketchPath emerge at a unique intersection of manual qualities and machine precision, creating works that blend handmade and machine aesthetics. In interactions with our system, ceramicists without a background in CAD/CAM were able to produce more complex forms with limited training, suggesting the future of CAM-based fabrication design can take on a wider range of modalities.

Wednesday, May 29, 2024

9am PDT

Experimental Visualization Lab (room 2611 Elings Hall)

Abstract

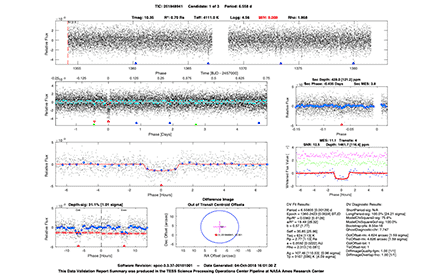

Recent machine learning research has demonstrated that many task-specific AI models now surpass human performance on static benchmarks. However, in real-world applications where human users collaborate with, or rely on, AIs, key questions remain: Do these advancements in AI models inherently improve the user experience or augment users' capabilities? When and how should we partner users with AI to form effective human-AI teams? This dissertation explores new forms of human-AI collaboration in the context of real-world computer vision tasks. We shape a research space where users play different roles in diverse AI-assisted workflows, from passive recipients of AI model outputs to active participants who steer the shaping of the model. 1) We developed intuitive user interfaces to help users, in this case astrophysicists, leverage deep-learning segmentation models in different scenarios. The end-to-end model enhances the accuracy of automated processing of daily space observations from 20+ telescopes globally. The AI-integrated GUI tool injects confidence into researchers' manual analysis of scientific imagery. 2) We proposed the concept of "restrained and zealous AIs" to harness the complementary strengths in human-AI teams. Insights from a month-long user study involving 78 professional data annotators suggest that recommendations from ill-suited AI counterparts may detrimentally affect users' skills. 3) Finally, we brought a novel concept of "in-situ learning" to augmented reality, where the user interacts with physical objects to train spatially-aware AI models that can remember the personalized environment and objects for various tasks. Each project elevates the end user to a more active and engaged role in the inference, training, and evaluation processes of human-in-the-loop machine learning.
In summary, this dissertation provides insights into the optimal times and methods for teaming humans with AI for real-world collaboration, informing the design of future AI-assisted systems.

Speaker: Daniel Bolojan

Monday, May 13th, 2024 at 1pm PDT via Zoom.

Abstract

The lecture will explore the evolution of Creative AI, particularly in the context of design and the creative industries, as it shifts from singular, general-purpose models to a diverse ecosystem of specialized, interconnected systems. This transition highlights Creative AI's transformative impact in various design domains, signaling a new era of innovation and creativity in design. The focus will be on forward-looking projects that illustrate this paradigm shift, demonstrating how AI is becoming an essential, collaborative partner in the design process. The discussion will address the unique challenges and strategies for integrating AI into the complexities of design, emphasizing the importance of a multimodal approach. Implications of this shift will be considered, exploring how Creative AI can be effectively tailored to the specific needs of design contexts.

Bio

Daniel Bolojan is the Director of the Creative AI Lab and an Assistant Professor of AI and Computational Design at FAU, a Ph.D. candidate at Die Angewandte Vienna, and a Creative AI and Computational Design Specialist at Coop Himmelb(l)au. Recognized as a leading voice in Creative AI, Deep Learning, and computational design within architectural realms, his research delves into the creation of deep learning strategies tailored for architectural design. This research addresses the complexities of shared-agency, designer creativity, and augmentation of design potency.

As a Creative AI and Computational Design Specialist at Coop Himmelb(l)au, Daniel pioneered the development of the award-winning DeepHimmelblau Neural Network. This innovative project was developed with the aim of augmenting design processes and designers’ abilities. In 2013, he founded Nonstandardstudio, a research studio that operates at the confluence of several crucial domains, including creative AI, deep learning, computation, multi-agent systems, generative design, and algorithmic techniques.

Speaker: Deniz Çağlarcan

Monday, May 6th, 2024 at 1pm PDT. Elings Hall room 1605 and via Zoom.

Abstract

While multidisciplinarity, interdisciplinarity, and transdisciplinarity have become new trends in the artistic field, the methodology and creative process of artwork differ according to the artist's primary disciplinary background. As a composer, I am exploring how music compositional techniques can inform the visual compositional process across various art disciplines. Specifically, I aim to investigate how understanding the connection between sound and image can enhance our comprehension of visual art and the creation of visual music compositions, as well as how these techniques can be utilized for artistic creativity. By examining the similarities and differences in the structure and meaning of sound and image in visual music composition, I seek to gain a better understanding of how these elements interact and can be utilized to inform visual composition. Ultimately, this approach can inspire artists to establish connections among different artistic disciplines.

In this research, my goal is to provide guidelines based on translating musical compositional techniques and concepts into the visual arts, enabling the audience to apply the same principles to other artistic disciplines. These include tape music techniques; the relationship between the syntactic structure and semantic discourse of the original material, and its role in gestural, textural, and formal structure; counterpoint; orchestration; spatialization; and music analysis methods such as Pierre Schaeffer’s “sound objects,” “TARTYP,” and reduced listening; R. Murray Schafer’s “signal,” “keynote,” “soundmark,” and “symbol”; François Bayle’s Image-Sound relation; and Simon Emmerson’s “language grid” scheme. I will investigate this relation through two main subjects: the translation of compositional techniques into visual arts within the concept of visual music, and the relation of meaning to the source material.

Bio

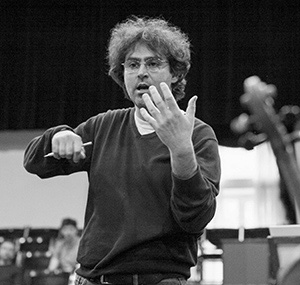

Deniz Çağlarcan is a Los Angeles-based composer, violist, conductor and transdisciplinary artist originally from Istanbul, Turkey. He investigates the sonic quality of "electronic music" by any means and realizes this idealized environment as a model for his musical language. Çağlarcan's works explore the interaction among acoustic instruments, electronic sounds, visuals, and other art disciplines within their morphological attributes. He is also interested in creating an environment that surrounds the audience by utilizing various immersive audio techniques as well as visuals and spatial elements. He performs interdisciplinary works collaborating with painters, media artists, computer graphics developers, and machine learning engineers.

His works include solo instrumental pieces, chamber music, large ensembles, tape/electroacoustic works, live-electronic, mixed works, audio/visual compositions, and site-specific sound installations. Besides his composition career, as a violist he performs in solo concerts, chamber music, new music ensembles, and popular music. He is also co-founder of the ADE Duo ensemble. He studied orchestral conducting for over eight years, most recently at Central Michigan University, where he pursued in-depth study with José-Luis Maúrtua. He holds a Master of Music in Viola Performance from Central Michigan University and a Master of Arts in Composition from Bilkent University.

Çağlarcan is currently a Ph.D. student in Composition studying with João Pedro Oliveira and a Master of Science student in Media Arts and Technology studying with Curtis Roads at the University of California, Santa Barbara. He has studied with notable composers and performers: Mark Andre, Beat Furrer, Annette Vande Gorne, Tolga Yayalar, Bruno Mantovani, Ken Ueno, Pierluigi Billone, Clara Iannotta, Alberto Posadas, Isabel Mundry, Ulrich Kreppein, Laura San Martin, Jay C. Batzner, Alicia Valoti, Sheila Browne, Scott Woolweaver, Yuri Gandelsman, Tatjana Masurenko, Walter Küssner, Hartmut Rohde, Alexander Zemtsov, Ulrich Mertin, and Christine Ruthledge.

Speaker: Liam Young

Monday, April 29th, 2024 at 1pm PDT. Elings Hall room 1605 and via Zoom.

Abstract

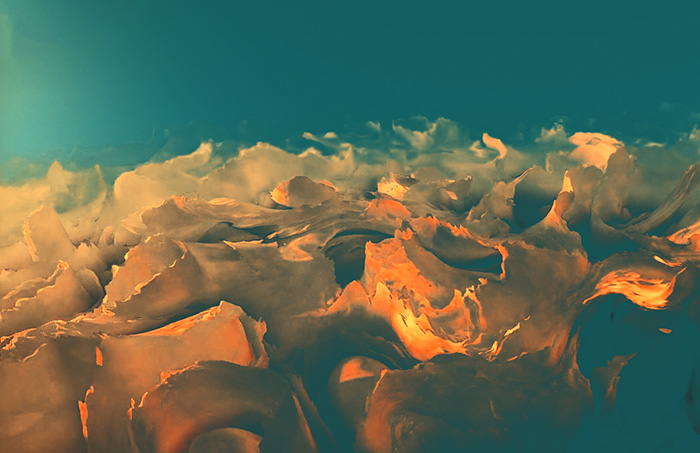

Following centuries of colonization, globalization, and never-ending economic extraction, we have remade the world from the scale of the cell to the tectonic plate. The dystopias of science fiction that previously read as speculative cautionary tales are now the stage sets of the everyday as many of us live out our lives in a disaster film playing in real-time. In this seemingly futureless moment, the storytelling performance 'Planetary Imaginaries' will take us on a sci-fi safari through a screenscape of alternative and hopeful worlds. Slipping between fiction and documentary, the journey will be both an extraordinary image of tomorrow, and an urgent illumination of the environmental questions that are facing us today.

Bio

Liam Young is a designer, director and BAFTA-nominated producer who operates in the spaces between design, fiction and futures. Described by the BBC as ‘the man designing our futures’, his visionary films and speculative worlds are both extraordinary images of tomorrow and urgent examinations of the environmental questions facing us today. As a worldbuilder he visualizes the cities, spaces and props of our imaginary futures for the film and television industry, and his own films have premiered with platforms ranging from Channel 4, Apple+, SxSW, Tribeca, the New York Metropolitan Museum, The Royal Academy, Venice Biennale, the BBC and the Guardian. His films have been collected internationally by museums such as MoMA New York, the Art Institute of Chicago, the Victoria and Albert Museum, the National Gallery of Victoria and M Plus Hong Kong, and have been acclaimed in both mainstream and design media including features with TED, Wired, New Scientist, Arte, Canal+, Time magazine and many more. His film work is informed by his academic research; he has held guest professorships at Princeton University, MIT, and Cambridge and now runs the groundbreaking Masters in Fiction and Entertainment at SCI-Arc in Los Angeles. He has published several books including the recent Machine Landscapes: Architectures of the Post Anthropocene and Planet City, a story of a fictional city for the entire population of the earth.

Planet City

saccades (2002)

by Ted Moore (saxophone, electronics, and video) and Kyle Hutchins (saxophone)

April 27th, 5pm - 8pm

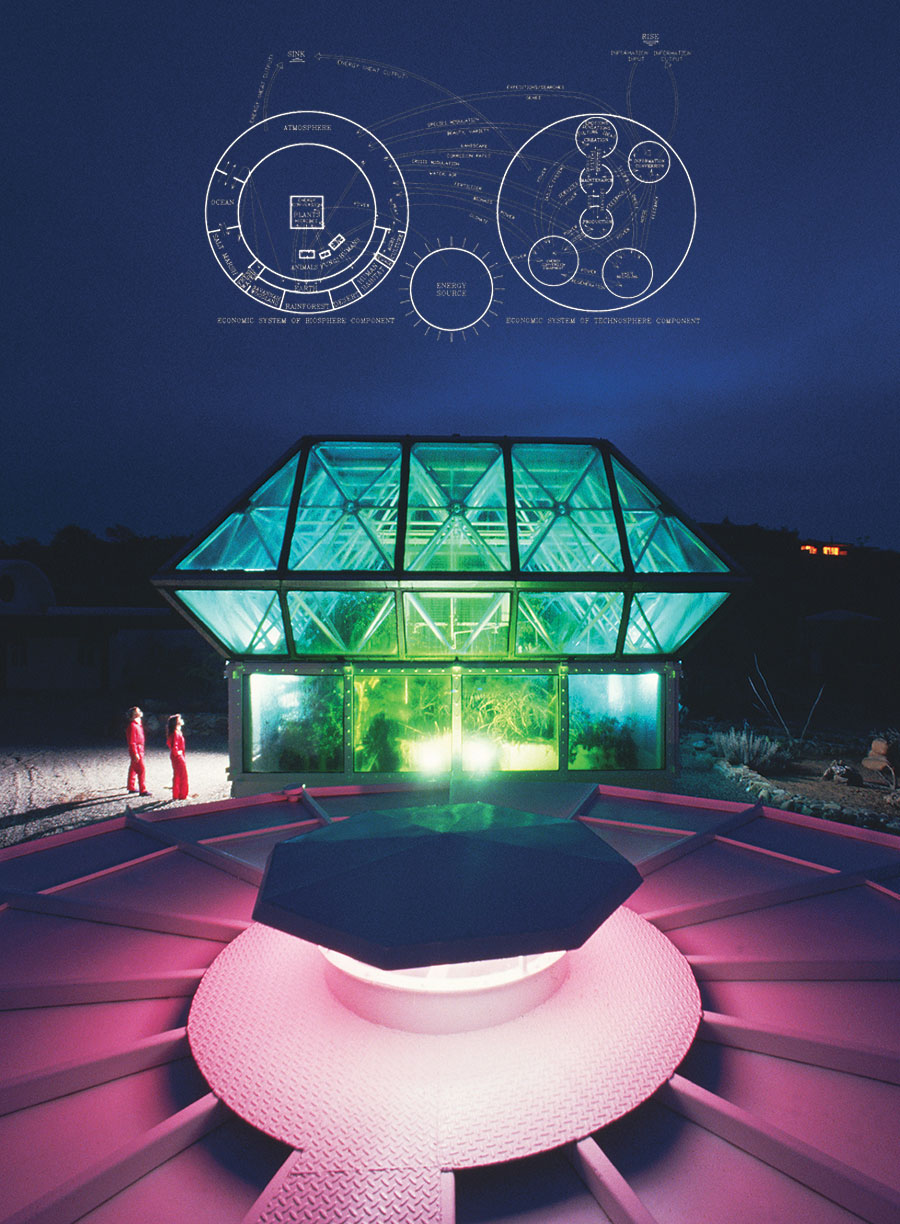

CNSI AlloSphere, Elings Hall 2621

There will be multiple performances of the work starting at 5pm.

A "saccade" is a rapid movement of the eyeball between two fixed focal points.

During this brief moment, the brain hides this blurry motion from our perception. Once a saccade motion has begun, the destination cannot change, meaning that if the target of focus disappears the viewer won’t know until the saccade completes. If the field of vision is changing too quickly, the saccades may never be able to arrive at and focus on a target; instead, the objects in view are only perceived through peripheral vision. This phenomenon is imitated by the sound and video presented in the piece. It also serves as a metaphor for the density of information and high entropy experiences we constantly face: a scroll on social media, smartphone alerts, big data, technological advancements and predictions, the abundance of choices in the grocery aisle.

Ted Moore (he/him) is a composer, improviser, and intermedia artist whose work fuses sonic, visual, physical, and acoustic elements, often incorporating technology to create immersive, multidimensional experiences. After completing a PhD in Music Composition at the University of Chicago, Ted served as a postdoctoral Research Fellow in Creative Coding at the University of Huddersfield as part of the ERC-funded FluCoMa project, where he investigated the creative potential of machine learning algorithms and taught workshops on how artists can use machine learning in their creative music practice. Ted has continued offering workshops around the world on machine learning and creativity including at the University of Pennsylvania, Center for Computer Research in Music and Acoustics (CCRMA) at Stanford University, and Music Hackspace in London. Ted’s music has been presented by leading cultural institutions such as MassMoCA, South by Southwest, The Walker Art Center, and National Sawdust and presented by ensembles such as Talea Ensemble, International Contemporary Ensemble, the [Switch~ Ensemble], and the JACK Quartet. Ted has held artist residencies with the Phonos Foundation in Barcelona, the Arts, Sciences, & Culture Initiative at the University of Chicago, and the Studio for Electro-Instrumental Music (STEIM) in Amsterdam. His sound art installations, which combine DIY electronics, embedded technologies, and spatial sound, have been featured around the world including at the American Academy in Rome and New York University.

Kyle Hutchins is an internationally acclaimed performing artist and improviser. He has performed concerts and taught masterclasses across five continents at major festivals and venues in Australia, Belgium, Canada, Chile, China, Croatia, the Czech Republic, England, France, Germany, Ireland, Latvia, Mexico, Scotland, South Korea, and across the United States including Carnegie Hall, The Walker Art Center, World Saxophone Congress, Internationales Musikinstitut Darmstadt, International Computer Music Conference, among many others. He has recorded over two dozen albums on labels such as Carrier, Klavier, GIA, farpoint, and Mother Brain, and his work has been recognized by awards and grants from DOWNBEAT, New Music USA, The American Prize, American Protégé International Competition, Music Teachers National Association, Mu Phi Epsilon Foundation, and others. As a specialist in experimental performance practice and electroacoustic new music, Kyle has performed well over 200 world premieres of new works for the saxophone. He has worked with some of the leading composers and performers of our time including Pauline Oliveros, George Lewis, Chaya Czernowin, Georges Aperghis, Richard Barrett, Steven Takasugi, Claire Chase, Douglas Ewart, Duo Gelland, and Zeitgeist. Over the past fifteen years, Kyle has built long-standing collaborations and championed the music of many close collaborators such as Ted Moore, Tiffany M. Skidmore, Joey Crane, Emily Lau, Elizabeth A. Baker, Charles Nichols, Eric Lyon, and many more wonderful artists and dear friends. Kyle has served on the faculty of Virginia Tech since 2016 where he is Assistant Professor of Practice and Director of the New Music + Technology Festival at the Institute for Creativity, Arts, and Technology. Kyle holds Doctor of Musical Arts and Master of Music degrees from the University of Minnesota, and Bachelor of Music in performance and Bachelor of Music Education degrees from the University of North Texas.
His teachers include Eugene Rousseau, Eric Nestler, Marcus Weiss, and James Dillon. Kyle is a Yamaha, Légère, and E. Rousseau Mouthpiece Performing Artist.

Times

04:00 pm EDT Fri, Mar 26, 2024 (New York, USA)

03:00 pm CDT Fri, Mar 26, 2024 (Chicago, USA)

01:00 pm PDT Fri, Mar 26, 2024 (Los Angeles, USA)

08:00 pm UTC Fri, Mar 26, 2024 (Coordinated Universal Time)

Moderated by: Bhavleek Kaur, Virginia Melnyk, and Gustavo Rincon (PhD MAT)

Session Description

This session surveys AI, Computation, Fabrication, Information (Data) & Robotics, speculating on future trends of built form and looking at how research practice and experimentation come together to blur the lines between the creative imagination and the real. It poses an Aesthetic Challenge to existing formal languages of material form, redefining New Mediated Architectures. While humans have built environments inspired by nature, what new research practices have contributed to extending the potential of A.I. & Robotics, imagining new ways of thinking from physical to virtualized paradigms as well as mixed paradigms? Information is intertwined with our states of humanity. We ask our community of artists, scientists and researchers to share visions as “proposals” for a better world by revealing their research as a paradigm shift engaging current technologies. This session will explore the conceptual implications of the potentiality of new visions for change in contemporary research practice combining the Arts, Design, and Sciences in A.I., (AR/VR/XR/Real) Worlds & Verses, Robotics, and Speculative Design/Arts Futures.

Presenters

For more information about the event: Anticipating the Architecture(s) of The Future

For more information about SPARKS: dac.siggraph.org

Speaker: Juan Escalante

Monday, April 22nd, 2024 at 1pm PDT. Elings Hall room 1605 and via Zoom.

Abstract

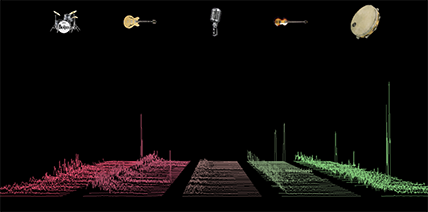

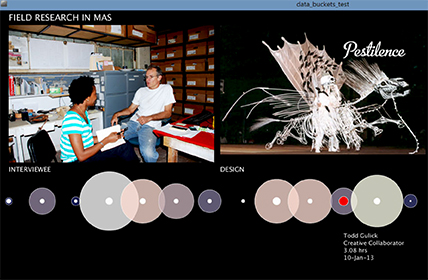

My work relies on diagrams to parse the inner and outside world. These maps become machines of abstraction. They enable the translation of one medium, domain, or dataset into another. I use sound, drawings, and code to complete a process where computer programming occupies a central role, serving as an orchestration mechanism. Throughout most of my practice, source material, both empirical and speculative, is reinterpreted through a process of stochastic computation.

As a result, the work manifests through a wide range of outputs, such as performances, audiovisual work, graphic notation, print, and screen-based media. Over time, all of these forms rescript one another and keep the creative process in a state of flux. For the MAT Winter 2024 Seminar, I will discuss three recent software art projects using graphic scores, electronic sounds, and artificial intelligence.

Bio

Juan Manuel Escalante (b. Mexico City) is a Southern California-based artist and educator working with computer code, modular synthesizers, and analog drawings. His work has been shown in major festivals and exhibitions worldwide. He was a member of the National System of Art Creators (2017-2019 National Endowment for the Arts, MWX) and received the Corwin Award (1st prize) for Electronic-Acoustic Composition in 2016. Escalante holds an MFA in Architecture Design (UNAM) and a Ph.D. in Media Arts and Technology (University of California, Santa Barbara). He is currently an Assistant Professor in the Department of Art at the California State University, Fullerton.

Friday, April 19th, 2024

7:30pm

The concert features works by Earl Howard (performing live on synthesizer and saxophone), Paris-based composer Horacio Vaggione, the late Corwin Chair Clarence Barlow, UCSB Music Composition graduate student Dariush Derekshani, and CREATE’s Associate Director Curtis Roads.

Event Details (PDF):

This event is free admission and open to the public.

Speaker: Andrés Burbano

Monday, April 15th, 2024 at 1pm PDT via Zoom.

Abstract

"Machines, Imagination and Latin American Visions: A Panorama of Profound Contributions" investigates the emergence of technologies in Latin America to create images, sounds, video games, and physical interactions. The book contributes to the construction of a historiographical and theoretical framework for understanding the work of creators who have been geographically and historically marginalized through the study of five exemplary and yet relatively unknown artifacts built by engineers, scientists, artists, and innovators. It offers a broad and detailed view of the complex and sometimes unlikely conditions under which technological innovation is possible and of the problematic logics under which these innovations may come to be devalued as historically irrelevant. Through its focus on media technologies, the book presents the interactions between technological and artistic creativity, working towards a wider understanding of the shifts in both fields that have shaped current perceptions, practices, and design principles while bringing into view the personal, social, and geopolitical singularities embodied by particular devices. It will be an engaging and insightful read for scholars, researchers, and students across a wide range of disciplines, such as media studies, art and design, architecture, cultural history, and the digital humanities.

Bio

Andrés Burbano is Professor in the Arts and Humanities School at the Open University of Catalunya (Barcelona, Spain) and Visiting Lecturer at Donau-Universität (Krems, Austria). He holds a Ph.D. in Media Arts and Technology from the University of California at Santa Barbara (California, USA) and has developed most of his academic career in the School of Architecture and Design at Universidad de los Andes (Bogotá, Colombia). Burbano works as a researcher, curator, and interdisciplinary artist. His research projects focus on media history and media archaeology in Latin America and the Global South, 3D modeling of archaeological sites, and computational technologies' historical and cultural impact. Burbano has been appointed as ACM SIGGRAPH 2024 Chair.

Professor Burbano's latest book:

Different Engines - Media Technologies From Latin America

Speaker: Alberto Estevez

Monday, April 8th, 2024 at 1pm PDT. Elings Hall room 1605 and via Zoom.

Abstract

The Modern Movement of the 20th century worked to design "from the spoon to the city." We, the inhabitants of the 21st century, can transcend working only on the surface of things, as has been done for millennia. Now it is time to design "from the DNA to the planet": from the cell and the bit to beyond, to the entire Solar System. Shown here, accordingly, are some ideas and multi-scalar, transdisciplinary works around the fusion of the biological world and the digital world as applied to architecture & design: genetics and computation, natural intelligence and artificial intelligence, bio-learning and machine-learning, biological techniques and digital techniques, bio-manufacturing and digital-manufacturing.

Bio

Alberto T. Estévez, architect, with a professional office of architecture and design in Barcelona since 1983. Chairman-professor, founder (in 1996) and first director of ESARQ, the School of Architecture of UIC Barcelona (Universitat Internacional de Catalunya), as an avant-garde school during its first 9 years. Founder (in 1998) and first director of UIC Architecture PhD and Masters. Director of iBAG-UIC Barcelona (Institute for BioDigital Architecture & Genetics), that includes the Genetic Architectures Research Group and Office (since 2000), and the Master’s Degree in Biodigital Architecture (since 2000). He has written more than 300 publications, participated in a large number of exhibitions, congresses, committees, and invited lectures around the world, presenting his ideas, projects and works.

geneticarchitectures.weebly.com

www.biodigitalarchitecture.com

Speaker: Chris Kallmyer

Monday, March 11th, 2024 at 1pm PDT. Elings Hall room 1605 and via Zoom.

Abstract

The lecture will explore how sound and context transform the way we use technology to inspire meaning and change in listeners. Through this talk we will outline the strategies employed by mid-century experimentalists, pre-enlightenment collectivists, and the prospect of a post-industrial revolution. Chris will expand upon the generative work currently installed in Elings Hall, Song Cycle, and the emerging relationships between audience and performer – artist and community – technology and environment.

Bio

Chris Kallmyer is an artist working at the intersection of music, architecture, and design. Through his work, he creates collective experiences driven by his interests in listening, landscape, and community. His multi-disciplinary works have been exhibited and performed at the San Francisco Museum of Modern Art, Walker Art Center, Pulitzer Arts Foundation, Los Angeles Philharmonic, and STUDIO TeatrGaleria in Warsaw among other spaces in America, Europe, and Asia. His studio is located on the Silver Penny Farm in Petaluma, CA.

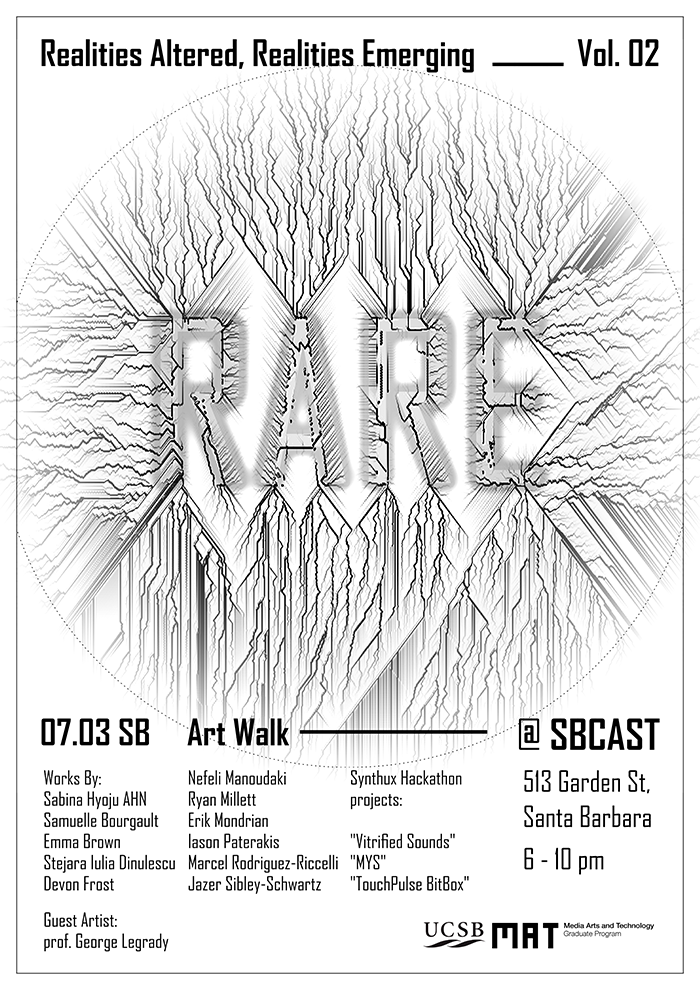

Thursday, March 7, 2024

6 - 10pm

RARE_vol.02: Realities Altered Realities Emerging

Works by:

Synthux Hackathon projects:

"Vitrified Sounds"

"MYS"

"TouchPulse BitBox"

Guest Artist:

Professor George Legrady

The Santa Barbara Center for Art, Science and Technology (SBCAST) is located at 513 Garden Street in downtown Santa Barbara.

Speaker: Weidi Zhang

Monday, March 4th, 2024 at 1pm PST. Elings Hall room 1605 and via Zoom.

Abstract

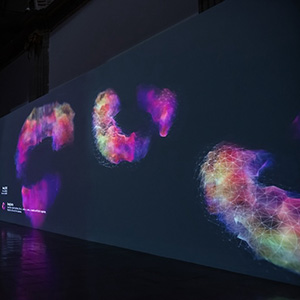

Weidi will present her practice-based research on Speculative Assemblage. She'll introduce her recent experimental visualization artworks, including 'ReCollection,' 'Cangjie's Poetry,' 'Wayfarer,' and 'Astro.' Her discussion will delve into her practices at the intersection of immersive art, AI system design, and experimental visualization.

Bio

Weidi Zhang is a new media artist/designer based in Los Angeles and Phoenix. She is a tenure-track Assistant Professor of immersive experience design at the Media and Immersive eXperience (MIX) center of Arizona State University. Her interdisciplinary art and design research investigates A Speculative Assemblage at the intersection of immersive media design, experimental data visualization, and interactive AI art.

Her works have been recognized in international media art and design awards, such as the Best In Show Award in SIGGRAPH (2021, 2022), Red Dot Design Award (2022), Honorary Mention in Prix Ars Electronica (2022), Juried Selection in Japan Media Arts Festival (2020), Lumen Prize shortlists (2020, 2021), and others. Her works have been exhibited internationally at venues such as Times Art Museum (CN), Centre de Cultura Contemporània de Barcelona, Society For Arts and Technology (CA), SwissNex Gallery (USA), V2_Lab (NL), ISEA, CVPR, IEEE VISAP, Mutek (MX), Mira Fest (Spain), Zeiss Major Planetarium (GE), and Planetarium 1 (RUS), among others. She holds a Ph.D. degree in Media Arts and Technology from the University of California, Santa Barbara, an MFA degree in Art + Technology from the California Institute of the Arts, and a BFA degree in Photo/Media from the University of Washington, Seattle. She has lectured at both UC Santa Barbara and The Ohio State University.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

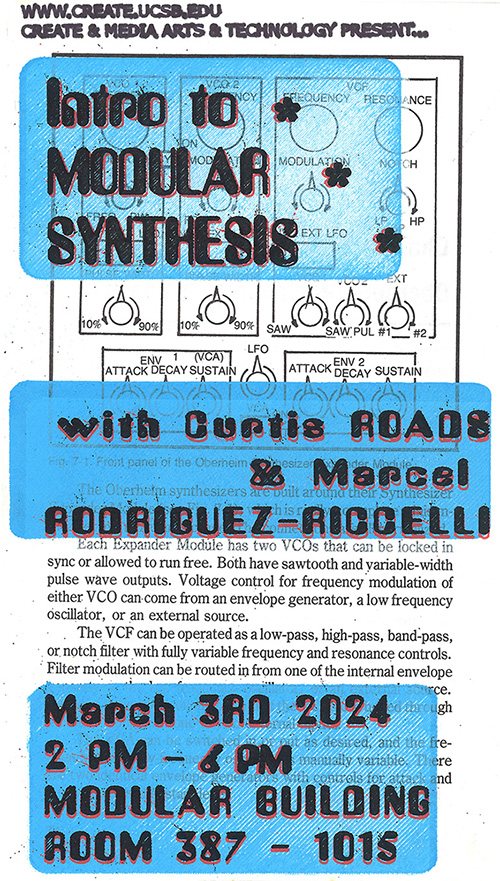

Sunday, March 3rd, 2024

2-6pm

Modular Building 387 - 1015

Intro to Modular Synthesis

Hybrid In Person / Virtual Workshop

Description

CREATE and Media Arts & Technology present an Intro to Modular Synthesis Workshop by Curtis Roads and Marcel Rodriguez-Riccelli. They'll give a retrospective of the history of synthesis and analog computing before breaking down the basics with a demonstration on a hardware eurorack system, giving participants the foundational knowledge needed to delve further into creating music with modular synthesizers on their own. There will also be a practical portion, in which participants will be able to create their own eurorack synthesizers with the free virtual eurorack software VCV Rack 2.

We encourage anybody who’s curious to join, regardless of experience or skill level. Modular synthesis is a fun and engaging way both for people with no musical background to begin to understand musical concepts and for studied musicians to expand their practice. Foreknowledge of certain basic musical concepts is assumed in the lesson plan, but more time can be taken to go into further depth as necessary at participants’ request.

The workshop will also be available over Zoom.

Friday, March 1st, 2024

3:30pm PST

Experimental Visualization Lab (room 2611 Elings Hall)

Abstract

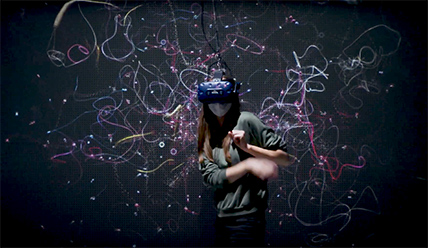

Virtual reality (VR) perspective-taking experiences focused on imagined intergroup contact with individuals from marginalized groups can increase prosocial behavior toward them (van Loon, Bailenson, Zaki, Bostick, & Willer, 2018). Intergroup contact theory hypothesizes that reducing anxiety, which is the cause of increased stereotyping against the outgroup, and permeating the social encounter with positive emotions (Miller, Smith, & Mackie, 2004) leads to prejudice mitigation (Pettigrew & Tropp, 2006). Beyond these insights from social psychology, sparse literature has explored how to design immersive story worlds to instill prosocial attitudes and behaviors, including the effectiveness of employing photorealistic techniques. This research fills this gap and challenges the assumption that human perceptions inside virtual and physical worlds are equal if digital assets are photorealistic.

Through the creation of a taxonomy of design for inclusive VR, developed from playtesting six state-of-the-art VR experiences, it identifies which affordances and methodologies are significant to inducing prosocial attitudes in immersive social encounters and contributes a pragmatic approach to the design of VR for bias reduction while considering the craft, ethical and humanistic dimensions of the medium.

Speaker: Dana Diminescu

Monday, February 26th, 2024 at 1pm PST. Elings Hall room 1605 and via Zoom.

Abstract

In "Why I Came," which is also the title of her latest work, Prof. Dana Diminescu will showcase a series of "research / creation" projects she has undertaken. These projects involve experimental protocols, including surveys and prototypes, challenging the notion of a research world separate from the realms of art.

The session will culminate with "Why I Welcome," an interactive installation utilizing data from a refugee reception platform. Since 2015, the SINGA association's program "J’accueille!" (I welcome) has connected French families with refugees, resulting in thousands of hosts by the end of 2022. "Why I Welcome" has become a part of the permanent collection at the National Museum of Immigration History | Palais de la Porte Dorée, in Paris. It was also presented exclusively at the Gaîté Lyrique – Factory of the Time, an interactive art installation venue in Paris, during its opening weekend in May 2023, highlighting Dana Diminescu's research on hospitality and connected migrants.

Bio

Dana Diminescu is a social science researcher and artist, currently holding the position of Senior Lecturer/Associate Professor at the Télécom Paris engineering school, and is a member of Spiral, the art & science chair of the Institut Polytechnique de Paris. She serves as the Coordinator of the DiasporasLab. She is renowned for her work on the "connected migrant" and for introducing various epistemological and methodological innovations. Notably, she spearheaded the e-Diasporas Atlas project, which received recognition for the 2012 Digital Humanities Awards. Recently, Diminescu developed the JokaJobs application for jobseekers from Generation Y, designed specifically for smartphones.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Wednesday, February 21st, 2024

12:30 - 2pm PST

Room 2003 (MAT Conference Room)

Abstract

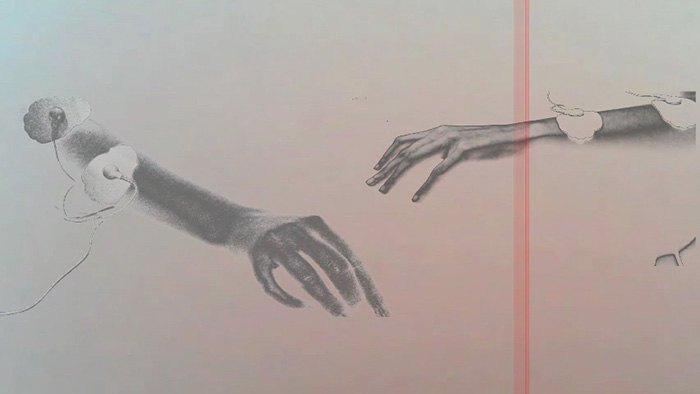

Our feelings of being in control over our actions and their consequences are broadly referred to as the sense of agency, which is of importance within the disciplines of neuroscience, haptics, and human-computer interaction. However, each field defines and contextualizes agency with unique perspectives, considerations, and applications. This report begins by examining our sense of agency when performing hand movements — referred to throughout as kinesthetic agency — and subsequent sensorimotor consequences affecting our perception of touch. It further explores and contextualizes our sense of agency at the intersection of human-computer interaction, aesthetics, and creative collaboration, highlighting the importance of a user’s perceived agency throughout their interactions with systems, tools, or iterative processes.

While both perceived and kinesthetic agencies of interacting entities are important lenses through which we design and evaluate interactive systems, it is additionally useful to analyze how their intersections and couplings afford co-produced aesthetic outcomes. I distinguish between agency sharing, conceptualized as a continuous collaboration between two or more entities, and agency exchange, conceptualized as the hand-off of decision-making or control between entities. The nuances of – and degrees to which – perceived and/or kinesthetic agency is shared and/or exchanged between entities impact their interactions with the system as a whole, as well as the co-produced outcomes.

Through the presentation and critical analysis of four co-productive systems, tools, or pipelines developed in my own studio and research practice between 2020 and 2023, I impart my perspective and considerations for designing interactions and systems for artistic co-production. Metrics of evaluation include 1) schematic representations of how agency is shared and/or exchanged between agentic entities and systems or processes, 2) aesthetic critique of the outcomes (i.e. drawings, sculptures, live installations, etc.) as artworks in their own right, and 3) developer, user, and audience feedback during iteration and exhibition. Such an analysis, performed during the design and iteration stages of system development, can aid artists, researchers, and developers in engaging with unique co-design and co-productive processes.

Tuesday, February 20, 2024

10am PST

Room 1601 Elings Hall and via Zoom

Abstract

A longstanding goal in haptics is to engineer high-fidelity displays for spatially distributed haptic feedback, specified as digital media. Such haptic displays would make it possible to touch, feel, and interact with dynamic scenes, objects, or information presented anywhere in a continuous display medium, such as an interactive surface or three-dimensional environment. They would thus represent the haptic analogs of two- or three-dimensional video displays. This dissertation advances knowledge in the design and operational principles of such haptic displays, focusing on emerging technologies that harness and control propagating mechanical waves to deliver spatiotemporally distributed haptic feedback. Central to the innovation of wave-mediated haptic display is that the spatial resolution of haptic feedback is governed by the control of wave transmission rather than by the number and density of actuators, as would be the case in conventional approaches. This wave-mediated strategy reduces complexity, enabling practical and scalable displays capable of reproducing dynamic haptic media. This Ph.D. dissertation contributes new display designs exploiting wave transmission, algorithms and methods for wave-mediated haptic display, experimental findings that mechanically and perceptually characterize the proposed display methods, and investigations of the influence of skin biomechanics on display fidelity.

Speaker: Spencer Lowell

Monday, February 12th, 2024 at 1pm PST. Elings Hall room 1605 and via Zoom.

Abstract

Join internationally renowned technology photographer Spencer Lowell as he presents his practice and discusses his in-production book, The Nature of Things, about the complex and evolving relationship between humans and technology.

Bio

Spencer Lowell is an award-winning Los Angeles-based photographer whose work blurs the line between art and science.

His assignments for many of the world’s leading magazines have taken him from a research ship in the Mediterranean to a desalination plant in Dubai to Norway’s Global Seed Vault to the Fukushima Daiichi nuclear plant to Mark Zuckerberg’s office.

Drawn into the field at age 16 by his first job in a one-hour photo lab, Spencer studied at Art Center College of Design in Pasadena. His graduation project, which included platinum palladium prints of images taken by the Hubble Space Telescope, caught the eye of officials from NASA’s Jet Propulsion Laboratory, who commissioned him to document their work. The resulting photos of the construction of the Mars rover “Curiosity” wound up published in Time and several other magazines.

Since then, Spencer's images of scientific laboratories and industrial facilities, and portraits of leading researchers and corporate moguls, have appeared on the covers of the New York Times Magazine, Time, Wired, Fortune and Popular Science, as well as in the pages of GQ, The Atlantic, Esquire, Rolling Stone and many other publications. He has also created commercial work for clients ranging from IBM to Google to Budweiser to Christie’s to The San Francisco Symphony, as well as companies he can’t name because of NDAs. He was named a “photographer to watch” by Photo District News in 2011, and his work has been honored by American Photographer, Communication Arts Photo Annual and PDN Photo Annual.

A video recording of the talk is available on Vimeo.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speaker: Márton Orosz

Monday, January 29th, 2024 at 1pm PST. Elings Hall room 1605 and via Zoom.

Abstract

Márton Orosz's presentation explores the life and work of György Kepes, an early pioneer of new media art. Kepes, a painter, designer, photographer, impresario, and polymath, founded the Center for Advanced Visual Studies (CAVS) at MIT in 1967, serving as a precursor to contemporary media art institutions and educational programs.

Kepes was among the first to propose the intersection of art, science, and technology, and the first to establish a program within the academic curriculum dedicated to this innovative pursuit. His significance lies in his cybernetic approach to crafting multisensory urban environments, his exploration of novel theories on human perception evident in his 1944 textbook "Language of Vision," and the innovative repurposing of scientific images into aesthetic objects, showcased in his 1951 exhibition, "The New Landscape."

Orosz explores Kepes's contributions to visual aesthetics, his creative process, and visionary concepts that provided prosthetics to mimic nature, offering an avant-garde perspective in Post-War art history for building a sustainable world. The lecture introduces Kepes's realized and unrealized artworks, illustrating his mission to humanize science and cultivate ecological awareness through the creative use of technology.

The central question addressed is how to develop an agenda that bridges aesthetics and engineering, not merely as a gap-filling exercise but as a means to forge a human-centered ecology using cutting-edge technology. What were the chances in Kepes's time and what are the chances today to democratize our vision through the power of the (thinking) eye?

Bio

Dr. Márton Orosz serves as the founder and Curator of the Collection of Photography and Media Arts at the Museum of Fine Arts – Hungarian National Gallery in Budapest. Since 2014, he has held the position of Director at the museum dedicated to Victor Vasarely, which is part of the same institution. Dr. Orosz also holds the role of scientific advisor to the Kepes Institute in Eger and the Michèle Vasarely Foundation in Puerto Rico. He has curated numerous exhibitions across the globe, written books and articles on various art-related subjects, and delivered lectures in several locations, including Europe, the United States, and Asia. His research and publications encompass a wide range of fields, including light-based media, photography, avant-garde collecting, abstract geometric and kinetic art, computer art, motion picture, and animated film. His first documentary film, titled György Kepes – Interthinking Art + Science, was completed in 2023 and garnered recognition and accolades at many international film festivals.

A video recording of the talk is available on Vimeo.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speaker: Leslie Garcia

Monday, January 22nd, 2024 at 1pm PST via Zoom

Abstract

In this seminar we will analyze the relationship and origins of cybernetics and its technological intersections. We will also address the evolution of AI from a historical context and some of its practitioners from artistic research.

Bio

Interspecifics is an international independent artistic research bureau founded in Mexico City in 2013. We have focused our research on the use of sound and A.I. to explore patterns emerging from biosignals and the morphology of different living organisms as a potential form of non-human communication. With this aim, we have developed a collection of experimental research and education tools we call Ontological Machines. Our work is deeply shaped by the Latin American context where precarity enables creative action and ancient technologies meet cutting-edge forms of production. Our current lines of research are shifting towards exploring the hard problem of consciousness and the close relationship between mind and matter, where magic appears to be fundamental. Sound remains our interface to the universe.

A video recording of the talk is available on Vimeo.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Sunday, January 21st from 5-6pm at SBCAST (513 Garden St, Santa Barbara) and via Zoom

The panelists for this presentation are Raphaël Bessette, T Braun, Amanda Gutierrez, and Laura Magnusson.

Abstract

A research-creation panel with four Montréal-based PhD researchers (Concordia University) who will share their research-creation on the topics of Feminist/Queer/Trans* Research-Creation Methodologies. This will be an intimate setting with some light refreshments and snacks provided.

Bios

Raphaël Bessette

Raphaël was born and raised in Gatineau, on the unceded territory of the Algonquin-Anishinabeg Nation, and is currently pursuing their PhD on the unceded lands of the Kanien’kehá:ka Nation in Tio'tiá:ke/Montreal. They hold a BA in political science (Université de Montréal), an MA in Gender Studies (Université de Genève), an MA in Visual Anthropology (Université Paris Nanterre), and a DIU in research-creation (Université Paris-8/Université Paris Nanterre). Working across documentary and experimental film, their work has been shown at festivals such as Premier Regards - Festival International Jean Rouch (Paris), Festival International du Film Ethnographique du Québec and Festival de la Poésie de Montréal/Tio'tiá:ke.

Their current PhD research is situated at the intersections of trans* studies, critical disability studies, science and technology studies and research-creation and focuses on the parallel and connected practices of trans* embodiment and experimental filmmaking. They explore the relations between materials and bodies in their engagements with prosthetics (binders, breast forms, tucking underwear, packing, stand-to-pee devices, sex-toys, etc.) and in filmmaking techniques of frame-by-frame animation and process cinema.

T Braun

T Braun is an interdisciplinary artist who creates virtual worlds, drag performances, and interactive installations that challenge binary notions of gender. They are currently based in Tiohtià:ke (Montreal) and are pursuing a Ph.D. in Humanities at Concordia University. Their Ph.D. work explores how queer VR enthusiasts envision the metaverse, create gender-affirming content, and form virtual communities. They are currently conducting interviews and co-creating virtual worlds with trans* artists in the social VR platform VRChat.

Amanda Gutierrez

Amanda Gutierrez (b. 1978, Mexico City) explores the experience of political listening and gender studies by bringing into focus soundwalking practices. Initially trained as a stage designer at The National School of Theater, Gutiérrez uses a range of digital media tools to investigate everyday life aural agencies and collective identities. Approaching these questions from aural perspectives continues to be of particular interest to Gutiérrez, who completed her MFA in Media and Performance Studies at the School of the Art Institute of Chicago. She is currently elaborating on the academic dimension of her work as a Ph.D. candidate in the Arts and Humanities Doctoral program at Concordia University. Gutiérrez has held numerous international art residencies, such as FACT, Liverpool in the UK, ZKM in Germany, TAV in Taiwan, and Bolit Art Center in Spain, and her artwork has been exhibited internationally in venues such as The Liverpool Biennale in 2012, Lower Manhattan Cultural Council, Harvestworks in NYC, SBC Gallery, Undefined Radio in Montreal, and Errant Bodies Studio Press in Berlin, among others.

Laura Magnusson

Laura Magnusson is a Canadian interdisciplinary artist and filmmaker based in Tiohtià:ke (Montreal). Her current research-creation explores and elucidates felt experiences of violence and trauma through installation, sculpture, drawing, performance, and video. She is a trained scuba diver who has filmed underwater in Iceland and Mexico, using the medium of water as a site for healing and reconnecting to the body. Magnusson holds an MFA in Interdisciplinary Art from the University of Michigan (2019), and is currently pursuing a PhD in Interdisciplinary Humanities at Concordia University.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speaker: Danielle Garrison

Monday, January 8th, 2024 at 1pm PST in room 1605 Elings Hall and via Zoom

Abstract

In this gathering, you are invited to interact with my heart tethering research. We will sense our heartbeats somatically through sensorial guiding and biometrically (pulse oximeters that translate into sound, lights and haptics) in collaboration with Ryan McCullough. We will practice tethering with a horizontal aerial fabric loop, which acts as a touch conduit between our bodies. We will engage the heart as a gateway to sense our nervous system states, determining how we co-sense our spatial relation. Please wear comfortable clothes, socks, and bring a pen.

Danielle creates interactive aerial dance experiences to re-ignite embodied interaction in a post-touch era questioning what aerial arts can do. She has been creating an emergent aerial dance technique called tethering, which consists of a horizontal fabric that mediates touch to develop practices and guidelines around consent in artistic collaborations. Materializing as a research-creation project, heart tethering engages polyvagal theory as a language to co-sense and identify nervous system states in relational encounters by tuning into heart-based dynamics. This process hones awareness of the heartbeat by sensing the heart somatically (scanning layers from external proprioception to internal interoception called heartception) and biometrically (pulse oximeters that translate into sound, lights and haptics) in collaboration with Ryan McCullough. The event centers around the three phases of the heartbeat—contract, rest, release—as a guiding framework to explore movement, adapt spacing, slow timing, and experiment with a spectrum of response-abilities. This research asks what happens when we become more aware of our heart in each moment and how does it change our relation with another? What emerges when we prioritize co-sensing and communicating with creative methodologies that honor complexities and critically engage with the ever-evolving practice of consent within encounters? Danielle is an interdisciplinary humanities PhD student in research-creation at Concordia University (Montréal) directed by Angélique Willkie, Erin Manning, and VK Preston and the University of Montpellier (France) supervised by Alix de Morant, as well as a 4-year Fulbright Specialist (2021-2025).

Bio

While pursuing her MFA in Dance (somatics/aerial arts) from the University of Colorado-Boulder, Danielle was a Fulbright Scholar to France (2017-2018) where she created an interdisciplinary project weaving contemporary dance, circus, visual arts and film exploring the topic of grief in news media. Recent aerial residencies include SenseLab (Montréal), Milieux (Montréal), Nils Obstrat (Paris), Ecole Media Art du Grand Chalon (Chalon-sur-Saône), La Grainerie (Toulouse), the Circus Dialogues Project’s 4th encounter (Avallon), and SBCAST (Santa Barbara Center for Arts, Science and Technology). Danielle has performed and/or taught for Aerial Dance Chicago, Frequent Flyers Productions, Les Rencontres de Danse Aérienne, the Berlin Circus Festival, Frequent Flyers Aerial Dance Festival, Santa Barbara Floor to Air Festival, Aerial Greece, and the San Francisco Aerial Dance Festival. In 2020, she co-created Aerial Reflexionando (virtual aerial arts colloque) with Ana Prada to support critical exchange on contemporary aerial arts in the Americas.

A video recording of the talk is available on Vimeo.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Tuesday, December 12th, 2023

11am PST

transLAB (Room 2615, Elings Hall) and via Zoom

Abstract

The coming wave of new media technologies relying on generative composition and Artificial Intelligence (AI) has introduced novel opportunities in transmodal synthesis and creative synergies within real-time composition networks. The term ‘perforated systems’, as proposed by Marcos Novak, is used to describe the collaborative systems that arise from dialogues between diversified environments operating through flowing fields of data. Such systems allow for the creation of complex artificial, physical, and hybrid environments in Extended Reality (XR) through real-time collaboration.

This master's thesis delves into the intersection of XR technologies, transmodal environments, and generative AI in speculative architectural composition, unveiling paradigms of hybrid ‘perforated systems’ in the form of interior and urban installations. These installations propose alternative environments through a fusion of tangible artifacts, olfactory, audio, and visual components, and explore the interplay between dynamic and diverse data-driven compositions in XR.

Ranging from biodata-driven abstract worlds in Virtual Reality (VR), to Mixed Reality (MR) installations like the ‘Synaptic Time Tunnel’ presented at SIGGRAPH 2023, and AI-generated urban projection mapping performances, these endeavors fuse cutting-edge technologies with generative AI tools to achieve maximal complexity with minimal initial conditions.

In the age of data, networks, and AI, the potential for collaboration between different fields and creators is vast and profound. The present thesis aims to demonstrate how transdisciplinary ‘perforated’ networks can generate complex and abstract compositions that emerge from simple elements and expand into Extended Reality. The objective is to harness the potential of merging ‘perforated systems’ with AI, XR technologies, and the built environment, to foster the development of immersive and collaborative experiences that push the boundaries of worldmaking.

Speaker: Jenni Sorkin

Monday, December 4th, 2023 at 12pm noon PST in room 1605 Elings Hall and via Zoom

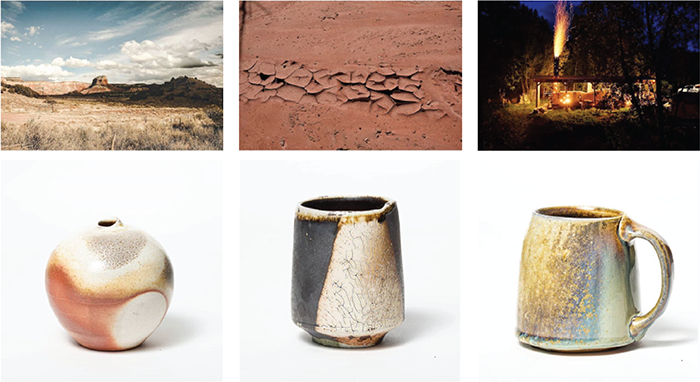

Abstract

In looking back to Arts & Crafts predecessors, this lecture argues for a historical framework for digital ceramics.

Bio

Jenni Sorkin is Professor of History of Art & Architecture at University of California, Santa Barbara, and is affiliated with the Art, Feminist Studies, and History Departments. She writes on the intersections between gender, material culture, and contemporary art, working primarily on women artists and underrepresented media. Her books include: Live Form: Women, Ceramics and Community (University of Chicago, 2016), Revolution in the Making: Abstract Sculpture by Women Artists, 1947-2016 (Skira, 2016) and Art in California (Thames & Hudson, 2021), as well as numerous essays in journals and exhibition catalogs. She serves on the University of California Press Editorial Board, as the Co-Executive Editor of Panorama: the Association of Historians of American Art, and is a member of the Editorial Board of the Journal of Modern Craft. She received her PhD in the History of Art from Yale University.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speaker: Anna Mansueti

Monday, November 27th, 2023 at 1pm PST via Zoom

Abstract

In Demining Reimagined, I explore new ways to supplement and improve humanitarian demining practices, UXO mission planning and reporting procedures, and mine action campaigning. The project consists of a booklet containing my research on this topic, stories about landmines and cluster munitions, drawings, detailed maps, and a video performance titled ‘Hazardous Fragmentation Distance(s)’.

Bio

Anna Mansueti is an interdisciplinary artist and designer. She completed her BA in Studio Art from the University of Colorado Boulder in 2012, and upon graduating, commissioned in the US Navy as an Explosive Ordnance Disposal (EOD) officer. For the following ten years, she served as a bomb technician and diver, conducting humanitarian mine action missions and multinational training operations with foreign partner forces in Europe and Southeast Asia. During her time as a Master in Design Studies (MDes) candidate at the GSD, Anna focused on the aftermath of war, unexploded ordnance and environmental degradation, and post-conflict reconciliation. She has also worked on a series of projects about veteran mental health, post-traumatic stress, and gender equity in the con, and is currently interested in new ways to supplement and improve the demining space. She is passionate about using artistic intelligence to reimagine new ways in the face of sustained periods of violence.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

Speaker: Ralf Baecker

November 20th, 2023 at 1pm PST via Zoom.

Abstract

After publishing his comprehensive book, professor, artist, and researcher Ralf Baecker is returning to the MAT Seminar to present his latest research and performance endeavor, Natural History of Networks, in discussion with Professor Marko Peljhan and curators Daria Parkhomenko and Andreas Broeckmann.

Bio

Ralf Baecker (*1977 Düsseldorf, Germany) is an artist working at the interface of art, science, and technology. Through installations, autonomous machines, and performances, he explores the underlying mechanisms of new media and technology. His objects perform physical realizations of thought experiments that act as subjective epistemological objects to pose fundamental questions about the digital, technology and complex systems and their entanglements with the socio-political sphere. His projects seek to provoke new imaginaries of the machinic, the artificial and the real. A radical form of engineering that bridges traditionally discrete machine thinking with alternative technological perspectives and a new material understanding that makes use of self-organizing principles.

Baecker has been awarded multiple prizes and grants for his artistic work, including the grand prize of the Japan Media Arts Festival in 2017, an honorary mention at the Prix Ars Electronica in 2012 and 2014, the second prize at the VIDA 14.0 Art & Artificial Life Award in Madrid, a working grant of the Stiftung Kunstfonds Bonn, the Stiftung Niedersachsen work stipend for Media Art 2010 and the stipend of the Graduate School for the Arts from the University of the Arts in Berlin and the Einstein Foundation.

His work has been presented in international festivals and exhibitions, such as the International Triennial of New Media Art 2014 in Beijing, Künstlerhaus Wien, ZKM | Center for Art and New Media in Karlsruhe, Martin-Gropius-Bau in Berlin, WINZAVOD Center for Contemporary Art in Moscow, Laboral Centro de Arte in Gijón, Centre de Cultura Contemporània de Barcelona (CCCB), NTT InterCommunication Center in Tokyo, Kasseler Kunstverein and Malmö Konsthall.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

For previous seminars, please visit our MAT Seminars Video Archive.

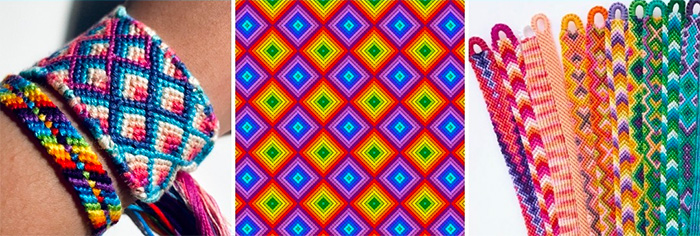

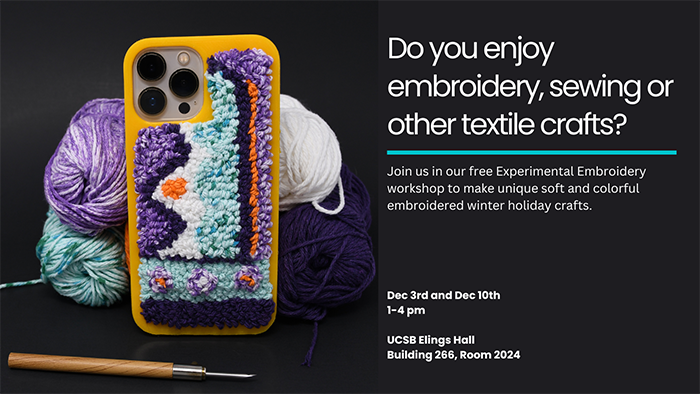

November 18th, 1-5pm

Elings Hall room 2024

The workshop is part of a research project aimed at investigating the application of computer-aided design in drafting Macramé friendship bracelet patterns. It will start with a short crafting session to introduce participants to the basics of making friendship bracelets, a form of Macramé textile craft, as well as common design characteristics of friendship bracelet patterns. It will then present a node-based, visual programming design system as the main digital tool for participants to use to create their very own bracelet patterns. The workshop aims to combine aspects of manual textile crafting, parametric design, and visual programming.

To learn more about the workshop and the work involved in the project, visit ecl.mat.ucsb.edu/events/parametricMacrame.

Speaker: Somayeh Dodge

Monday, November 6th, 2023 at 1pm PDT Room 1605 Elings Hall and via Zoom.

Abstract

Visualization is a key element in data-driven knowledge discovery and computational movement analysis. With the widespread increase in the availability and quality of space-time data capturing movement trajectories of individuals, meaningful representations and visualization techniques are needed to map and communicate movement patterns captured in the data. Proper representation of movement patterns and their dependencies grounded on cartographic principles and intuitive visual forms can facilitate scientific discovery, decision-making, collaborations, and foster understanding of movement. Using several use cases, this presentation discusses different approaches to mapping movement in static and dynamic displays to support knowledge discovery from human and animal movement data. I will also demo DynamoVis, an open-source software developed in Java and Processing to design, record and export custom animations and multivariate visualizations from movement data, enabling visual exploration and communication of animal movement patterns.

Bio

Somayeh Dodge is an Associate Professor of Spatial Data Science in the UCSB Department of Geography. She received her PhD in Geography with a specialization in Geographic Information Science from the University of Zurich in 2011. She is a recipient of the 2021 NSF CAREER award and the 2022 Emerging Scholar Award from the Spatial Analysis and Modeling Specialty Group of the American Association of Geographers. Her research focuses on developing data analytics, knowledge discovery, modeling, and visualization techniques to study movement in human and ecological systems. Somayeh is the Co-Editor in Chief of the Journal of Spatial Information Science, and a member of the editorial boards of multiple journals, including Geographical Analysis, Cartography and Geographic Information Science, and the Journal of Geographical Systems.

Thursday, November 2, 2023 from 6-10pm.

The University of California, Santa Barbara's Media Arts and Technology (MAT) program is a unique graduate program that combines computer science, engineering, digital art research, electronic music, and emerging media. On November 2nd, MAT will showcase its students' cutting-edge research and new media artworks at the Santa Barbara Center for Art, Science, and Technology (SBCAST).

Location: 513 Garden St, Santa Barbara

Date: Thursday, November 2, 2023

6-10pm

Participating Artists:

Speaker: Lev Manovich

Monday, October 30th, 2023 at 1pm PDT via Zoom.

Dr. Lev Manovich is a Presidential Professor at The Graduate Center, City University of New York, and the founder and director of the Cultural Analytics Lab. In 2013 Manovich appeared in the List of 25 People Shaping the Future of Design (Complex). In 2014 he was included in the list of 50 most interesting people building the future (The Verge).

Manovich played a key role in creating four new research fields: new media studies (1991-), software studies (2001-), cultural analytics (2007-) and AI aesthetics (2018-). He is the author and editor of 15 books including Artificial Aesthetics (2022), Cultural Analytics (2020), AI Aesthetics (2018), Theories of Software Culture (2017), Instagram and Contemporary Image (2017), Software Takes Command (Bloomsbury Academic, 2013), Black Box - White Cube (Merve Verlag Berlin, 2005), Soft Cinema (The MIT Press, 2005), The Language of New Media (The MIT Press, 2001), Metamediji (Belgrade, 2001), and Tekstura: Russian Essays on Visual Culture (Chicago University Press, 1993), as well as 180 articles which have been published in 35 countries and reprinted around 700 times. He is also one of the editors of the Quantitative Methods in Humanities and Social Science book series (Springer).

The Language of New Media has been translated into 14 languages and is used as a textbook in tens of thousands of programs around the world. According to the reviewers, this book offers "the first rigorous and far-reaching theorization of the subject"; "it places [new media] within the most suggestive and broad-ranging media history since Marshall McLuhan."

Manovich was born in Moscow where he studied fine arts, architecture, and computer programming. He moved to New York in 1981, receiving an M.A. in Visual Science and Cognitive Psychology (NYU, 1988) and a Ph.D. in Visual and Cultural Studies from the University of Rochester (1993). Manovich has been working with computer media as an artist, computer animator, designer, and developer since 1984.

His digital art projects have been shown in 120 group and 12 personal exhibitions worldwide. The lab’s projects were commissioned by MoMA, New York Public Library, and Google. "Selfiecity" won the Golden Award in the Best Visualization Project category in the global competition in 2014; "On Broadway" received the Silver Award in the same category in 2015. The venues that have shown his work include New York Public Library (NYPL), Google's Zeitgeist 2014, Shanghai Art and Architecture Biennale, Chelsea Art Museum (New York), ZKM (Karlsruhe, Germany), The Walker Art Center (Minneapolis, US), KIASMA (Helsinki, Finland), Centre Pompidou (Paris, France), ICA (London, UK), and Graphic Design Museum (Breda, The Netherlands).

In 2007 Manovich founded the Software Studies Initiative (renamed Cultural Analytics Lab in 2016). The lab pioneered computational analysis and visualization of massive cultural visual datasets in the humanities. The lab's collaborators included the Museum of Modern Art in NYC, Getty Research Institute, Austrian Film Museum, Netherlands Institute for Sound and Image, and other institutions that are interested in using its methods and software with their media collections. Between 2012 and 2016, Manovich directed a number of projects that present an analysis of 16 million Instagram images shared worldwide.

He has received grants and fellowships from the Guggenheim Foundation, Andrew Mellon Foundation, US National Science Foundation, US National Endowment for the Humanities (NEH), Twitter, and many other agencies.

Between 1996 and 2012, Manovich was a Professor in the Visual Arts Department at the University of California San Diego (UCSD), where he taught classes in digital art, new media theory, and digital humanities. In addition, Manovich was a visiting professor at California Institute of the Arts, The Southern California Institute of Architecture (SCI-Arc), University of California Los Angeles (UCLA), University of Amsterdam, Stockholm University, University of Art and Design in Helsinki, Hong Kong Art Center, University of Siegen, Gothenburg School of Art, Goldsmiths College at the University of London, De Montfort University in Leicester, the University of New South Wales in Sydney, The University of Tyumen, Tel Aviv University and Central Academy of Fine Arts (CAFA) in Shanghai. Between 2009 and 2017, he was a faculty member at the European Graduate School (EGS). He was also a core faculty member at The Strelka Institute for Media, Architecture, and Design, Moscow (2016-2019) and a visiting faculty member in the School of Cultural Studies and Philosophy, Higher School of Economics, Moscow, Russia (2020-2021).

Manovich is in demand to lecture on his research topics around the world. Since 1999 he has presented over 750 invited lectures, keynotes, seminars, and master classes in North and South America, Asia, and Europe.

Topical writings: manovich.net

Speaker: Aaron Hertzmann

Monday, October 23rd, 2023 at 1pm PDT. Room 1605 Elings Hall and via Zoom.

Aaron Hertzmann is a Principal Scientist at Adobe, and Affiliate Faculty at University of Washington. He received a BA in computer science and art/art history from Rice University in 1996, and a PhD in computer science from New York University in 2001. He was a Professor at University of Toronto for 10 years, and has also worked at Pixar Animation Studios and Microsoft Research. He has published over 100 papers in computer graphics, computer vision, machine learning, robotics, human-computer interaction, visual perception, and art. He is an ACM Fellow and an IEEE Fellow.

research.adobe.com/person/aaron-hertzmann

Thursday, October 19th, 2023 at 5pm. Room 387-1015.

Abstract

Models for the interaction of the player with the instrument are fundamental to the accurate synthesis of sound based on physically inspired models. Depending on the musical instrument, the palette of possible interactions is generally very broad and includes the coupling of body parts, mechanical objects and/or devices with various components of the instrument. In this talk we focus on the interaction of the player with strings, whose simulation requires accurate models of the fingers, dynamic models of the bow, of the plectrum and of the friction of objects such as bottle necks. We also consider collisions and imperfect pressure on the fingerboard as important side effects and playing styles. Our models do not depend on the specific numerical implementation but are simply illustrated in the digital waveguide scheme.

Gianpaolo Evangelista is professor of Music Informatics at the University of Music and Performing Arts Vienna, Austria. Previously he was professor of Sound Technology at Linköping University, Sweden, researcher and assistant professor at the University “Federico II” of Naples, Italy and adjunct professor at the Polytechnic of Lausanne (EPFL), Switzerland. He received the Laurea in Physics from the University “Federico II” of Naples and the Master and PhD in Electrical and Computing Engineering from the University of California Irvine. He has collaborated with several musicians, including Iannis Xenakis (Paris) and Curtis Roads. His interests are in all applications of Signal Processing, Physics and Mathematics to Sound and Music, particularly for the analysis, synthesis, special effects and the separation of sound sources.

Speaker: Lydia Zimmermann

Monday, October 16th, 2023 at 1pm PDT via Zoom.

Bio

Lydia Zimmermann (Barcelona, 1966) has written and directed fiction films, TV movies, documentaries, video art and produced filmic installations. She has taught filmmaking at universities and NGOs. She has filmed in Spain, Australia, Mexico, Haiti, Burkina Faso and Colombia, combining humanitarian and teaching work. She has participated in the creation of Cine Institute, a school for young Haitian filmmakers (2011-2015), taught workshops at the Gambidi acting school in Ouagadougou, Burkina Faso (2017-2018), and supported the creation of the indigenous media collective Ñambi Rimai (2018-201).

In her filmography, she explores the hybrid combination of genres and acting naturalism. She currently lives between Zurich and Barcelona and is writing her next fiction film, a co-production between Eddie Saeta (SP) and Tilt Production (CH).

Speaker: Garnet Hertz

Monday, October 9th, 2023 at 1pm PDT.

Room 1605 Elings Hall and via Zoom.

Abstract

Author of "Art + DIY Electronics" (MIT Press, 2023). A systematic theory of DIY electronic culture, drawn from a century of artists who have independently built creative technologies.

Since the rise of Arduino and 3D printing in the mid-2000s, do-it-yourself approaches to the creative exploration of technology have surged in popularity. But the maker movement is not new: it is a historically significant practice in contemporary art and design. This book documents, tracks, and identifies a hundred years of innovative DIY technology practices, illustrating how the maker movement is a continuation of a long-standing creative electronic subculture. Through this comprehensive exploration, Garnet Hertz develops a theory and language of creative DIY electronics, drawing from diverse examples of contemporary art, including work from renowned electronic artists such as Nam June Paik and such art collectives as Survival Research Laboratories and the Barbie Liberation Organization.

Hertz uncovers the defining elements of electronic DIY culture, which often works with limited resources to bring new life to obsolete objects while engaging in a critical dialogue with consumer capitalism. Whether hacking blackboxed technologies or deploying culture jamming techniques to critique commercial labor practices or gender norms, the artists have found creative ways to make personal and political statements through creative technologies. The wide range of innovative works and practices profiled in Art + DIY Electronics form a general framework for DIY culture and help inspire readers to get creative with their own adaptations, fabrications, and reimaginations of everyday technologies.

Bio

Garnet Hertz is Canada Research Chair in Design and Media Arts, and is Associate Professor of Design at Emily Carr University. His art and research investigate DIY culture, electronic art and critical design practices. He has exhibited in 18 countries in venues including SIGGRAPH, Ars Electronica, and DEAF and has won top international awards for his work, including the Oscar Signorini Award in robotic art, a Fulbright award, and Best Paper Award at the ACM Conference on Human Factors in Computing Systems (CHI). He has worked as Faculty at Art Center College of Design and as Research Scientist at the University of California Irvine. His research is widely cited in academic publications, and popular press coverage of his work has appeared in 25 countries, including in outlets like The New York Times, Wired, The Washington Post, NPR, USA Today, NBC, CBS, TV Tokyo and CNN Headline News.

Suleyman will also give a lecture for the UCSB Arts and Lectures series in Campbell Hall on October 5th, 2023 at 7:30pm.