Date: Tuesday, December 22nd

Time: 3pm

Location: Elings Hall, room 2003 (MAT conference room).

Abstract:

Modular synthesizers are currently experiencing a massive resurgence in popularity. Their endless flexibility and exotic interfaces have captured the imaginations of composers and musicians worldwide. In the past few years, the number of Eurorack manufacturers has gone from a small handful to more than a hundred, with over 1,000 modules available for purchase. In the software realm, many new tools and packages are available, while older tools are receiving major makeovers to attract the new audience.

Despite this incredible explosion of interest, surprisingly little attention has been paid to educating users. New users are buying systems only to find that their modules come with no documentation at all. Even when documentation exists, it often lists only the inputs and outputs, not how to use the module inside a complex patch.

In this talk, two modular software packages created for education will be presented: Euromax for Max/MSP 5+, and Euro Reakt for Reaktor 6. We will listen to open-source modular recordings and analyze their patch diagrams. We will look at initial drafts of a modular textbook, along with the lessons learned from teaching modular synthesis in a classroom environment. Finally, we will learn about original modular designs and research, including a new hardware module, software by Unfiltered Audio, and Multi-Phasor Synthesis, a novel and flexible approach to complex waveform generation.

Date: Wednesday, December 16th

Time: 1pm

Location: Elings Hall, room 2003 (MAT conference room).

Abstract:

In this research I am developing a proof-of-concept system that allows artists to easily create and transform hypercomplex fractals through real-time ray casting. The ray casting system gives the user control not only over the parameters of the fractal equation but over the equation itself, through direct access to the shader in which the computation takes place. The system is being built on an interactive, real-time, multi-display platform under development by our research group, whose underlying framework supports full surround stereo and interactivity. This will allow the fractal framework to scale easily to multiple displays and systems.
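The abstract does not name the fractal equation or the marching scheme, so the following is only a minimal sketch under stated assumptions: a quaternion Julia set (iterating q ← q² + c) stands in for the hypercomplex fractal, and a naive fixed-step ray march in Python stands in for the shader. The function names, step size, bailout value, and the fixed fourth coordinate `w` are all hypothetical. Replacing `quat_square` with another map corresponds to the kind of equation editing the system is meant to expose.

```python
# Hypothetical sketch: fixed-step ray casting against a quaternion Julia set.

def quat_square(q):
    """Square a quaternion (a, b, c, d): (a, v)^2 = (a^2 - |v|^2, 2*a*v)."""
    a, b, c, d = q
    return (a * a - b * b - c * c - d * d, 2 * a * b, 2 * a * c, 2 * a * d)


def in_julia(q, c, max_iter=32, bailout=4.0):
    """Return True if q stays bounded under q <- q^2 + c for max_iter steps."""
    for _ in range(max_iter):
        q = tuple(x + y for x, y in zip(quat_square(q), c))
        if sum(x * x for x in q) > bailout:  # squared norm has escaped
            return False
    return True


def cast_ray(origin, direction, c, step=0.01, max_dist=4.0, w=0.0):
    """March along origin + t * direction; return the first t inside the set.

    Each 3D sample point is lifted to a quaternion by fixing the fourth
    coordinate to w (a hypothetical slicing choice). Returns None on a miss.
    """
    t = 0.0
    while t < max_dist:
        x, y, z = (o + t * d for o, d in zip(origin, direction))
        if in_julia((x, y, z, w), c):
            return t
        t += step
    return None


if __name__ == "__main__":
    # With c = 0 the set is the unit 4-ball, so this ray hits close to t = 1.
    print(cast_ray((0.0, 0.0, -2.0), (0.0, 0.0, 1.0), c=(0.0, 0.0, 0.0, 0.0)))
```

A real-time renderer would typically use a distance estimator rather than fixed steps; the fixed step is chosen here only to keep the idea visible.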

Date: Thursday, December 3rd

Time: 4pm

Location: Elings Hall, room 2615 (transLAB).

Abstract:

To advance the use of geometric algebra in practice, we develop computational methods for generating 3D forms with the conformal model. Three discrete parameterizations of spatial structures – symmetric, kinematic, and curvilinear – are applied to synthesize new shapes on a computer. Our algorithms detail the implementation of space groups, linkage mechanisms, and rationalized surfaces, illustrating techniques that directly benefit from the underlying mathematics, and demonstrating how they might be applied to various scenarios. Each technique engages the versor – as opposed to matrix – representation of transformation, which allows for structure-preserving operations on geometric primitives. This covariant methodology facilitates constructive design through geometric reasoning: incidence and movement are expressed in terms of spatial variables such as lines, circles, and spheres. In addition to providing a toolset for generating forms and transformations in computer graphics, the resulting expressions could be used in the design and fabrication of machine parts, tensegrity systems, robot manipulators, deployable structures, and freeform architectures. Building upon existing algorithms specific to conformal geometric algebra (CGA), these methods contribute to the advancement of geometric thinking, leveraging an intuitive spatial logic that can be creatively applied across disciplines, ranging from time-based media to mechanical and structural engineering, or reformulated in higher dimensions.
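As a small illustration of the versor idea, and not of the full conformal model, recall that the rotors of 3D geometric algebra behave like unit quaternions: a rotation is applied as a sandwich product R x R̃, and composing two rotations is a single product of versors that is itself a rotation. The sketch below uses plain quaternion arithmetic as a stand-in for a CGA library; the function names are hypothetical.

```python
import math


def qmul(p, q):
    """Hamilton product of quaternions given as (w, x, y, z)."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )


def rotor(axis, angle):
    """Unit quaternion for a rotation by `angle` radians about a unit `axis`."""
    s = math.sin(angle / 2)
    return (math.cos(angle / 2), axis[0] * s, axis[1] * s, axis[2] * s)


def reverse(q):
    """Reverse (conjugate); for a unit rotor this is its inverse."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def apply(r, v):
    """Sandwich product r v r~ acting on a 3D vector v."""
    _, x, y, z = qmul(qmul(r, (0.0, *v)), reverse(r))
    return (x, y, z)


if __name__ == "__main__":
    quarter = rotor((0.0, 0.0, 1.0), math.pi / 2)
    eighth = rotor((0.0, 0.0, 1.0), math.pi / 4)
    print(apply(quarter, (1.0, 0.0, 0.0)))                 # x axis rotated onto y
    print(apply(qmul(eighth, eighth), (1.0, 0.0, 0.0)))    # same result, composed
```

Repeated floating-point products of versors can still drift from unit length, but restoring them only requires renormalizing four numbers rather than re-orthogonalizing a 3×3 matrix.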

Date: Friday, December 4th

Time: 11am

Location: Elings Hall, room 2615 (transLAB).

Abstract:

In 1958 the 20th-century architect and composer Iannis Xenakis transformed the glissando lines in the graphic notation of Metastaseis into the ruled surfaces of the nine concrete hyperbolic paraboloids of the Philips Pavilion. Over the next 20 years Xenakis developed the Polytopes, multimodal sites composed of sound and light, and in 1978 his Diatope bookended these spectacles, once again transforming the architectural and musical modalities through a general morphology. These poetic compositions were architecture and music simultaneously, forming the best examples of what Marcos Novak would later call archimusic.

Contemporary works have continued to experiment with this interdisciplinary domain, but, though creative and interesting, they have yet to move this transformational conversation forward. This dissertation proposes to examine how to advance the field of archimusic by introducing (A) an evaluative method for analyzing prior trans-disciplinary works of archimusic, and (B) a generative model that, informed by the findings of the evaluative method, integrates new digital modalities into the transformational compositional process. Together these two developments are introduced as archimusical synthesis, which aims to contribute a novel way of thinking and making within this dynamic spatial and temporal territory. The dissertation also proposes to organize and categorize the field of archimusic as an end in itself, presenting this trans-disciplinary territory as a studied and understood discipline framed for continued exploration.

Date: Wednesday, December 9th

Time: 11am

Location: Elings Hall, room 2611 (Experimental Visualization Lab).

Abstract:

The way we listen to music has changed fundamentally over the past two decades with the increasing availability of digital recordings and the portability of music players. Advances in music recommendation research have helped attract millions of users to online music streaming services offering tens of millions of tracks (e.g., Spotify, Pandora). Research on recommender systems has focused mainly on algorithmic accuracy and the optimization of ranking metrics. However, recent work has highlighted the importance of other aspects of the recommendation process, including explanation, transparency, control, and user experience in general. Building on these aspects, this dissertation explores user interaction, control, and visual explanation of music-related mood metadata during the recommendation process. It introduces a hybrid recommender system that suggests music artists by combining mood-based and audio content filtering in a novel interactive interface. The main vehicle for exploration and discovery in a music collection is a novel visualization that maps moods and artists into the same latent space, built from reduced dimensions of high-dimensional artist-mood associations. Because it is not known what the reduced dimensions represent, this work uses a hierarchical mood model to explain the constructed space. Results of two user studies, with over 200 participants each, show that visualization and interaction in a latent space improve acceptance and understanding of both metadata and item recommendations. However, too much of either can cause cognitive overload and harm the user experience. The proposed visual mood space and interactive features, along with these findings, aim to inform the design of future interactive recommendation systems.
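The abstract does not say which dimensionality reduction builds the latent space. As one plausible illustration only, a truncated singular value decomposition of an artist-by-mood association matrix places artists and moods in the same low-dimensional space, so related artists and moods land near each other. The matrix values and function name below are made up, and NumPy is assumed to be available.

```python
import numpy as np


def latent_space(assoc, k=2):
    """Embed rows (artists) and columns (moods) of `assoc` in one k-D space.

    Assumption: a rank-k SVD with the singular values split evenly between
    the two factors, so that artist_xy @ mood_xy.T approximates `assoc`.
    """
    u, s, vt = np.linalg.svd(assoc, full_matrices=False)
    root = np.sqrt(s[:k])
    artist_xy = u[:, :k] * root   # one row per artist
    mood_xy = vt[:k].T * root     # one row per mood
    return artist_xy, mood_xy


if __name__ == "__main__":
    # Hypothetical association strengths: 4 artists x 3 moods.
    assoc = np.array([[0.9, 0.1, 0.0],
                      [0.8, 0.2, 0.1],
                      [0.1, 0.7, 0.9],
                      [0.0, 0.8, 0.7]])
    artists, moods = latent_space(assoc)
    print(np.round(artists, 2))
    print(np.round(moods, 2))
```

Splitting the singular values symmetrically keeps both point sets on a comparable scale, which matters when they are drawn in one plot; the axes themselves carry no predefined meaning, which is why an external model is needed to explain them.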

Date: Tuesday, December 8th

Time: 10am

Location: Elings Hall, room 2003 (MAT conference room).

Abstract:

Forces of Nature is a re-usable platform of custom-built hardware and software for creating software-defined kinetic sculpture. A two-dimensional array of high-energy electromagnets, synchronized by a microcontroller, projects an animated electromagnetic field that is visualized in ferrofluid.

Through interactions of water, ferrofluid, gravity, and the generated magnetic output field, Forces of Nature is capable of exhibiting a wide variety of visual patterns and expressive sequences of motion. These expressive units are recombined in pre-scripted sequences (timed to music or other performances) or directed in real time through a USB connection to a host machine. The host machine may be creating a performance in reaction to live sensor input from a viewer, feedback sensors aimed at the fluid vessel, algorithmic design, pseudorandom patterns of lower-level sequences, or any number of potential inputs in combination.

Speaker: Mike Harding.

Time: Monday, December 7, 4pm.

Location: Engineering Science Building, room 2001.

Abstract:

How a cultural organization and its artists respond to and develop their relationship with technology from 1982 onwards.

Bio:

Curator & Producer; Lecturer & Publisher; Author & Editor; occasional exhibitions, installations and performances. He has been running the audio-visual label Touch for 30 years and in this period has acquired much experience and information on disseminating cultural sounds to a wider audience. Since 1982: Touch, with Jon Wozencroft (senior lecturer in the Department of Communication & Design at the Royal College of Art, London).

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Stephen Travis Pope.

Time: Monday, November 9, 4pm.

Location: Engineering Science Building, room 2001.

Abstract:

Recording channel strips are a central component of source processing in music recording, as well as in mixing and mastering. The stages of a typical channel strip are Input, Dynamics, Equalization, Effects, and Output Routing. This presentation starts with a brief history of recording channel strips and continues with a survey of current hardware and software implementations. The final section presents the presenter's requirements for the ideal recording channel strip.
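To make the stage order concrete, here is a minimal sketch of a mono channel strip as a chain of functions over a list of samples, following the five stages named in the abstract. Every stage is deliberately simplistic and is an assumption, not the presenter's design: a static decibel-domain compressor without attack or release, a one-pole low-pass standing in for a full equalizer, a single echo standing in for effects, and constant-power panning for output routing. All function and parameter names are hypothetical.

```python
import math


def input_stage(samples, gain_db=0.0):
    """Input: trim gain in decibels."""
    g = 10 ** (gain_db / 20)
    return [s * g for s in samples]


def dynamics_stage(samples, threshold_db=-6.0, ratio=4.0):
    """Dynamics: static hard-knee compressor (no attack/release smoothing)."""
    out = []
    for s in samples:
        if s == 0.0:
            out.append(0.0)
            continue
        level_db = 20 * math.log10(abs(s))
        if level_db > threshold_db:
            level_db = threshold_db + (level_db - threshold_db) / ratio
        out.append(math.copysign(10 ** (level_db / 20), s))
    return out


def eq_stage(samples, alpha=0.5):
    """Equalization stand-in: one-pole low-pass, y += alpha * (x - y)."""
    y, out = 0.0, []
    for s in samples:
        y += alpha * (s - y)
        out.append(y)
    return out


def effects_stage(samples, delay=2, mix=0.5):
    """Effects stand-in: one feed-forward echo `delay` samples later."""
    return [s + (mix * samples[n - delay] if n >= delay else 0.0)
            for n, s in enumerate(samples)]


def output_stage(samples, pan=0.0):
    """Output routing: constant-power pan (-1 = left, +1 = right) to stereo."""
    angle = (pan + 1) * math.pi / 4
    left, right = math.cos(angle), math.sin(angle)
    return [(s * left, s * right) for s in samples]


def channel_strip(samples):
    """Apply the five stages in their conventional order."""
    x = input_stage(samples, gain_db=3.0)
    x = dynamics_stage(x)
    x = eq_stage(x)
    x = effects_stage(x)
    return output_stage(x)
```

Keeping each stage a pure function of its input makes it easy to reorder or bypass stages, which mirrors how engineers patch around sections of a hardware strip.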

Bio:

Stephen Travis Pope has realized his musical works in North America (Toronto, Stanford, Berkeley, Santa Barbara, Havana) and Europe (Paris, Amsterdam, Stockholm, Salzburg, Vienna, Berlin). His music is available from Centaur Records, Perspectives of New Music, Touch Music, SBC Records, Absinthe Records, and the Electronic Music Foundation. Stephen also has over 100 technical publications on music theory and composition, computer music, and artificial intelligence. From 1995 to 2010 he worked at the University of California, Santa Barbara (UCSB).

Time: Monday, November 2, 4pm.

Location: Engineering Science Building, room 2001.

Abstract:

When my Los Angeles-based art-collector friend Raj Dhawan asked me to write music for an exhibition of his Alphonse Mucha (1860-1939) collection, I at once thought of basing the music on that of a Mucha contemporary and compatriot. My choice fell on the composer Leoš Janáček (1854-1928), born, like Mucha, in Moravia (then in the Austrian Empire, today in the Czech Republic). Thirty-seven selected Mucha paintings are matched by an equal number of Janáček pieces, many of them movements of larger works. The bigger the painting, the longer the musical selection. At first the music is constrained to the range of a minor seventh, all notes outside this range being discarded. The notes are also redistributed among five instruments (flute, clarinet, violin, cello, and piano), and the range gradually increases to just over four octaves, by which point each instrument is allotted exactly ten notes. Analogously, each Mucha painting is first shown only in its most widespread color, with the rest rendered in grey. During each Janáček piece, the colors of the Mucha work expand in range until they finally include all the original ones. This audiovisual composition bears the title )ertur(, which could be expanded to words like "aperture" (English, French), "apertura" (Czech, Italian, Polish), "copertura" (Italian), "abertura" and "cobertura" (Spanish, Portuguese), or "obertura" (Spanish). "Ertur" means "peas" in Icelandic.
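The range constraint described above can be sketched as a filter over pitches whose allowed span widens as the piece unfolds. This is a hypothetical reconstruction, not Barlow's actual procedure: pitches are MIDI note numbers, the range is anchored at an assumed lowest note, and the span grows linearly from a minor seventh (10 semitones) to 49 semitones. The 49-semitone endpoint is an inference from the abstract: four octaves plus a semitone contains 50 chromatic pitches, exactly ten for each of the five instruments.

```python
def allowed_range(progress, low_note=48, start_span=10, end_span=49):
    """(lowest, highest) MIDI note allowed at `progress` in [0, 1].

    Hypothetical: the span grows linearly from a minor seventh (10 semitones)
    to 49 semitones, i.e. 50 chromatic pitches, anchored at low_note.
    """
    span = round(start_span + (end_span - start_span) * progress)
    return low_note, low_note + span


def constrain(notes, progress, **range_args):
    """Discard every note outside the currently allowed range."""
    lo, hi = allowed_range(progress, **range_args)
    return [n for n in notes if lo <= n <= hi]


def split_pitches(lo, hi, parts=5):
    """Divide the chromatic pitches lo..hi into `parts` equal blocks, low to high."""
    pitches = list(range(lo, hi + 1))
    size = len(pitches) // parts
    return [pitches[i * size:(i + 1) * size] for i in range(parts)]


if __name__ == "__main__":
    melody = [45, 50, 57, 60, 64, 72, 88]   # made-up input pitches
    print(constrain(melody, 0.0))           # only notes within a minor seventh survive
    print(constrain(melody, 1.0))
    print([len(block) for block in split_pitches(*allowed_range(1.0))])
```

The same progress value could drive the color expansion of the paintings, which is one way to keep the audio and visual processes in lockstep.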

Bio:

Born in 1945, Clarence Barlow obtained a science degree at Calcutta University in 1965 and a pianist diploma from Trinity College of Music London the same year. He studied acoustic and electronic composition from 1968 to 1973 at Cologne Music University as well as sonology from 1971 to 1972 at Utrecht University. His use of a computer as an algorithmic music tool dates from 1971. He initiated and in 1986 co-founded GIMIK: Initiative Music and Informatics Cologne, chairing it for thirteen years. He was in charge of computer music from 1982 to 1994 at the Darmstadt Summer Courses for New Music and from 1984 to 2005 at Cologne Music University. In 1988 he was Director of Music of the XIVth International Computer Music Conference, held that year in Cologne. From 1990 to 1994 he was Artistic Director of the Institute of Sonology at the Royal Conservatory in The Hague, where from 1994 to 2006 he was Professor of Composition and Sonology. At UCSB he is a professor in the Music Department (as Corwin Endowed Chair and Head of Composition), in Media Arts and Technology, and in the College of Creative Studies. His interests include the algorithmic composition of instrumental, electronic, and computer music, music software development, and interdisciplinary activities, e.g. between music, language, and the visual.

music.ucsb.edu/people/clarence-barlow

Speaker: Irene Kuling, Vrije Universiteit Amsterdam.

Time: Monday, October 26, 4pm.

Location: Engineering Science Building, room 2001.

Abstract:

How do you know where your hand is? Probably you don’t think about this question too often. However, knowledge about the position of your hand is essential during your daily interaction with all kinds of objects. Two factors that play an important role in the position sense of the hand are vision and proprioception. In this talk I’ll present some of my work on visuo-proprioceptive position sense of the hand. This work reveals systematic (subject-dependent) errors between the visual and the proprioceptive perceived position of the hand. These errors are consistent over time, influenced by skin stretch manipulations, and resistant to force manipulations. An interesting question is how we can use this knowledge in the design of human-machine interaction.

Bio:

Irene A. Kuling received a B.Sc. degree in Physics from Utrecht University (2008) and an M.Sc. degree in Human-Technology Interaction from Eindhoven University of Technology (2011) in the Netherlands. She is currently working toward a PhD in the field of visuo-haptic perception at Vrije Universiteit Amsterdam. Her research is part of the H-Haptics project, a large Dutch research program on haptics. Irene studies the integration of and differences between proprioceptive and visual information about the hand. Her research interests include human perception, haptics, and psychophysics.

www.researchgate.net/profile/Irene_Kuling

Date: Thursday, October 15, 2015

Time: 4pm

Location: Elings Hall, Experimental Visualization Lab, room 2611

Abstract:

This work presents a case study exploring interface and interaction design, gestural input, and control strategies for electroacoustic vocal performance. First, I survey historical experiments in electronic instrument and interface design using gestural input and control strategies, from the early 1950s to the present. Second, I examine current real-time vocal processing techniques and control mechanisms, and the lack of theoretical support and scientific evaluation for real-time gestural control in electroacoustic vocal performance. Then, I propose a taxonomy of gestural input and control mechanisms in electroacoustic vocal performance and provide examples demonstrating each mechanism. Finally, I present my case study, the Tibetan Singing Prayer Wheel (TSPW): a hand-held, wireless, sensor-based musical instrument with a human-computer interface that simultaneously processes vocals and synthesizes sound based on the performer’s hand gestures, using a one-to-many mapping strategy. A physical model simulates the singing bowl, while a modal reverberator and a delay-and-window effect process the performer’s vocals. The system is designed for electroacoustic vocalists who want to use a solo instrument to enrich their vocal expression and to achieve performance goals that would normally require multiple instruments and activities.

The installation consists of interactive video projections, touchable reactive sculptures, and live video feeds displayed at different areas of the UCSB campus simultaneously. It will be open to the public from Wednesday, September 30 to Friday, October 2, from 9am to 5pm, at the Glass Box Gallery in the Art Building.

The main dance performance, by Kiaora Fox and Esron Gates, will take place on Tuesday, September 29, at 8pm.

Funding assistance provided by an IHC Visual and Performing Arts Grant.

- 2pm: New Students

- 3pm: Returning Students and New Students

Location: Elings Hall room 1601

Refreshments will be served.

Date: Thursday, September 10, 2015

Time: 11am

Location: Elings Hall, room 2003 (MAT conference room).

Abstract:

Musical looping enables composers to create an arbitrarily large amount of music with consistent rhythm and meter from just a (temporally) short sample. Yet incessant repetition, a consequence of looping, quickly becomes monotonous, a problem composers have historically addressed by layering multiple looping samples to provide variation. Layering loops is certainly a well-founded compositional technique, but it only creates a diversion from the repetition.

Shifty Looping addresses the monotony problem of traditional looping while preserving its advantages. In this technique, developed by Matthew Wright (2006), loop points are dynamic and the playback position randomly walks among the possible loop points. However, the system requires a manual preprocessing and analysis stage that hinders the user experience, and the loop points it finds may contain audible discontinuities.
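The random walk over loop points can be sketched in a few lines. This is a simplified, hypothetical model rather than Wright's implementation or the presenter's web application: candidate loop points are assumed to be bar-aligned start positions (in beats) that have already been found, every segment lasts exactly one bar so the meter is preserved, and after each segment the playback position steps to a neighboring loop point or stays put.

```python
import random


def shifty_loop(loop_points, bar_length, steps, seed=None):
    """Hypothetical sketch of Shifty Looping as a random walk over loop points.

    loop_points: sorted, bar-aligned start positions in beats (assumed given;
    finding them is the analysis stage that the abstract sets out to automate).
    Each segment lasts exactly bar_length beats, so meter is preserved.
    Returns the (start, end) segments to play back to back.
    """
    rng = random.Random(seed)
    index = 0
    segments = []
    for _ in range(steps):
        start = loop_points[index]
        segments.append((start, start + bar_length))
        # Step to a neighboring loop point (or stay), clamped to the valid range.
        index = min(max(index + rng.choice((-1, 0, 1)), 0), len(loop_points) - 1)
    return segments


if __name__ == "__main__":
    bars = [0, 4, 8, 12, 16]  # made-up bar starts in a 4/4 sample
    for segment in shifty_loop(bars, bar_length=4, steps=8, seed=1):
        print(segment)
```

Restricting each step to a neighbor keeps the output close to the original ordering of the material; allowing jumps to any loop point would increase variety at the cost of coherence.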

I present a web application implementing Shifty Looping using data mining techniques to automate the manual analysis and preprocessing stages. Through a comparison of the audio content of the output samples from both this implementation and the original, I show that this system is able to find better loop points and reduce audible discontinuities while maintaining consistent rhythm. Future work includes increasing the system’s robustness and ability to work across different styles and genres of music.

Time: Tuesday, June 9, 8pm

Location: The Fun Zone, in downtown Santa Barbara

The event featured performances by several MAT students, special guest performers, and many of the students in Charlie Roberts' Spring quarter course "Introduction to Algorithmic Composition and Sound Synthesis". Most of the performers used Gibber, a creative coding environment that runs in the browser, developed by Charlie Roberts.

Special guest performer Chad McKinney gave a talk about Necronomicon, a framework that he and his brother Curtis are developing, which combines OpenGL, audio synthesis, and live interaction.

Time: Tuesday, June 9, 1pm

Location: Systemics Lab, room 2810 Elings Hall

Abstract:

In 1962 Karlheinz Stockhausen’s "Concept of Unity in Electronic Music" introduced a connection between the parameters of intensity, duration, pitch, and timbre using an accelerating pulse train. In 1973 John Chowning discovered that complex audio spectra could be synthesized by increasing vibrato rates past 20Hz. In both cases the notion of increased speed to produce timbre was critical to discovery. Although both composers also utilized sound spatialization in their works, spatial parameters were not unified with their synthesis techniques. This dissertation examines software studies and multimedia works involving the use of spatial and visual data to produce complex sound spectra. The culmination of these experiments, Spatial Modulation Synthesis, is introduced as a novel, mathematical control paradigm for audio-visual synthesis, providing unified control of spatialization, timbre, and visual form using high-speed sound trajectories.
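Chowning's observation is the basis of frequency modulation (FM) synthesis: a sine carrier whose phase is modulated by another sine, y(t) = sin(2π f_c t + I sin(2π f_m t)). At low modulating rates this is heard as vibrato; at audio rates it produces sidebands at f_c ± k f_m whose amplitudes are given by the Bessel functions J_k(I). The minimal sketch below generates such a tone and measures a few spectral components with a single-bin DFT; the sample rate, frequencies, and function names are illustrative assumptions.

```python
import cmath
import math

SAMPLE_RATE = 8000  # Hz; an arbitrary choice for this sketch


def fm_tone(carrier_hz, mod_hz, index, n_samples, sr=SAMPLE_RATE):
    """Frequency modulation: sin(2*pi*fc*t + I*sin(2*pi*fm*t)).

    A slow mod_hz (a few Hz) is heard as vibrato; at audio rates the
    modulation instead creates sidebands at fc +/- k*fm.
    """
    return [
        math.sin(2 * math.pi * carrier_hz * n / sr
                 + index * math.sin(2 * math.pi * mod_hz * n / sr))
        for n in range(n_samples)
    ]


def magnitude_at(signal, freq_hz, sr=SAMPLE_RATE):
    """Amplitude of the sinusoid at freq_hz, via a single-bin DFT.

    Essentially exact when the signal spans a whole number of periods of
    every component, as in the one-second example below.
    """
    acc = sum(x * cmath.exp(-2j * math.pi * freq_hz * k / sr)
              for k, x in enumerate(signal))
    return 2 * abs(acc) / len(signal)


if __name__ == "__main__":
    tone = fm_tone(carrier_hz=1000, mod_hz=100, index=1.0, n_samples=SAMPLE_RATE)
    for f in (900, 1000, 1050, 1100, 1200):
        print(f, round(magnitude_at(tone, f), 3))
```

With f_c = 1000 Hz, f_m = 100 Hz and I = 1, the carrier component comes out near J_0(1) ≈ 0.77 and the first sidebands at 900 and 1100 Hz near J_1(1) ≈ 0.44, while a frequency between sidebands, such as 1050 Hz, is essentially empty.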

The unique, visual sonification and spatialization rendering paradigms of this dissertation necessitated the development of an original audio-sample-rate graphics rendering implementation, which, unlike typical multimedia frameworks, provides an exchange of audio-visual data without downsampling or interpolation. This enables bidirectional use of data as graphics and sound.

Time: Friday, June 5th, 11am - 12pm

Location: Experimental Visualization Lab, room 2611 Elings Hall

Abstract:

Robotic systems are poised to enter nearly every facet of our lives due to many converging factors including advanced controls/AI techniques, low-cost components and design tools, the accessibility of these to the general public, a national interest in STEM, and the outreach efforts of making/hacking culture.

Just as these robotic systems have entered our manufacturing workforces, they will enter our homes, schools, workplaces, hospitals, battlefields, and museums. And just as humans have had to develop safe, efficient, and compelling ways to work with factory robots, we will have to do the same for smart homes, autonomous vehicles, and intelligent classrooms.

Currently, creators of robotic media systems lack a design framework which addresses the interconnected roles of physical morphology, behavior, time, perception, function, and intelligence. Design solutions are nearly always ad-hoc, and designers lack a methodology for objective comparison between the many forms that these systems may take. The field of artificial intelligence has only relatively recently begun to explore the components of intelligence that are embodied, affective, or non-verbal.

This dissertation proposes a unified approach to the design of robotic media systems through the application of knowledge from the fields of animal cognition, social robotics, control theory, cinema, and media arts. The dual roles of observer and observed, the many components of intelligence, and the distribution of intelligence throughout a physical embodiment are connected through the proposed Morphology-Intelligence Matrix, which is presented as a tool for designing robotic media systems that must interact with humans in intelligent ways.

To support and illustrate this approach, this dissertation describes and analyzes many robotic systems ranging from the military to the artistic, including several original works.

Speaker: Edward Zajec, Professor Emeritus of Computer Art, Syracuse University New York.

Time: Thursday, May 28, 5pm - 6:30pm.

Location: Engineering Science Building, room 2001.

Abstract:

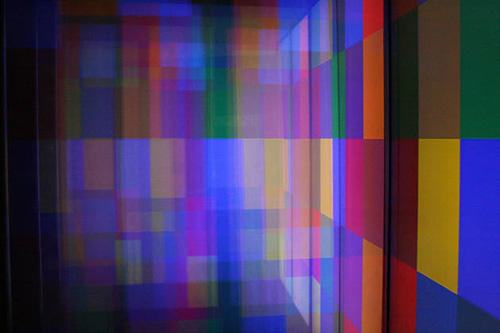

The problem stated in the title hints at a wider problematic, which does not involve only the articulation of light in a time-space continuum, but must also consider this whole from a sound-image and art-science perspective. In turn, all this implies a search to find a common ground between these multiple overlapping realities and disciplines. Throughout the years it has been my main concern not to eliminate distinction between the different fields or to erase boundaries but rather to smooth the crossings by seeking out areas of common interest. I am taking this occasion to present some of the interconnections between the various parts that I was able to uncover in the process of developing my work. To be more specific, I will discuss, amongst other issues, the role of rhythm in the time dimension and the role of interval in the space dimension in the process of seeking new ways of envisioning the world with the aid of the new technologies.

The Edward Zajec lecture is presented by the Systemics Public Program, led by Professor Marko Peljhan, and the UCIRA Integrative Methodologies series, in collaboration with the MAT End of Year Show team and the Media Arts and Technology lecture series program.

For more information about the MAT Seminar Series, go to:

www.mat.ucsb.edu/595M.

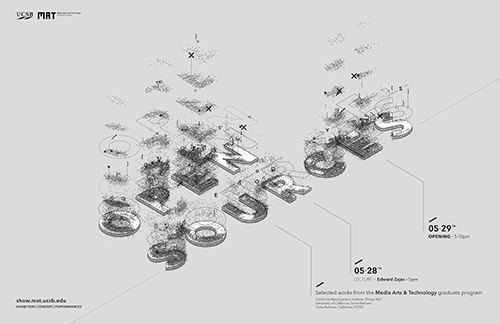

Friday, May 29th, 5-10pm

Lecture by Edward Zajec, Thursday, May 28th, 5pm

Elings Hall, University of California, Santa Barbara

"Open Sources" is the Media Arts and Technology program's End of Year Show at UC Santa Barbara. Showcasing graduate student work that connects emergent media, computer science, engineering, architecture, electronic music, and digital art research, "Open Sources" aims to represent the mission of MAT: to enable the creation of hybrid work that informs both scientific and aesthetic discourses.

The exhibition features installations, performances, and concerts by over 20 artists from the MAT community. A diverse selection of work spans themes such as human-robot interaction, generative sound and visual art, experimental music, computer vision, virtual and augmented reality, and many other transdisciplinary subjects.

We present ongoing cutting-edge research at MAT, including tours of the AlloSphere: a three-story, large-scale, immersive audio and visual instrument and laboratory.

"Open Sources" features source code from each of the works as a commented, critical edition, providing a window into the inner workings of the projects exhibited.

This year's curatorial approach disrupts the convention of displaying only the surface-level aesthetic of a work. In the early days of media art, knowledge of programming languages was sparse, limited to a small domain of trained engineers who turned to the arts, with only the most intrepid of artists venturing to learn computer code. Today, programming is an essential skill not only for many artists but also for scientists, designers, and educators. By showcasing source code, "Open Sources" celebrates widespread code literacy while exposing the structural fabric of a piece as an additional critical dimension.

A catalog documenting both the show and MAT's research at the cutting edge of art and technology will be available at the opening.

As a pre-opening event on Thursday evening, "Open Sources" is proud to present a lecture by Edward Zajec, Professor Emeritus of Computer Art, Syracuse University New York, titled "Spectral Modulator - The Problem of Articulating Duration with Light".

Website: show.mat.ucsb.edu

Speaker: Dr. Paul Hubel, Senior Image Scientist at Apple.

Time: Monday, May 11, 12 noon - 1:30pm.

Location: Engineering Science Building, room 2001.

Abstract:

The talk will give a brief overview of how computational imaging has evolved over the years and the impact it has had on photography. After the early years, in which sensors and interpolation algorithms improved to match and then surpass the resolution achievable with film, the development of white balance, color, and tone correction algorithms, together with new focus technology, panoramic imaging, high dynamic range, and image stabilization, has realized the promise and popularity of computational photography.

Bio:

Dr. Paul M. Hubel has been an Imaging Architect at Apple, Inc. since 2008, where he works on color and image processing for digital photography and camera systems. Among other projects, his color correction methods have been used for all iOS images since the iPhone3S. From 2002 to 2008, Dr. Hubel worked on imaging solutions for high-end DSLR camera systems at Foveon, Inc., and before that he worked for ten years as a Principal Project Scientist at Hewlett-Packard Laboratories on digital cameras, photofinishing, scanners, copiers, and printers. Prior to this, Dr. Hubel worked at the Rowland Institute for Science and then as a postdoc/lecturer at the MIT Media Laboratory. Dr. Hubel received his B.Sc. in Optics from the University of Rochester in 1986, and his D.Phil. in Engineering from Oxford University in 1990 for his dissertation on Color Reflection Holography. He has published many technical papers and book chapters and has authored over 50 patents.

www.apple.com/iphone/world-gallery

Speaker: Dr. Jim Spohrer, Director of IBM Global University Programs, and head of the IBM Cognitive Systems Institute.

Time: Monday, May 4, 12 noon.

Location: Engineering Science Building, room 1001.

Abstract:

Cognitive assistants are beginning to appear in more and more occupations, from doctors to chefs to biochemists, boosting the creativity and productivity of workers. Given this important trend, a better understanding of the role of cognitive assistants in the design of smart service systems will be needed. This talk will describe IBM's Watson and Cognitive Computing efforts, as well as broader industry and societal trends, such as how cognitive assistants might be applied in higher education to assist faculty, students, researchers, and administrative staff. The speaker will also mention NSF's program to fund translational research on smart service systems, including cognitive assistants for roles in those systems.

Bio:

Dr. Spohrer is Director of IBM Global University Programs and leads IBM’s Cognitive Systems Institute. The Cognitive Systems Institute works to align cognitive systems researchers in academia, government, and industry globally to improve the productivity and creativity of problem-solving professionals, transforming learning, discovery, and sustainable development. IBM University Programs works to align IBM and universities globally for innovation amplification and T-shaped skills. Jim co-founded IBM’s first Service Research group and the ISSIP Service Science community, and was founding CTO of IBM’s Venture Capital Relations Group in Silicon Valley. He was awarded Apple Computer’s Distinguished Engineer Scientist and Technologist title for his work on next-generation learning platforms. Jim has a Yale PhD in Computer Science/Artificial Intelligence and an MIT BS in Physics. His research priorities include service science and cognitive systems for smart, holistic service systems, especially universities and cities. With over ninety publications and nine patents, he is also a PICMET Fellow and a winner of the S-D Logic award.

Speaker: Dr. Angus Forbes, Assistant professor in the Department of Computer Science at University of Illinois at Chicago.

Time: Monday, April 27, 12 noon - 1:30pm.

Location: Engineering Science Building, room 2001.

Abstract:

In the first part of this talk, I showcase recent work from the Electronic Visualization Lab on visualizing complex scientific datasets: I present a variety of novel visualizations to represent causal relationships in biological pathways, and I introduce new techniques for visualizing and interacting with brain network data. Furthermore, I discuss intersections between artistic investigations, human-centered design strategies, and scientific methodologies, and explore how integrating these approaches can be an effective way to generate novel research. In the second part of the talk, I provide an overview and highlights of the IEEE VIS Arts Program, a forum that promotes dialogue about the relation of aesthetics and design to visualization and visual analytics.

Bio:

Dr. Forbes is an assistant professor in the Department of Computer Science at University of Illinois at Chicago, where he directs the Creative Coding Research Group within the Electronic Visualization Laboratory. His research investigates novel techniques for representing and interacting with complex scientific information, and has appeared recently in IEEE Transactions on Visualization and Computer Graphics, ACM Multimedia, IEEE BigData, IS&T/SPIE Electronic Imaging, and Leonardo. Dr. Forbes is chairing the IEEE VIS Arts Program (VISAP’15), to be held in Chicago in October 2015.

More information about his research and artworks can be found at:

For more information about the MAT Seminar Series, go to:

www.mat.ucsb.edu/595M.

Date: Wednesday, April 15, 2015.

Time: 7:30pm.

The concert will feature works by Ragnar Grippe, Fernando Rincon Estrada, Ron Sedgwick, Abstract Jak, and Michael Hetrick.

Speaker: Reza Ali.

Time: Monday, April 20, 12 noon - 1:30pm.

Location: Engineering Science Building, room 2001.

Abstract:

I’ll be reflecting on my recent work and talking about my time at MAT and how it was critical in my growth as an artist, designer, and engineer.

Bio:

Reza Ali is a hybrid unicorn who is obsessed with nature, computer graphics, and interfaces. He has worked with agencies, technology companies, musicians and other unicorns. Reza is currently researching 3D printing, computational geometry, and Cinder & C++11.

Speaker: Matthew Biederman.

Time: Monday, April 13, 12 noon - 1:30pm.

Location: Engineering Science Building, room 2001.

Abstract:

Performance, real-time, sensing, visualization, and media metamorphosis: but to what end? Perception vs. illusion, spectacle as concept, and optics vs. intent: Biederman will discuss these ideas through the lens of 'new' technologies and media arts, placing their trajectory within the larger context of the arts and society while examining a few of his recent works.

Bio:

Matthew Biederman has worked across media and milieus, architectures and systems, communities and continents since 1990. He creates works that use light, space, and sound to reflect on the intricacies of perception mediated through digital technologies, in installations, screen-based work, and performance. His work has been featured at the Lyon Biennale, Sonic Acts, the Istanbul Design Biennial, The Tokyo Museum of Photography, ELEKTRA, MUTEK, and CTM, among others.

Speaker: John Underkoffler, CEO and co-founder of Oblong Industries.

Time: Monday, April 6, 12 noon - 1:30pm.

Location: Engineering Science Building, room 2001.

Bio:

John Underkoffler is CEO and co-founder of Oblong Industries. Oblong’s trajectory builds on decades of foundational work at the MIT Media Laboratory, where John was responsible for innovations in real-time computer graphics systems, large-scale visualization techniques, and the I/O Bulb and Luminous Room systems. He has been science advisor to films including Minority Report, The Hulk (A. Lee), Aeon Flux, Stranger Than Fiction, and Iron Man. John also serves as adjunct professor in the USC School of Cinematic Arts, on the National Advisory Council of Cranbrook Academy, on the board of trustees of Sequoyah School, on the advisory board of the 5D Organization, and on the board of MIT’s E14 Fund.

www.ted.com/speakers/john_underkoffler

Speaker: Xavier Serra, Professor at Pompeu Fabra University, Barcelona, Spain.

Time: Friday, April 3, 3:00pm.

Location: Studio Xenakis, Music 2215.

Abstract:

Music is a universal phenomenon that manifests itself in every cultural context with a particular personality, and the technologies supporting music have to take into account the specificities that every musical culture might have. This is particularly evident in the field of Music Information Retrieval (MIR), in which we aim to develop technologies to analyse, describe and explore any type of music. From this perspective we started the project CompMusic, in which we focus on a number of MIR problems through the study of five music cultures: Hindustani (North India), Carnatic (South India), Turkish-makam (Turkey), Arab-Andalusian (Maghreb), and Beijing Opera (China). We work on the extraction of musically relevant features related to melody and rhythm from audio music recordings, and on the semantic analysis of the contextual information of those recordings.

In this talk I will give an overview of CompMusic and also briefly mention other research projects being carried out at the Music Technology Group of the Pompeu Fabra University in Barcelona that relate to the analysis, description and synthesis of sound and music signals.

Speaker: Eric Paulos - Assistant Professor, Electrical Engineering and Computer Science, Berkeley Center for New Media, University of California, Berkeley.

Time: Monday, March 9, 12 noon - 1:30pm.

Location: Engineering Science Building, room 2001.

Abstract:

This talk will present and critique a new body of evolving collaborative work at the intersection of art, computer science, and design research. It will present an argument for hybrid materials, methods, and artifacts as strategic tools for insight and innovation within computing culture. It will explore and demonstrate the value of urban computing, citizen science, and maker culture as opportunistic landscapes for intervention, micro-volunteerism, and a new expert amateur. Finally, it will present and question emerging materials and strategies from the perspective of engineering, design, and new media.

Bio:

Eric Paulos is the founder and director of the Tactical Hybrid Ecologies group, an Assistant Professor in Electrical Engineering and Computer Sciences at UC Berkeley, and a faculty member within the Berkeley Center for New Media. Previously, Eric held the Cooper-Siegel Associate Professor Chair at Carnegie Mellon University, where he was faculty within the Human-Computer Interaction Institute. He also founded the Urban Atmospheres group at Intel Research, where a body of early urban computing projects was developed. Eric’s work spans a broad range of research territory, including robotics, urban computing, citizen science, design research, critical making, and new media art. Eric received his PhD in Electrical Engineering and Computer Science from UC Berkeley, but his real apprenticeship was earned through over two decades of explosive, excruciatingly loud, and quasi-legal activities with a band of misfits at Survival Research Laboratories.

Speaker: Chris Randall - Founder of Audio Damage, Inc.

Time: Monday, March 2, 12 noon - 1:30pm

Location: Engineering Science Building, room 2001

Abstract:

In this presentation, Chris will talk about the art of making a musician-friendly user interface, with examples (both good and bad), and propose a general methodology for presenting abstract concepts such as cellular automata and neural networks in a musician-friendly and usable manner.

Bio:

Chris Randall is the co-owner of Audio Damage, Inc., a company that develops hardware and software for music-making. An entirely self-taught musician, programmer, and user interface designer, he has nearly 200 album credits as artist, producer, or both, and has designed over 75 commercial software and hardware products for Audio Damage, iZotope, Eventide, and Cycling ’74. He teaches electronic music and entrepreneurship in the music industry at Phoenix College, and writes the popular Analog Industries blog about music technology and the creative process.

Time: Friday, March 6, 12 noon - 1:00pm

Location: CNSI conference room, room 3001 (third floor), Elings Hall

Abstract:

Choreographic strategies from the domain of dance are applied to the development of complex kinetic sculptures through the use of closed-loop robotic systems. Methodologies and tools are created that enable this interdisciplinary approach. Evidence is provided that the choreographed sculpture enabled by this work may activate the human brain’s mirror neuron system substantially more than other forms of abstract art. The methodologies and tools allow the application of choreographic manipulations not only to moving sculptures, but also to ecosystems incorporating combinations of sculptural, digital and corporeal elements. Taxonomies for kinetic sculpture motion, for simulating gravity in kinetic sculpture, and for physical/digital kinetic interactions are developed, which additionally inform the motivation for the novel methodologies and tools. It is shown that this approach facilitates the exploration of low-level visual phenomena and of themes of non-duality and self-reflection. Precedents in art history are provided, as well as background in cognitive psychology and philosophy that informs these cognitive and self-reflective explorations. Effective facilitation is further demonstrated through a variety of case studies from the author’s practice. Finally, a detailed set of tools and methodologies is provided, including structural design, motion simulation, controls design and a graphical framework, which together may enable unencumbered creative exploration in a variety of related artistic endeavors.

Speakers: Professors Jeffrey Shaw and Sarah Kenderdine, School of Creative Media at City University Hong Kong.

Time: Monday, February 23, 12 noon - 1:30pm

Location: Engineering Science Building, room 1001

Abstract:

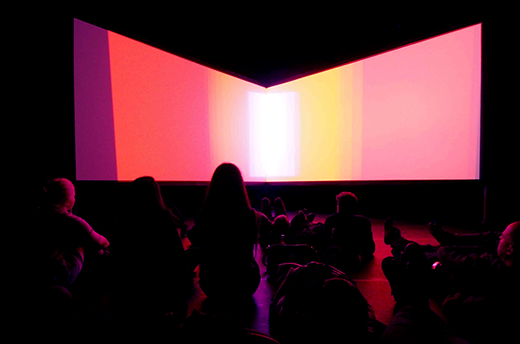

In his presentation Professor Shaw will draw on Vilém Flusser’s concepts of ‘apparatus, program and freedom’ to elucidate his technical, conceptual and aesthetic research in the fields of virtual and augmented reality, immersive visualization environments, and navigable cinematic and interactive narrative systems. At the heart of innovative techniques for virtual reality are corporeal experience and the evolution of a heterogeneous range of interactive relationships that come together to constitute co-active, co-creative and emergent modalities of viewer in-corporation in the data sphere. Embodiment explodes traditional narrative strategies and signals a shift from isolated individual experience to interpersonal theaters of exchange and social engagement. In addition, the opportunities offered by interactive and 3D technologies for enhanced cognitive exploration and interrogation of high-dimensional data are rich fields of experimentation.

Bios:

Professor Jeffrey Shaw (Hon.D.CM) has been a leading figure in new media art since the 1960s. In a prolific oeuvre of widely exhibited and critically acclaimed works he has pioneered and set benchmarks for the creative use of digital media (www.jeffrey-shaw.net). Shaw was the founding director of the ZKM Institute for Visual Media Karlsruhe (1991-2002), and in 2003 he was awarded an Australian Research Council Federation Fellowship to co-found and direct the UNSW iCinema Research Centre Sydney (www.icinema.unsw.edu.au). Since 2009 Shaw has been Chair Professor of Media Art and Dean of the School of Creative Media at City University Hong Kong (www.cityu.edu.hk/scm), as well as Director of the Applied Laboratory for Interactive Visualization and Embodiment (www.cityu.edu.hk/alive) and the Centre for Applied Computing and Interactive Media (http://www.acim.cityu.edu.hk/). Shaw is also Visiting Professor at the Institute for Global Health Innovation at Imperial College London and at the Central Academy of Fine Arts (CAFA) Beijing.

Professor Sarah Kenderdine researches at the forefront of interactive and immersive experiences for museums and galleries. In the last 10 years Kenderdine has produced over 60 exhibitions and installations for museums worldwide. In these installation works, she has amalgamated cultural heritage with new media art practice, especially in the realms of interactive cinema, augmented reality and embodied narrative.

Sarah concurrently holds the positions of Professor, National Institute for Experimental Arts (NIEA), University of New South Wales Art | Design (since 2013), and Special Projects, Museum Victoria, Australia (since 2003). She is Adjunct Professor and Director of Research at the Applied Laboratory for Interactive Visualization and Embodiment (ALiVE), City University of Hong Kong, and Adjunct Professor at RMIT.

Recent books include: PLACE-Hampi: Inhabiting the Panoramic Imaginary of Vijayanagara, Heidelberg: Kehrer Verlag, 2013 and Theorizing Digital Cultural Heritage: a critical discourse, Cambridge: MIT Press, 2007.

In 2014, she was awarded the Australian Council for Humanities and Social Sciences (CHASS) Prize for Distinctive Work for the Pure Land projects. In 2013, she received the International Council of Museums Award (Australia) and the Australian Arts in Asia Awards Innovation Award for the PLACE-Hampi Museum, India. Other honors include the Tartessos Prize 2013 for contributions to virtual archaeology worldwide and the Digital Heritage International Congress & IMéRA Foundation Fellowship (Aix-Marseille University), 2013.

niea.unsw.edu/people/professor-sarah-kenderdine

Speaker: Kenneth Fields - Associate Professor, Central Conservatory of Music, Beijing, China and Director of Syneme Network Music Performance Studio.

Time: Monday, February 9, 12 noon - 1:30pm

Location: Engineering Science Building, room 2001

Abstract:

In Radio as an Apparatus of Communication, Brecht wrote, “Thus there was a moment when technology was advanced enough to produce the radio and society was not yet advanced enough to accept it.” He lamented the lost creative opportunity of a perfectly capable bi-directional medium which gravitated instead toward broadcasting. Walter Benjamin formulated how radio and TV reflected the image of the economic system. It was not a limitation of technology, but a limitation of social capacitance that patterned the communicational potential toward the one-to-many model.

Thanks to the internet, we are now in more conducive times for the envisioned many-to-many, decentralized projection of presence (next-generation radio/TV). What started as the complex interlinking of hypertexts is now moving toward linked networks of live streams. This talk will explore the new technologies and practices that are emerging to support many-to-many, live performance scenarios.

Bio:

Kenneth Fields engaged in interdisciplinary studies across multiple departments (art, music and cognitive science), receiving a Doctorate in Media Arts from the University of California at Santa Barbara in 2000. Ken then moved to China in 2000 to participate in the development of nascent digital arts/music programs at China’s Central Conservatory of Music (Professor in the China Electronic Music Center, CEMC) and Peking University (School of Software, Department of Digital Art and Design). Concurrently, he lectured at China’s Academy of Fine Arts while presenting his work internationally. A major accomplishment of this period was leading a team project to translate the Computer Music Tutorial by Curtis Roads (published in 2011). From 2008 to 2013, Ken held a Tier I Canada Research Chair position in Telemedia Arts (University of Calgary), and he is now back full-time in Beijing at China’s Central Conservatory of Music, directing a new doctorate program and studio focused exclusively on network music performance.

Speaker: Julie Martin - Director of E.A.T. and Executive Producer of a Series of E.A.T. films on DVD.

Time: Monday, February 2nd, 12 noon - 1:30pm

Location: Engineering Science Building, room 2001

Abstract:

The talk will explore the history of E.A.T., from Billy Klüver’s first collaboration with Jean Tinguely on Homage to New York in 1960, through the projects and activities of Experiments in Art and Technology in the 1960s and ’70s, to the present, with emphasis on the idea of collaboration between artists, engineers, and scientists and on how this concept developed over the life of the organization.

Bio:

Born in Nashville, Tennessee, Julie Martin graduated from Radcliffe College with a BA in philosophy and received a master’s degree in Russian Studies from Columbia University. In 1966 she worked as production assistant to Robert Whitman on a series of his theater performances, culminating in the series 9 Evenings: Theatre & Engineering in October 1966. She joined the staff of Experiments in Art and Technology in 1967, and over the years worked closely with Billy Klüver on projects and activities of the organization. Currently she is director of E.A.T. and executive producer of a series of E.A.T. films on DVD that document each of the ten artists’ performances at 9 Evenings, and is editing a book on the art and technology writings of Billy Klüver.

She was co-editor with Billy Klüver and Barbara Rose of the book Pavilion, documenting the E.A.T. project to design, construct and program the Pepsi Pavilion for Expo ’70 in Osaka, Japan. She was co-author with Billy Klüver of the book, Kiki’s Paris, an illustrated social history of the artists’ community in Montparnasse from 1880 to 1930. She also collaborated with Klüver on numerous articles on art and technology during the 1980s and 1990s.

More recently she has worked as coordinating producer for performances by Robert Whitman including the video cell phone performance Local Report (2005); Passport (2010), two simultaneous theater performances that shared images transmitted over the Internet; and Local Report 2012, an international video cell phone performance and installation at Eyebeam in New York.

Speaker: Chris Chafe - Professor, Director of the Center for Computer Research in Music and Acoustics (CCRMA) at Stanford University.

Time: Monday, January 26, 12 noon - 1:30pm

Location: Engineering Science Building, room 2001

Abstract:

A dozen years of composing sonifications in collaboration with scientists, MDs, and engineers have produced a range of art and insights bearing on measured phenomena. Data sources have included Internet traffic, greenhouse gas levels, ripening tomatoes, DNA sequences from synthesized biological parts, sea level rise, fracking signals and brain waves. The outcomes span concert music, gallery sound art and applications for practical monitoring devices. I will present examples of both real-time and non-real-time approaches and some of the first considerations in attempting to translate extra-musical data into music and sound.

Bio:

Chris Chafe is a composer, improvisor, and cellist, developing much of his music alongside computer-based research. He is Director of Stanford University’s Center for Computer Research in Music and Acoustics (CCRMA). At IRCAM (Paris) and The Banff Centre (Alberta), he pursued methods for digital synthesis, music performance and real-time internet collaboration. CCRMA’s SoundWIRE project involves live concertizing with musicians the world over.

Online collaboration software, including JackTrip, and research into latency factors continue to evolve. An active performer either on the net or physically present, he has had his music reach audiences in dozens of countries and sometimes at novel venues. A simultaneous five-country concert was hosted at the United Nations in 2009. Chafe’s works are available from Centaur Records and various online media. His gallery and museum music installations are now in their second decade, with “musifications” resulting from collaborations with artists, scientists and MDs. Recent work includes the Brain Stethoscope project, PolarTide for the 2013 Venice Biennale, Tomato Quintet for the transLife:media Festival at the National Art Museum of China, and Sun Shot, played by the horns of large ships in the port of St. John’s, Newfoundland.

Speakers: Laleh Mehran, Associate Professor, Graduate Director of Emergent Digital Practices, University of Denver, and Chris Coleman, Associate Professor, Director of Emergent Digital Practices, University of Denver.

Time: Monday, January 12, 12 noon - 1:30pm

Location: Engineering Science Building, room 2001

Abstract:

The talk will be a combination of our individual work as well as our collaborations focused on the tangled landscapes of the physical and the digital.

Bios:

Laleh Mehran creates elaborate environments in digital and physical spaces. Focused on multifaceted intersections between politics, religion, and science, Mehran strives to call attention to the implicit connections between them, while raising the question of the viewer’s relation to each of these fundamental systems. Meditative rather than didactic, Mehran’s artworks are invitations to think again about each of these paradigms and the profound connections that bind them. As such, her work is of necessity as veiled as it is explicit, as personal as it is political, and as critical as it is tolerant.

Professor Mehran received her MFA from Carnegie Mellon University in Electronic Time-Based Media. Her work has been shown individually and as part of art collectives in international venues including the International Symposium on Electronic Art in the United Arab Emirates, the National Taiwan Museum of Fine Arts in Taiwan, FILE (Electronic Language International Festival) in Brazil, the European Media Arts Festival in Germany, the Massachusetts Museum of Contemporary Art, the Carnegie Museum of Art, The Georgia Museum of Art, The Andy Warhol Museum, and the Denver Art Museum.

Chris Coleman was born in West Virginia, USA and he received his MFA from SUNY Buffalo in New York. His work includes sculptures, videos, creative coding and interactive installations. Coleman has had his work in exhibitions and festivals in nearly 20 countries including Brazil, Argentina, Singapore, Finland, Sweden, Italy, Germany, France, China, the UK, Latvia, and across North America. His open source software has been downloaded more than 35,000 times by users in over 100 countries and is used globally in physical computing classrooms. He currently resides in Denver, CO and is an Associate Professor and the Director of Emergent Digital Practices at the University of Denver.
