Date: Thursday, December 7th

Time: 12pm

Location: Elings Hall room 1601

Abstract:

Tamuyal is a mobile, location-based game that provides K-12 students with an introduction to science, technology, engineering, and mathematics (STEM). The word Tamuyal is Yucatec Mayan for “in the cloud”, appealing to both indigenous Mayan cosmology and modern cloud computing. Since women and minorities continue to be largely underrepresented in STEM fields, research has addressed this problem from various angles. However, to our knowledge, no game has yet aimed to educate and motivate students to pursue higher education in STEM. Tamuyal addresses this gap by combining modern-day technology, culturally relevant art, and a recommender system to create an educational, gamified experience tailored to individual tastes, making visits more engaging. Through the use of Mesoamerican artwork and cultural representations, we create an experience intended to increase interest in and familiarity with STEM education and careers, particularly for students who are largely underrepresented in these fields.

Date: Wednesday, December 6th

Time: 9am

Location: MAT Conference room, 2003 Elings Hall

Abstract:

The Republic of India is regarded as the largest democracy in the world due to the sheer size of its population. At the same time, according to the Economist Intelligence Unit’s Democracy Index, as of 2016 India ranks 32nd and is categorized as a flawed democracy. Matdan is an interdisciplinary project that integrates information visualization methodologies with the social sciences to build a data visualization platform for educating the electorate in India. It aims to act as a catalyst in India’s transformation from a flawed democracy to a full democracy.

The project is currently being developed for the 2017 legislative elections in the state of Gujarat, in collaboration with TCPD (Trivedi Centre for Political Data), Ashoka University. The deliverable is an interactive data visualization built with the JavaScript D3 library. The application has three visualization segments, each derived from a different dataset. The first segment visualizes TCPD's historical Indian election dataset, a time series of parameters associated with every constituency in Gujarat. The second segment visualizes multivariate data profiling the candidates contesting the upcoming elections, extracted from the affidavit data collected by the Election Commission of India. The final segment visualizes aggregated socio-economic parameters drawn from the Open Government Data (OGD) platform of the Indian Government.

The project aims to visualize this data to educate the electorate about the backgrounds and historical performance records of the competing candidates and parties, enabling voters to make informed decisions.

Speaker: Andres Cabrera

Time: Monday, December 4, 2017, 1pm

Location: Elings Hall, room 1605

Distributed computing has been ubiquitous since the inception of computing. It can address processing and memory constraints, heterogeneity of platforms, peripheral accessibility and physical location constraints. From SETI@home to Bitcoin, it has entered the mainstream. However, it is still relatively unexplored and seldom used in Media Arts (with the exception of control interfaces and network performance), mainly due to its high technical barrier to entry. This talk will address the challenges and possibilities of distributed computation for the Multimedia Arts.

Bio

Andres Cabrera is Research Director of CREATE (Center for Research in Electronic Art Technology) and Media Systems Engineer with the AlloSphere Research Group at UCSB. He graduated as a classical guitarist from Universidad de los Andes in Bogotá, Colombia, and moved into computer music and eventually media arts through his participation in the Csound open-source community. His research focuses on spatial audio and interactive media systems and languages.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Time: Monday, November 27, 2017, 1pm

Location: Elings Hall, room 1601

MAT students Dennis Adderton, Hannen (Hannah) Wolfe and Cecilia Wu will present their research, scholarly and creative activities to fellow students and the UCSB Community.

Speaker: Magda El Zarki

Time: Monday, November 20, 2017, 1pm

Location: Elings Hall, room 1601

This talk will focus on three wide-ranging games that we have worked on extensively over the past 4 years: Elmina - A Slave Castle (history and cultural heritage), Sankofa - The Asante of West Africa (education, culture and storytelling), and MineBike - Minecraft on a Bike (exergames and rehabilitation). I will discuss the origin of each idea, the purpose, the process, the challenges, and the outcomes. All three games were developed under the guidance of UCI faculty, mostly with local student talent from UCI’s pool of game students and some assistance from students at neighboring art schools. For Sankofa we were fortunate enough to receive a small amount of seed funding that enabled us to hire some external concept artists, 3D animators and riggers.

Bio

Magda El Zarki currently holds the position of Professor in the Department of Computer Science at the University of California, Irvine, where she is involved in various research activities related to telecommunication networks and networked computer games. She is currently the Director of the Institute of Virtual Environments and Computer Games and was the co-creator of the Computer Game Science degree program, which she directed for its first 2 years. Prior to joining UC Irvine, she was an Associate Professor in the Department of Electrical Engineering at the University of Pennsylvania in Philadelphia, where she also held the position of Director of the Telecommunications Program. She was director of the Networked Systems Graduate Program at UC Irvine from 2005 to 2007. From 1992 to 1996 she held the position of Professor of Telecommunications at the Technical University of Delft, Delft, The Netherlands. She was the recipient of the Cor Wit Chair in Telecommunications at TU Delft from 2004 to 2006. El Zarki has served as an editor for several journals in the telecommunications area, and is still actively involved in many international conferences. She was on the board of governors of the IEEE Communications Society and was the vice chair of the IEEE Tech. Committee for Computer Communications. She is co-author of the textbook Mastering Networks – An Internet Lab Manual.

Date: Wednesday, November 15, 2017

Time: 7:30pm

Location: Lotte Lehmann Concert Hall

Sound Resistance presents works by music composition student Rodney DuPlessis, recent graduate Ori Barel, the CREATE Ensemble led by Karl Yerkes, and faculty members Yon Visell and Curtis Roads.

The featured work consists of two parts from FAUST (1962) by Else Marie Pade.

Else Marie Pade (1924–2016) was a Danish composer. In her youth, Pade was active in the anti-fascist resistance. She began by distributing illegal newspapers in 1943. In 1944 she received training in the use of weapons and explosives. She joined an all-female explosives group that, with resistance organiser Hedda Lundh, worked to identify the telephone cables in Aarhus. Their goal was to blow up the telephone network when the Allied invasion came, so that the Nazis would be left incommunicado. In 1944 she was arrested by the Gestapo and sent to the Frøslev prison camp. Through a prison window she saw a shooting star and heard music from within, and she began composing. The prisoners held song evenings to keep their spirits up. After the war she studied at the Royal Danish Academy of Music in Copenhagen. Later she participated in the famous Darmstadt Summer Courses with Pierre Schaeffer and Karlheinz Stockhausen. In 1954 she became the first Danish composer of electronic music.

Time: Monday, November 13, 2017, 1pm

Location: Elings Hall, room 1601

MAT students Joseph Tilbian, Mark Hirsch and Ehsan Sayyad will present their research, scholarly and creative activities to fellow students and the UCSB Community.

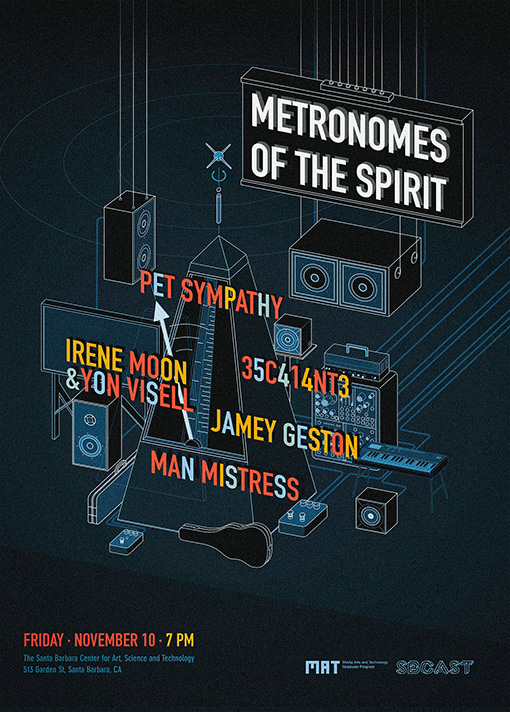

Date: Friday, November 10, 2017

Time: 7pm

Location: SBCAST, downtown Santa Barbara

Performing members of the MAT/UCSB community include Owen Campbell, Juan Manuel Escalante, Jamey Geston, Mark Hirsch, Sölen Kiratli, Josh Mueller, Payam Rowghanian, Katja Seltmann, and Yon Visell.

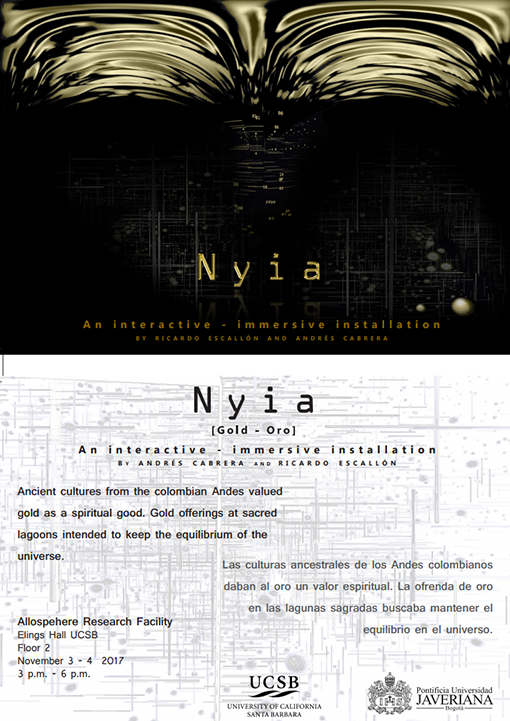

Dates: November 3 & 4, 2017

Times: 3pm to 6pm

Location: The AlloSphere, 2nd floor Elings Hall

"Hacía el indio dorado su ofrecimiento echando todo el oro que llevaba a los pies en el medio de la laguna, y los demás caciques que iban con él y le acompañaban, hacían lo propio."

"The golden Indian would make his offering by throwing all the gold at his feet into the middle of the lagoon, and the other chieftains who went with him and accompanied him did the same."

Juan Rodríguez Freyle (1566-1642)

Nyia is an interpretation of the material versus spiritual value of gold, based on the offerings made by native pre-Hispanic cultures in the sacred lagoons of the Colombian Andes. It is that same mythical gold that led Spanish conquistadores to follow the legend of El Dorado in search of fortune, bringing death and devastation. In contrast to a sonification (sound work), Nyia is an ‘imagication’: an experiential artwork whose creation began with sounds and music that were then combined with an entrancing visual narrative.

Speaker: Rebecca Allen

Time: Monday, November 6, 2017, 1pm

Location: Elings Hall, room 1601

Rebecca Allen will review selected works from her long history as an artist working with emerging technologies, from her early use of computer animation to her current work with virtual reality. Her early work, which predates the personal computer and consumer software, could only be done in research labs. This gave her the opportunity not only to create unique new forms of art, but also to play a role in inventing the technological tools used to create that art. The research lab has continued to spark and inspire artistic ideas throughout her career. Her most recent work, drawing on neuroscience, brain imaging and VR, explores areas of perception, philosophy and behavior.

Bio

Rebecca Allen is an internationally recognized artist inspired by the aesthetics of motion, the study of behavior and the potential of advanced technology. Her artwork, which takes the form of virtual and augmented reality art installations, experimental video and large-scale performances, spans over three decades and embraces the worlds of fine art, performing arts, pop culture and technology research. Her early interest in utilizing computers as a tool for artists led to her pioneering art and research in computer-generated human motion, artificial life and other procedural techniques for creating art. Allen’s work is exhibited internationally and is part of the permanent collections of the Centre Georges Pompidou in Paris and the Whitney Museum and Museum of Modern Art in New York. She has collaborated with artists such as Kraftwerk, Mark Mothersbaugh (Devo), John Paul Jones (Led Zeppelin), Peter Gabriel, Carter Burwell, Twyla Tharp, Joffrey Ballet, La Fura dels Baus and Nam June Paik. Rebecca moves fluidly between artist studio and research lab, using her research to inform her art. She was founding Chair of the UCLA Department of Design Media Arts and is currently a professor there. She was founding director of two Nokia Research labs and has led research and creative teams at UCLA, MIT Media Lab Europe, One Laptop per Child, NYIT Computer Graphics Lab and elsewhere.

Fukushima! Fukushima! is a conceptual virtual reality installation exploring the post-modern condition of human habitation and technological catastrophe. Based on the artist's memories and photographs of the 10 km evacuation zone around the Fukushima Daiichi Nuclear Power Plant, along with the voices and testimonies of local residents, an immersive virtual world is created to connect one's personal experience with the volatile apparition of collective trauma. A non-linear storytelling technique is employed so that the audience can freely walk, observe, interact with and habituate themselves to this marginalized and forgotten land in a corner of Japan. The lines between human subject, bystander, inhabitant, and technological refugee are thereby blurred, broken, and re-examined.

SBCAST is located at 513 Garden St, in downtown Santa Barbara.

Speakers: Scott Ross and Brett Leonard

Time: Monday, October 30, 2017, 1pm

Location: Elings Hall, room 1601

Scott Ross and Brett Leonard are pioneers in the entertainment industry, now partnering on a new endeavor in virtual storytelling. Their company Virtuosity has assembled the best and brightest in the fields of science, technology, psychology, medicine, storytelling, programming, coding, creativity, art direction and executive management.

Scott Ross Bio

Dr. Ross is one of the most notable pioneers in digital media, technology and entertainment. Along with James Cameron, he founded Digital Domain, one of the largest digital production studios in the motion picture and advertising industries.

Under Ross’ direction, Digital Domain garnered multiple Academy Award nominations, receiving its first Oscar for the groundbreaking visual effects in TITANIC. A second Oscar for WHAT DREAMS MAY COME and a third for THE CURIOUS CASE OF BENJAMIN BUTTON followed that success. Under his watch, Digital Domain also developed the compositing software NUKE, which remains an industry standard today. Prior to forming Digital Domain, he led George Lucas’ vast entertainment empire, running ILM, Skywalker Sound, LucasFilm Commercial Productions and DroidWorks.

Ross has played a significant role in the worldwide advertising industry as well. Having started commercial production companies whilst at LucasFilm (ILM and LCP) as well as Digital Domain’s Commercials Division, he has led two of the largest VFX commercial production companies on the planet.

Brett Leonard Bio

Brett Leonard is an award-winning filmmaker and futurist with over 25 years of Hollywood experience as a pioneer in both the development and use of new media technologies. He has been widely recognized as a top thought leader in virtual reality since introducing the concept and term to popular culture in his seminal hit film Lawnmower Man. Many pioneers and tech leaders of the emerging VR industry count Lawnmower Man as one of their key inspirations for creating the actual “reality” of this world-changing medium.

Brett is a sought-after speaker for the rapidly growing VR/AR industry, giving keynotes at events around the globe as a leading expert on defining interactive storytelling, and on providing an ethical framework for these powerful new mediums.

Brett’s core philosophy, borne out in all of his work, is: “To empower people to create and experience compelling story, character, and emotion in any new medium, no matter what the technology being used to enable it”.

Speaker: Michael Gurevich

Time: Monday, October 16, 2017, 1pm

Location: Elings Hall, room 1601

This talk situates the practice of designing digital musical instruments with respect to models of musical-social interaction. I argue that the conventional composer-performer-listener model, and the underlying metaphor of music-as-communication upon which it relies, cannot reflect the richness of interaction and possibility afforded by digital technologies. Building on Paul Lansky’s vision of an expanded and dynamic social network, I present an alternative, ecological view of music-making, in which the opportunities for creation, design, and the production of meaning emerge from the inherent uncertainty in the interfaces that mediate musical-social interactions. However, the increased potential afforded by digital systems is undermined by our tendency to treat digital musical instrument design as a form of invention, wherein the various roles in this network are collapsed into a single individual. Using examples from my own practice, I describe approaches to designing instruments that respond to the technologies that form the interfaces of the network, which can include scores and stylistic conventions.

Bio

Michael Gurevich is Associate Professor and Chair of Performing Arts Technology at the University of Michigan School of Music, Theatre & Dance, where he teaches courses in physical computing, electronic music performance, and interdisciplinary collaboration. His research employs quantitative, qualitative, humanistic, and practice-based methods to explore the aesthetic and interactional possibilities that can emerge in music performance with computer systems. Prior to the University of Michigan, Professor Gurevich was a Lecturer at the Sonic Arts Research Centre (SARC) at Queen’s University Belfast, and a research scientist at the Institute for Infocomm Research in Singapore. He holds a B.M. from McGill University and an M.A. and Ph.D. from the Center for Computer Research in Music and Acoustics (CCRMA) at Stanford. He is an active author and editor in the New Interfaces for Musical Expression (NIME), computer music, and human-computer interaction communities, was Music Chair for the 2012 NIME conference in Ann Arbor, and is a Vice-President of the International Computer Music Association.

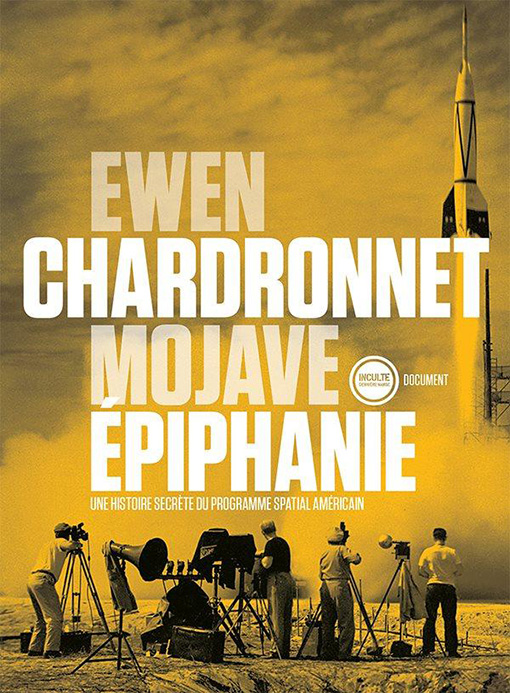

Speaker: Ewen Chardronnet

Time: Monday, October 2, 2017, 1pm

Location: Elings Hall, room 1601

Ewen wrote his latest book, "Mojave Epiphanie", during his residency, which was also partially supported by the UC Institute for Research in the Arts. Ewen has been an instrumental figure in European media arts production, distribution and reflection over the past 15 years.

Bio

Ewen Chardronnet is an author, journalist, curator and artist from France. Since the early 1990s he has participated in many artistic endeavors (music, performances, films, fanzines, installations, residencies, production, group exhibitions), written as an essayist in numerous publications and participated in numerous conferences. He has served on various boards and committees in the field of art and technology, and has worked as a consultant. With the collective Bureau d'études he co-founded the journal The Laboratory Planet, and he writes regularly on art & science and maker & hacker cultures in Makery.info magazine. Active in the field of space culture since 1995, in 2016 he released a non-fiction narrative book on the lives of the founders of the Jet Propulsion Laboratory between 1935 and 1955 (Mojave Epiphanie, Inculte, March 2016) with the support of UCIRA. Ewen Chardronnet will introduce his new project for an art & science residency program at the Roscoff Marine Station in Brittany, France, as well as the recently co-founded Aliens in Green artist group, a tactical theater project in which the "Aliens in Green" are agents from a planet-turned-laboratory who allow earthlings to identify the numerous collisions between capitalist and xenopolitical forces.

Date: Thursday, September 21st

Time: 3pm

Location: MAT Conference room, 2003 Elings Hall

Abstract:

To foster adoption of sonification across disciplines and to increase experimentation with sonification by non-specialists, a software prototype is proposed that distills many sonification tasks into a single software application. The application will be operable from a graphical user interface (GUI) inspired by multimedia programs such as audio editors and word processors. Further, the system will associate methods of sonification with the data they sonify in session files, which will make sharing and reproducing sonifications easier.

It is posited that facilitating experimentation by non-specialists will increase the potential growth of sonification into fresh territory, encourage discussion of sonification techniques and uses, and create a larger pool of ideas to draw from in advancing the field of sonification.
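The idea of storing a sonification method together with the data it sonifies can be illustrated with a small sketch. It assumes a simple linear parameter mapping (data value to pitch in Hz) and a JSON session format; the names `Mapping`, `Session`, `pitches`, `low_hz` and `high_hz` are hypothetical and do not describe the proposed prototype's actual file format.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class Mapping:
    """Hypothetical linear parameter mapping: data range -> pitch range in Hz."""
    method: str = "linear_pitch"
    low_hz: float = 220.0
    high_hz: float = 880.0


@dataclass
class Session:
    """Hypothetical session record bundling the data with how it is sonified."""
    data: list = field(default_factory=list)
    mapping: Mapping = field(default_factory=Mapping)

    def pitches(self):
        """Map each data value linearly onto [low_hz, high_hz]."""
        if not self.data:
            raise ValueError("session has no data to sonify")
        lo, hi = min(self.data), max(self.data)
        span = (hi - lo) or 1.0  # constant data: avoid division by zero
        m = self.mapping
        return [m.low_hz + (v - lo) / span * (m.high_hz - m.low_hz) for v in self.data]

    def to_json(self):
        """Serialize data and mapping together so the sonification is reproducible."""
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(text):
        raw = json.loads(text)
        return Session(data=raw["data"], mapping=Mapping(**raw["mapping"]))


if __name__ == "__main__":
    session = Session(data=[0, 5, 10])
    print(session.pitches())
    print(session.to_json())
```

Because the mapping travels with the data in one file, anyone who opens the session can regenerate the same pitches without re-specifying the method.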

Date: Friday, September 15th

Time: 10am

Location: MAT Conference room, 2003 Elings Hall

Abstract:

This project explores the unique character of interactive storytelling using filmed footage in mixed reality, combining virtual reality (VR) and augmented reality (AR) elements. The integration of computer-rendered artifacts with real-world video sequences, omnidirectional narratives, and methods of interactivity are at the center of the discussion. It demonstrates a scenario in which fictional narratives with interaction possibilities are presented to the audience based on real scenes. The project is built in Unity and runs on the HTC Vive for the VR experience. It uses footage shot with the Nokia OZO stereo surround-view capturing system.

Effective interactive storytelling benefits from a creative drama manager that builds the basic narrative system, a thoughtful agent model that weaves in natural logic in order to achieve the dramatic goals intelligently, and a receptive user model that senses audience choices and inputs. The rise of VR/AR brings new possibilities to the drama manager and, more importantly, a whole new system for the user model. Mixed reality takes interactivity to a higher level, where the user gains more control over the direction and pacing of the narrative. It fosters a more immersive experience, especially as display and haptic technologies develop further. However, challenges remain in keeping computer rendering coherent with reality, particularly under the real-time requirement.

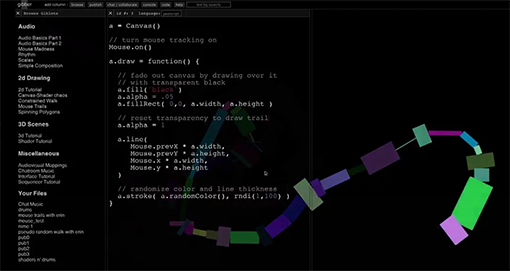

Thursday, August 3, 7pm

SBCAST

513 Garden St.

Santa Barbara

Charlie Roberts will produce some groovy sounds in a live coding performance using "Gibberwocky", a suite of live coding software co-authored by Charlie and Graham Wakefield. Also, Graham and Haru Ji will present their exhibit "Portrait", an audiovisual animation and real-time generative simulation.

sbcast.org/events/event/1st-thursday-2

Gibber

Portrait

Date: Tuesday, July 11th, 2017

Time: 2pm

Location: Engineering Science Building, room 2001

Abstract:

The intricate, beautiful patterns of fluid dynamics have captured the imaginations of artists and scientists for centuries. Although some composers have written atmospheric music steeped in the mood of fluid flow, the systematic generation of sound from fluid dynamics is a newer, less explored area of research. A recurring problem, however, is computational cost: high-quality simulations of fluid dynamics are time- and memory-consuming.

This dissertation comprises two distinct projects. The first is a novel data compression algorithm that alleviates the memory burden of subspace fluid simulations. Using a transform-based lossy compression scheme along with a sparse frequency-domain reconstruction, we achieve an order-of-magnitude compression without any visible artifacts. Compression on this scale allows users to feasibly run complex scenes on a standard laptop.

The second is a framework for generating correlated fluid motions and audio spectra. Aesthetically, the goal is to construct a unified process to connect the sounds and visuals while retaining artistic and musical expression. Our technique is inspired by the shapes and sounds formed by the modes of vibration of Chladni plates. By computing the spectrum of the characteristic velocity fields of a fluid, we form an analogous map between fluid flow and mixtures of audible frequencies. As such, the framework unites the spectral content of both the visuals and the sounds. We then explore and categorize several different techniques of modulating this spectrum over time in a series of short audiovisual studies.
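The transform-based lossy compression described for the first project can be illustrated, in a much simplified one-dimensional form, by transforming a signal with a discrete cosine transform (DCT), keeping only its largest coefficients in a sparse index-to-value map, and reconstructing from that sparse frequency-domain representation. The choice of the DCT, the `keep` parameter and the function names are assumptions for illustration; this is not the dissertation's actual scheme for subspace fluid simulations.

```python
import math


def _scale(k, n):
    """Orthonormal DCT scaling factor for coefficient k of an n-point transform."""
    return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)


def dct_ii(x):
    """Orthonormal DCT-II of a 1-D sequence (naive O(N^2), for illustration)."""
    n = len(x)
    return [
        _scale(k, n) * sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        for k in range(n)
    ]


def idct_ii(coeffs):
    """Inverse of the orthonormal DCT-II (an orthonormal DCT-III)."""
    n = len(coeffs)
    return [
        sum(_scale(k, n) * coeffs[k] * math.cos(math.pi * (i + 0.5) * k / n) for k in range(n))
        for i in range(n)
    ]


def compress(x, keep):
    """Lossy step: transform, then store only the `keep` largest-magnitude
    coefficients as a sparse {index: value} dictionary."""
    coeffs = dct_ii(x)
    ranked = sorted(range(len(coeffs)), key=lambda k: abs(coeffs[k]), reverse=True)
    return len(x), {k: coeffs[k] for k in ranked[:keep]}


def decompress(n, sparse):
    """Sparse frequency-domain reconstruction: discarded coefficients are zero."""
    dense = [sparse.get(k, 0.0) for k in range(n)]
    return idct_ii(dense)


if __name__ == "__main__":
    signal = [math.sin(2 * math.pi * i / 64) + 0.5 for i in range(64)]
    n, sparse = compress(signal, keep=8)
    approx = decompress(n, sparse)
    print("stored coefficients:", len(sparse), "of", n)
    print("max error:", max(abs(a - b) for a, b in zip(signal, approx)))
```

Because the transform is orthonormal, the squared reconstruction error equals the energy of the discarded coefficients, so raising `keep` trades storage for fidelity.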

Date: Friday, June 16th, 2017

Time: 2pm

Location: transLAB (Elings Hall 2615)

Abstract:

In 1958 the 20th-century architect and composer Iannis Xenakis transformed the lines of glissandi in the graphic notation of Metastaseis into the ruled surfaces of the nine concrete hyperbolic paraboloids of the Philips Pavilion. Over the next 20 years Xenakis developed the Polytopes, multimodal sites composed of sound and light, and in 1978 his Diatope bookended these spectacles and once again transformed the architectural and musical modalities using a general morphology. These poetic compositions were simultaneously architecture and music, forming the best examples of what Marcos Novak would later call archimusic. Contemporary works have continued to experiment with this interdisciplinary domain, but, though creative, their outcomes have not moved this transformational conversation forward.

This dissertation proposes to examine how to advance this transformational field of archimusic by introducing (A) an evaluative method to analyze prior trans-disciplinary works of archimusic, and (B) a generative model that integrates new digital modalities into the transformational compositional process based on the evaluative method findings. Together these two developments are introduced as archimusical synthesis and aim to contribute a novel way of thinking and making within this dynamic spatial and temporal territory. The dissertation research also proposes to organize and categorize the field of archimusic as an end in itself, presenting the trans-disciplinary territory as a studied and understood discipline framed for continued exploration.

Speaker: John Thompson

Time: Monday, May 22, 1pm

Location: Elings Hall, room 3001

This talk will focus on my work in a specific subset of visual music: electroacoustic audio-visual music. I will discuss three of my compositions, "Stream Stone Surface", "Accretion Flows" and "Electrotactile Maps". These compositions exhibit a tight coupling of sonic and visual components. The intended result is an audio-visual alloy, stronger than its individual parts. Each composition employs a different strategy to combine the sonic and visual materials. I will discuss these strategies and speak to the results in practice.

John Thompson is a composer of electroacoustic music and electroacoustic audio-visual music. His recent audio-visual works feature a tight coupling of sonic and visual components. He is an advocate for music that explores otherness, contemplation and alternate paths toward beauty. In 2014 he co-founded the annual Root Signals Festival of Electronic Music and Media Art, which he co-directs. In 2009, he began the Channel Noise concert series, which features an international selection of electroacoustic works.

John directs the Music Technology Program at Georgia Southern University, where he is Professor of Music. He teaches courses ranging from audio programming to interactive media. He is currently an At-Large Director for the International Computer Music Association. He received his PhD from the University of California, Santa Barbara, where he studied music composition and media arts with JoAnn Kuchera-Morin, Curtis Roads, Stephen Travis Pope, William Kraft, and Marcos Novak. As a National Science Foundation Postdoctoral Scholar, he investigated interactive systems in the California NanoSystems Institute’s AlloSphere, a large space for immersive and interactive data exploration.

Date: Friday, June 2nd

Time: 1pm

Location: Engineering Science Building, room 2001

Melange, image by Kurt Kaminski.

Abstract:

The convergence of GPUs and spatial sensors fosters the exploration of novel interactive experiences. Next generation audiovisual synthesis instruments benefit greatly from such technologies because their components require significant computing resources and robust input methods. One technique that shares these requirements is physical simulation. The expressive potential of real-time physical simulation is rarely used in the domain of visual performance.

I present Melange, an audiovisual instrument that maps gestural input to a highly evocative real-time fluid dynamics model for synthesizing image and sound. Using general-purpose GPU computing and a structured light depth sensor, I explore different visual and sonic transformations of fluid flow as an interactive computational substance.

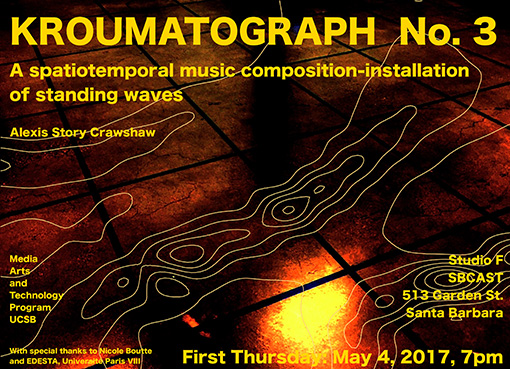

Thursday, May 4, 7pm

Studio F

SBCAST

513 Garden St.

Santa Barbara

Speaker: JoAnn Kuchera-Morin, Professor, Media Arts and Technology Graduate Program and Department of Music

Time: Thursday, May 4, 2-3pm

Location: Elings Hall, room 1601

RSVP at: https://forms-csep.cnsi.ucsb.edu/forms/PDS/Registration.php.

This talk is hosted by the UC Santa Barbara Center for BioEngineering.

Speaker: Vladimir Todorovic

Time: Monday, May 1, 1pm

Location: Elings Hall, room 1605

This talk is an overview of my extensive artistic practice from the time I graduated from UCSB in 2004 until today. I will reflect on my experiences and the creative methods used in my collaborative projects in a variety of media, encompassing generative art, procedural animation, game art, rapid prototyping, data visualization, code art, new media art installation and performance, as well as art-house cinema projects. The subjects and themes that prevail in my artistic practice vary widely. They include environmental visions, class and cultural differences, migrations, intangible heritage, memories, landscapes, nature, and sustainability. I define these projects as sensory and thought-provoking time-based narrative architectures, in which narrative potential has to be triggered and released by the active participation of the viewer. In designing narrative architectures, I also find the process of bricolage, as defined by Claude Lévi-Strauss, essential to understanding my practice. Working with available materials, and accumulating and tinkering with accessible technological means, has resulted in a body of work that I attempt to understand. What is certain is that these art projects are completed out of the necessity to create and to be in dialogue with the participants of my narrative architectures.

Vladimir Todorovic is a filmmaker, multimedia artist and educator based in Singapore. He is an associate professor at the School of Art, Design and Media, NTU. His projects have won several awards and have been shown at hundreds of festivals, exhibitions, museums and galleries, including Visions du Reel, Cinema du Reel, IFFR (42nd, 40th and 39th), Festival du Nouveau Cinema, BIFF, SGIFF, L’Alternativa, YIDFF, Siggraph, ISEA (2016, 2010, 2008, 2006), Ars Electronica, Transmediale, Centre Pompidou, House of World Cultures, The Reina Sofia Museum and Japan Media Art Festival.

Date: Wednesday, April 26, 2017

Time: 1:30 - 2:50pm

Location: College of Creative Studies, Bldg 494, Room 143

Abstract:

In my research, two prototype systems were developed to explore programming music using mobile touchscreen interactions and technologies. The first of these, miniAudicle for iPad, is an environment for programming ChucK code on an iPad. The second prototype developed is a sound design and music composition system utilizing touch and hand-written stylus input. In this system, called “Auraglyph,” users draw a variety of audio synthesis structures, such as oscillators and filters, in an open canvas. Ultimately, we believe this research shows that the critical parameters for developing sophisticated software for new interaction technologies are consideration of the technology's inherent affordances and mindful attention to design.

Bio:

Spencer Salazar is a computer musician and researcher currently serving as Special Faculty at the California Institute of the Arts School of Music. His research and teaching are focused on computer-based music expression, composition, and experience, with specific attention to mobile software development, systems for music programming, and computer-based music ensembles. Previously he pursued his doctoral studies at Stanford CCRMA. He has also created new software and hardware interfaces for the ChucK audio programming language, prototyped consumer electronics for Microsoft, and architected large-scale social music interactions for Smule, an iPhone application developer. He received a Bachelor of Science and Engineering in Computer Science from Princeton University in 2006 and will receive a Doctor of Philosophy degree (Ph.D.) in Computer-based Music Theory and Acoustics from Stanford University in 2017.

This guest lecture is part of the "MUSC CS 105 Embodied Sonic Meditation" course, offered by CCS in spring 2017.

Speaker: Adriene Jenik

Time: Monday, April 3rd, 1pm

Location: Elings Hall, room 1605

Artist and desert dweller Adriene Jenik will discuss the recent transition in her artistic practice following 30 years of pioneering experiments with narrative media and computing projects. Reflecting on the importance of place in telematic embodiment, new frameworks for understanding "audience", and beginning again, the talk will be of interest to those who have been, or are currently, at a crossroads in their life and art. Newly completed and in-progress projects will be presented.

Adriene Jenik is an artist, educator and arts leader who resides in the southwestern United States. Her computer and media art spans several decades including pioneering work in interactive cinema and live telematic performance. Jenik's artistic projects straddle and trouble the borders between art and popular culture. She was an early member of the Paper Tiger Television collective (1985-91) and a founding member of the Deep Dish TV Alternative Satellite network. Her video productions include the video short, "What's the Difference Between a Yam & a Sweet Potato?" (with J. Evan Dunlap), and the award-winning live satellite TV broadcast, "EL NAFTAZTECA: Cyber-Aztec TV for 2000 A.D." (with Guillermo Gómez-Peña and Roberto Sifuentes). "MAUVE DESERT: A CD-ROM Translation" is Jenik's internationally acclaimed interactive road movie based on the novel Le Désert Mauve by French Canadian author Nicole Brossard. Her creative research project, DESKTOP THEATER (1997-2002), was a series of live theatrical interventions and activities in public visual chat rooms developed with multi-media maven Lisa Brenneis. She recently completed a new experimental narrative "SPECFLIC 1.9" (60:00, 2013) at the Scottsdale Museum of Contemporary Art. Her current research work in “data humanization” is in development.

Jenik received her BA in English from Douglass College, Rutgers University, and her MFA in Electronic Arts from Rensselaer Polytechnic Institute. She has taught a broad range of electronic media classes at California Institute of the Arts (CalArts), UC Irvine, the University of Southern California (USC), UCLA's New Media Lab, and UC San Diego, where she was a full-time research faculty member in the Visual Arts Department for 11 years. A founding professor of the Interdisciplinary Computer Arts Major at UCSD and the Digital Culture program at ASU, Jenik has taught electronic and digital media to generations of students. From 2009 to 2016 she served as the Katherine K. Herberger endowed chair of Fine Arts and Director of the School of Art at Arizona State University, one of five schools that make up the Herberger Institute for Design and the Arts, and she is currently a professor of Intermedia at ASU.

Speaker: Oliver Gaycken

Time: Monday, March 13, 1pm

Location: Engineering Building, room 2001

World War II saw a massive surge in the use of cinema by the US military, ranging from propaganda films to gun-camera and combat footage to educational and test films. While the first two categories are well known (e.g. Frank Capra’s Why We Fight, the combat documentation of the Signal Corps companies, and William Wyler’s documentaries Memphis Belle and Thunderbolt), the extensive use of film in military education and testing remains largely unknown. This paper will examine and contextualize the films made by the Army Air Force’s Aviation Psychology Program during World War II. A key figure in this program was the perceptual psychologist J. J. Gibson, whose use of film in the evaluation and training of pilot candidates drew on insights from his prior research on perception for the automobile industry, where he established the concept of "optical flow". Gibson’s model of perception, which would culminate in his later theory of "ecological perception", provided a more dynamic understanding of how perceptual cues interacted with spatial movement. Film’s ability to simulate aspects of movement made it a crucial component of his wartime endeavors. Indeed, identifying and training potential pilots and gunners required the use of film to replicate novel perceptual experiences: film became a test medium that simulated situations of demanding visual performance.

As Gibson noted, his results were “to some extent relevant not only to aviation but also to general education and to the theory of visual learning.” Beyond providing a notable example of cinema’s participation in what Avital Ronell has termed modernity’s “test drive,” Gibson’s WWII test films provide an extraordinarily fine-grained account of the development of nontheatrical media. The Aviation Psychology Program explicitly built on prior research into the cinema’s efficacy as an educational tool, particularly the study by the University of Chicago’s Frank N. Freeman, Visual Education: A Comparative Study of Motion Pictures and Other Forms of Instruction (1924). But it also introduced new insights into how cinema’s ability to mimic human perception could be fine-tuned, including a particular emphasis on the "showing of situations from a subjective point of view".

Oliver Gaycken received his BA in English from Princeton University and his Ph.D. from the University of Chicago. He has previously taught at York University (Toronto) and Temple University. His teaching interests include silent-era cinema history, the history of popular science, and the links between scientific and experimental cinema. He has published on the discovery of the ophthalmoscope, the flourishing of the popular science film in France at the turn of the 1910s, the figure of the supercriminal in Louis Feuillade's serial films, and the surrealist fascination with popular scientific images. His book Devices of Curiosity: Early Cinema and Popular Science appeared with Oxford University Press in the spring of 2015.

Date: Friday, March 17, 2017

Time: 5pm

Location: MAT Conference Room (Elings Hall 1605)

Abstract:

The process of segmenting a sound signal into small grains (less than 100 ms) and reassembling them in a new time order is known as granulation. The grains’ spatial characteristics can be articulated through various techniques, allowing one to choreograph the position and movement of individual grains. However, this spatial information is generally generated arbitrarily during the synthesis stage.
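A minimal Python sketch of the basic granulation process described above. It assumes a mono signal stored as a list of floats, fixed 50 ms grains with 50% overlap and a Hann window, and a seeded random shuffle standing in for the "new time order"; these choices are illustrative and are not taken from the dissertation.

```python
import math
import random


def granulate(signal, sample_rate, grain_ms=50.0, seed=0):
    """Cut `signal` into short windowed grains, shuffle them into a new
    time order, and overlap-add them back together."""
    if not 0.0 < grain_ms < 100.0:
        raise ValueError("grains are conventionally shorter than 100 ms")
    grain_len = int(sample_rate * grain_ms / 1000.0)
    hop = grain_len // 2  # 50% overlap between neighbouring grains
    # Periodic Hann window: overlapping copies at hop = grain_len / 2 sum to 1,
    # and the tapered edges avoid clicks at grain boundaries.
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * n / grain_len)
              for n in range(grain_len)]

    # Analysis: extract windowed grains at a fixed hop size.
    grains = []
    for start in range(0, len(signal) - grain_len + 1, hop):
        grains.append([signal[start + n] * window[n] for n in range(grain_len)])
    if not grains:
        return []

    # Reordering: a seeded random permutation stands in for any new time order.
    random.Random(seed).shuffle(grains)

    # Synthesis: overlap-add the reordered grains at the same hop size.
    out = [0.0] * (hop * (len(grains) - 1) + grain_len)
    for i, grain in enumerate(grains):
        offset = i * hop
        for n, value in enumerate(grain):
            out[offset + n] += value
    return out
```

Note that every grain here carries no spatial information of its own; that is exactly the gap the abstract points to.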

This dissertation introduces a novel theory and technique called Spatiotemporal Granulation. Through the use of spatially encoded signals, the proposed technique segments spatial and temporal information, producing grains that are localized in both space and time.

Ambisonics is a technology that represents full-sphere spatial sound through the use of spherical harmonics. Using the encoded spatial information as part of the analysis-synthesis process enables grains to be extracted by spatial position in addition to temporal position. Examples of potential effects and transformations include manipulation of spatial characteristics, spatial cross-synthesis, and spatial gating. Angkasa, a software tool for performing Spatiotemporal Granulation, will be presented as a proof of concept in the AlloSphere.
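The sketch below illustrates, under stated assumptions, how encoded spatial information can be used to select grains by position. It encodes mono signals into first-order Ambisonics (assuming AmbiX conventions: ACN channel order W, Y, Z, X with SN3D normalization), estimates each grain's direction from its time-averaged intensity vector (a DirAC-style analysis swapped in for illustration), and keeps only grains near a target azimuth, a simple form of spatial gating. The function names are hypothetical, and this is not Angkasa's implementation.

```python
import math


def encode_foa(signal, azimuth, elevation):
    """Encode a mono signal as first-order Ambisonics channels [W, Y, Z, X]
    (assumed ACN order, SN3D normalization)."""
    ce, se = math.cos(elevation), math.sin(elevation)
    gains = (1.0, math.sin(azimuth) * ce, se, math.cos(azimuth) * ce)
    return [[g * s for s in signal] for g in gains]


def grain_direction(w, y, z, x):
    """Estimate a grain's (azimuth, elevation) from its time-averaged
    intensity vector, i.e. the correlation of W with X, Y and Z."""
    ix = sum(a * b for a, b in zip(w, x))
    iy = sum(a * b for a, b in zip(w, y))
    iz = sum(a * b for a, b in zip(w, z))
    return math.atan2(iy, ix), math.atan2(iz, math.hypot(ix, iy))


def spatial_gate(bformat, grain_len, target_az, tolerance):
    """Return (start, azimuth) for each non-overlapping grain whose
    estimated azimuth lies within `tolerance` radians of `target_az`."""
    w, y, z, x = bformat
    kept = []
    for start in range(0, len(w) - grain_len + 1, grain_len):
        sl = slice(start, start + grain_len)
        az, _ = grain_direction(w[sl], y[sl], z[sl], x[sl])
        # Wrap the angular difference into [-pi, pi) before comparing.
        diff = (az - target_az + math.pi) % (2.0 * math.pi) - math.pi
        if abs(diff) <= tolerance:
            kept.append((start, az))
    return kept
```

For example, a B-format stream in which a source sits to the left (azimuth pi/2) for the first second and in front for the next would, with a narrow tolerance around pi/2, return only the grains from the first second.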

Speaker: Ricardo Dominguez

Time: Monday, March 6, 1pm

Location: Engineering Building, room 2001

What is the relationship between data bodies and real bodies? Electronic Disturbance Theater co-founder Ricardo Dominguez cites this as a fundamental question for the group at the time of its founding in 1997, and even though this was twenty years ago, it still strikes us as pressing and unresolved. It is fundamentally a question of presence, a question of the relationship between the real and the virtual. This talk will trace out a cognitive map of the question based on a number of key gestures and theories that began in the early 1980s and still shape the performative matrix of his collaborative projects today.

Ricardo Dominguez is a co-founder of The Electronic Disturbance Theater (EDT), a group that developed virtual sit-in technologies in 1998 in solidarity with the Zapatista communities in Chiapas, Mexico. His recent Electronic Disturbance Theater 2.0/b.a.n.g. lab project (with Brett Stalbaum, Micha Cardenas, Amy Sara Carroll, and Elle Mehrmand), the Transborder Immigrant Tool (a GPS cell phone safety net tool for crossing the Mexico/US border), was the winner of the “Transnational Communities Award” (2008), an award funded by Cultural Contact, Endowment for Culture Mexico–US and handed out by the US Embassy in Mexico. It was also funded by CALIT2 and the UCSD Center for the Humanities. The Transborder Immigrant Tool has been exhibited at the 2010 California Biennial (OCMA), Toronto Free Gallery, Canada (2011), The Van Abbemuseum, Netherlands (2013), and ZKM, Germany (2013), as well as a number of other national and international venues. The project was also under investigation by the US Congress in 2009-2010 and was reviewed by Glenn Beck in 2010 as a gesture that potentially “dissolved” the U.S. border with its poetry. Dominguez is an associate professor in the Visual Arts Department at the University of California, San Diego, a Hellman Fellow, and a Principal Investigator at CALIT2, UCSD. He is also a co-founder of "particle group" with artists Diane Ludin, Nina Waisman, and Amy Sara Carroll, whose art project about nano-toxicology titled "Particles of Interest: Tales of the Matter Market" has been presented at the House of World Cultures, Berlin (2007), the San Diego Museum of Art (2008), Oi Futuro, Brazil (2008), CAL NanoSystems Institute, UCLA (2009), Medialab-Prado, Madrid (2009), E-Poetry Festival, Barcelona, Spain (2009), Nanosférica, NYU (2010), and SOMA, Mexico City, Mexico (2012): http://hemisphericinstitute.org/hemi/en/particle-group-intro. He has received a Society for the Humanities Fellowship at Cornell University (2017-18).

visarts.ucsd.edu/faculty/ricardo-dominguez

Speaker: Ben Bogart

Time: Monday, February 27, 1pm

Location: Engineering Building, room 2001

I strive to be a generalist; I am an artist who works at the edges of disciplines, integrating and challenging knowledge, most recently in cognitive science. I favor a broad and integrative view of knowledge creation that rejects specialism. In the background of my work is an ongoing inquiry into the relation between the world as conceived and the world as independent of cognition. I think of subjects (imagination) and objects (reality) as mutually constructive; as subjects we project and impose categories on objects, while objects’ physical reality as independent of cognition constrains and challenges those categories. I use computational systems to examine the power struggle between subjects and objects. I build machine subjects that manifest categorization processes (unsupervised clustering algorithms) that suppress variation in order to emphasize sameness. My machine subjects categorize, organize and reduce the infinite complexity of sensory reality. In doing so they participate in a process of abstraction that breaks sensed reality into atomic particles that serve as the material from which novel images are constructed. These "mental" images are of the world—their mechanisms uncover underlying statistical truths about reality as independent of cognition, but they are also of us—they are projections of bounded subjective understanding. In this talk I will introduce the conceptual context for my work and present a survey of selected computational works, including "Dreaming Machines", "Self-Organized Landscapes", "Watching and Dreaming", and "As our gaze peers off into the distance, imagination takes over reality...", as well as current work in development.

Ben Bogart is a Vancouver-based interdisciplinary artist working with generative computational processes (including physical modeling, chaos, feedback systems, evolutionary computation, computer vision and machine learning) and has been inspired by knowledge in the natural sciences (quantum physics and cognitive neuroscience) in the service of an epistemological enquiry. Ben has produced processes, artifacts, texts, images and performances that have been presented at academic conferences and art festivals in Canada, the United States of America, the United Arab Emirates, Australia, Turkey, Finland, Germany, Ireland, Brazil, Hong Kong, Norway and Spain. He has been an artist in residence at the Banff Centre (Canada), the New Forms Festival (Canada) and at Videotage (Hong Kong). His research and practice have been funded by the Social Science and Research Council of Canada and the Canada Council for the Arts. Ben holds both master’s and doctorate degrees from the School of Interactive Arts and Technology at Simon Fraser University. During his master’s study he developed a site-specific artwork that uses images captured live in the context of installation as raw material in its ‘creative’ process. In his doctoral work he made "a machine that dreams" that is framed as both a model of dreaming and a site-specific artistic work manifesting an Integrative Theory of visual mentation developed during his doctorate. Ben is currently using this model in the appropriation and reconstruction of popular cinematic depictions of artificial intelligence.

Speaker: Jeffrey Greenberg

Time: Tuesday, February 28, 10:30am

Location: Elings Hall, room 2611

In the past few years, neural networks have re-emerged as one of the key technologies in AI-related work. Because of their ability to find solutions in complex problem spaces, they are now being applied in almost every field. On one hand, they are used to recognize and transform information in image, sound, speech, and text applications; on the other, they can also generate and modify inputs, creating pictures, music, speech, poems, and software. Though the latter is still weak compared with the efficacy of the former, the step forward is remarkable. In this talk we’ll take a brief technical look at the architecture and theory of neural networks to form an intuitive understanding of how they work and the challenges involved in computing them, paying particular attention to convolutional neural nets. We’ll look at various visualization techniques for understanding how these conv-nets process information, such as direct matrix representations and t-SNE, and see what insights we can gain through generative work like DeepDream and DeepVis. t-SNE is one visualization that can be easily applied to multidimensional data to gain insights with little effort. Others come from NLP, which is a different AI approach than neural networks, and are used when working with language. Two we’ll look at are based on ‘word embeddings’: Word2Vec and network mapping (controversy measures).

Jeffrey Greenberg holds degrees in Bioengineering and Performance Art from UCSD. Currently VP of Engineering at PeerWell.co, where he focuses on improving the outcomes of surgeries and other treatments, he holds patents in ultrasound imaging and voice recognition applications. With a history of product and deep-technology innovation in areas as diverse as medicine and diagnostic imaging, social media, telephony, operating systems, and games, he aims to work on technology for doing good. Combining experience at Bell Labs, work on AI, and edge technology with a focus on art and culture (including NEA, NYSCA, and foundation grants), he has a history of finding areas that matter and advancing the state of the art.

Time: Tuesday, February 28, 2pm.

Location: CITS Seminar Room, 1310 Social Sciences and Media Studies Building.

"Fake News" is just the tip of the iceberg. The News has been outpaced by recent technological innovations. As a product category, it does not attract much investment. For years, revenue at most newspapers has been falling. Increasingly, they are pressured to generate revenue by focusing on ‘engagement’, while their focus on improving their core product offering, truth, has wavered. At best, they focus on innovations in distribution, opening their paywalls to social media, and innovating on reader experiences with interactive content. Though their product is supposed to be a useful tool for truth-seeking and evidence-grounded decision making, it has instead been gamed by anyone who can afford a cable channel or, for less money, create a web page or post a tweet.

In this talk, Greenberg broadly reconsiders the product and processes of the News, from sourcing through distribution, with an eye to making it robust and compelling enough to stand up to the powerful technologies now in play. Starting with the assertion that the news ‘article’ is ‘dead’, he proposes some guides for replacing it with more useful conveyances. He will propose a news ‘substrate’ that provides (more) solid ground, where the underpinnings of fact and assertion are clearer. The aim is to articulate the components of a refactored News, including not only product guidelines but also some of the particular technologies and explorations needed to make this a concrete reality.

Speaker: Lance Putnam

Time: Tuesday, February 21, 6pm.

Location: Elings Hall, 2nd floor

In this talk, Lance presents the use of space curves as a fundamental construct for audiovisual composition. Curves provide an attractive starting point for audiovisual synthesis, as they are relatively easy to translate into sound and graphics. Systems for producing curves for art and design date back to at least the 18th century and have carried through the mechanical, electronic, and digital technological stages. Contemporary uses of space curves will be presented through Lance's audiovisual compositions "S Phase" and "Adrift", the hydrogen atom composition "Probably/Possibly?" done in collaboration with Dr. JoAnn Kuchera-Morin and Dr. Luca Peliti, and the "Mutator VR" virtual reality experience done in collaboration with Dr. William Latham and Professor Stephen Todd at Goldsmiths College. The talk will be followed by immersive performances of the 3D audiovisual works "Adrift" and "Probably/Possibly?" in the UCSB AlloSphere, a three-story virtual environment.
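As a rough illustration of why curves translate so readily into both media, the sketch below samples one space curve at audio rate and uses the same points both as 3D vertices for graphics and, through their x and y coordinates, as a stereo signal (the familiar oscilloscope-music mapping). The trefoil knot, the x/y-to-left/right mapping, and the function names are assumptions chosen for illustration; they are not the harmonic pattern function or any of Lance's pieces.

```python
import math


def trefoil(t):
    """Trefoil knot: a closed space curve with period 2*pi in t."""
    x = math.sin(t) + 2.0 * math.sin(2.0 * t)
    y = math.cos(t) - 2.0 * math.cos(2.0 * t)
    z = -math.sin(3.0 * t)
    return x, y, z


def curve_to_audiovisual(curve, freq, sample_rate, n_samples, extent=3.0):
    """Trace `curve` `freq` times per second. The same points serve as 3D
    vertices for graphics and, via x and y, as a stereo audio signal."""
    vertices, stereo = [], []
    for n in range(n_samples):
        t = 2.0 * math.pi * freq * n / sample_rate
        x, y, z = curve(t)
        vertices.append((x, y, z))
        # `extent` should bound |x| and |y| (3.0 for the trefoil above),
        # which keeps both audio channels inside [-1, 1].
        stereo.append((x / extent, y / extent))
    return vertices, stereo
```

Tracing the trefoil 100 times per second yields a tone whose channels contain partials at 100 Hz and 200 Hz, mirroring the curve's t and 2t terms, while the vertex list draws the knot itself.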

Lance Putnam is a composer with interests in generative art, audiovisual synthesis, digital sound synthesis, and media signal processing. His work explores questions concerning the relationships between sound and graphics, symmetry in art and science, and motion as a spatiotemporal concept. He holds an M.A. in Electronic Music and Sound Design and a Ph.D. in Media Arts and Technology from the Media Arts and Technology program at the University of California, Santa Barbara. His dissertation "The Harmonic Pattern Function: A Mathematical Model Integrating Synthesis of Sound and Graphical Patterns" was selected for the Leonardo journal LABS 2016 top abstracts. His audiovisual work "S Phase" has been shown at numerous locations, including the 2008 International Computer Music Conference in Belfast, Northern Ireland, and the Traiettorie Festival in Parma, Italy. His work "Adrift", an audiovisual composition designed for virtual environments, is on rotation in the UCSB AlloSphere and was performed live at the 2015 Generative Arts Conference in Venice, Italy. From 2008 to 2012, he conducted research in audio/visual synthesis at the AlloSphere Research Facility and TransLab in Santa Barbara, California. From 2012 to 2015, he was an assistant professor at the University of Aalborg in the Department of Architecture, Design and Media Technology, where he also taught multimedia programming in the Art and Technology program. He is currently investigating new approaches to procedural art as a research associate in Computing at Goldsmiths, University of London, under the Digital Creativity Labs. There he is developing the virtual reality experience "Mutator VR", which has been shown at New Scientist Live, London, at East Gallery, Norwich University of Arts, and at Cyfest 17, St. Petersburg, Russia.

Speaker: Vangelis Lympouridis

Time: Monday, February 13, 1pm.

Location: Engineering Science Building, room 2001

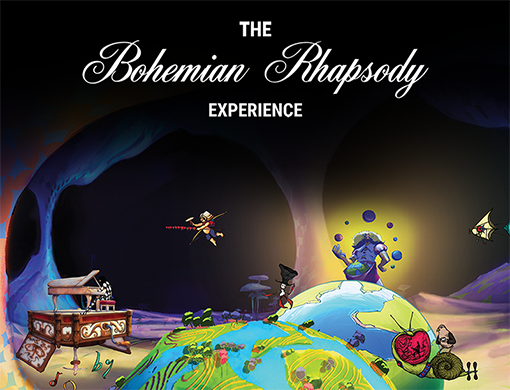

Virtual Reality has generated a lot of interest in recent years, and much progress has already been made in formulating its language in both academia and industry. Vangelis will talk about the emergence of VR as a new medium and its ability to shape the way we experience musical content. The talk will cover a wide range of topics, including best practices for VR, mechanics of engagement, immersive audio production, production design and development pipelines, artistic integrity, and systems design and engineering. As a case study, he’ll discuss his latest production, the Bohemian Rhapsody Experience, an innovative mobile VR application based on Freddie Mercury’s legendary song, developed in collaboration with Google Play and Queen. You can download the Bohemian Rhapsody Experience App in advance at www.enosis.io to form your own opinions, questions, and discussion points. The App is available for VR-compatible Android and iOS smartphones. For those who do not have a Google Cardboard or equivalent 3D mobile VR viewer, the app includes a 360-degree story mode.

Vangelis Lympouridis is the founder of Enosis VR. After a successful career in academia he founded Enosis, a cutting-edge production company that specializes in immersive virtual reality applications. Before launching his company Vangelis oversaw operations and research at the MxR Studio, the main VR Hub at the School of Cinematic Arts at the University of Southern California, where he explored Virtual Reality and Whole Body Immersion with an interdisciplinary team of students. While at USC, he produced a series of innovative VR projects including Project Syria: An Immersive Experience, commissioned by the World Economic Forum and exhibited in Davos, the Sundance Film Festival, the Sheffield Doc Fest and the Victoria and Albert Museum in London; Use of Force, funded by the Tribeca Film Festival and AP Google; and F1, a cinematic immersive experience presented at the Formula 1 Grand Prix in Singapore. Vangelis has a PhD in Whole Body Interaction and an MSc in Sound Design from the University of Edinburgh. He also holds a BFA in Sculpture and Environmental Art from Glasgow School of Art. He lives and works in Los Angeles, California.

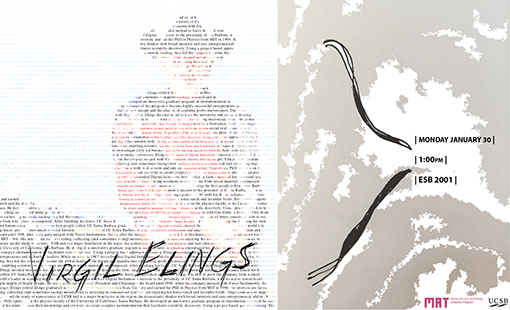

Time: Monday, January 30, 1pm.

Location: Engineering Science Building, room 2001

An iconoclastic thinker with broad interests and rare entrepreneurial ability, Virgil Elings, PhD, spent 20 years on the physics faculty of the University of California, Santa Barbara. He developed an innovative graduate program in instrumentation in which he encouraged his students to harness their knowledge and creativity to create complex instrumentation that facilitates scientific discovery. Using a project-based approach to learning, Elings’ teaching and guidance have led many graduates of his program to become highly successful entrepreneurs and technology leaders. While on leave in 1987, he cofounded Digital Instruments with Gus Gurley, a former student of his from several years earlier.

Using no outside funding, they led the company to become the global leader in the design and manufacture of scanning probe microscopes. These microscopes magnify objects by tens of millions of times, enabling scientists to see the very atoms from which materials are composed. At the end of his leave, UC Santa Barbara asked Elings to sever his ties with Digital Instruments. Elings decided instead to leave the university and continue to develop the firm. Applying his own unique style and business acumen, his uncanny knack for hiring the best people (often UC Santa Barbara graduates), and his philosophy of allowing staff to freely channel their creativity, Elings was able to grow the company from a small start-up to the world’s leader in scanning microscopes. Elings attributes much of Digital Instruments’ success to the proximity of UC Santa Barbara, with its active research environment and ample supply of bright people. He served as Digital Instruments’ President and Chairman of the board until 1998, when the company merged with Veeco Instruments. Shortly after the merger, Elings retired.

Elings graduated in 1961 with his B.S. in Mechanical Engineering from Iowa State University and earned his PhD in Physics from MIT in 1966. His interests are far-ranging, from riding, collecting (and sometimes racing) motorcycles to investing in commercial real estate and enjoying his horse ranch and lavender fields. Elings continues to support teaching, research and the study of nanoscience at UCSB and is a major benefactor in the region.

Date: Thursday, January 26th

Time: 4-7pm, reception at 3:45pm.

There is also a live performance on Thursday, February 2nd at SBCAST from 7-8pm, 513 Garden St, Santa Barbara, CA 93101.

The performance turns into an installation piece and is open for public viewing on Friday, January 27th, 4-7pm.

"How to Explain Pictures to a Live Paro" is an interactive durational performance artwork about gun control policies in the US and the safety and security of students. It is an invitation to a conversation about these issues and a tribute to the students who have lost their lives in shooting incidents at schools. The artist will be performing with Paro, a robotic baby harp seal (a therapeutic robot) that research has shown to be helpful in hospitals, nursing homes, and trauma therapy. Paro has come to help facilitate interaction, communication, and healing through a dialogue that has long been postponed in our community.

You can also check out #DearParo on Facebook at 4:00pm PT on Thursday, January 26th, to see the video live, and virtually interact with the piece and other live audience members online.

Speaker: Howard Massey, audio consultant, journalist and author.

Time: Monday, January 23rd, 1pm.

Location: Engineering Science Building, room 2001

Some of the most important and influential recordings of all time were created in British studios during the 1960s and 1970s: iconic facilities like Abbey Road, Decca, Olympic, Trident, and AIR. This presentation will unravel the origins of the so-called "British Sound", and celebrate the people, equipment, and innovative recording techniques that came out of those hallowed halls.

Howard Massey is a music industry consultant, audio journalist, and author of "Behind The Glass", "Behind The Glass Volume II", and "The Great British Recording Studios".

Speaker: Henry Segerman, Department of Mathematics, Oklahoma State University.

Time: Friday, January 20th, 1pm.

Location: Elings Hall, room 1601

Spherical (or "360 degree") still and video cameras capture light from all directions, producing a sphere of image data. What kinds of post-process transformations make sense for spherical photographs and video? We can rotate the sphere, but is there an analogue of zooming in flat video?

By viewing the sphere of image data as the Riemann sphere, we can use complex numbers to describe the positions of the pixels. By scaling the complex plane, we get something like a zoom effect, with which we can make a spherical version of the Droste effect. By applying other complex functions, we can "unwrap" the sphere, producing other Escher-like impossible images and video.

The code to generate these effects is written in Python, and much of it is available on GitHub.
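The following is an independent, minimal Python sketch of the scaling idea, not the code from the talk. It assumes an equirectangular frame with the zenith on the top row, uses stereographic projection from the north pole to identify the sphere with the extended complex plane, and implements zoom as multiplication by a real factor, so the zoom is centred on the nadir (w = 0); rotating the sphere first would move that centre elsewhere. The helper names are hypothetical.

```python
import math


def sphere_to_complex(p):
    """Stereographic projection of a unit vector from the north pole (0, 0, 1).
    Returns None for the north pole itself, which maps to infinity."""
    x, y, z = p
    if 1.0 - z < 1e-12:
        return None
    return complex(x, y) / (1.0 - z)


def complex_to_sphere(w):
    """Inverse stereographic projection; None (infinity) maps to the north pole."""
    if w is None:
        return (0.0, 0.0, 1.0)
    r2 = abs(w) ** 2
    return (2.0 * w.real / (1.0 + r2), 2.0 * w.imag / (1.0 + r2),
            (r2 - 1.0) / (r2 + 1.0))


def zoom_direction(p, factor):
    """Scale the Riemann sphere by a real `factor` about w = 0 (the nadir)."""
    w = sphere_to_complex(p)
    return complex_to_sphere(None if w is None else w * factor)


def remap_pixel(u, v, width, height, zoom):
    """For output pixel (u, v) of an equirectangular frame, return the source
    pixel to sample so that the image appears zoomed by `zoom`."""
    lon = u / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - v / height * math.pi
    p = (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))
    # Inverse mapping: each output pixel samples the input at w / zoom.
    x, y, z = zoom_direction(p, 1.0 / zoom)
    src_lon = math.atan2(y, x)
    src_lat = math.asin(max(-1.0, min(1.0, z)))  # clamp against rounding error
    src_u = (src_lon + math.pi) / (2.0 * math.pi) * width % width
    src_v = (math.pi / 2.0 - src_lat) / math.pi * height
    return src_u, src_v
```

Both poles stay fixed under the scaling, which is what makes it behave like a zoom toward one point and away from its antipode. Other complex functions, such as those the abstract mentions for "unwrapping" the sphere, would slot into `zoom_direction` in place of the multiplication.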

Henry Segerman is a mathematician, working mostly in three-dimensional geometry and topology, and a mathematical artist, working mostly in 3D printing. He is an assistant professor in the Department of Mathematics at Oklahoma State University, and author of the new book "Visualizing Mathematics with 3D Printing".

Date: Friday, January 13th

Time: 5:15pm

Location: Data Visualization Lab, Elings Hall, room 2611

Abstract:

In recent years, content creation in spatial audio has moved away from multichannel toward object-based approaches, in which, instead of audio signals being specified for each speaker channel, audio is created with positional data. This is then rendered to speakers using one of many spatial sound rendering techniques (VBAP, DBAP, Ambisonics, etc.). However, workflows for creating content in such systems are usually ad hoc and vary vastly between software, algorithms, and controllers. The unit generator (UGen) is an abstraction used in MUSIC-N languages to structure and define sound processing as an interconnected graph. This project presents a novel extension of the UGen graph for object-based spatial audio. By abstracting spatial position as a continuously varying signal transmitted along with the audio, we can allow interconnections between processing blocks that apply transformations to both the sound and its spatial information. This will be shown to open up the possibility of defining and exploring spatial effects and transformations. Finally, this project presents "Lithe", a C++ framework for building object-based audio graphs, along with an example use case of this library: a modular synthesis application called "Lithe Modular".
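To make the idea of position as a signal concrete, here is a small pull-based Python sketch in which every unit generator emits frames that pair an audio sample with an (x, y, z) position, so spatial transforms and audio transforms can be chained in one graph. The class names, the frame layout, and the per-sample pull model are assumptions for illustration only; Lithe itself is a C++ framework, and its actual API is not shown here.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Position = Tuple[float, float, float]


@dataclass(frozen=True)
class Frame:
    """One audio sample travelling together with its spatial position."""
    sample: float
    position: Position


class SineSource:
    """Generator UGen: a sine tone emitted from a fixed position."""
    def __init__(self, freq: float, sample_rate: float, position: Position):
        self.step = 2.0 * math.pi * freq / sample_rate
        self.phase = 0.0
        self.position = position

    def next(self) -> Frame:
        frame = Frame(math.sin(self.phase), self.position)
        self.phase += self.step
        return frame


class Rotate:
    """Spatial UGen: spins its input's position about the vertical (z) axis
    at `rate` radians per second, leaving the audio untouched."""
    def __init__(self, source, rate: float, sample_rate: float):
        self.source = source
        self.step = rate / sample_rate
        self.angle = 0.0

    def next(self) -> Frame:
        frame = self.source.next()
        x, y, z = frame.position
        c, s = math.cos(self.angle), math.sin(self.angle)
        self.angle += self.step
        return Frame(frame.sample, (c * x - s * y, s * x + c * y, z))


class Gain:
    """Audio UGen: scales the sample and passes the position through."""
    def __init__(self, source, amount: float):
        self.source = source
        self.amount = amount

    def next(self) -> Frame:
        frame = self.source.next()
        return Frame(frame.sample * self.amount, frame.position)


def render(ugen, n: int):
    """Pull `n` frames through the graph; a spatial renderer (VBAP, DBAP,
    Ambisonics, ...) would consume these (sample, position) pairs."""
    return [ugen.next() for _ in range(n)]
```

Because `Rotate` only rewrites positions and `Gain` only rewrites samples, the two can be chained in either order with the same result; a renderer downstream would map each frame's position to speaker gains.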