Speaker: Professor Vincent Hayward

Time: Monday, December 3rd, 2018, at 1pm.

Location: Elings Hall, room 1601.

The astonishing variety of phenomena resulting from the contact between fingers and objects may be regarded as a trove of information that can be extracted by organisms to learn about the nature and the properties of what is touched. This richness, which is completely different from that available to the other senses, is likely to have fashioned our somatosensory system at all levels of its organisation, from early mechanics to cognition. The talk will illustrate this idea through examples and show how the physics of mechanical interactions shapes the messages that are sent to the brain, and how the early stages of the somatosensory system en route to the primary areas are organised to process these messages.

Vincent Hayward is presently on leave from Sorbonne Université. Previously, he was with the Department of Electrical and Computer Engineering at McGill University, Montréal, Canada, where he became a full Professor in 2006 and was the Director of the McGill Centre for Intelligent Machines from 2001 to 2004. Vincent Hayward is an elected Fellow of the IEEE. He was an ERC advanced grant holder from 2010 to 2016. Since January 2017, he has been Professor of Tactile Perception and Technology at the School of Advanced Studies of the University of London, supported by a Leverhulme Trust Fellowship.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Dr. Elizabeth Chrastil

Time: Monday, November 26th, 2018, at 1pm.

Location: Elings Hall, room 1601.

Navigation is a central part of daily life. For some, getting around is easy, while others struggle, and certain clinical populations display wandering behaviors and extensive disorientation. Working at the interface between immersive virtual reality and neuroimaging techniques, my research demonstrates how these complementary approaches can inform questions about how we acquire and use spatial knowledge. In this talk, I will discuss both some of my recent work and upcoming experiments that center on three main themes: 1) how we learn new environments, 2) the type of spatial information we learn from environments, and 3) how individuals differ in their spatial abilities. The behavioral and neuroimaging studies presented in this talk inform new frameworks for understanding spatial knowledge, which could lead to novel approaches to answering the next major questions in navigation.

Dr. Elizabeth Chrastil is an Assistant Professor in the Department of Geography at UCSB and is a faculty member in the Interdisciplinary Dynamical Neuroscience Program. Dr. Chrastil is the Associate Director of the Research Center for Virtual Environments and Behavior (RECVEB) at UCSB. Dr. Chrastil received her PhD from Brown University and did her postdoctoral work at Boston University. She also received an MS in biology from Tufts University and a BA from Washington University in St. Louis.


The program will feature works by faculty, staff, and students and a historical work, "Gesang der Jünglinge (Song of the Youths)" (1956), by the celebrated German composer Karlheinz Stockhausen (1928-1979). Featured faculty include Clarence Barlow, Jeremy Haladyna, and Curtis Roads. Featured students include Yissin Chandran, Lena Mathew, Stewart Engart, and alumnus Anthony Paul Garcia. Admission is free.

Speaker: Juan Manuel Escalante

Time: Monday, November 5th, 2018, at 1pm.

Location: Elings Hall, room 1605.

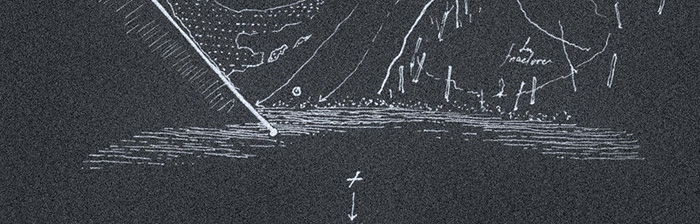

Zvoki Soče (Sounds of Soča) is a one-week project made in the Julian Alps during PIFcamp 2018: a hacker-based open experience where art, technology and knowledge meet.

Zvoki Soče features field recordings, electronic sounds and visual scores. This talk will share these sounds, placing a strong emphasis on the creative context behind them. It will also discuss the visual translation strategies used to parse sonic information into a graphic notation system.

Audience members will have the chance to see the original drawings of this project, and browse the book, which will be presented publicly for the first time.

After seven years of studying architecture and graduating with honours in both college and master programs, Juan Manuel finally decided to dedicate his life to the arts and design. In recent years he has been using code as a creative medium. Through Realität (founded in 1998), his work has been shown in the US, France, the United Kingdom, Spain, Mexico, South Korea, and Slovenia, and featured in various festivals including OFFF (Barcelona and Mexico), Mutek, Binario, Ceremonia, and Currents, among others. He has twice been awarded the “Young Creators” grant by the National Fund for the Arts (2010 and 2013), has won first prize for two consecutive years for “Best multimedia art application of the year” from the AMU (University Scholars Multimedia Association), and is a current National Member of Art Creators (National Endowment for the Arts / MX).

He is a former member of the Master and PhD program in Architecture (National Autonomous University of Mexico), where he taught and directed its Media Lab. He is currently doing research toward his PhD in Media Arts & Technology at the University of California, Santa Barbara (UCSB).


Speaker: Jennifer Jacobs

Time: Monday, October 29th, 2018, at 1pm.

Location: Elings Hall, room 1601.

Integrating computational tools into art, design, and engineering poses substantial challenges. New technologies present artists, designers, and engineers with rapidly changing toolsets and rigid platforms, whereas developers of computational tools struggle to provide appropriate constraints and degrees of freedom to match the needs of diverse practitioners. I study ways to address these challenges by developing systems that bridge manual and automated production and applying programming environments that enable practitioners to develop personalized software tools. In this talk, I present three future avenues of this research: systems for linked editing of multimodal artwork, systems for integrating manual control and automation in digital fabrication, and parametric tools for exploring alternatives in gestural creation. Each of these research avenues has the potential to provide access to powerful computational affordances while preserving opportunities for a variety of approaches to computational production.

Jennifer Jacobs is a Brown Institute Postdoctoral Fellow at Stanford University, working in the Computer Science Department. Her research examines ways to diversify participation and practice in computational creation by building new tools, software, and programming languages that integrate emerging and established forms of production. Jennifer received her PhD from the MIT Media Lab in the Lifelong Kindergarten Research Group. She completed an MS in the Media Lab’s High-Low Tech Group and an MFA in Integrated Media Art at Hunter College. Her work has been presented at international venues including Ars Electronica, SIGGRAPH, and CHI, where she has received multiple best-paper awards.


Speaker: Miller Puckette

Time: Monday, October 15th, 2018, at 1pm.

Location: Elings Hall, room 1601.

In this talk I'll describe three recent results of a musical collaboration between me and the composer Kerry Hagan. Two of these are seriocomical performance art pieces: "Who was that timbre I saw you with?" and the upcoming "Cover fire". These entail using new musical interfaces (sometimes called "NIMEs") to control computer audio processes in ways that are designed to be hard to master. In a very different vein, the performance/installation "Remnant" measures the echoes of sounds made by four performers off each other's bodies and uses the resulting impulse responses as a time-varying, artificial aural space.

Miller Puckette obtained a B.S. in Mathematics from MIT (1980) and a Ph.D. in Mathematics from Harvard (1986), winning an NSF graduate fellowship and the Putnam Prize Scholarship. He was a member of MIT's Media Lab from its inception until 1987, and then a researcher at IRCAM (l'Institut de Recherche et de Coordination Musique/Acoustique), founded by composer and conductor Pierre Boulez. At IRCAM he wrote Max, a widely used computer music software environment, released commercially in 1990 and now available from Cycling74.com.

Puckette joined the music department of the University of California, San Diego in 1994, where he is now professor. From 2000 to 2011 he was Associate Director of UCSD's Center for Research in Computing and the Arts (CRCA; now defunct).

He is currently developing Pure Data ("Pd"), an open-source real-time multimedia arts programming environment. Puckette has collaborated with many artists and musicians, including Philippe Manoury (whose Sonus ex Machina cycle was the first major work to use Max), Rand Steiger, Vibeke Sorensen, Juliana Snapper, and Kerry Hagan. Since 2004 he has performed with the Convolution Brothers. He has received honorary degrees from the University of Mons and Bath Spa University and the 2008 SEAMUS Lifetime Achievement Award.


Speaker: Fabian Offert

Time: Monday, October 8th, 2018, at 1pm.

Location: Elings Hall, room 1601.

The primary goal of machine learning models today is not the mechanization of reason but the mechanization of perception. As a consequence, many of the tasks that machine learning models face are aesthetic tasks, ranging from the classification of images (CNNs) to the generation of completely new images (GANs). At the same time, the technical opacity of machine learning models makes it inherently difficult to properly evaluate their results. In fact, the interpretability of machine learning models has not only become an independent field of research within computer science but has also grown into an increasingly important philosophical challenge. The once speculative phenomenological question "how does the machine perceive the world" has become a real-world problem. In this talk I will try to answer the question "how does the machine perceive the world" by means of both a technical and a philosophical close reading of contemporary machine learning algorithms from the domain of interpretable machine learning. I will present ongoing research on the potential of feature visualizations for Digital Art History, on the notion of representation in feature visualizations, and on the aesthetics of ML-generated imagery.

Fabian Offert is a scholar, curator and stage designer. His work integrates technically informed critical and artistic approaches to the epistemological and aesthetic issues arising from contemporary machine learning algorithms. Currently, he is a doctoral candidate and Regent’s Fellow in the Media Arts and Technology program at the University of California, Santa Barbara, where he also teaches. His academic work has been featured at NIPS, ECCV, SIGGRAPH, Harvard University, EPFL, and other research universities. Before coming to California, Fabian worked at Goethe Institut New York and ZKM | Karlsruhe where he curated and managed several large-scale media art exhibitions. He received his Diploma degree from Justus Liebig University Gießen, where he was a German National Academic Foundation Fellow and student of composer and director Heiner Goebbels. His stage design work, supported by Kunststiftung NRW, the French Ministry of Culture, and others, is frequently shown in museums and theaters all over Europe, most recently at Museum Kunstpalast, Düsseldorf.


Date: Friday, June 15th, 2018.

Time: 1pm

Location: Engineering and Science Building room 2001.

Abstract:

Siren is a software environment for exploring rhythm and time through the lenses of algorithmic composition and live-coding. It leverages the virtually unlimited creative potential of algorithms by treating code snippets as the building blocks for audiovisual playback and synthesis. Employing the textual paradigm of programming as the software primitive allows the execution of patterns that would be either impossible or too laborious to create manually.

The system is designed to operate in a general-purpose manner by allowing multiple compilers to operate at the same time. Currently, it accommodates SuperCollider and TidalCycles as its primary programming languages due to their stable real-time audio generation and event dispatching capabilities.

Harnessing the complexity of the textual representation (i.e. code) might be cognitively challenging in an interactive real-time application. Siren tackles this by adopting a hybrid approach between the textual and visual paradigms. Its front-end interface is equipped with various structural and visual components to organize, control, and monitor the textual building blocks. Its multi-channel tracker acts as a temporal canvas for organizing scenes, on which the code snippets can be parameterized and executed. It is built on a hierarchical structure that eases the control of complex phrases by propagating small modifications to lower levels, so that minimal effort produces dramatic changes in the audiovisual output. It provides multiple tools for monitoring the current audio playback, such as a piano-roll inspired visualization and history components.

Date: Friday, June 15th, 2018.

Time: 11am

Location: MAT conference room, Elings Hall.

Abstract:

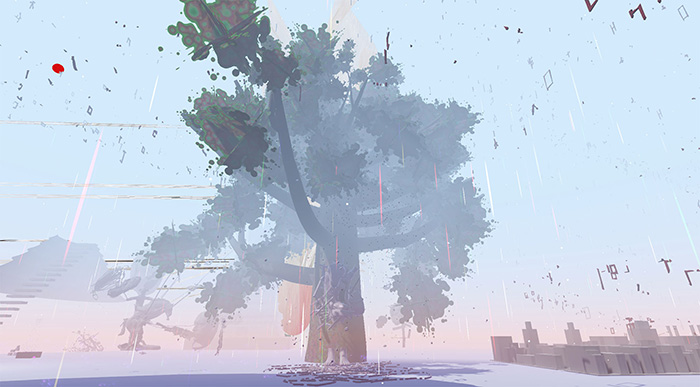

During the past few years, artists have been interpreting and transforming celebrated paintings into virtual reality experiences. This ‘virtualization’ process creates 3D scenes from 2D paintings, animates static graphics, and provides the audience with immersive 360-degree experiences. However, most of the transformed VR experiences are based on a direct translation approach, and thus lack original perspective, spatial narrative, and interaction mechanisms to engage and provoke the audience. Moreover, from a broader perspective, the current visual language of virtual reality is somewhat limited compared to diversified painting languages. The visual style of most VR projects tends to be either realistic or based on simple geometric shapes.

This project, "Reincarnation," takes abstract surrealism as its reference to explore an alternative virtual reality art experience. The project builds upon a series of Yves Tanguy's paintings to create an illusory and surreal experience, to seek and argue the animism in various matters, and to challenge the anthropocentric view of the world.

The project develops along three levels. The first is a study of Tanguy's fascinating creation of non-representational biomorphic objects and the surreal atmosphere his worlds convey. The method employed begins with analyzing the qualities of his worlds by comparing them with traditional paintings and other surrealist artworks. The method also includes creating objects that are intended to "paraphrase" components from his paintings but with a third-dimensional imagination and biotic motion added to them. The second is an examination of how to recreate such experiences inside virtual reality. The process utilizes shape morphing, visual occlusion, multi-world scale, and interactive scene switching to unfold a narrative and to create an irrational virtual environment. The third is my own artistic and expressive investigation of the animism in non-realistic objects and the interdependent worldview. There are objects, created freely from my mind, that likewise have a biotic motion and mingle with the scene. Also, an interactive role-switch mechanism is developed to engage the audience from different perspectives.

The making process of this project references surrealist artistic techniques, such as collage and the chain game, to generate more imagery drawn from the unconscious. While "Reincarnation" is a juxtaposition of different elements from my understanding of Tanguy's paintings and my own unconscious, the methodology of this project could be further applied to future VR works in the area of surrealism.

Date: Monday, June 11th, 2018

Time: 12:00 noon

Location: Elings Hall, room 1605

Abstract:

In the last two decades we have witnessed the rise of multiple open-source electronic platforms based on embedded systems. By the nature of their design, they have mainly targeted physical computing and graphics applications. Sound recording, reproduction, synthesis, and processing have usually been made available through extensions. One of the key factors in the success of these platforms is the simplifications made to programming their computers. What we have not yet seen is a full-featured, audio-centric, multi-channel platform capable of high-resolution and low-latency sound synthesis and processing. We attribute this to the lack of a high-level language targeting such a platform.

This dissertation presents Stride, a new language designed for real-time sound synthesis, processing, and interaction design. Through hardware resource abstraction and separation of semantics from implementation, Stride targets a wide range of computation devices such as micro-controllers, system-on-chips, general purpose computers, and heterogeneous systems. With a novel and unique approach to handling sampling rates as well as clocking and computation domains, Stride prompts the generation of highly optimized target code. The design of the language facilitates incremental learning of its features and is characterized by intuitiveness, usability, and self-documentation.

Date: Tuesday, May 29th, 2018

Time: 10:40am

Location: Elings Hall, room 1605

Abstract:

The state-of-the-art multi-display setups powered by distributed GPU architectures (DGA) open significant potential for visual creation and scientific visualization by providing an ultra-high resolution computational display surface. However, as a DGA consists of many distributed computers, complex network operations are required to utilize all the screens as a unified display area. This in turn creates an accessibility barrier, since the users of such a system have to deal with multiple networked computers, an array of synchronization and rendering problems and closed and obtuse system integration solutions. These factors seriously block a whole set of creators from using DGA displays as a productive visual instrument.

Chameleon is a universal toolkit for dynamic visual computation on multi-display setups powered by distributed computers. The toolkit is designed to include only the most necessary tools for DGA computation. With it, one can match existing content created for a single computer and display it on a multi-display system with DGA. Moreover, the toolkit provides a seamless user experience of cross-computer operations by providing a graphical user interface (GUI), where the user can group any or all screens as one rendering surface and attach desired content to it. Users can also directly adjust the content with built-in control signals on the fly. After finishing the configuration of all screens, the user can save the current composition as a preset. The user is then able to switch back and forth between different saved presets. Additionally, the user can create a timeline of presets to enable the system to automatically loop through them.

Chameleon is the first step of development aimed at enabling the use of DGA systems by an expanded user audience.
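As a rough illustration of the preset and timeline idea in the abstract above, the following Python sketch models a preset as a named list of rendering surfaces and a timeline as a loop over presets. It is not Chameleon's actual data model: the names `Surface`, `Preset`, `play_timeline`, and the screen and content identifiers are all hypothetical.

```python
from dataclasses import dataclass, field
from itertools import cycle, islice


@dataclass(frozen=True)
class Surface:
    """A group of screens treated as one rendering surface (hypothetical model)."""
    screens: tuple   # hypothetical screen identifiers, e.g. ("node1:0", "node2:0")
    content: str     # hypothetical identifier of the content attached to the group


@dataclass
class Preset:
    """A saved composition: a name plus the surfaces configured by the user."""
    name: str
    surfaces: list = field(default_factory=list)


def play_timeline(presets, steps):
    """Return the preset names shown when looping through `presets` for `steps` steps."""
    return [p.name for p in islice(cycle(presets), steps)]


# Two presets: one wall-sized surface, and a split layout with two surfaces.
wall = Preset("wall", [Surface(("node1:0", "node2:0"), "simulation")])
split = Preset("split", [Surface(("node1:0",), "video"),
                         Surface(("node2:0",), "plot")])
order = play_timeline([wall, split], steps=5)
```

Keeping a preset as plain data makes switching between presets, or looping through a timeline of them, a matter of selecting which saved configuration is currently active.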

There will also be a free public talk with the artist, brunch, and museum open house on Sunday, May 27, between 10am and 12:30pm in the CCBER Classroom, Harder South Building. The brunch is free and open to the public.

www.eventbrite.com/e/the-radical-listener-sensing-landscapes-sounding-biospheres-tickets-45087973342

Inside a blockchain factory

Professor Roads' lecture will focus on the publishing model as opposed to the streaming and blockchain model of music distribution. Evangelists for streaming and blockchain models are obsessed with concocting new schemes that can make potentially everyone a star by eliminating the middleman, that is, publishers, curators, producers, and institutions in general.

Ebbing Sounds is a three-day interdisciplinary symposium and concert series investigating new ways of music production, listening, and critique. As music access today is dominated by streaming formats mediated by industry distributors and algorithm curation, Ebbing Sounds explores how streaming shapes our interaction with sound and music. A group of international artists, musicians, theorists, and sound streaming industry mediators will gather to discuss the formats and materialities of new emerging music and sound in the context of an algorithm-driven industry-audience relationship.

Ebbing Sounds is organized by zweikommasieben in cooperation with Marcella Faustini, DeForrest Brown Jr. and swissnex San Francisco, and is kindly supported by Pro Helvetia Schweizer Kulturstiftung, Consulate General of Canada, FONDATION SUISA, Goethe-Institut San Francisco and the Cultural Services of the French Embassy in the United States.

Date: Wednesday, May 23rd, 2018

Time: 9am

Location: Harold Frank Hall, room 1132

Abstract:

Structure from motion (SfM) is a powerful tool for producing 3D reconstructions from a large collection of photos. A crucial step of structure from motion is to construct a matching graph from the photo collection. In a matching graph, images are nodes and edges represent matching image pairs that share visual content. Despite much recent progress, constructing a good matching graph is still a bottleneck for most SfM algorithms, since most images do not match.

This project presents GraphMatch, an approximate yet efficient method for building the matching graph for large-scale SfM pipelines. First, we show that a score computed from the Fisher vectors of any two images gives a better prior for image matching than the conventional vocabulary tree prior. Next, by using the graph distance between vertices in the underlying matching graph as a second prior, we show that the matching graph can be greatly densified. Finally, inspired by the PatchMatch algorithm, GraphMatch combines these two priors into an iterative “sample-and-propagate” scheme and finds the most matching image pairs compared with competing approximate methods while also being the most efficient.
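The abstract does not give implementation details, so the following Python sketch only illustrates the general shape of a sample-and-propagate loop on a toy problem. It is not the GraphMatch implementation. The function `build_matching_graph` and its parameters are hypothetical, `prior` stands in for any pairwise score (such as one derived from Fisher vectors), and `verify` stands in for the expensive geometric check that decides whether two images really match.

```python
def build_matching_graph(n, prior, verify, sample_k=2, rounds=3):
    """Toy sample-and-propagate loop (illustrative only).

    n      -- number of images (nodes 0..n-1)
    prior  -- prior(i, j) -> float, higher means "more likely to match"
    verify -- verify(i, j) -> bool, stand-in for the expensive match test
    """
    edges = {i: set() for i in range(n)}   # adjacency of the matching graph
    tested = set()                         # unordered pairs already verified

    def try_pair(i, j):
        pair = (min(i, j), max(i, j))
        if i == j or pair in tested:
            return
        tested.add(pair)
        if verify(i, j):
            edges[i].add(j)
            edges[j].add(i)

    for _ in range(rounds):
        # Sample: for each image, test its best-ranked untested candidates.
        for i in range(n):
            candidates = [j for j in range(n)
                          if j != i and (min(i, j), max(i, j)) not in tested]
            candidates.sort(key=lambda j: prior(i, j), reverse=True)
            for j in candidates[:sample_k]:
                try_pair(i, j)
        # Propagate: a neighbour of a match (graph distance 2) is a likely match.
        for i in range(n):
            for j in list(edges[i]):
                for k in list(edges[j]):
                    try_pair(i, k)
    return edges, len(tested)


# Toy data: image i "matches" image j when their indices differ by at most 2.
toy_prior = lambda i, j: -abs(i - j)
toy_verify = lambda i, j: abs(i - j) <= 2
graph, num_tested = build_matching_graph(8, toy_prior, toy_verify)
```

The point of the sketch is that only pairs suggested by the prior or by existing edges are ever verified, so the number of expensive checks can stay below the number of all possible pairs.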

Speaker: Chuck Grieb

Time: Monday, May 21st, 2018, at 1pm.

Location: Elings Hall, room 1601.

Be careful what you say to your children. Watching Ray Harryhausen’s Jason and the Argonauts with his father, four-year-old Chuck Grieb asked how the skeletons "came alive". His father’s answer, "They got skinny actors", led the boy to a quest for the truth and a lifelong love for the fantastic.

Having earned his MFA in Film Production from USC, Chuck landed in the animation industry, where he has worked as a storyboard artist, animation director, animator, character designer, development artist, etc. for studios including Disney and Nickelodeon. Also a teacher, Chuck coordinates the animation program and teaches animation at Cal State University, Fullerton.

Chuck’s work in animation has encompassed the use of traditional, 2D Digital, and 3D Digital technology. Chuck’s award-winning animated short films (Roland’s Trouble, Exact Change Only, and Oliver’s Treasure) have screened in over 90 festivals all around the world.

Chuck continues to create art and animation, pursuing his love for storytelling and the magic he felt when first seeing Ray Harryhausen’s skeletons come alive. A member of the Society of Illustrators Los Angeles and SCBWI, Chuck has been exploring illustrative art, his work having been featured in esteemed publications including Spectrum: The Best in Contemporary Fantastic Art, Imagine FX, Infected By Art, and Exposé.

Chuck is represented by the Jill Corcoran Literary Agency.


Date: Thursday, May 17, 2018

Time: 3pm

Location: Engineering Science Building, room 2001

Abstract:

Human beings are deeply expressive through our voice and body language. To a vocalist, the body is both a musical instrument and an instrument for expressing emotions and intentionality. The singer can position the body and manipulate the vocal system in a way that improves tone quality and/or conveys nonverbal communication to the audience. Extending this concept of the singer’s body as an instrument to the digital world, there has been sustained interest in developing digital musical instruments (DMIs) for real-time control of vocal processing. Yet no follow-up scientific evaluation of such DMIs and their gesture-sound mapping strategies has been conducted. General design principles in this field are also absent. These little-studied art-science questions are crucial to systematize the fundamental theory in contemporary vocal performance and to make better art tools to further expand the human repertoire. This dissertation situates the analysis of designing and evaluating mediation technologies as artistic tools that can be used to promote the core values of underrepresented cultural heritage.

From a scientific perspective, I conducted quantitative and qualitative research and proposed the first scientific evaluation framework for DMI design in vocal processing, specifically the mappings from (bodily) action to (sound) perception, from the audience’s perspective. Empirical data provide evidence of valid design and identify the audience's degree of musical engagement. From the engineering perspective, I defined DMI design principles based on my DMI design experience and the criteria of efficiency, subjectivity, affordance, culture constraints, social meaning-making, and technology. These investigations of body-mind connections through mediation technologies provided the fundamentals of "Embodied Sonic Meditation," an artistic practice rooted in the richness of Tibetan contemplative culture, embodied cognition, and "deep listening." It invites new ways of using sensorimotor coupling through auditory feedback to create novel artistic expressions and to deepen our engagement in the world that we are living in. Three multimedia pieces (Mandala, Tibetan Singing Prayer Wheel, and Resonance of the Heart) were realized as proof-of-concept case studies. This series of work demonstrates that through the practice of "Embodied Sonic Meditation" and her own work, a media artist can not only promote traditional underrepresented culture but also project these cultural values and identities into the cutting-edge art and technology context of contemporary society.

Speaker: Lisa Snyder

Time: Monday, May 7th, 2018, at 1pm.

Location: Elings Hall, room 1601.

Sure, it’s cool, but is it scholarship? Media hype for 3D and VR worlds is impossible to avoid, and digital technologies are increasingly pushing the boundaries of academic knowledge production with methods that are decidedly challenging to the old-school standards of our more traditional disciplines. In this presentation, I will briefly describe the architectural reconstruction process using my real-time simulation model of the World’s Columbian Exposition of 1893 as a case study, and then explore the roadblocks to transitioning this type of scholarship and technology into an accepted vehicle for research, pedagogy, and publication.

Lisa is OIT’s Director of Campus Research Initiatives, Acting Director of the Research Technology Group (RTG), and Manager of the GIS, Visualization, and Modeling Group within the RTG. Through these roles, Lisa is responsible for providing institutional leadership and support to coordinate and build campus capacity for innovative research and pedagogy through institutional coordination, alignment, and development of IT system requirements related to research computing and research data management. She also works to facilitate broad-based, campus-wide collaboration and coordination with all academic departments, administrative and academic support units, campus computing facilities, and media/communications offices to align research and research computing with institutional direction.

Lisa has a Master of Architectural History from the University of Virginia and a Ph.D. in Architecture from UCLA. Her primary research is on pedagogical applications for interactive computer models of historic urban environments. She developed the reconstruction model of the Herodian Temple Mount installed in 2001 at the Davidson Center in Jerusalem, and expanded the project in 2007 with a reconstruction of the early Islamic structures on the site during the eighth century. Lisa has also worked extensively on a reconstruction model of the 1893 World’s Columbian Exposition that is shown intermittently at Chicago’s Museum of Science and Industry.

Currently, with funding from the National Endowment for the Humanities, Lisa is leading a team to develop VSim, a software interface that provides users with the ability to craft narratives in three-dimensional space as well as the ability to embed annotations and links to primary and secondary resources and web content from within the modeled environments (HD-50958-10 and HK-50164-14). In 2017, Lisa and Elaine Sullivan (UCSC) published "Digital Karnak: An Experiment in Publication and Peer Review of Interactive, Three-Dimensional Content," in the Journal of the Society of Architectural Historians, which used a 3-D reconstruction of the ancient Egyptian temple complex as the basis for a publication prototype that uses VSim to embed academic argument and annotations within the 3-D reconstruction.

Snyder was also co-PI on “Advanced Challenges in Theory and Practice in 3D Modeling of Cultural Heritage Sites,” an NEH Summer Institute that was hosted at UMass Amherst in June 2015 with a follow-up symposium at UCLA in June 2016 (HT-50091-14). She is an Associate Editor of Digital Studies / Le Champ Numérique and was a senior member of the Urban Simulation Team at UCLA from 1996 to 2013.

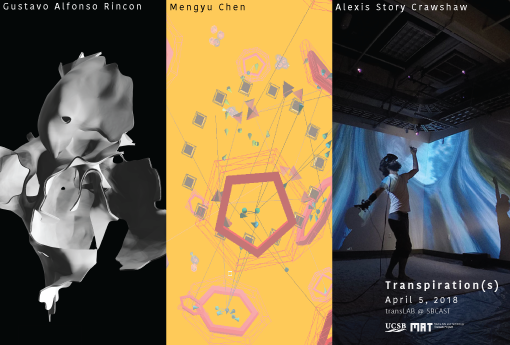

Artists: Mengyu Chen, Alexis Story Crawshaw, Cindy Kang, Enrica Lovaglio, Anshul Pendse, Gustavo Alfonso Rincon, Sahar Sajadeih, Ehsan Sayyad, Tim Wood, Jing Yan, Zhenyu Yang, Yin Yu, and Weidi Zhang.

Future: Invisible: Tripping: Machine proposes an open, tolerant, combinatorial, and creative stance toward encounters with the unknown. To begin with, two notions are brought together: future tripping and invisible machine. From their collision, two new notions are generated: future invisible and tripping machine.

The first, Future Invisible, alludes to an ancient saying by the sophist Isocrates (to whom much of the model of paideia is credited): "Μηδενὶ συμφορὰν ὀνειδίσῃς· κοινὴ γὰρ ἡ τύχη καὶ τὸ μέλλον ἀόρατον." (in English: “Taunt no one’s misfortune; for to all fortune is common and the future invisible.”)

The second, Tripping Machine, alludes to Paul Klee’s painting Twittering Machine, and to the impinging of the artificial on nature. But, whereas tripping might be construed as negative, the impulse here is to choose to see that-which-trips as providing an opportunity to solve, not forget, the problems that arise when worlds collide.

Twittering Machine, in turn, directs attention to Paul Klee’s "Pedagogical Sketchbook," which contains a wealth of ideas for how to make anything, including worlds, by balancing and reconciling forces inherent in the field of their encounter.

These two notions, one ancient, one modern, collide/collude with worldmaking and VR and transvergence and all the ideas we are exploring in the present and are anticipating for our future.

Other combinations are possible. All are welcome. Writing "future: invisible: tripping: machine:" explodes all the components, making all available for recombination, pointing to the openness to these other combinatorial possibilities, and to any that arise as the work/world progresses.

Notably, "tripping" now leans into "twittering" — a notion of travel is maintained, but the fear of falling/failing is replaced by hopeful birdsong.

Embodiment follows: these words are not idle, they are enacted. They become instructions to a form of worldmaking that takes its cues from the rainforest or the coral reef or the living city. A group of transvergent worldmakers build in parallel, with no initial plan other than the establishment of a sustainable ecosystem within which diversity can thrive. Intentions collide and are resolved as opportunities. Step by step, one teetering/twittering trip at a time, beauty emerges from ever-unfolding balance.

Ms. Walker will perform with Dr. Richard Boulanger of the Berklee College of Music. Also featured will be audiovisual works by Corwin Professor Clarence Barlow. The concert will open with a performance by the CREATE Ensemble, a laptop chamber ensemble directed by Karl Yerkes.

Elaine Walker received her degree in Music Synthesis Production from Berklee College of Music in 1991, and worked as a lab monitor in the electronic music labs until 1999. Upon graduation she founded the all-electronic band ZIA, using homemade alternate MIDI controllers and microtonal scales. She received her Master's degree in Music Technology from New York University in 2001. While at NYU, Elaine managed the Music Technology lab monitor crew. Her thesis was on using chaos mathematics to compose and perform music. She built a hardware MIDI device to play "chaos melodies", allowing the performer control over the mathematics, tempo and dynamics. For several years that followed, she was one of two music editors for the cartoon Pokemon with 4Kids Entertainment, and later GoGoRiki. Elaine has performed with, mixed and produced the band Number Sine. She has also done freelance music editing, mixing and keyboards for various Phoenix bands including Vitruvian and Alcoholiday. She has continued to perform with ZIA, build new MIDI instruments and research microtonality. In the summers, Elaine performs her duties as Education and Public Outreach Coordinator for the Haughton-Mars Project with NASA scientists in the Canadian High Arctic.

Speakers: JODI

Time: Monday, April 30th, 2018, at 1pm.

Location: Elings Hall, room 1601.

JODI is a collective of two artists, JOan and DIrk, who have been creating original artworks for the World Wide Web since the mid-1990s.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

This month will feature work by Sihwa Park, Timothy Wood and Yin Yu. Rides from campus to SBCAST (and back) will be provided; stay tuned for more details.

Speaker: Ingmar H. Riedel-Kruse

Time: Monday, April 16th, 2018, at 3pm.

Location: Elings Hall, room 1601.

We are currently witnessing a revolution in life-science technology and discovery. I will share my vision that microbiological systems should be as constructible, interactive, programmable, accessible, and useful as our personal electronic devices. Biological processes transcend electronic computation in many ways, e.g., they synthesize chemicals, generate active physical forms, and self-replicate, thereby promising entirely new applications to foster the human condition.

My lab’s research focuses on dynamic multicell assemblies, and we take a combined bottom-up / top-down approach:

(1) We utilize synthetic biology and biophysics approaches to facilitate the engineering and understanding of multicell assemblies. I will demonstrate an orthogonal library of genetically encoded heterophilic cell-cell adhesion pairs that enables self-assembled patterns of bacteria at the 5 μm scale, as well as the optogenetic control of homophilic cell-cell adhesion that enables the programming of biofilm patterns at the 25 μm scale (‘Biofilm Lithography’).

(2) We pioneered ‘Interactive Biotechnology’ that enables humans to directly interact with living multicell assemblies in real-time. I will provide the rationale for this interactivity, conceptualize biotic processing units (BPUs), and demonstrate multiple platforms based on phototactic Euglena cells, e.g., tangible museum exhibits, scalable biology cloud experimentation labs, biotic video games, and swarm programming languages.

Applications of our work aim to advance microbiology research, smart-materials science, STEM education, the scientific method, and the arts.

Ingmar H. Riedel-Kruse is an Assistant Professor of Bioengineering at Stanford University. His research seeks to make it easier to engineer and program multicellular biological systems, circuits, and devices in order to foster the human condition. It is his vision that micro-biological systems are as constructible, interactive, programmable, accessible, and useful as current electronic devices. He runs an interdisciplinary lab integrating diverse areas like synthetic biology, biophysics, human-computer interaction design, embedded cyber-physical systems, modeling, education, and games. He received his Diploma in theoretical solid-state physics at the Technical University Dresden, did his PhD in experimental biophysics at the Max Planck Institute of Molecular Cell Biology and Genetics, followed by a postdoc at the California Institute of Technology.

Speaker: Allison Okamura

Time: Monday, April 16th, 2018, at 1pm.

Location: Elings Hall, room 1601.

Haptic (touch) feedback can play myriad roles in enhancing human performance and safety in skilled tasks. In teleoperated surgical robotics, force feedback improves the ability of a human operator to effectively manipulate and explore patient tissues that are remote in distance and scale. In virtual and augmented reality, wearable and touchable devices use combinations of kinesthetic (force) and cutaneous (tactile) feedback to make rich, immersive haptic feedback both more compelling and practical. In this talk, I will present a collection of novel haptic devices, control algorithms, and user performance studies that demonstrate a wide range of effective design approaches and promising real-world applications for haptic feedback.

Allison M. Okamura received the BS degree from the University of California at Berkeley in 1994, and the MS and PhD degrees from Stanford University in 1996 and 2000, respectively, all in mechanical engineering. She is currently Professor in the mechanical engineering department at Stanford University, with a courtesy appointment in computer science. She was previously Professor and Vice Chair of mechanical engineering at Johns Hopkins University. She has been an associate editor of the IEEE Transactions on Haptics, editor-in-chief of the IEEE International Conference on Robotics and Automation Conference Editorial Board, an editor of the International Journal of Robotics Research, and co-chair of the IEEE Haptics Symposium. Her awards include the 2016 Duca Family University Fellow in Undergraduate Education, 2009 IEEE Technical Committee on Haptics Early Career Award, 2005 IEEE Robotics and Automation Society Early Academic Career Award, and 2004 NSF CAREER Award. She is an IEEE Fellow. Her academic interests include haptics, teleoperation, virtual environments and simulators, medical robotics, neuromechanics and rehabilitation, prosthetics, and engineering education. Outside academia, she enjoys spending time with her husband and two children, running, and playing ice hockey.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Jennifer Jacobs

Time: Friday, April 13th, 2018, at 1pm.

Location: Engineering Sciences Building, room 2003.

Computation offers enormous creative potential; however, human creative production is complex and multifaceted, and people are creative in vastly different ways. Different creative practices are elusive to define, difficult to measure, and challenging to embody in technological systems. In this talk, I describe my efforts to support professional creative practitioners who work in domains that are traditionally separate from programming by building computational systems that align with their existing skills and practices. I present efforts to support computational creation in three interconnected areas: systems for bridging procedural and manual practice, meta-tools for creative production, and creative collaboration as research inquiry. Collectively, these efforts demonstrate how integrating creative practice and systems research can produce approachable, expressive computational systems that foster confidence and agency among new groups of practitioners, improve understanding of different creative communities, and inform the development of diverse computational systems.

Jennifer Jacobs is a Brown Institute Postdoctoral Fellow at Stanford University, working in the Computer Science Department. Her research examines ways to diversify participation and practice in computational creation by building new tools, software, and programming languages that integrate emerging and established forms of production. Jennifer received her PhD from the MIT Media Lab in the Lifelong Kindergarten Research Group. She completed an MS in the Media Lab’s High-Low Tech Group and an MFA in Integrated Media Art at Hunter College. Her work has been presented at international venues including Ars Electronica, SIGGRAPH, and CHI, where she has received multiple best-paper awards.

Speaker: Huaishu Peng

Time: Wednesday, April 11th, 2018, at 12pm.

Location: Elings Hall, room 1601.

3D printing technology has been widely applied to produce well-designed objects. There is a hope to make both the modeling process and printing outputs more interactive, so that designers can get in-situ tangible feedback to fabricate objects with rich functionalities. To date, however, knowledge accumulated to realize this hope remains limited. In this talk, I will present two lines of research. The first line of work aims at facilitating an interactive process of fabrication. I demonstrate novel interactive fabrication systems that allow the designer to create 3D models in AR while a robotic arm prints the model in real time and on-site. The second line of work concerns the fabrication of 3D printed objects that are interactive. I report new techniques for 3D printing with novel materials such as fabric sheets, and for printing one-off functional objects such as sensors and motors. I will conclude the talk by outlining future research directions built upon my current work.

Huaishu Peng is a Ph.D. candidate in the Information Science department at Cornell University. His multi-disciplinary research interests range from human-computer interaction design and robotic fabrication to material innovation. He builds software systems and machine prototypes that make the design and fabrication of 3D models interactive. He also looks into new techniques that can fabricate 3D interactive objects. His work has been published at CHI, UIST and SIGGRAPH and has received a Best Paper nomination. His work has also been featured in media such as Wired, MIT Technology Review, TechCrunch, and Gizmodo. In addition, he is serving on the UIST organizing committees as the poster co-chair and on the CHI program committees as an associate chair.

Speaker: Cindy Hsin-Liu Kao

Time: Monday, April 9th, 2018 at 3pm.

Location: Elings Hall, room 1601.

Sensor device miniaturization and breakthroughs in novel materials are allowing for the placement of technology increasingly close to our physical bodies. However, unlike all other locations for technological deployment, the human body is not simply another surface for enhancement—it is the substance of life, one that encompasses the complexity of individual and social identity. Yet, technologies for placement on the body have often been developed separately from these considerations, with a dominant emphasis on engineering breakthroughs. My research involves opportunities for cultural interventions in the development of technologies that move beyond clothing and textiles, and that are purposefully designed to be placed directly on the skin.

In my research, body craft is defined as existing cultural, historical, and fashion-driven practices and rituals associated with body decoration, ornamentation, and modification. As its name implies, hybrid body craft (HBC) is an attempt to hybridize technology with body craft materials, form factors, and application rituals, with the intention of integrating new technological functions having no prior relationships with the human body with existing cultural practices. With this grounding, HBC can support the generation of future techno customs in which technology is integrated into culturally meaningful body adornments.

Example artifacts of the HBC framework and engineering approach include DuoSkin, an on-skin interface fabrication process grounded in metallic temporary tattoo practices, and NailO, a fingernail mounted track-pad that doubles as a nail art sticker. Hybrid in design, these artifacts concomitantly serve as technological devices and body art. While they encompass the functionality of on-body technologies, they can be applied, worn, and experienced as body crafts. By incorporating cultural considerations into the design of on-body technologies, I explore opportunities for extending their lifetimes and purposes beyond mere novelty and into the realms of cultural customs and traditions. The technology of today is soon obsolete, yet cultural customs are passed on, and carried on, throughout generations.

Cindy Hsin-Liu Kao is a Taiwanese-born engineer and artist, currently a PhD candidate at the MIT Media Lab. She is advised by Chris Schmandt in the Living Mobile group. Her research practice, themed Hybrid Body Craft, blends aesthetic and cultural perspectives into the design of on-body interfaces. She also creates novel processes for crafting technology close to the body. Her research has been presented at various conferences and in magazines (ACM CHI, UbiComp/ISWC, TEI, UIST, ACM Interactions), and has received media coverage from CNN, TIME and Forbes. Her work has been exhibited and shown internationally at The Boston Museum of Fine Art, Ars Electronica, Dutch Design Week, and New York Fashion Week. She has worked at Microsoft Research developing cosmetics-inspired wearables, and is a recipient of the Google Anita Borg Scholarship. Among her awards are an honorable mention/best paper award at ACM CHI and UIST, the A'Design Award, the Fast Company Innovation by Design Award Finalist, an Ars Electronica STARTS Prize Nomination, and the SXSW Interactive Innovation Award.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Laurel Beckman

Time: Monday, April 9th, 2018 at 1pm.

Location: Elings Hall, room 1601.

Visual and conceptual richness, pleasure and open curiosity, layered meaning that enjoys absurdity, nuance, odd leaps, perverse symbology. Two examples in lieu of an abstract generalization (though Abstraction is great, necessary and underappreciated IRL!): 1. Derived from the empathy-eliciting body gestures of classic clowns, typographic-like characters (mutant offspring of international logotypes and street writing) present as gesturing fools and speculative vowels to encourage messy behavior in the face of scary times that may otherwise prompt reserve (‘New Fools’, pre-Trump). 2. Resembling the aliens at Stonehenge, timeless yet specific Stonewall revelers share the dance floor with the human-size tools of their rebellion. All action takes place in a/your navel (‘It’s Stonewall in My Navel’).

Nurturing eccentric positions and spaces, privileging uncertainty and humor over conventional narrative approaches; I’m particularly interested in themes concerning affect, including those at the crossroads of consciousness + social conditions, meta-physics + science, perceptual phenomena + stage/screen space. My video and public projects often enlist commercial, neglected, and civic spaces in efforts to contribute meaningfully to the cultural landscape. My projects have screened in festivals, public spaces, museums and galleries throughout the world in over 30 countries including the USA, Peru, Canada, Italy, Palestine, France, Australia, India, Brazil, Switzerland, China, Iran, Spain, and the Netherlands. I'm a professor of Art, emphasis in Experimental Video/Animation and Public Practices, at the University of California Santa Barbara, USA.

A recent lengthy interview, at Women CineMakers, Special Edition, vol 6, can be found here: issuu.com/women.cinemakers/docs/viedition/26.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

SBCAST is located at 513 Garden Street in downtown Santa Barbara.

Speaker: JoAnn Kuchera-Morin

Time: Monday, March 12th, 2018 at 1pm.

Location: Elings Hall, room 1601.

The AlloSphere, a 30-foot diameter sphere built inside a 3-story near-to-anechoic cube, was invented by composer and digital media pioneer Dr. JoAnn Kuchera-Morin to facilitate research that intersects arts and science through the immersive, interactive representation and transformation of complex systems. Scientifically, the AlloSphere is an instrument for gaining insight and developing bodily intuition about environments into which the body cannot venture: abstract higher-dimensional information spaces, ideally suited for exploring big and complex data sets. Artistically, it is an instrument for the creation and performance of avant-garde new works and the development of new modes and genres of expression and forms of immersion-based entertainment. The AlloSphere is one of the largest immersive scientific instruments in the world, containing unique features such as true 3D, 360-degree projection of visual and aural data, and sensing and camera tracking for interactivity. The instrument is built at its scale and size to facilitate scaling to any computational platform, including mobile devices.

Dr. JoAnn Kuchera-Morin is a composer, Professor of Media Arts and Technology and Music, and a researcher in multi-modal media systems content and facilities design. Her years of experience in digital media research led to the creation of a multi-million dollar sponsored research program for the University of California, the Digital Media Innovation Program, where she was chief scientist from 1998 to 2003. Through her research as Chief Scientist of UC, Professor Kuchera-Morin built and developed the Digital Media Center and AlloSphere Instrument and the Graduate Program in Media Arts and Technology within the California NanoSystems Institute, a research and creative practice community based on the intersection of science, art, engineering and mathematics. The AlloSphere instrument and software infrastructure design is based on the process of music composition and performance. Professor Kuchera-Morin serves as the Director of the AlloSphere Research Facility and Professor of Media Arts and Technology and Music. She earned a Ph.D. in composition from the Eastman School of Music, University of Rochester.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

Speaker: Andrew Culp

Time: Monday, March 5th, 2018 at 1pm.

Location: Elings Hall, room 1601.

In this talk, media theorist Andrew Culp compares three different types of diagrams: mechanical, chemical, and cybernetic. Beginning with the mechanical, he considers how the introduction of machines, beginning with the steam engine, embodies what Siegfried Giedion calls in Mechanization Takes Command "the movement of movement". He also examines chemical models, especially the molecular bio-pharmacological approach to experimentation introduced by Paul Preciado’s study of testosterone and other hormones. Finally, he looks to network diagrams as what Alexander R. Galloway has named "protocological" power, now found in everything from war manuals to business organization texts.

The talk is ultimately about the power of diagrams. Rather than treating them as mere metaphor, he explores how each interdisciplinary form has been actualized across art, science, and politics.

Andrew Culp (PhD Ohio State, 2013) teaches Media History and Theory in the MA Program in Aesthetics and Politics and the School of Critical Studies at CalArts. His published work on media, film, politics, and philosophy has appeared in Radical Philosophy, parallax, angelaki, and boundary 2 online. He also serves on the Governing Board of the Cultural Studies Association. His interest in media stems from the after-lives of technologies born out of the anti-globalization movement of the 1990s. In his first book, Dark Deleuze (University of Minnesota Press, 2016), he proposes a revolutionary new image of Gilles Deleuze’s thought suited to our 24/7 always-on media environment, and it has been translated into numerous languages including Spanish, Japanese, and German.

Current work includes a monograph on technologies of anonymous resistance titled Persona Obscura (under contract with University of Minnesota Press), articles on the influence of the digital/networks on radical politics, and explorations of contemporary theories of pessimism.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

The works highlight novel methods for communicating information, and the possibility for creating insight through non-traditional data representation.

First Thursdays at SBCAST features audio-visual performances and installations by the MAT community in downtown Santa Barbara, on the first Thursday of every month.

SBCAST is located at 513 Garden Street in downtown Santa Barbara.

Time: Thursday, March 1st, 2018, at 5pm.

Location: Studio Xenakis, Music Building room 2215.

Thea Farhadian is a performer/composer based in the San Francisco Bay Area and Berlin. Her projects include solo violin and interactive electronics, acoustic improvisation, solo laptop, radio art, and video. Her solo pieces for violin and electronics combine a classical music background with extended technique and digital processing using the program Max/MSP. In 2016 she released her solo CD, Tectonic Shifts, which integrates the violin with interactive electronics.

Thea's work has been seen internationally at venues including the Issue Project Room and the Alternative Museum in New York City; Galerie Mario Mazzoli, Sowieso, and Quiet Cue in Berlin; the Room Series, the Center for New Music, and Meridian Gallery in San Francisco; the Center for Experimental Art and the Aram Kachaturyan Museum in Yerevan, Armenia; and the International Women's Electroacoustic Listening Room Project at Bimhaus in Amsterdam.

Thea has held residencies at Steim in the Netherlands, at Bait Makan in Jordan, and at the Montalvo Arts Center in Saratoga, California. She has given several university presentations and participated in panel discussions at Columbia University in New York and the City University of London. Thea is a former member of the Berkeley Symphony Orchestra, where she played under Kent Nagano for ten years. She has an MA in Interdisciplinary Arts from San Francisco State and an MFA in Electronic Music from Mills College, and studied Arabic classical music with Simon Shaheen.

In 2009, she was a lecturer in the Art Department at the University of California, Santa Cruz.

For more information, visit: www.theafarhadian.com.

Speaker: Agnieszka Roginska

Time: Monday, February 26th, 2018, at 1pm.

Location: Elings Hall, room 1601.

Dr. Roginska will talk about the latest developments in immersive audio and emerging trends in the field of spatial audio. Her talk will touch on ongoing projects at NYU in the field of immersive sound including research in capturing, reproducing, and simulating immersive experiences for Virtual, Augmented, and Mixed Reality.

Dr. Agnieszka Roginska is Music Associate Professor and Associate Director of the Music Technology program at the Steinhardt School, at New York University. She received a Bachelor’s degree in music from McGill University in Montreal, Canada, with a double major in Piano Performance and Computer Applications in Music. After receiving an M.M. in Music Technology from New York University, she pursued doctoral studies at Northwestern University where she obtained a Ph.D. in 2004. At NYU, Dr. Roginska conducts research in the simulation and applications of immersive and 3D audio, including the capture, analysis and synthesis of auditory environments, and auditory displays and their applications in augmented acoustic sensing. She is the co-editor of the book titled "Immersive Sound: The Art and Science of Binaural and Multi-Channel Audio". She is an AES Fellow.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

The changing paradigm of performance practice is creating new virtuosity. This work documents performance multidimensionality, identifying processes of musical expression in the field of new music that use acoustic and electronic means, including the exploration of computer-mediated interaction and the use of Augmented Instruments. New mediums are currently seen as extensions of instrumental practice and available for creative purposes during the compositional and performative processes.

International D’Addario Woodwinds and Selmer Paris artist and Silverstein Pro Artist Henrique Portovedo has found his place in contemporary music by working with composers such as R. Barrett, P. Ferreira Lopes, P. Ablinger, P. Niblock, and M. Edwards, among others. Portovedo has more than 40 works for saxophone dedicated to him. He has been a soloist with several orchestras and ensembles, including L’Orchestre d’Harmonie de la Garde Républicaine, Trinity College of Music Wind Orchestra, Orquestra de Sopros da Universidade de Aveiro, and Sond'Art Electric Ensemble, among others. Co-founder of QuadQuartet and Artistic Director of Aveiro SaxFest, he has recorded for several labels, including Naxos and Universal. He was artist in residence at ZKM, Karlsruhe, and visiting researcher at Edinburgh University, and is now a visiting researcher at UC Santa Barbara supported by the Fulbright Foundation. Henrique is a member of the European Saxophone Comité and Tenor Sax Collective, President of the Portuguese Saxophone Association, and was the artistic director of the European Saxophone Congress 2017. Henrique has given concerts and masterclasses at prestigious festivals and conservatoires such as Real Conservatorio Superior de Música de Madrid, Conservatoire Royal de Bruxelles, Mallorca SaxFest, Conservatori Superior de Música de les Illes Balears, Trinity Laban Conservatoire of Music & Dance, Hochschule für Musik Karlsruhe, and more.

Join organ phenomenon Cameron Carpenter for a moderated conversation and Q&A session. The event is sponsored by UCSB Arts and Lectures and Media Arts and Technology.

A live concert performance will be held on Monday, February 12 at 7pm at the Granada Theater, in downtown Santa Barbara.

For more information, visit: artsandlectures.ucsb.edu/Details.aspx?PerfNum=3723.

Speaker: Ken Fields

Time: Monday, February 12th, 2018, at 1pm

Location: Elings Hall, room 1601

The Global Loop Orchestra is an experiment in networked musical ritual. We focus on a simple method for approaching a network music performance in order to achieve a larger scale event with more actors involved. We use the software Artsmesh to connect multiple cities and Ableton Live to play several sound loops at each node. We test the network ping delay between each of the various participating locations in order to extract the inherent ratios of beats per minute (BPM) on each edge of our mesh, a many-to-many structure of connectivity. We aim for a chronometrically interlocked musical topology that is inspired by the ancient Greek analog computer, the Antikythera Mechanism, which 2000 years ago modeled the complex mechanics of the solar system. Each performance is an iteration of the same process, but using different loops, with the hope that each performance will achieve a refinement toward our goal of a planetary scale, sonically synchronous loop machine. Syneme has been playing network music for over a decade. The Global Loop Orchestra is part of a project initiated by Syneme (Network Music Lab at the Central Conservatory of Music, Beijing) and the CERNET2 (China Educational Research Network). The image above is a schematic of the gears inside the Antikythera Mechanism and reflects the same type of interlocked annular and phasic relationships we wish to constitute with each instance of the orchestra; the teeth per rotation (TPR) of the mechanical gears above are replaced by the beats per minute (BPM) of our sound files.
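The abstract does not spell out how a tempo is derived from a measured delay, so the following is only a minimal sketch of one plausible mapping. It assumes the delay on an edge should span a power-of-two multiple or fraction of a beat, and it folds the result into a playable range. The function name `bpm_for_delay`, the 60 to 120 BPM range, and the example delays are all hypothetical.

```python
def bpm_for_delay(delay_ms, low=60.0, high=120.0):
    """Return a tempo in [low, high) whose beat length is the delay
    multiplied or divided by a power of two (assumes high == 2 * low)."""
    bpm = 60000.0 / delay_ms  # tempo at which one beat lasts exactly delay_ms
    while bpm >= high:        # too fast: halve the tempo (double the beat length)
        bpm /= 2.0
    while bpm < low:          # too slow: double the tempo (halve the beat length)
        bpm *= 2.0
    return bpm


# Hypothetical measured delays (ms) on two edges of the mesh.
edge_delays = {("node A", "node B"): 200.0, ("node A", "node C"): 160.0}
edge_bpm = {edge: bpm_for_delay(d) for edge, d in edge_delays.items()}

# Ratio between the two edge tempi, analogous to a gear ratio.
ratio = edge_bpm[("node A", "node B")] / edge_bpm[("node A", "node C")]
```

With these made-up delays, the two edges get tempi of 75 and 93.75 BPM, a 4:5 ratio, which is the kind of fixed relationship between loops that the gear analogy describes.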

Kenneth Fields is currently a Professor at the Central Conservatory of Music in Beijing, China. Previously, Ken held the position of Canada Research Chair in Telemedia Arts, investigating all aspects of live musical performance over high-speed networks. Since 2008, Ken has been developing Artsmesh, a digital presence workstation (DPW) for network music performance. Ken received his Ph.D. in Media Arts from UCSB in 2000. He is on the editorial board of the Journal of Organised Sound.

For more information about the MAT Seminar Series, go to: seminar.mat.ucsb.edu.

The conference celebrates the achievements of today's generation of women media artists using technology in their work, and will include a mixture of paper and poster presentations, demos/workshops, installations and concerts.

Speaker: Pradeep Sen

Time: Monday, February 5th, 2018, 1pm

Location: Elings Hall, room 1601

Monte Carlo (MC) path-tracing is now the most common rendering algorithm used in industries ranging from architectural visualization to feature film production. MC rendering systems produce photorealistic images by simulating the physical flow of light through paths in the scene. However, if too few light paths are computed, the images are filled with objectionable noise, which made MC rendering unfeasible for over two decades. Today, MC denoising algorithms are the most popular tool for removing this noise, and they have been used in films such as Disney’s “Big Hero 6” and featured in products such as Pixar’s RenderMan and NVIDIA's Iray. However, it was only a few years ago that these post-process, screen-space denoising approaches were considered unsuitable for tackling even small amounts of MC noise, because it was thought they would either leave noise artifacts or overblur scene detail.
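To make the link between path count and noise concrete, here is a minimal sketch that treats one pixel as the average of random samples of a toy integrand. It is not a renderer: the integrand x² stands in for per-path radiance, and the names `mc_pixel_estimate` and `estimate_spread` are illustrative assumptions.

```python
import random
import statistics


def mc_pixel_estimate(num_paths, rng):
    """Average num_paths random samples of a toy integrand on [0, 1]."""
    # Toy stand-in for per-path radiance: f(x) = x^2, whose true mean is 1/3.
    return sum(rng.random() ** 2 for _ in range(num_paths)) / num_paths


def estimate_spread(num_paths, trials=2000, seed=0):
    """Standard deviation of the pixel estimate over independent trials."""
    rng = random.Random(seed)
    estimates = [mc_pixel_estimate(num_paths, rng) for _ in range(trials)]
    return statistics.pstdev(estimates)


spread_4 = estimate_spread(4)    # few paths per pixel: noisy estimate
spread_64 = estimate_spread(64)  # 16x more paths: roughly 4x less noise
```

The spread shrinks only with the square root of the number of paths, so halving the noise costs about four times the rendering work. That slow convergence is why a post-process denoiser is attractive.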

In this talk, we will present a first-hand account of the MC denoising revolution that has unfolded over the past decade and the key innovations that made it possible. We begin in the summer of 2008, when we observed the industrial “best-practices” for dealing with MC noise at Sony Pictures Imageworks, one of the first studios to adopt a path-tracer as the primary renderer. The limitations of the available approaches used in production motivated us to start exploring the possibility of robust MC denoising algorithms.

The first key idea we developed was that we could output several features computed at render time (sample positions, surface normals, texture values) to make the denoiser more robust and effectively turn the rendering system into a black box. Since these features often contained MC noise, we realized we had to carefully adjust the manner in which these features were used from pixel to pixel in order to remove the noise but preserve the scene detail. The resulting system was the first to demonstrate that high-quality, post-process general MC denoising was indeed possible.
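As an illustration of feature-guided filtering in general, and not of the specific system described in the talk, the sketch below applies a cross-bilateral filter to a one-dimensional scanline. It assumes a single noise-free feature per pixel (for example one component of the surface normal) and a fixed bandwidth `sigma_f`, whereas the abstract stresses that real features are noisy and their influence must be adjusted per pixel. All names and values are hypothetical.

```python
import math


def cross_bilateral_1d(color, feature, radius=2, sigma_f=0.1):
    """Filter a noisy scanline, weighting neighbors by feature similarity."""
    out = []
    for i in range(len(color)):
        total, weight_sum = 0.0, 0.0
        lo, hi = max(0, i - radius), min(len(color), i + radius + 1)
        for j in range(lo, hi):
            # A neighbor whose feature differs from pixel i gets a tiny
            # weight, so averaging does not blur across a geometric edge.
            diff = feature[j] - feature[i]
            w = math.exp(-(diff * diff) / (2.0 * sigma_f * sigma_f))
            total += w * color[j]
            weight_sum += w
        out.append(total / weight_sum)  # weight_sum >= 1 since j == i has w == 1
    return out


# Noisy colors around 1.0 on the left surface and 0.0 on the right surface.
noisy = [0.9, 1.1, 1.0, 0.8, 1.2, 0.1, -0.1, 0.0, 0.2, -0.2]
normal = [0.0] * 5 + [1.0] * 5  # the feature changes exactly at the edge
smooth = cross_bilateral_1d(noisy, normal)
```

Pixels on each side of the edge are averaged only with neighbors on the same surface, so the noise is reduced while the step between the two surfaces stays sharp.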

In subsequent work, we observed that the problem could be modeled as a supervised learning problem that would train a system to reproduce a denoised output from noisy inputs. Since training a full denoiser given a limited number of scenes was difficult, we trained an end-to-end system that would output the parameters of a filter that would produce a result comparable to the ground truth. Later, we extended this idea to compute the final color directly, allowing the denoiser to work robustly in production environments. Our new system, developed in collaboration with Disney and Pixar, was trained using millions of examples from the Pixar film “Finding Dory” and then applied as a proof-of-concept to denoise the renderings for the upcoming Pixar films Cars 3 and Coco, even though they had completely different artistic styles and color palettes. Although MC denoising has been credited as being one of two key “enabling technologies” that brought path-tracing to feature film production, the journey is far from over. We conclude the talk by discussing future directions for MC denoising, and describe how it fits among the pantheon of tools available for variance reduction.

Pradeep Sen is an Associate Professor in the UCSB MIRAGE Lab in the Department of Electrical and Computer Engineering at the University of California, Santa Barbara. He attended Purdue University from 1992 to 1996, where he graduated with a B.S. in Computer and Electrical Engineering. He then attended Stanford University, where he received his M.S. in Electrical Engineering in 1998 in the area of electron-beam lithography. In 2000, he joined the Stanford Graphics Lab, where he did research on real-time rendering and computational photography. He received his Ph.D. in Electrical Engineering in June 2006, advised by Dr. Pat Hanrahan. His research interests include algorithms for image synthesis, computational image processing, and computational photography, and he is a co-author of over 30 technical publications, including ten SIGGRAPH/SIGGRAPH Asia/ToG publications. Dr. Sen has been awarded more than $2.2 million in research funding, including an NSF CAREER award in 2009.

Speaker: David Rosenboom

Time: Monday, January 29, 2018, 1pm

Location: Elings Hall, room 1601

This talk will draw from selected examples of my work over several decades that explore how propositional models for musical worlds have energized my composer-performer practice, which often collapses distinctions among formal percepts and embraces a dynamic dimensionality in musical structures that may be fundamentally emergent and/or co-creative. Selected examples that emphasize interaction strategies in music—including some inspired by socio-cultural-neuro-political emergence—are explored along with their implications for designs and definitions of instruments.

David Rosenboom (b. 1947) is a composer, performer, interdisciplinary artist, author and educator known as a pioneer in American experimental music. During his long career, he has explored ideas about the spontaneous evolution of musical forms, languages for improvisation, new techniques in scoring for ensembles, multi-disciplinary composition and performance, cross-cultural collaborations, performance art and literature, interactive multi-media and new instrument technologies, generative algorithmic systems, art-science research and philosophy, and extended musical interface with the human nervous system. He holds the Richard Seaver Distinguished Chair in Music at California Institute of the Arts, where he has been Dean of The Herb Alpert School of Music since 1990 and serves as a board member of the Center for New Performance. Recent highlights have included a fifty-year retrospective of his music presented in a series of performances at the new Whitney Museum of American Art in New York (2015), a six-month exhibition of his work with brainwave music at Centre Pompidou-Metz in France (2015-2016), a four-month exhibition of his work in computer music at Whitechapel Gallery in London (2015-2016), a retrospective of his music for piano(s) at Tokyo Opera City Recital Hall (2016), the premiere of his Nothingness is Unstable, a work for electronics, acoustic sources and 3-dimensional sound diffusion at ISSUE Project Room in Brooklyn (2017), and numerous publications, recordings, festival performances and keynote speeches at international conferences. Following his retrospective at the Whitney Museum, he was lauded in The New York Times as “an avatar of experimental music.” Rosenboom is a Yamaha Artist.

Speaker: Andres Burbano

Time: Monday, January 22, 2018, 1pm

Location: Elings Hall, room 1601

In this presentation, we will discuss the potential that fieldwork offers to many aspects of creative research practice in the media arts. Fieldwork opens up exciting and challenging experiences, from designing data-gathering processes to implementing collaboration strategies. Interaction with professionals with expertise in the field, such as anthropologists, archaeologists, and biologists, has the potential to illuminate and transform our practice because it contributes to the re-elaboration of the limits of what happens inside the laboratory. Examples of practical projects in collaboration with professors George Legrady, Angus Forbes, Jonathan Pagliaro and Gabriel Zea will be presented. In addition, considering transmission and information display as a crucial component of sharing knowledge and artistic experiences, some projects on exhibition design will be presented, from the James Bay Cultural Atlas to the Macondo Pavillion, and from Visualization History Expo to the preparations for the Siggraph 2018 Art Gallery in Vancouver.

Andres Burbano is currently Associate Professor in the Department of Design at Universidad de los Andes in Bogota, Colombia. Burbano holds a Ph.D. in Media Arts and Technology from the University of California Santa Barbara, where he wrote a dissertation about media technology history in Latin America. He was ISEA2017 Academic Chair and will be Siggraph 2018 Art Gallery Chair. Burbano was a keynote speaker at Potential Spaces at ZKM 2017 and is a visiting lecturer at the Krems University in Austria. "Burbano, originally from Colombia, explores the interactions of science, art and technology in various capacities: as a researcher, as an individual artist and in collaborations with other artists and designers. Burbano's work ranges from documentary video (in both science and art), sound and telecommunication art to the exploration of algorithmic cinematic narratives. The broad spectrum of his work illustrates the importance - indeed, the prevalence - of interdisciplinary collaborative work in the field of digital art."
