Background >> Technique

Wave Field Synthesis and Related Software

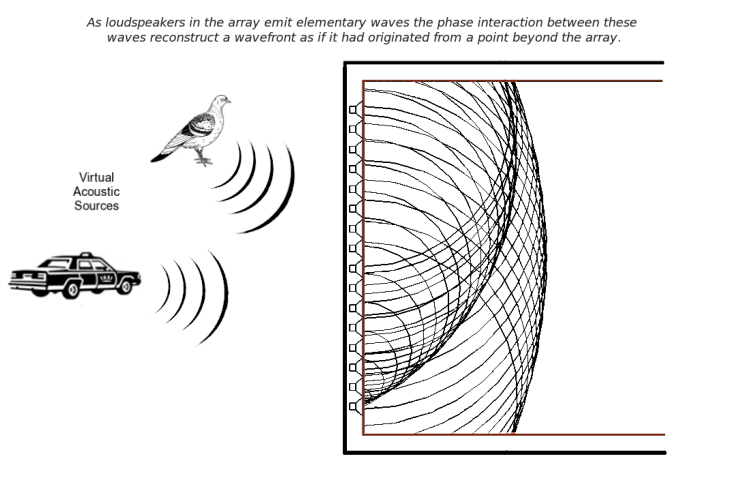

Wave Field Synthesis relies on multiple point sources to reconstruct wave fronts that appear to originate from virtual point sources either within or outside of the listening space. The key advantage WFS arrays have over traditional surround sound speaker setups (e.g. 5.1) is that the listener's position in the listening space will not detract from the aural experience, i.e. there is no "sweet-spot" associated with traditional systems.

The pre-composed elements of Welcome will require the use of an authoring tool. Sound scenes can be developed using the ListenSpace interface along with a host such as ProTools. These scenes are then encoded using the MPEG-4 format which keeps individual signals separate and stores meta-data about each signal's physical location and the effects of the acoustic environment. This scene can then be decoded by a system specific to the installation space generating a separate signal for each point in the WFS array.

IRCAM Wave Field Synthesis Overview (pdf)

Recording

With multiple signals being recorded in concert inside Welcome, an automated system will be used to separate, analyze, process, and reintroduce these signals in some form into the environment. The first stage of this system separates individual signals from each other using the FastICA (Fast Independent Component Analysis) algorithm. For this, multiple recording inputs are required (specifically, the number of inputs should equal, if not exceed, the number of signal sources).

Speech Analysis

These isolated signals will then be separately scanned by a continuous, speaker-independent speech recognition system for recognizable keywords. This system is able to extract words from naturally spoken speech where the beginnings and ends of contiguous words are often slurred together. Furthermore, the system will recognize speech without previous enrollment of the speaker, i.e. specific information about each individual voice the system "hears" is not required.

Survey of the State of the Art in Human Language Technology: Spoken Language Input